Takeaways from using MR (MapReduce) to compute the product of multiple matrices

First, here are the points involved:

1) The value has type IntArrayWritable, and there are two ways to extract its integer array values.

a. The first is to call value's toArray() method, which returns an Object obValue. Array.get(obValue, index) then returns the element at position index (as an Object), and Integer.parseInt(Array.get(obValue, index).toString()) converts it to an int.

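b. The second is to call value's get() method, which returns the array, but typed as Writable[]. You cannot write IntWritable[] intArr=(IntWritable[])value.get(): the conversion from Writable[] to IntWritable[] does not work (the cast compiles, but fails at run time with a ClassCastException). The correct way is to cast each element: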

```java
for (Writable val : value.get()) {
    IntWritable intVal = (IntWritable) val; // cast the element, not the array; intVal.get() yields the int
}
```
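Both access patterns can be tried without a cluster. The following is a plain-Java sketch under one assumption: Number[] and Integer stand in for Writable[] and IntWritable, so no Hadoop classes are needed, and ArrayAccessDemo is a hypothetical name. It shows why casting the whole array fails (Java checks an array cast against the array's runtime type, not against the types of its elements) while casting each element succeeds. In Hadoop itself, the per-element read of method a can be written as ((IntWritable) Array.get(obValue, i)).get(), which avoids the round trip through toString() and parseInt(). Going by the Hadoop source as I understand it, ArrayWritable.readFields allocates a plain Writable[], whereas toArray() builds its result with Array.newInstance(valueClass, ...); if that holds for your version, (IntWritable[]) value.toArray() should succeed even though (IntWritable[]) value.get() does not.

```java
import java.lang.reflect.Array;
import java.util.Arrays;

// Plain-Java sketch: Number[] stands in for Writable[], Integer for IntWritable (assumption, no Hadoop needed).
final class ArrayAccessDemo {

    // Method a: treat the array as an opaque Object and read elements reflectively.
    static int[] viaReflection(Object array) {
        int n = Array.getLength(array);
        int[] out = new int[n];
        for (int i = 0; i < n; i++) {
            out[i] = ((Integer) Array.get(array, i)).intValue(); // cast the element, no toString()/parseInt()
        }
        return out;
    }

    // Method b: cast each element, never the array itself.
    static int[] viaElementCast(Number[] values) {
        int[] out = new int[values.length];
        for (int i = 0; i < values.length; i++) {
            Integer v = (Integer) values[i]; // succeeds: the element really is an Integer
            out[i] = v;
        }
        return out;
    }

    // Mirrors (IntWritable[]) value.get(): the cast is checked against the array's runtime type.
    static boolean arrayCastFails(Number[] values) {
        try {
            @SuppressWarnings("unused")
            Integer[] ints = (Integer[]) values;
            return false; // reached only if the runtime type really is Integer[]
        } catch (ClassCastException e) {
            return true;  // runtime type is Number[], even though every element is an Integer
        }
    }

    public static void main(String[] args) {
        Number[] values = {3, 1, 4}; // runtime type Number[], elements are Integers
        System.out.println(Arrays.toString(viaReflection(values)));  // [3, 1, 4]
        System.out.println(Arrays.toString(viaElementCast(values))); // [3, 1, 4]
        System.out.println(arrayCastFails(values));                  // true
        System.out.println(arrayCastFails(new Integer[] {3, 1, 4})); // false
    }
}
```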

2) Use the InputSplit to get the file name of the current split. This is needed because the problem to solve is:

$$X = \left(B + u\,(s + b + d + f)\right)F$$
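Because matrix multiplication distributes over addition, the product splits into one term per input matrix, and row $r$ of $X$ (written $X_{r,:}$) depends only on row $r$ of each left-hand matrix. This is what lets each map() call handle a single row and the reducer add up the partial rows that share a row index:

$$
\begin{aligned}
X &= BF + u\,sF + u\,bF + u\,dF + u\,fF,\\
X_{r,:} &= B_{r,:}F + u\left(s_{r,:}F + b_{r,:}F + d_{r,:}F + f_{r,:}F\right).
\end{aligned}
$$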

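Before matrices s, b, d and f are multiplied by F they must be scaled by u, whereas B is multiplied by F as-is. The mapper therefore checks the file name to decide whether to scale a row by u before multiplying it by F. The file name is obtained as follows: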

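```java
// FileSplit is org.apache.hadoop.mapreduce.lib.input.FileSplit (new MapReduce API)
InputSplit inputSplit = context.getInputSplit();
String fileName = ((FileSplit) inputSplit).getPath().getName(); // last path component, e.g. the file name
```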

To get the name of the file's parent directory instead, use the following code (http://blog.csdn.net/shallowgrave/article/details/7757914):

```java
String dirName = ((FileSplit) inputSplit).getPath().getParent().getName();
```
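The file-name check can be kept apart from the Hadoop plumbing so that it is easy to test. Below is a sketch that assumes java.nio.file.Path as a local stand-in for org.apache.hadoop.fs.Path (its getFileName() plays the role of Hadoop's getName()). The constants U = 2 and B_FILE = "10IntArray" are taken from the full listing; SplitNameDemo and its methods are hypothetical names.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

// Sketch only: java.nio.file.Path stands in for org.apache.hadoop.fs.Path.
final class SplitNameDemo {
    static final int U = 2;                   // scaling factor, hard-coded as 2 in the listing
    static final String B_FILE = "10IntArray"; // file holding matrix B in the listing

    // Counterpart of ((FileSplit) inputSplit).getPath().getName()
    static String fileName(String splitPath) {
        Path name = Paths.get(splitPath).getFileName();
        return name == null ? "" : name.toString();
    }

    // Counterpart of ((FileSplit) inputSplit).getPath().getParent().getName()
    static String dirName(String splitPath) {
        Path parent = Paths.get(splitPath).getParent();
        if (parent == null || parent.getFileName() == null) {
            return ""; // no parent, or the parent is the root directory
        }
        return parent.getFileName().toString();
    }

    // Rows of B are used as-is; rows from every other file (s, b, d, f) are scaled by u.
    static int scaleFor(String splitPath) {
        return B_FILE.equals(fileName(splitPath)) ? 1 : U;
    }
}
```

Inside a real mapper you would keep calling getName() on the Hadoop Path; the helper only mirrors the decision so it can be checked in isolation.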

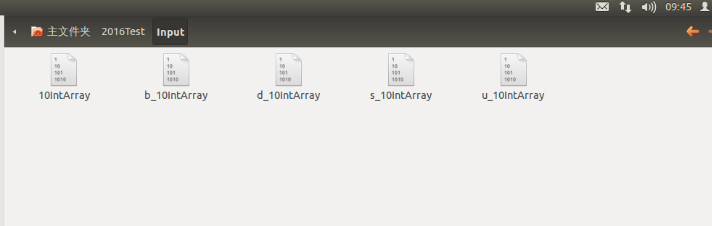

The matrix files are shown below:

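The code in map() that performs the matrix multiplication is as follows:

```java
public void map(IntWritable key, IntArrayWritable value, Context context) throws IOException, InterruptedException {
    obValue = value.toArray();
    InputSplit inputSplit = context.getInputSplit();
    String fileName = ((FileSplit) inputSplit).getPath().getName();
    if (!(fileName.startsWith("10"))) {
        // rows of s, b, d, f: multiply by F and scale by u (u = 2 is hard-coded)
        for (int i = 0; i < rightMatrix[0].length; ++i) {
            sum = 0;
            for (int j = 0; j < rightMatrix.length; ++j) {
                sum += Integer.parseInt(Array.get(obValue, j).toString()) * 2 * rightMatrix[j][i];
            }
            arraySum[i] = new IntWritable(sum);
        }
        map_value.set(arraySum);
    } else {
        // rows of B (file name starting with "10"): multiply by F without scaling
        for (int i = 0; i < rightMatrix[0].length; ++i) {
            sum = 0;
            for (int j = 0; j < rightMatrix.length; ++j) {
                sum += Integer.parseInt(Array.get(obValue, j).toString()) * rightMatrix[j][i];
            }
            arraySum[i] = new IntWritable(sum);
        }
        map_value.set(arraySum);
    }
    // emit the partial result row under the input row's key
    context.write(key, map_value);
}
```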
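To check that scaling the map output and summing by row key really reproduces X = (B + u(s + b + d + f))F, the job can be simulated in memory. This is a sketch, not Hadoop code: matrices are plain int[][] values, the row index plays the role of the map output key (assuming, as the reducer's summing implies, that the SequenceFile keys are row numbers shared across all input files), and the class and method names are hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// In-memory sketch of the MR job (not Hadoop code); the row index plays the role of the map output key.
final class MatrixJobSimulation {

    // "map": one row of a left matrix -> row * scale * F, like the loops in MyMapper
    static int[] mapRow(int[] row, int[][] matF, int scale) {
        int[] out = new int[matF[0].length];
        for (int i = 0; i < matF[0].length; i++) {
            int sum = 0;
            for (int j = 0; j < matF.length; j++) {
                sum += row[j] * scale * matF[j][i];
            }
            out[i] = sum;
        }
        return out;
    }

    // Map every row of one matrix and add it into the per-key accumulator ("shuffle" + "reduce").
    private static void mapAndReduce(Map<Integer, int[]> sums, int[][] matrix, int[][] matF, int scale) {
        for (int r = 0; r < matrix.length; r++) {
            int[] partial = mapRow(matrix[r], matF, scale);
            int[] acc = sums.computeIfAbsent(r, k -> new int[partial.length]);
            for (int i = 0; i < acc.length; i++) {
                acc[i] += partial[i];
            }
        }
    }

    // B is multiplied as-is; every matrix in `others` (s, b, d, f) is scaled by u.
    static int[][] run(int[][] matB, List<int[][]> others, int u, int[][] matF) {
        Map<Integer, int[]> sums = new TreeMap<>(); // sorted by row key, like the reducer input
        mapAndReduce(sums, matB, matF, 1);
        for (int[][] m : others) {
            mapAndReduce(sums, m, matF, u);
        }
        return sums.values().toArray(new int[0][]);
    }

    // Reference: form B + u(s + b + d + f) first, then multiply by F.
    static int[][] direct(int[][] matB, List<int[][]> others, int u, int[][] matF) {
        int[][] x = new int[matB.length][];
        for (int r = 0; r < matB.length; r++) {
            int[] left = new int[matF.length];
            for (int j = 0; j < matF.length; j++) {
                int s = 0;
                for (int[][] m : others) {
                    s += m[r][j];
                }
                left[j] = matB[r][j] + u * s;
            }
            x[r] = mapRow(left, matF, 1);
        }
        return x;
    }

    public static void main(String[] args) {
        int[][] matB = {{1, 2}, {3, 4}};
        List<int[][]> others = Arrays.asList(new int[][] {{1, 0}, {0, 1}}, new int[][] {{2, 2}, {2, 2}});
        int[][] matF = {{1, 1, 1}, {0, 1, 2}};
        System.out.println(Arrays.deepToString(run(matB, others, 2, matF)));    // [[7, 13, 19], [7, 17, 27]]
        System.out.println(Arrays.deepToString(direct(matB, others, 2, matF))); // [[7, 13, 19], [7, 17, 27]]
    }
}
```

The TreeMap stands in for the shuffle's sort by key; both paths agree because of the distributive law, which is the property the MR job relies on.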

3) The third point is the use of StringBuilder in the definition of IntArrayWritable's toString() method.

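```java
public String toString() {
    StringBuilder sb = new StringBuilder();
    for (Writable val : get()) {
        IntWritable intWritable = (IntWritable) val; // the second method from 1)
        sb.append(intWritable.get());                // append the integer's decimal digits
        sb.append(",");                              // then append a ',' separator
    }
    if (sb.length() > 0) {                           // an empty array would make deleteCharAt(-1) throw
        sb.deleteCharAt(sb.length() - 1);            // delete the last character, i.e. the trailing ','
    }
    return sb.toString();                            // convert sb to a String and return it
}
```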
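The same join can be exercised on a plain int[]. A sketch, assuming an int[] in place of the IntWritable[] returned by get(); JoinDemo and its methods are hypothetical names. It also shows java.util.StringJoiner (Java 8+), which inserts the delimiter only between elements, so no trailing comma has to be deleted:

```java
import java.util.StringJoiner;

// Sketch only: int[] stands in for the IntWritable[] that IntArrayWritable.get() returns.
final class JoinDemo {

    // Same approach as IntArrayWritable.toString(): append "value," then drop the trailing comma.
    static String withStringBuilder(int[] values) {
        StringBuilder sb = new StringBuilder();
        for (int v : values) {
            sb.append(v).append(',');
        }
        if (sb.length() > 0) { // guard: deleteCharAt(-1) throws on an empty array
            sb.deleteCharAt(sb.length() - 1);
        }
        return sb.toString();
    }

    // Alternative: StringJoiner adds the delimiter only between elements.
    static String withStringJoiner(int[] values) {
        StringJoiner joiner = new StringJoiner(",");
        for (int v : values) {
            joiner.add(Integer.toString(v));
        }
        return joiner.toString(); // "" for an empty array
    }
}
```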

The complete code is as follows:

```java
/**
 * Created with IntelliJ IDEA.
 * User: hadoop
 * Date: 16-3-5
 * Time: 9:24 AM
 */
import java.io.IOException;
import java.lang.reflect.Array;
import java.net.URI;
import java.util.Arrays;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.ArrayWritable;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.io.Writable;
import org.apache.hadoop.mapreduce.InputSplit;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.FileSplit;
import org.apache.hadoop.mapreduce.lib.input.SequenceFileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

public class MutiInputMatrixProduct {
    // Multiplies each input row by F; rows from every file except B ("10IntArray") are scaled by u = 2.
    public static class MyMapper extends Mapper<IntWritable, IntArrayWritable, IntWritable, IntArrayWritable> {
        public IntArrayWritable map_value = new IntArrayWritable();
        // right-hand matrix F (4 x 5), hard-coded
        public static int[][] rightMatrix = new int[][]{{10, 10, 10, 10, 10}, {10, 10, 10, 10, 10}, {10, 10, 10, 10, 10},
                {10, 10, 10, 10, 10}};
        public Object obValue = null;
        public IntWritable[] arraySum = new IntWritable[rightMatrix[0].length];
        public int sum = 0;

        @Override
        public void map(IntWritable key, IntArrayWritable value, Context context) throws IOException, InterruptedException {
            obValue = value.toArray();
            InputSplit inputSplit = context.getInputSplit();
            String fileName = ((FileSplit) inputSplit).getPath().getName();

            if (!(fileName.equals("10IntArray"))) {
                // s, b, d, f: row * u * F
                for (int i = 0; i < rightMatrix[0].length; ++i) {
                    sum = 0;
                    for (int j = 0; j < rightMatrix.length; ++j) {
                        sum += Integer.parseInt(Array.get(obValue, j).toString()) * 2 * rightMatrix[j][i];
                    }
                    arraySum[i] = new IntWritable(sum);
                }
                map_value.set(arraySum);
            } else {
                // B: row * F
                for (int i = 0; i < rightMatrix[0].length; ++i) {
                    sum = 0;
                    for (int j = 0; j < rightMatrix.length; ++j) {
                        sum += Integer.parseInt(Array.get(obValue, j).toString()) * rightMatrix[j][i];
                    }
                    arraySum[i] = new IntWritable(sum);
                }
                map_value.set(arraySum);
            }
            context.write(key, map_value);
        }
    }

    // Sums the partial rows that share a row key and writes the row as text.
    public static class MyReducer extends Reducer<IntWritable, IntArrayWritable, IntWritable, Text> {
        public int[] sum = null;
        public Object obValue = null;
        public Text resultText = null;

        @Override
        public void setup(Context context) {
            // "leftMatrixNum" is set to 5 in main(), i.e. the number of columns of F
            sum = new int[Integer.parseInt(context.getConfiguration().get("leftMatrixNum"))];
        }

        @Override
        public void reduce(IntWritable key, Iterable<IntArrayWritable> value, Context context) throws IOException, InterruptedException {
            for (IntArrayWritable intValue : value) {
                obValue = intValue.toArray();
                for (int i = 0; i < Array.getLength(obValue); ++i) {
                    sum[i] += Integer.parseInt(Array.get(obValue, i).toString());
                }
            }
            resultText = new Text(Arrays.toString(sum));
            Arrays.fill(sum, 0); // reset the accumulator for the next key
            context.write(key, resultText);
        }
    }

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        String uri = "/home/hadoop/2016Test/Input";
        String outUri = "/home/hadoop/2016Test/Output";
        Configuration conf = new Configuration();
        FileSystem fs = FileSystem.get(URI.create(uri), conf);
        fs.delete(new Path(outUri), true); // remove old output, otherwise the job refuses to start
        conf.set("leftMatrixNum", "5");
        Job job = Job.getInstance(conf, "MultiMatrix"); // replaces the deprecated new Job(conf, ...)
        job.setJarByClass(MutiInputMatrixProduct.class);
        job.setInputFormatClass(SequenceFileInputFormat.class);
        job.setOutputFormatClass(TextOutputFormat.class);
        job.setMapperClass(MyMapper.class);
        job.setReducerClass(MyReducer.class);
        job.setMapOutputKeyClass(IntWritable.class);
        job.setMapOutputValueClass(IntArrayWritable.class);
        job.setOutputKeyClass(IntWritable.class);
        job.setOutputValueClass(Text.class);
        FileInputFormat.setInputPaths(job, new Path(uri));
        FileOutputFormat.setOutputPath(job, new Path(outUri));
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}

class IntArrayWritable extends ArrayWritable {
    public IntArrayWritable() {
        super(IntWritable.class);
    }

    @Override
    public String toString() {
        StringBuilder sb = new StringBuilder();
        for (Writable val : get()) {
            IntWritable intWritable = (IntWritable) val;
            sb.append(intWritable.get());
            sb.append(",");
        }
        if (sb.length() > 0) { // avoid deleteCharAt(-1) on an empty array
            sb.deleteCharAt(sb.length() - 1);
        }
        return sb.toString();
    }
}
```