Defining DoubleArray and writing it as the value in a SequenceFile

1) The code:

    /**
     * Created with IntelliJ IDEA.
     * User: hadoop
     * Date: 16-1-20
     * Time: 7:30 PM
     */
    import org.apache.hadoop.io.ArrayWritable;
    import org.apache.hadoop.io.DoubleWritable;

    public class DoubleWritableArray {

        /** An ArrayWritable whose element type is fixed to DoubleWritable. */
        public static class DoubleArray extends ArrayWritable {
            public DoubleArray() {
                super(DoubleWritable.class);
            }

            /** Unwraps an array of DoubleWritable into a primitive double[]. */
            public static double[] convert2double(DoubleWritable[] w) {
                double[] value = new double[w.length];
                for (int i = 0; i < value.length; i++) {
                    value[i] = w[i].get();
                }
                return value;
            }
        }

        public static void main(String[] args) {
            ArrayWritable aw = new ArrayWritable(DoubleWritable.class);
            aw.set(new DoubleWritable[] {new DoubleWritable(4.34), new DoubleWritable(6.56),
                    new DoubleWritable(9.56)});

            // The cast works here because get() returns the same DoubleWritable[] that was passed to set().
            DoubleWritable[] values = (DoubleWritable[]) aw.get();
            for (DoubleWritable val1 : values) {
                System.out.println(val1);
            }

            // Compared with ArrayWritable, the new DoubleArray only saves passing
            // DoubleWritable.class to the constructor.
            DoubleArray d = new DoubleArray();
            d.set(new DoubleWritable[] {new DoubleWritable(4.34), new DoubleWritable(6.56),
                    new DoubleWritable(9.56)});

            double[] temp = DoubleArray.convert2double((DoubleWritable[]) d.get());
            for (double val : temp) {
                System.out.println(val);
            }
        }
    }

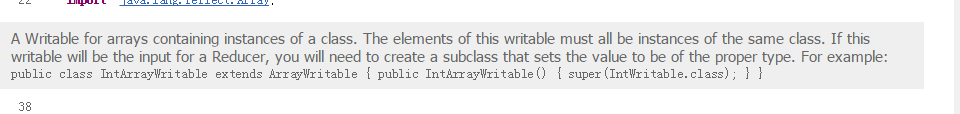

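To use an ArrayWritable as reduce input, you need to create a subclass of it, and that subclass must declare a no-argument constructor that fixes the element type.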
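
Hadoop creates value objects by reflection and only then fills them from the byte stream, and ArrayWritable itself has no no-argument constructor to call. The sketch below mimics that step using only `java.io` and reflection. `NoArgConstructorSketch`, `MiniArray`, `MiniDoubleArray`, and `roundTrip` are hypothetical stand-ins for illustration, not Hadoop classes.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.Arrays;

public class NoArgConstructorSketch {

    // Hypothetical stand-in for ArrayWritable: the element type is a required
    // constructor argument, so there is no no-argument constructor.
    static class MiniArray {
        protected double[] values = new double[0];
        private final String elementType;

        MiniArray(String elementType) {
            this.elementType = elementType;
        }

        String getElementType() {
            return elementType;
        }

        void write(DataOutputStream out) throws IOException {
            out.writeInt(values.length);
            for (double v : values) {
                out.writeDouble(v);
            }
        }

        void readFields(DataInputStream in) throws IOException {
            values = new double[in.readInt()];
            for (int i = 0; i < values.length; i++) {
                values[i] = in.readDouble();
            }
        }
    }

    // Hypothetical stand-in for DoubleArray: the no-argument constructor
    // supplies the element type on the caller's behalf.
    static class MiniDoubleArray extends MiniArray {
        public MiniDoubleArray() {
            super("double");
        }
    }

    // Mimics a framework: serialize src, create a fresh instance of cls by
    // reflection, then fill that instance from the serialized bytes.
    static <T extends MiniArray> T roundTrip(MiniArray src, Class<T> cls) {
        try {
            ByteArrayOutputStream buf = new ByteArrayOutputStream();
            src.write(new DataOutputStream(buf));
            T copy = cls.getDeclaredConstructor().newInstance();
            copy.readFields(new DataInputStream(new ByteArrayInputStream(buf.toByteArray())));
            return copy;
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException(cls.getSimpleName() + " needs a no-argument constructor", e);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        MiniDoubleArray src = new MiniDoubleArray();
        src.values = new double[] {4.34, 6.56, 9.56};

        // The subclass can be created reflectively, so the round trip succeeds.
        System.out.println(Arrays.toString(roundTrip(src, MiniDoubleArray.class).values));

        // The base class cannot, so the same call fails.
        try {
            roundTrip(src, MiniArray.class);
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

In this sketch the subclass round-trips cleanly, while the base class fails at the reflective instantiation step before any bytes are read.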

2) Read a txt file and write it to a SequenceFile as <long, DoubleArray> key-value pairs

    package convert;

    /**
     * Created with IntelliJ IDEA.
     * User: hadoop
     * Date: 16-1-19
     * Time: 3:09 PM
     */
    import java.io.File;
    import java.io.IOException;
    import java.net.URI;

    import org.apache.commons.io.FileUtils;
    import org.apache.commons.io.LineIterator;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.DoubleWritable;
    import org.apache.hadoop.io.IOUtils;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.SequenceFile;

    public class SequenceFileWriteDemo {
        public static void main(String[] args) throws IOException {
            String uri = "/home/hadoop/srcData/bDoubleArraySeq";
            Configuration conf = new Configuration();
            FileSystem fs = FileSystem.get(URI.create(uri), conf);
            Path path = new Path(uri);
            LongWritable key = new LongWritable();
            // DoubleArrayWritable is not defined in this post; presumably it is an ArrayWritable
            // subclass in this package with a no-arg constructor, like DoubleArray in part 1.
            DoubleArrayWritable value = new DoubleArrayWritable();
            SequenceFile.Writer writer = null;
            try {
                writer = SequenceFile.createWriter(fs, conf, path, key.getClass(),
                        value.getClass());

                final LineIterator it2 = FileUtils.lineIterator(
                        new File("/home/hadoop/srcData/transB.txt"), "UTF-8");
                try {
                    int i = 0; // 1-based line number, used as the key
                    while (it2.hasNext()) {
                        ++i;
                        final String line = it2.nextLine();
                        key.set(i);
                        // Each line holds tab-separated numbers; wrap each one in a DoubleWritable.
                        String[] strings = line.split("\t");
                        DoubleWritable[] doubleWritables = new DoubleWritable[strings.length];
                        for (int j = 0; j < doubleWritables.length; j++) {
                            doubleWritables[j] = new DoubleWritable(Double.parseDouble(strings[j]));
                        }
                        value.set(doubleWritables);
                        writer.append(key, value);
                    }
                } finally {
                    it2.close();
                }
            } finally {
                IOUtils.closeStream(writer);
            }
            System.out.println("ok");
        }
    }
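
The per-line conversion in the loop can be pulled out into a small helper, which makes it possible to check the parsing without a Hadoop installation. This is only a sketch: `RowParser` and `parseRow` are hypothetical names, and it assumes, as the code above does, that every line consists of tab-separated numeric fields.

```java
public class RowParser {

    // Splits one tab-separated line into a primitive double[].
    // An empty line or a non-numeric field raises NumberFormatException.
    static double[] parseRow(String line) {
        String[] fields = line.split("\t");
        double[] row = new double[fields.length];
        for (int j = 0; j < fields.length; j++) {
            row[j] = Double.parseDouble(fields[j]);
        }
        return row;
    }

    public static void main(String[] args) {
        double[] row = parseRow("4.34\t6.56\t9.56");
        for (double v : row) {
            System.out.println(v);
        }
    }
}
```

Each element of the returned array would then be wrapped in a DoubleWritable before calling `value.set(...)`, as in the loop above.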