DolphinScheduler 3.2.0 Cluster Deployment and Installation

I. Download the Binary Installation Package

Cluster configuration

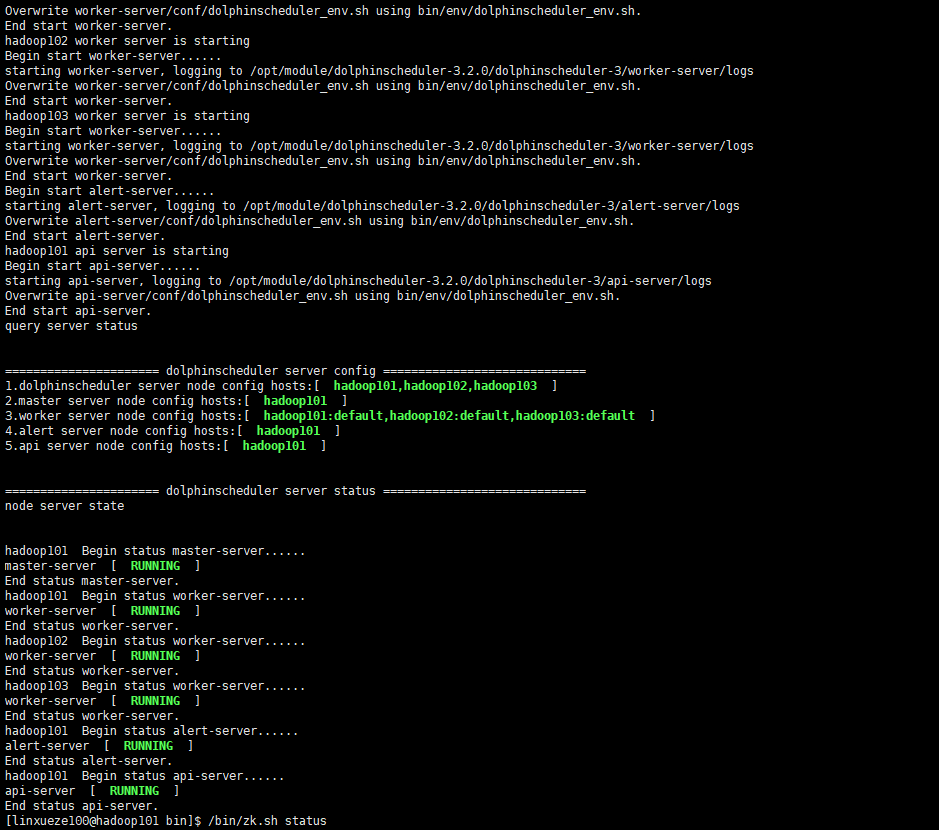

| Hostname | IP | Deployed services |
| --- | --- | --- |
| hadoop101 | 192.168.12.101 | MasterServer, WorkerServer, ApiServer, AlertServer |
| hadoop102 | 192.168.12.102 | WorkerServer |
| hadoop103 | 192.168.12.103 | WorkerServer |
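The configuration files below refer to machines by hostname (hadoop101, hadoop102, hadoop103), so every node has to be able to resolve those names. The following is a minimal sketch that prints the hostname-to-IP mapping from the table above in `/etc/hosts` format. It assumes you resolve names through `/etc/hosts` rather than DNS. The function name `print_hosts_entries` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints /etc/hosts-style "IP hostname" lines for the
# three machines in the cluster table. Assumes name resolution via /etc/hosts.
print_hosts_entries() {
  local entries=(
    "192.168.12.101 hadoop101"
    "192.168.12.102 hadoop102"
    "192.168.12.103 hadoop103"
  )
  printf '%s\n' "${entries[@]}"
}
```

On each of the three machines you could then run `print_hosts_entries | sudo tee -a /etc/hosts`. Check first that the entries are not already present, because `tee -a` simply appends.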

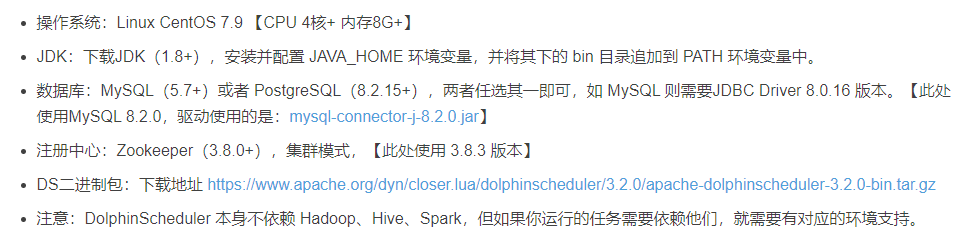

II. Configure the Environment

1.

2.

3.

4.


# including master, worker, api, alert. If you want to deploy in pseudo-distributed

# mode, just write a pseudo-distributed hostname

# modify it if you use different ssh port

sshPort=${sshPort:-"22"}

# A comma separated list of machine hostname or IP would be installed Master server, it

# must be a subset of configuration `ips`.

# Example for hostnames: masters="ds1,ds2", Example for IPs: masters="192.168.8.1,192.168.8.2"

masters=${masters:-"hadoop101"}

# A comma separated list of machine <hostname>:<workerGroup> or <IP>:<workerGroup>.All hostname or IP must be a

# subset of configuration `ips`, And workerGroup have default value as `default`, but we recommend you declare behind the hosts

# Example for hostnames: workers="ds1:default,ds2:default,ds3:default", Example for IPs: workers="192.168.8.1:default,192.168.8.2:default,192.168.8.3:default"

workers=${workers:-"hadoop101:default,hadoop102:default,hadoop103:default"}

# A comma separated list of machine hostname or IP would be installed Alert server, it

# must be a subset of configuration `ips`.

# Example for hostname: alertServer="ds3", Example for IP: alertServer="192.168.8.3"

alertServer=${alertServer:-"hadoop101"}

# A comma separated list of machine hostname or IP would be installed API server, it

# must be a subset of configuration `ips`.

# Example for hostname: apiServers="ds1", Example for IP: apiServers="192.168.8.1"

apiServers=${apiServers:-"hadoop101"}

# The directory to install DolphinScheduler for all machine we config above. It will automatically be created by `install.sh` script if not exists.

# Do not set this configuration same as the current path (pwd). Do not add quotes to it if you using related path.

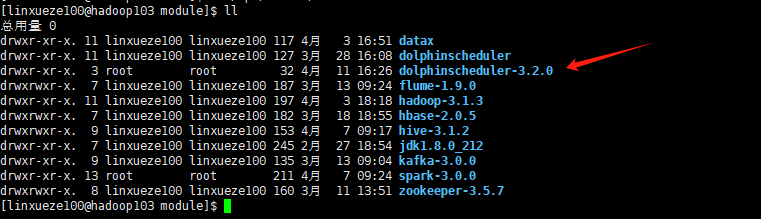

installPath=${installPath:-"/opt/module/dolphinscheduler-3.2.0/dolphinscheduler-3"}

# The user to deploy DolphinScheduler for all machine we config above. For now user must create by yourself before running `install.sh`

# script. The user needs to have sudo privileges and permissions to operate hdfs. If hdfs is enabled than the root directory needs

# to be created by this user

deployUser=${deployUser:-"linxueze100"}

# The root of zookeeper, for now DolphinScheduler default registry server is zookeeper.

# It will delete ${zkRoot} in the zookeeper when you run install.sh, so please keep it same as registry.zookeeper.namespace in yml files.

# Similarly, if you want to modify the value, please modify registry.zookeeper.namespace in yml files as well.

zkRoot=${zkRoot:-"/dolphinscheduler"}

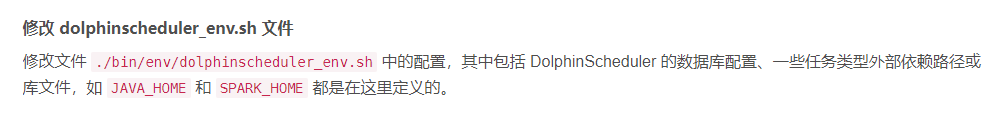
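`install.sh` is normally run as `deployUser` and reaches the other hosts over SSH on `sshPort`. Passwordless SSH to every host named in `masters`, `workers`, `alertServer` and `apiServers` therefore needs to work beforehand. The following is a minimal sketch that assumes those variables have been sourced from the file above into the current shell. `collect_hosts` and `check_ssh` are hypothetical helper names, not part of DolphinScheduler.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; assumes the install_env.sh variables are already set
# in this shell (e.g. by sourcing the file).

# Print each distinct hostname from masters, workers, alertServer, apiServers.
# Worker entries look like "host:workerGroup", so the ":group" part is stripped.
collect_hosts() {
  local items item
  IFS=',' read -ra items <<< "${masters},${workers},${alertServer},${apiServers}"
  for item in "${items[@]}"; do
    if [ -n "$item" ]; then
      printf '%s\n' "${item%%:*}"
    fi
  done | sort -u
}

# Try a non-interactive SSH login to each host. BatchMode makes ssh fail
# instead of prompting for a password; -n keeps ssh from reading stdin.
check_ssh() {
  local host rc=0
  for host in $(collect_hosts); do
    if ssh -n -p "${sshPort:-22}" -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
      echo "OK   $host"
    else
      echo "FAIL $host"
      rc=1
    fi
  done
  return "$rc"
}
```

Run `check_ssh` as `deployUser` on hadoop101. A `FAIL` line means key-based login to that host (for example via `ssh-copy-id`) is not set up yet.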

5.


```shell
# applicationId auto collection related configuration, the following configurations are unnecessary if setting appId.collect=log
#export HADOOP_CLASSPATH=`hadoop classpath`:${DOLPHINSCHEDULER_HOME}/tools/libs/*
#export SPARK_DIST_CLASSPATH=$HADOOP_CLASSPATH:$SPARK_DIST_CLASS_PATH
#export HADOOP_CLIENT_OPTS="-javaagent:${DOLPHINSCHEDULER_HOME}/tools/libs/aspectjweaver-1.9.7.jar":$HADOOP_CLIENT_OPTS
#export SPARK_SUBMIT_OPTS="-javaagent:${DOLPHINSCHEDULER_HOME}/tools/libs/aspectjweaver-1.9.7.jar":$SPARK_SUBMIT_OPTS
#export FLINK_ENV_JAVA_OPTS="-javaagent:${DOLPHINSCHEDULER_HOME}/tools/libs/aspectjweaver-1.9.7.jar":$FLINK_ENV_JAVA_OPTS

# Database connection settings
export DATABASE=${DATABASE:-mysql}
export SPRING_PROFILES_ACTIVE=${DATABASE}
export SPRING_DATASOURCE_URL="jdbc:mysql://hadoop101:3306/dolphinscheduler3?useSSL=false&useUnicode=true&allowPublicKeyRetrieval=true&characterEncoding=UTF-8"
export SPRING_DATASOURCE_USERNAME=dolphinscheduler3
export SPRING_DATASOURCE_PASSWORD=000000

# DolphinScheduler server settings; the defaults are fine here
export SPRING_CACHE_TYPE=${SPRING_CACHE_TYPE:-none}
export SPRING_JACKSON_TIME_ZONE=${SPRING_JACKSON_TIME_ZONE:-UTC}
export MASTER_FETCH_COMMAND_NUM=${MASTER_FETCH_COMMAND_NUM:-10}

# Registry center settings (ZooKeeper): registry type and connection string
export REGISTRY_TYPE=${REGISTRY_TYPE:-zookeeper}
export REGISTRY_ZOOKEEPER_CONNECT_STRING=${REGISTRY_ZOOKEEPER_CONNECT_STRING:-hadoop101:2181,hadoop102:2181,hadoop103:2181}

# Paths of task-related components; change them if you use the corresponding task types
export HADOOP_HOME=${HADOOP_HOME:-/opt/module/hadoop-3.1.3}
export HADOOP_CONF_DIR=${HADOOP_CONF_DIR:-/opt/module/hadoop-3.1.3/etc/hadoop}
export SPARK_HOME=${SPARK_HOME:-/opt/module/spark-3.0.0}
export HIVE_HOME=${HIVE_HOME:-/opt/module/hive-3.1.2}
export DATAX_HOME=${DATAX_HOME:-/opt/module/datax}
#export PYTHON_LAUNCHER=${PYTHON_LAUNCHER:-/opt/soft/python}
#export FLINK_HOME=${FLINK_HOME:-/opt/soft/flink}

# Add the component bin directories to PATH
export PATH=$HADOOP_HOME/bin:$SPARK_HOME/bin:$JAVA_HOME/bin:$HIVE_HOME/bin:$DATAX_HOME/bin:$PATH
```
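The component paths in this file are machine-specific. A wrong one can go unnoticed until a task of that type fails. The following is a minimal sketch that reports which configured directories are unset or missing on the current machine. It also prints `PATH` one entry per line, so you can confirm the DataX `bin` directory made it in. It assumes this file has already been sourced. `check_component_dirs` and `print_path_entries` are hypothetical names, and the variable list simply mirrors the exports above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; assumes dolphinscheduler_env.sh has been sourced first.

# Print each component directory variable that is unset or points to a
# non-existent directory; return non-zero if any problem was found.
check_component_dirs() {
  local var dir missing=0
  for var in HADOOP_HOME HADOOP_CONF_DIR SPARK_HOME HIVE_HOME DATAX_HOME; do
    dir="${!var}"   # indirect expansion: the value of the variable named in $var
    if [ -z "$dir" ]; then
      echo "UNSET   $var"
      missing=1
    elif [ ! -d "$dir" ]; then
      echo "MISSING $var=$dir"
      missing=1
    fi
  done
  return "$missing"
}

# Print PATH with one entry per line.
print_path_entries() {
  tr ':' '\n' <<< "$PATH"
}
```

Running `check_component_dirs` on each worker host (hadoop101, hadoop102 and hadoop103 in this layout) is a cheap way to catch a typo before `install.sh` distributes the file.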

6.

7.

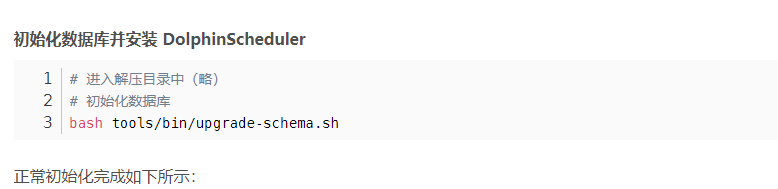

8.

Don't let the chase make you forget who you were at the start.

Zhejiang Public Security Network Filing No. 33010602011771
