Flink:分流写入Kafka

前言:接上一篇

需求描述:数据类型分别是页面数据、曝光数据、启动数据,分成三个流写入Kafka

// 5.使用侧输出流将 启动、曝光、页面数据分流 OutputTag<String> startoutputTag = new OutputTag<String>("start"){ }; OutputTag<String> displayoutputTag = new OutputTag<String>("display") { }; SingleOutputStreamOperator<String> pageDS = jsonObjWithNewFlag.process(new ProcessFunction<JSONObject, String>() { @Override public void processElement(JSONObject jsonObject, Context ctx, Collector<String> out) throws Exception { // 获取启动数据 String start = jsonObject.getString("start"); if (start != null && start.length() > 0) { // 为启动数据 ctx.output(startoutputTag, jsonObject.toJSONString()); } else { // 不是启动数据,则一定为页面数据 out.collect(jsonObject.toJSONString()); // 获取曝光数据 JSONArray displays = jsonObject.getJSONArray("displays"); // 取出公共字段、页面信息、时间戳 JSONObject common = jsonObject.getJSONObject("common"); JSONObject page = jsonObject.getJSONObject("page"); Long ts = jsonObject.getLong("ts"); // 判断曝光数据是否存在 if (displays != null && displays.size() > 0) { JSONObject displayObj = new JSONObject(); displayObj.put("common", common); displayObj.put("page", page); displayObj.put("ts", ts); // 遍历每一个曝光信息 for (Object display : displays) { displayObj.put("display", display); // 输出到侧输出流 ctx.output(displayoutputTag, displayObj.toJSONString()); } } } } }); // 6.将三个流的数据写入Kafka jsonObjDS.getSideOutput(dirty).print("Dirty>>>>>>>>>>>>"); pageDS.print("page>>>>>>>>>"); pageDS.getSideOutput(startoutputTag).print("Start>>>>>>>>>>>"); pageDS.getSideOutput(displayoutputTag).print("Dirty>>>>>>>>>>");

// 创建Kakfa主题

String pageSinkTopic = "dwd_page_log";

String startSinkTopic = "dwd_start_log";

String displaySinkTopic = "dwd_display_log";

// 写入Kafka主题

pageDS.addSink(MyKafkaUtil.getKafkaSink(pageSinkTopic));

pageDS.getSideOutput(startoutputTag).addSink(MyKafkaUtil.getKafkaSink(startSinkTopic));

pageDS.getSideOutput(displayoutputTag).addSink(MyKafkaUtil.getKafkaSink(displaySinkTopic));

测试一:

执行IDEA主程序,启动Kafka生产者

bin/kafka-console-producer.sh --broker-list hadoop1:9092 --topic ods_base_log

并在生产者中写入数据

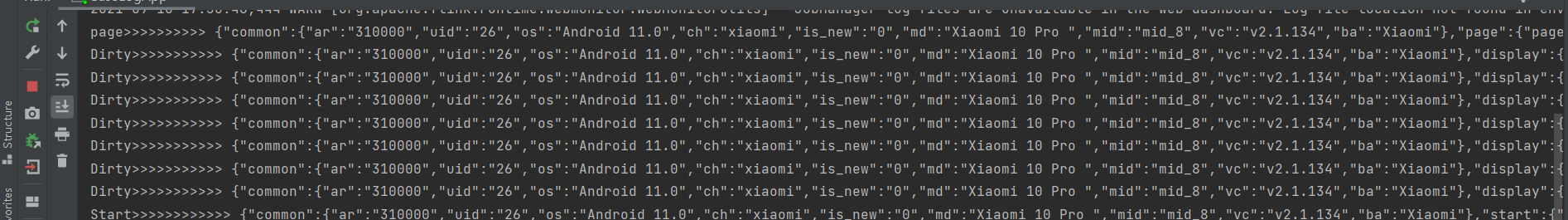

曝光页面日志

{"common":{"ar":"310000","ba":"Xiaomi","ch":"xiaomi","is_new":"0","md":"Xiaomi 10 Pro ","mid":"mid_8","os":"Android 11.0","uid":"26","vc":"v2.1.134"},"displays":[{"display_type":"query","item":"9","item_type":"sku_id","order":1,"pos_id":5},{"display_type":"recommend","item":"8","item_type":"sku_id","order":2,"pos_id":2},{"display_type":"promotion","item":"6","item_type":"sku_id","order":3,"pos_id":4},{"display_type":"query","item":"10","item_type":"sku_id","order":4,"pos_id":3},{"display_type":"promotion","item":"5","item_type":"sku_id","order":5,"pos_id":3},{"display_type":"query","item":"8","item_type":"sku_id","order":6,"pos_id":3},{"display_type":"promotion","item":"1","item_type":"sku_id","order":7,"pos_id":4}],"page":{"during_time":15161,"item":"口红","item_type":"keyword","last_page_id":"home","page_id":"good_list"},"ts":1622273617000}

启动日志

{"common":{"ar":"310000","ba":"Xiaomi","ch":"xiaomi","is_new":"0","md":"Xiaomi 10 Pro ","mid":"mid_8","os":"Android 11.0","uid":"26","vc":"v2.1.134"},"start":{"entry":"icon","loading_time":3652,"open_ad_id":18,"open_ad_ms":8355,"open_ad_skip_ms":0},"ts":1622273617000}

查看IDEA客户端是否成功分流

测试二:

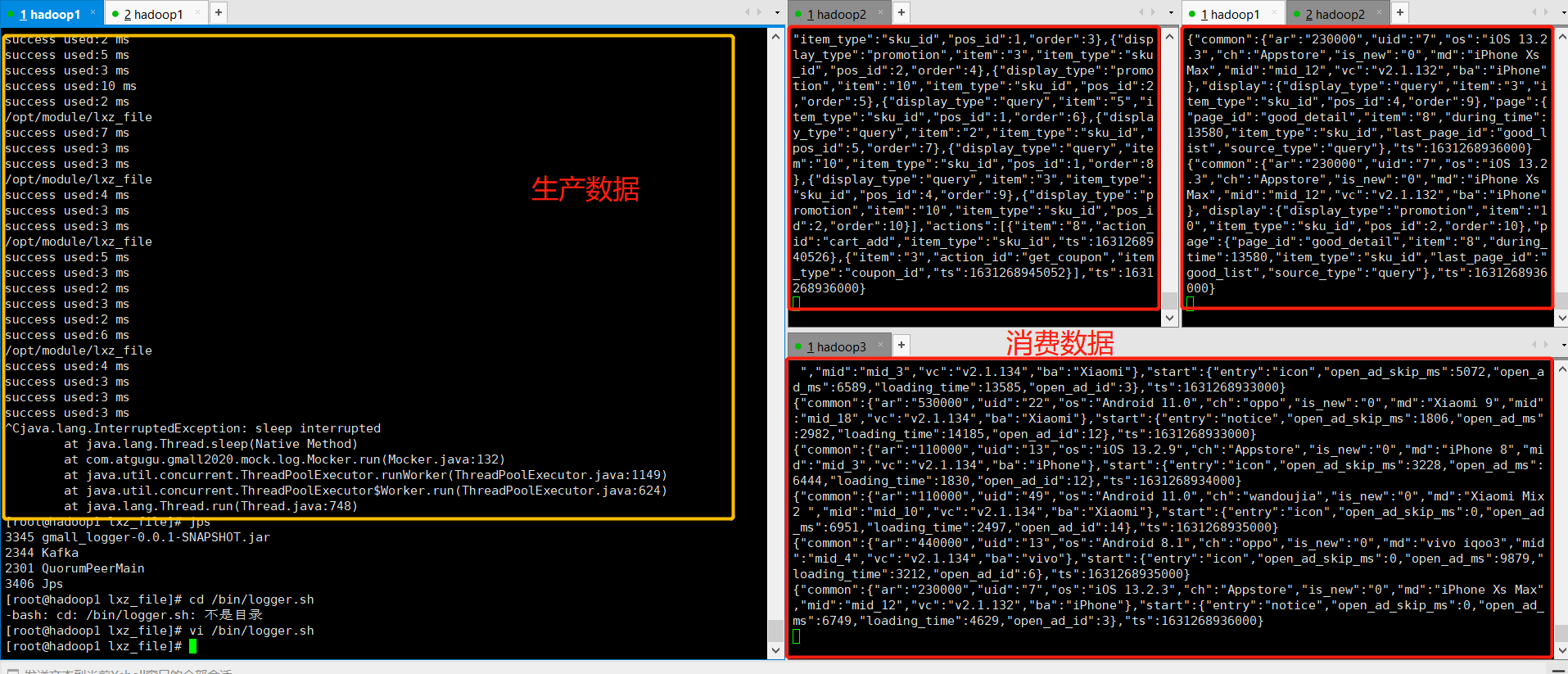

实现Nginx下的动态分流,将生产环境下的数据分流写入不同的Kafka主题

环境:zk、kafka、logger.sh、nginx负载均衡、mysql-binlog、FlinkCDC

1.需要开启服务器zk、kafka集群还有logger.sh

logger.sh脚本

#!/bin/bash JAVA_BIN=/opt/module/jdk1.8.0_144/bin/java APPNAME=gmall_logger-0.0.1-SNAPSHOT.jar case $1 in "start") { for i in hadoop1 hadoop2 hadoop3 do echo "========: $i===============" ssh $i "$JAVA_BIN -Xms32m -Xmx64m -jar /opt/module/lxz_file/$APPNAME >/dev/null 2>&1 &" done echo "========NGINX===============" /opt/module/nginx/sbin/nginx };; "stop") { echo "======== NGINX===============" /opt/module/nginx/sbin/nginx -s stop for i in hadoop1 hadoop2 hadoop3 do echo "========: $i===============" ssh $i "ps -ef|grep $APPNAME |grep -v grep|awk '{print \$2}'|xargs kill" >/dev/null 2>&1 done };; esac

2.开启三个流的kafka消费者

# 页面日志消费者

bin/kafka-console-consumer.sh --bootstrap-server hadoop102:9092 --topic dwd_page_log # 曝光日志消费者 bin/kafka-console-consumer.sh --bootstrap-server hadoop102:9092 --topic dwd_display_log # 启动日志消费者 bin/kafka-console-consumer.sh --bootstrap-server hadoop102:9092 --topic dwd_start_log

3.启动IDEA主程序BaseLogApp

4.服务器启动mock.log(注意修改application的访问端口是Nginx的)

5.查看三个消费者是否成功分流消费数据

不要为了追逐,而忘记当初的样子。

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· go语言实现终端里的倒计时

· 如何编写易于单元测试的代码

· 10年+ .NET Coder 心语,封装的思维:从隐藏、稳定开始理解其本质意义

· .NET Core 中如何实现缓存的预热?

· 从 HTTP 原因短语缺失研究 HTTP/2 和 HTTP/3 的设计差异

· 分享一个免费、快速、无限量使用的满血 DeepSeek R1 模型,支持深度思考和联网搜索!

· 基于 Docker 搭建 FRP 内网穿透开源项目(很简单哒)

· ollama系列01:轻松3步本地部署deepseek,普通电脑可用

· 按钮权限的设计及实现

· 25岁的心里话