爬虫的学习

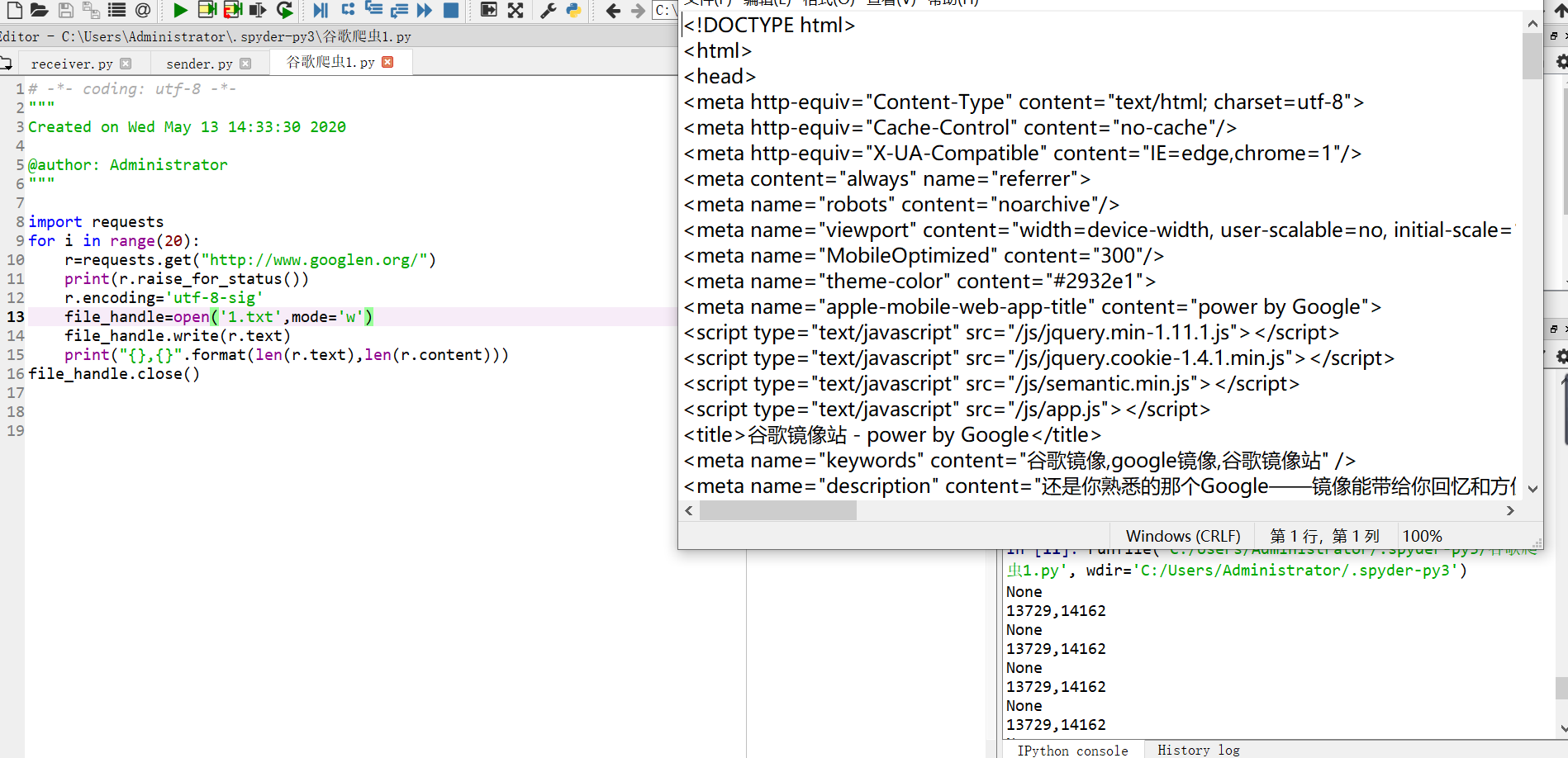

1.用requests库的ge()函数反问一个网站20次,打印返回状态,text()内容,计算text()属性和content()属性所返回网页内容的长度。

1 import requests 2 for i in range(20): 3 r=requests.get("http://www.googlen.org/") 4 print(r.raise_for_status()) 5 r.encoding='utf-8-sig' 6 file_handle=open('1.txt',mode='w') 7 file_handle.write(r.text) 8 print("{},{}".format(len(r.text),len(r.content))) 9 file_handle.close()

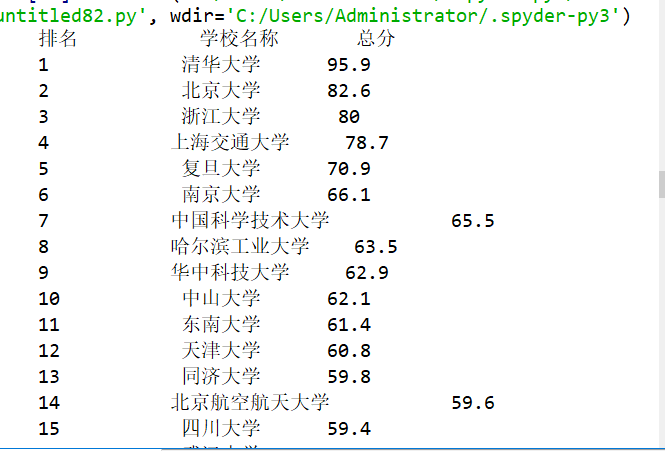

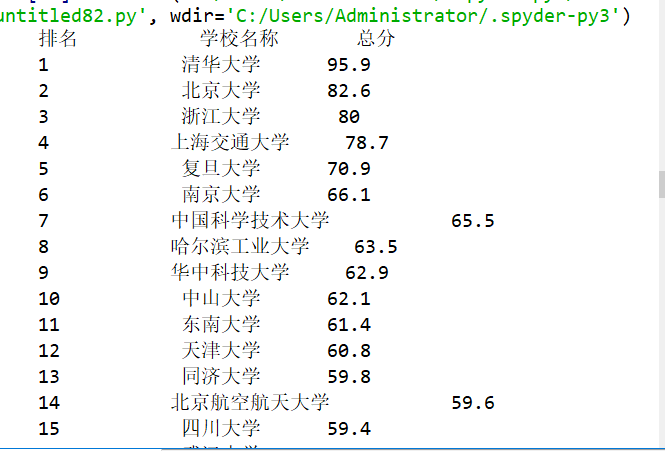

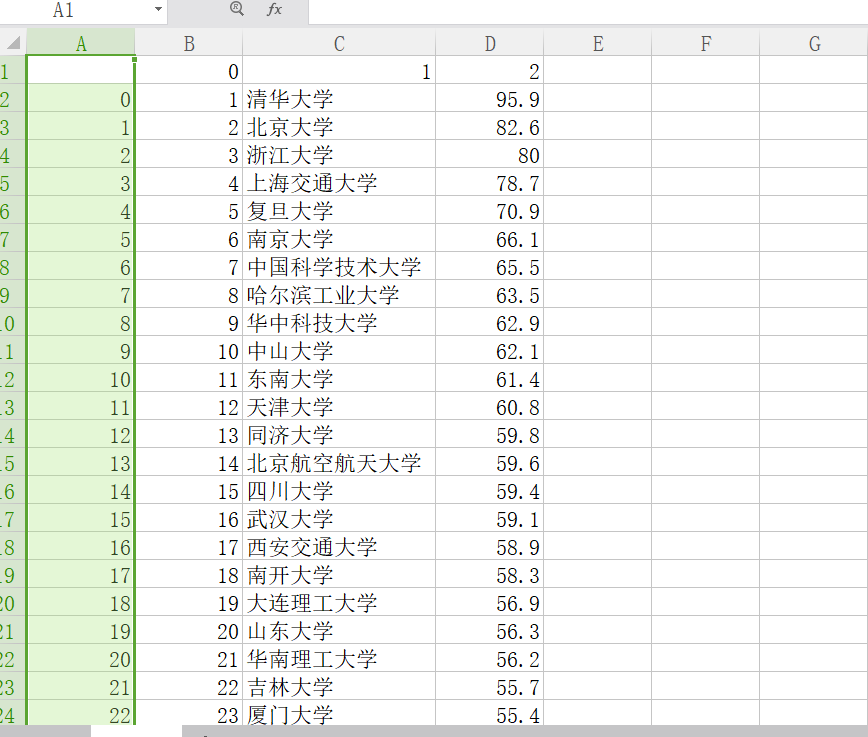

2.中国大学排名网络爬虫

import requests from bs4 import BeautifulSoup import bs4 import pandas as pd def getHTMLText(url): #爬取最好大学排名网站内容 try: r = requests.get(url, timeout = 30) r.raise_for_status() r.encoding = r.apparent_encoding return r.text except: return "" def fillUnivList(ulist, html): #将爬取的内容中的所需内容找出并存入列表 soup = BeautifulSoup(html, "html.parser") for tr in soup.find('tbody').children: if isinstance(tr, bs4.element.Tag): tds = tr('td') ulist.append([tds[0].string, tds[1].string, tds[3].string]) data1 = pd.DataFrame(ulist) data1.to_csv('data1.csv') def printUnivList(ulist, num): #将信息以列表的形式输出 print("{:^10}\t{:^6}\t{:^10}".format("排名", "学校名称", "总分")) for i in range(num): u = ulist[i] print("{:^10}\t{:^6}\t{:^10}".format(u[0], u[1], u[2])) def main(): uinfo = [] url = 'http://www.zuihaodaxue.cn/zuihaodaxuepaiming2016.html' html = getHTMLText(url) fillUnivList(uinfo, html) printUnivList(uinfo, 300) main()

浙公网安备 33010602011771号

浙公网安备 33010602011771号