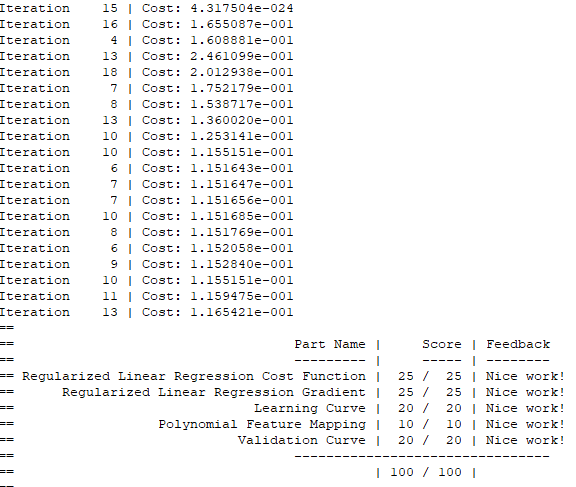

linearRegCostFunction.m

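    % Regularized cost; the bias term theta(1) is excluded from the penalty.
    J = (sum((X*theta - y).^2) + lambda*(sum(theta.^2) - theta(1)^2)) / (2*m);

    % Copy of theta with the bias entry zeroed, so it is not regularized.
    theta1 = [0; theta(2:end)];
    grad = (X'*(X*theta - y) + lambda*theta1) / m;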
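For reference, the cost and gradient computed in linearRegCostFunction.m appear to correspond to the following expressions. This is a sketch in matrix form that uses the code's 1-based indexing, so $\theta_1$ is the bias term; the symbols $n$ (length of $\theta$) and $\tilde{\theta}$ (the vector `theta1` in the code) are notation introduced here, not taken from the original.

$$
J(\theta) = \frac{1}{2m}\left( \lVert X\theta - y \rVert^2 + \lambda \sum_{j=2}^{n} \theta_j^2 \right)
$$

$$
\nabla J(\theta) = \frac{1}{m}\left( X^{\top}(X\theta - y) + \lambda\,\tilde{\theta} \right),
\qquad \tilde{\theta} = (0, \theta_2, \dots, \theta_n)^{\top}
$$

The code obtains the sum over $j \ge 2$ by subtracting $\theta_1^2$ from the full sum of squares, which gives the same value.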

learningCurve.m

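    n = size(Xval, 1);  % number of validation examples
    for i = 1:m
        % Fit on the first i training examples only.
        theta = trainLinearReg(X(1:i, :), y(1:i), lambda);
        % Report both errors without the regularization term.
        error_train(i) = sum((X(1:i, :)*theta - y(1:i)).^2) / (2*i);
        error_val(i) = sum((Xval*theta - yval).^2) / (2*n);
    end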
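Written out, the two quantities stored at step $i$ of the loop in learningCurve.m are the unregularized squared errors below. Here $\theta^{(i)}$ denotes the parameters returned by `trainLinearReg` on the first $i$ training examples, and $X_{1:i}$, $y_{1:i}$ denote those first $i$ rows; this notation is introduced for illustration only.

$$
\text{error\_train}(i) = \frac{1}{2i} \lVert X_{1:i}\,\theta^{(i)} - y_{1:i} \rVert^2,
\qquad
\text{error\_val}(i) = \frac{1}{2n} \lVert X_{\text{val}}\,\theta^{(i)} - y_{\text{val}} \rVert^2
$$

Note that $\lambda$ influences these values only through the fit $\theta^{(i)}$. The validation error is always averaged over all $n$ validation examples, while the training error is averaged over just the $i$ examples used for fitting.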

polyFeatures.m

    % Column i of X_poly holds the i-th element-wise power of X.
    for i = 1:p
        X_poly(:, i) = X.^i;
    end

validationCurve.m

    for i = 1:length(lambda_vec)
        lambda = lambda_vec(i);
        theta = trainLinearReg(X, y, lambda);
        % Evaluate with lambda = 0 so the errors exclude the penalty term.
        [error_train(i), grad] = linearRegCostFunction(X, y, theta, 0);
        [error_val(i), grad] = linearRegCostFunction(Xval, yval, theta, 0);
    end