PyTorch Fundamentals: ResNet

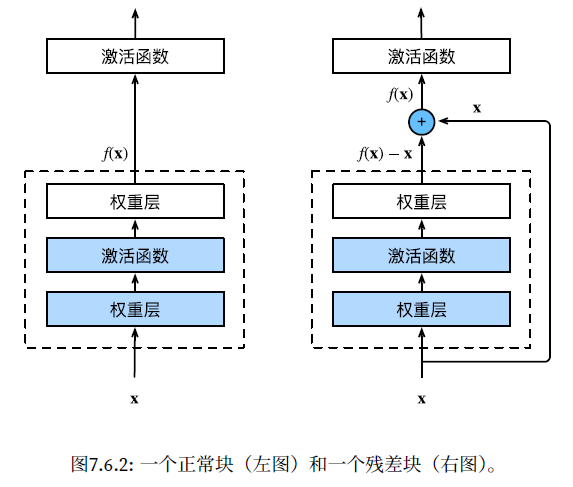

I. Structure

II. Principle

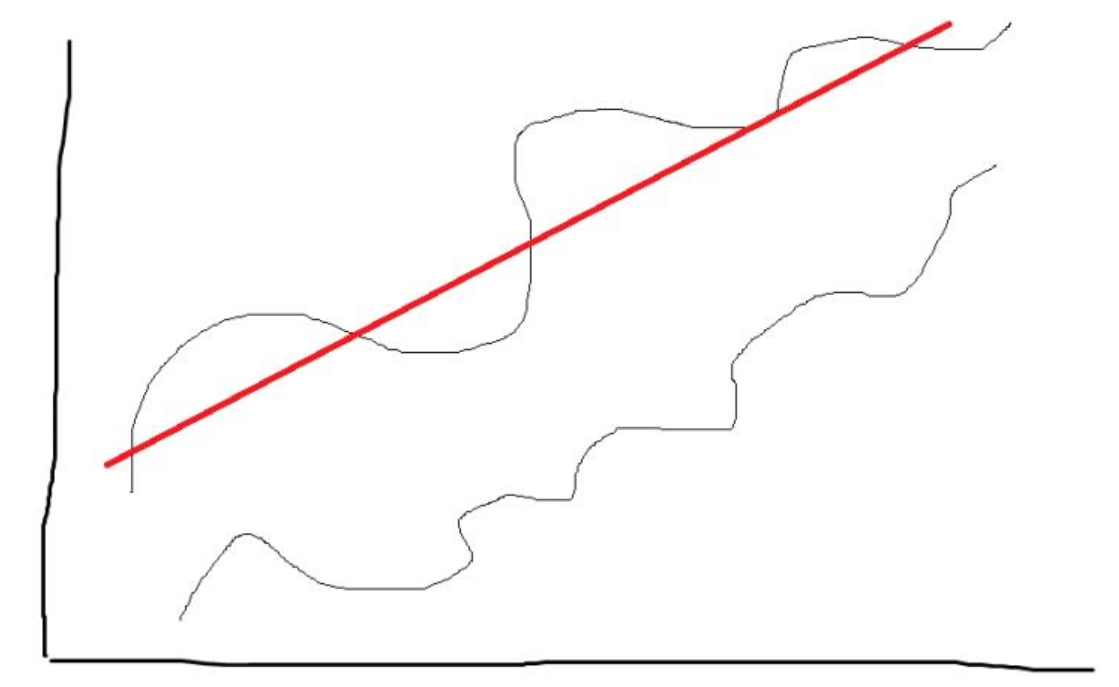

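Why is learning the residual easier to optimize than learning f(x) directly?

An extreme example: suppose the true target function is the red line and the training data follow the upper curve. Subtracting the red line from the data gives the lower curve, which is what the residual branch has to fit.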

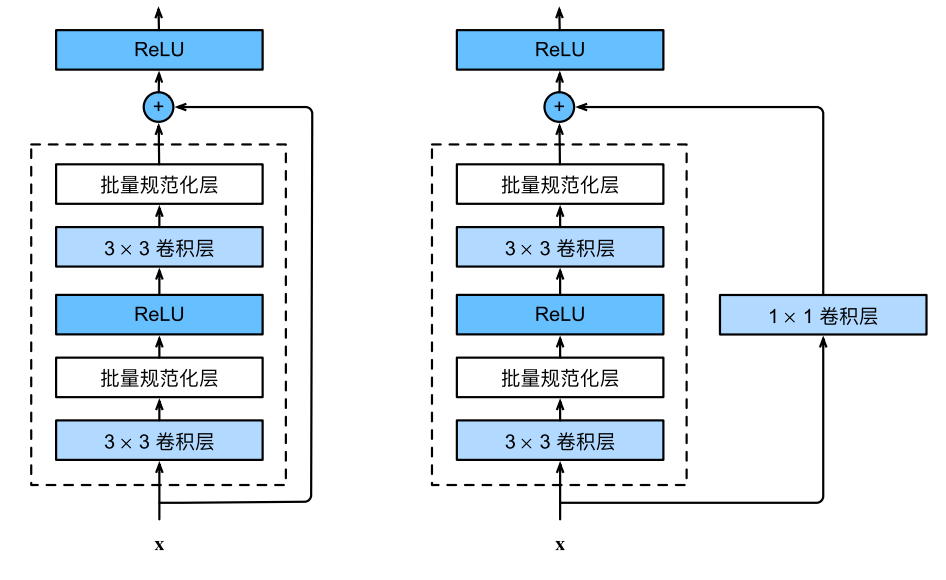

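1. Training needs fewer resources.

2. After the subtraction, the residual has a smaller magnitude, so the model can concentrate on local variations or the overall outline and fit them better.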
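The magnitude argument in point 2 can be illustrated with a toy one-dimensional example. This is only a sketch: the identity trend (standing in for the red line, and for what a shortcut passes through unchanged) and the wiggle `0.1 * sin(5x)` are made-up choices for illustration, not values read from the figure.

```python
import math

def trend(x):
    # Hypothetical "red line": the identity mapping carried by the shortcut.
    return x

def target(x):
    # Hypothetical training target H(x): the trend plus a small local wiggle.
    return trend(x) + 0.1 * math.sin(5.0 * x)

xs = [i / 10 for i in range(101)]                 # sample points in [0, 10]
full = [target(x) for x in xs]                    # what a plain layer has to fit
residual = [target(x) - trend(x) for x in xs]     # F(x) = H(x) - x, what a residual branch has to fit

max_full = max(abs(v) for v in full)
max_residual = max(abs(v) for v in residual)
print(f"max |H(x)| = {max_full:.3f}")
print(f"max |F(x)| = {max_residual:.3f}")
```

In this toy setting the residual stays within roughly 0.1 of zero while the full target grows with x, so the branch only has to model the small wiggle.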

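Tip: if the block needs to change its output shape (channel count or spatial size), add a 1x1 convolution on the shortcut so that x matches the main branch before the addition.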
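The shape issue behind this tip can be checked directly. The sketch below assumes an input of shape (4, 3, 6, 6) and a main branch that doubles the channels and halves the spatial size; the variable names `main` and `shortcut` are illustrative only.

```python
import torch
from torch import nn

x = torch.rand(4, 3, 6, 6)  # (batch, channels, height, width)

# Main branch: changes channels 3 -> 6 and halves height/width via stride=2.
main = nn.Conv2d(3, 6, kernel_size=3, padding=1, stride=2)
# Shortcut branch: a 1x1 convolution with the same out_channels and stride,
# so its output can be added to the main branch element-wise.
shortcut = nn.Conv2d(3, 6, kernel_size=1, stride=2)

y_main = main(x)
y_short = shortcut(x)
print(y_main.shape, y_short.shape)  # both torch.Size([4, 6, 3, 3])

out = y_main + y_short  # shapes match, so the sum is valid
# y_main + x would raise a RuntimeError: (4, 6, 3, 3) cannot broadcast with (4, 3, 6, 6).
```

The 1x1 convolution does not mix neighbouring pixels; it only re-projects the channels and, through its stride, subsamples the spatial grid.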

    import torch
    from torch import nn
    from torch.nn import functional as F


    class Residual(nn.Module):
        """Basic residual block: two 3x3 convolutions plus a shortcut connection."""

        def __init__(self, input_channels, num_channels, use_conv1x1=False, stride=1):
            super().__init__()
            # Only the first convolution applies the stride. If the second one
            # did too, the main branch would be downsampled twice and would no
            # longer match the shortcut when stride > 1.
            self.conv1 = nn.Conv2d(in_channels=input_channels, out_channels=num_channels,
                                   kernel_size=3, padding=1, stride=stride)
            self.conv2 = nn.Conv2d(in_channels=num_channels, out_channels=num_channels,
                                   kernel_size=3, padding=1)
            self.bn1 = nn.BatchNorm2d(num_channels)
            self.bn2 = nn.BatchNorm2d(num_channels)
            if use_conv1x1:
                # 1x1 convolution on the shortcut so x matches the main branch's shape.
                self.conv1x1 = nn.Conv2d(in_channels=input_channels, out_channels=num_channels,
                                         kernel_size=1, stride=stride)
            else:
                self.conv1x1 = None

        def forward(self, x):
            # 1: conv -> batch norm -> ReLU
            y = self.conv1(x)
            y = self.bn1(y)
            y = F.relu(y)
            # 2: conv -> batch norm (ReLU is applied after the addition)
            y = self.conv2(y)
            y = self.bn2(y)
            if self.conv1x1 is not None:
                x = self.conv1x1(x)
            y = y + x
            y = F.relu(y)
            return y


    x = torch.rand(4, 3, 6, 6)
    resd = Residual(3, 3, use_conv1x1=True, stride=1)
    y = resd(x)
    print(y.shape)  # torch.Size([4, 3, 6, 6])

Bottleneck block code

Reference code: https://blog.csdn.net/hxxjxw/article/details/106582884
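The notes only link to external code for the bottleneck block. As a rough sketch of the standard design (1x1 convolution to reduce channels, 3x3 convolution, 1x1 convolution to expand them again), something like the following could serve as a starting point. It is not taken from the linked post. The class name `Bottleneck`, the parameter `mid_channels`, the expansion factor of 4, and placing the stride on the 3x3 convolution are all assumptions made for illustration.

```python
import torch
from torch import nn
from torch.nn import functional as F


class Bottleneck(nn.Module):
    """Sketch of a bottleneck residual block: 1x1 reduce -> 3x3 -> 1x1 expand."""

    expansion = 4  # assumption: output channels = mid_channels * 4

    def __init__(self, in_channels, mid_channels, stride=1):
        super().__init__()
        out_channels = mid_channels * self.expansion
        # 1x1 convolution reduces the channel count before the costly 3x3.
        self.conv1 = nn.Conv2d(in_channels, mid_channels, kernel_size=1, bias=False)
        self.bn1 = nn.BatchNorm2d(mid_channels)
        # 3x3 convolution; any downsampling happens here (assumed placement).
        self.conv2 = nn.Conv2d(mid_channels, mid_channels, kernel_size=3,
                               padding=1, stride=stride, bias=False)
        self.bn2 = nn.BatchNorm2d(mid_channels)
        # 1x1 convolution expands the channels back out.
        self.conv3 = nn.Conv2d(mid_channels, out_channels, kernel_size=1, bias=False)
        self.bn3 = nn.BatchNorm2d(out_channels)
        if stride != 1 or in_channels != out_channels:
            # Projection shortcut so x matches the main branch's shape.
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channels, out_channels, kernel_size=1,
                          stride=stride, bias=False),
                nn.BatchNorm2d(out_channels),
            )
        else:
            self.shortcut = nn.Identity()

    def forward(self, x):
        y = F.relu(self.bn1(self.conv1(x)))
        y = F.relu(self.bn2(self.conv2(y)))
        y = self.bn3(self.conv3(y))
        return F.relu(y + self.shortcut(x))


x = torch.rand(2, 64, 8, 8)
block = Bottleneck(in_channels=64, mid_channels=16, stride=2)
print(block(x).shape)  # torch.Size([2, 64, 4, 4])
```

With `stride=1` and `in_channels` equal to `mid_channels * 4`, the shortcut falls back to the identity and the block preserves the input shape.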