Installing Spark on CentOS

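(Note: I made a mistake during my first installation and had to reinstall, so the screenshots come from the first install and the commands from the second.)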

(Note: the screenshots were taken during my own installation. I followed this guide: https://www.cnblogs.com/shaosks/p/9242536.html)

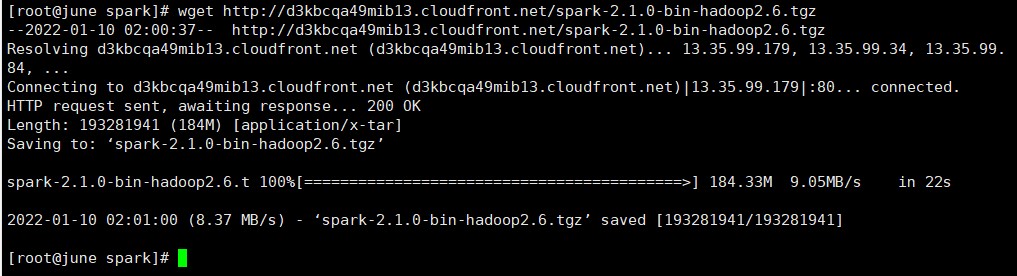

1. Download the Scala archive

Command: wget https://downloads.lightbend.com/scala/2.11.8/scala-2.11.8.tgz

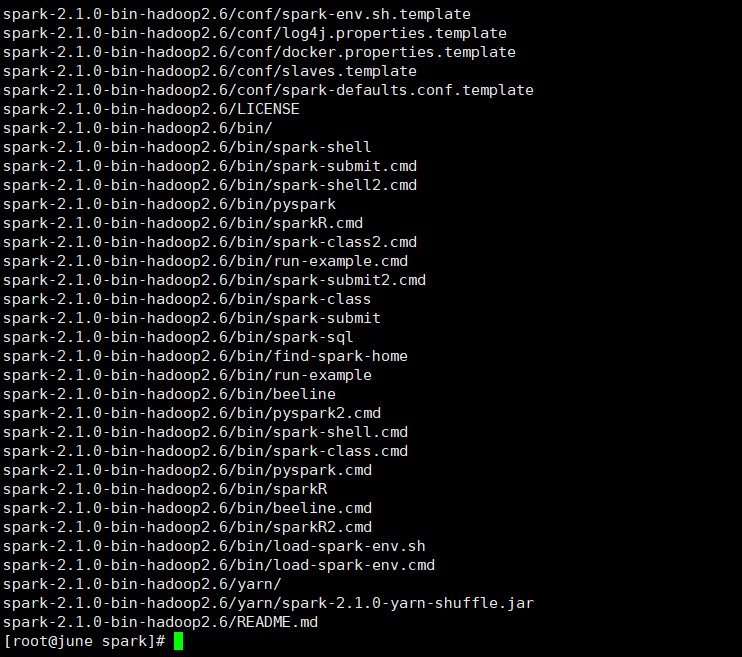

2. Extract the archive

Command: tar -xzvf scala-2.11.8.tgz

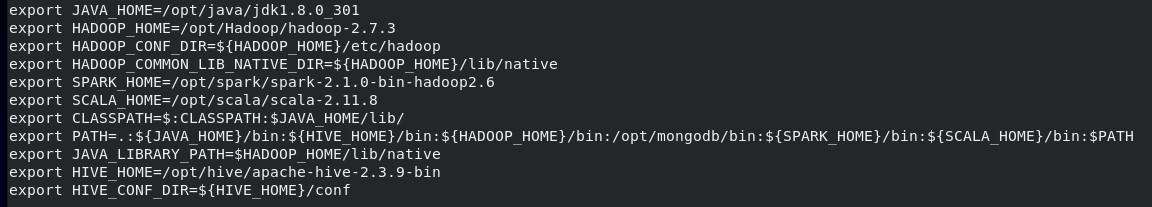
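The command above extracts into the current directory. If you would rather extract straight into an install directory and catch a truncated download first, here is a minimal sketch. The helper name `extract_to` and the default target `/usr/local` are hypothetical choices, not part of the original guide.

```shell
# Hypothetical helper: extract a .tgz into an install directory and print
# the top-level directory it created (for example scala-2.11.8).
extract_to() {
  local archive="$1"
  local dest="${2:-/usr/local}"   # assumed install location; adjust as needed
  local top
  # Listing the whole archive first fails on a truncated or corrupt download
  tar -tzf "$archive" > /dev/null || return 1
  # The first entry's leading path component is the archive's top-level dir
  top=$(tar -tzf "$archive" | head -n 1 | cut -d/ -f1)
  mkdir -p "$dest"
  tar -xzf "$archive" -C "$dest"
  echo "$dest/$top"
}
```

Usage: `extract_to scala-2.11.8.tgz /usr/local` (writing to `/usr/local` needs root) prints the directory you can then use as `SCALA_HOME`.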

3. Configure the environment

The environment configuration is shown below; copy only the entries you need:

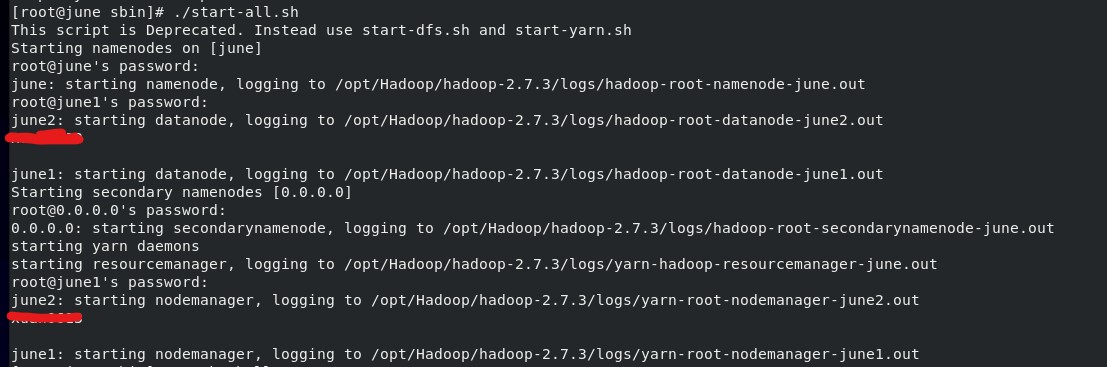
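The actual variables are only visible in the screenshot. As a minimal sketch, assuming you want `SCALA_HOME` and `SPARK_HOME` exported and their `bin` directories on `PATH`, the entries can be appended to a profile file like this. The function name `append_spark_env` and both default install paths are hypothetical; replace them with wherever you extracted the archives.

```shell
# Hypothetical helper: append Scala/Spark environment variables to a profile
# file once. Paths are assumptions; pass your real install directories.
append_spark_env() {
  local profile_file="$1"
  local scala_home="${2:-/usr/local/scala-2.11.8}"   # hypothetical path
  local spark_home="${3:-/usr/local/spark}"          # hypothetical path
  # A marker line keeps re-runs from duplicating the block
  if ! grep -q '# >>> spark env >>>' "$profile_file" 2>/dev/null; then
    {
      echo '# >>> spark env >>>'
      echo "export SCALA_HOME=$scala_home"
      echo "export SPARK_HOME=$spark_home"
      # Single quotes keep $PATH etc. unexpanded until the profile is sourced
      echo 'export PATH=$PATH:$SCALA_HOME/bin:$SPARK_HOME/bin'
      echo '# <<< spark env <<<'
    } >> "$profile_file"
  fi
}
```

Usage: `append_spark_env ~/.bashrc /usr/local/scala-2.11.8 /usr/local/spark`, then `source ~/.bashrc` and check with `scala -version`. Writing to `/etc/profile` instead applies to all users but needs root.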

4. Start Hadoop

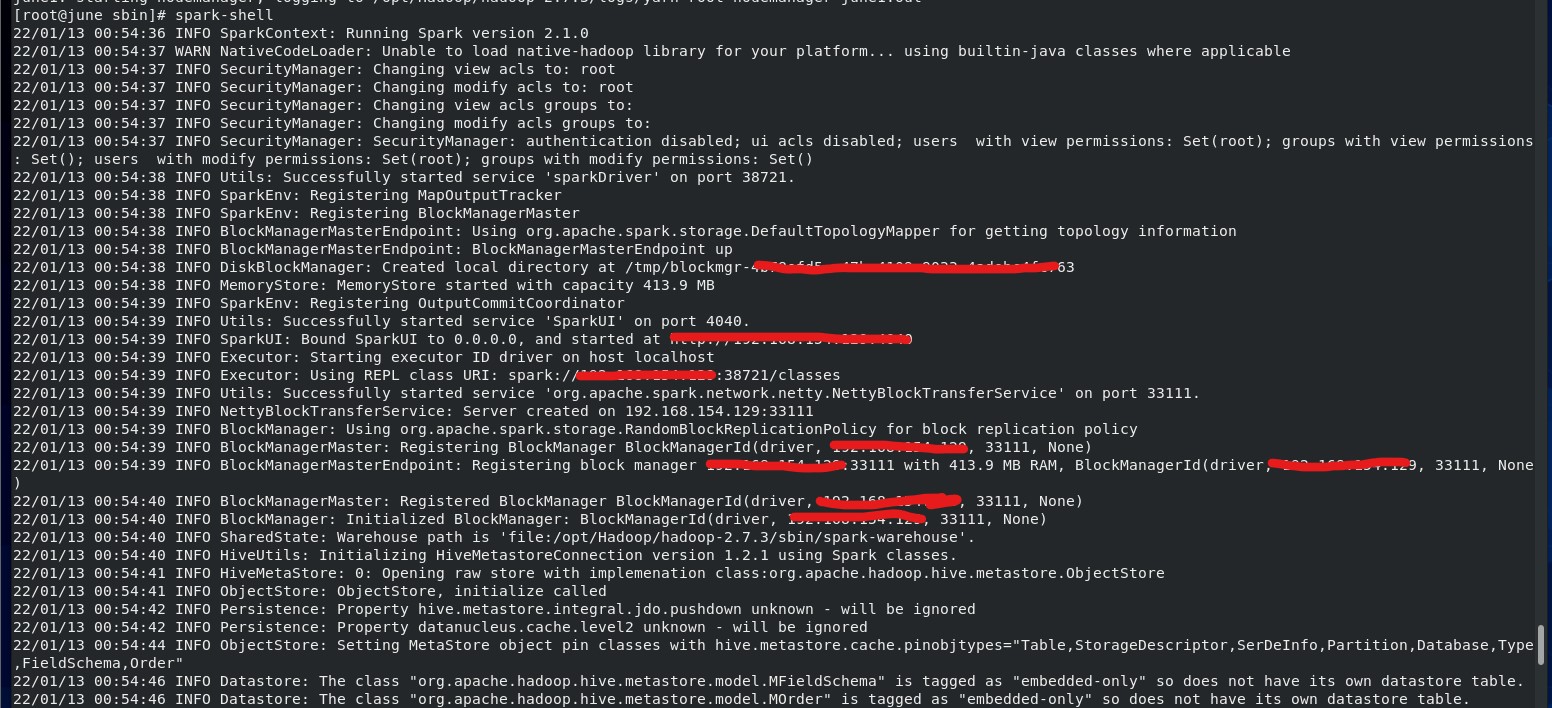

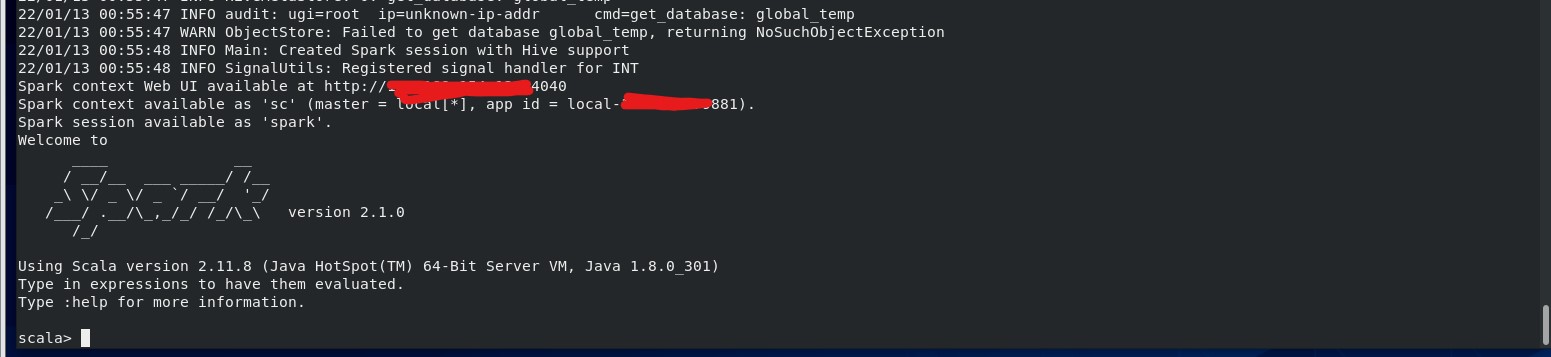
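Step 6 below shows the error you get when Hadoop is not running, so it helps to confirm the daemons are up before launching Spark. A minimal sketch, assuming Hadoop's `sbin` directory is on `PATH` (so `start-dfs.sh` and `start-yarn.sh` work) and a single-node HDFS + YARN setup; the expected daemon list and the function name `missing_hadoop_daemons` are assumptions, not taken from the screenshots.

```shell
# Hypothetical helper: reads `jps` output on stdin and prints every expected
# Hadoop daemon that is NOT running, one per line. Empty output = all up.
missing_hadoop_daemons() {
  # Assumed daemon set for a single-node HDFS + YARN installation
  local expected="NameNode DataNode SecondaryNameNode ResourceManager NodeManager"
  local running d
  # jps prints "<pid> <MainClass>"; keep only the class names
  running=$(awk '{print $2}')
  for d in $expected; do
    # -x requires a whole-line match, so NameNode != SecondaryNameNode
    if ! printf '%s\n' "$running" | grep -qx "$d"; then
      echo "$d"
    fi
  done
}
```

Usage on the server: run `start-dfs.sh && start-yarn.sh`, then `jps | missing_hadoop_daemons`; any name it prints is a daemon that failed to start.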

5. Start Spark

Command (no spaces!! No spaces!!! No spaces!!! I spent ages digging through bash errors before I noticed...): spark-shell

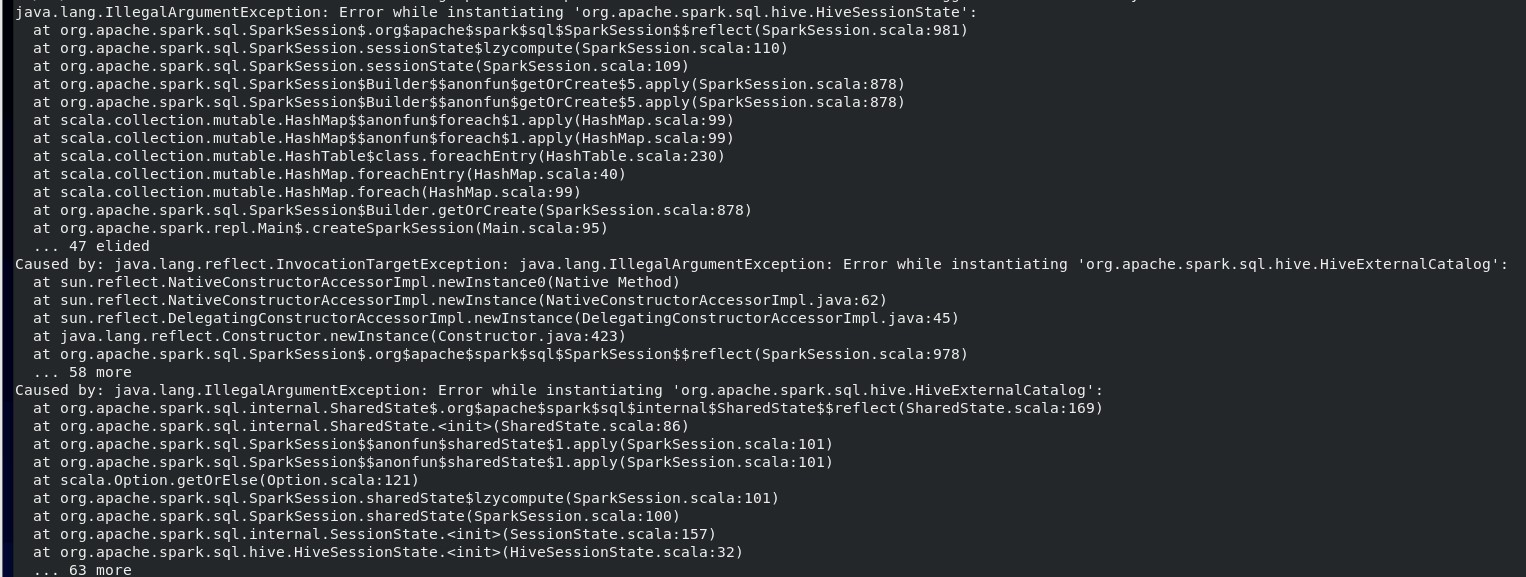
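Because `spark-shell` is a single word, a stray space or a missing `PATH` entry turns into a bash "command not found" error. A minimal pre-flight sketch, assuming `$SPARK_HOME/bin` was added to `PATH` in step 3; the function name `find_spark_shell` is hypothetical.

```shell
# Hypothetical pre-flight check: confirm that `spark-shell` (one word, no
# spaces) resolves to an executable on PATH before launching it.
find_spark_shell() {
  local sh_path
  # `command -v` prints the resolved path, or fails if nothing is found
  if ! sh_path=$(command -v spark-shell); then
    echo "spark-shell not found on PATH; check that \$SPARK_HOME/bin is on PATH and the profile was sourced" >&2
    return 1
  fi
  echo "$sh_path"
}
```

If `find_spark_shell` prints a path, `spark-shell` itself should start; if it fails, revisit the environment configuration rather than the Spark install.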

6. Startup errors

(1) Error when Hadoop has not been started:

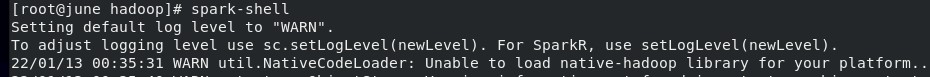

(2) Error before log4j.properties was modified:

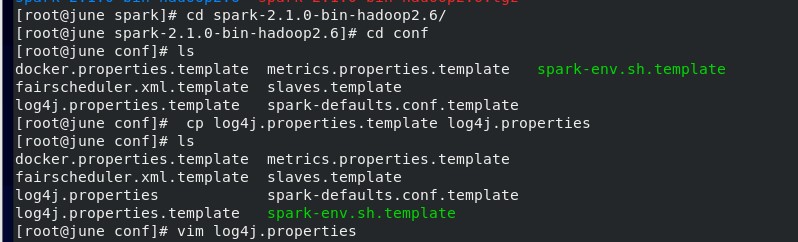

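Modifying log4j.properties:

Go into the conf directory and run the following, as shown in the screenshot:

Find the spot shown in the screenshot below and edit it; after editing it looks like this: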
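The exact edit is only in the author's screenshots. A common change for Spark 2.x is to create `conf/log4j.properties` from `conf/log4j.properties.template` and lower the line `log4j.rootCategory=INFO, console`; the sketch below assumes that is the edit, and the function name `configure_spark_log4j` is hypothetical. Newer Spark releases (3.3 and later) ship a `log4j2.properties.template` with different syntax, so this does not apply there as written.

```shell
# Hypothetical helper: create log4j.properties from Spark's template and set
# the root log level. Assumes a Spark 2.x-style template containing
# "log4j.rootCategory=INFO, console".
configure_spark_log4j() {
  local conf_dir="$1"
  local level="${2:-WARN}"
  # Copy the template only if log4j.properties does not exist yet
  if [ ! -f "$conf_dir/log4j.properties" ]; then
    cp "$conf_dir/log4j.properties.template" "$conf_dir/log4j.properties"
  fi
  # Replace only the level on the rootCategory line, keeping the appender list
  sed -i "s/^log4j\.rootCategory=[A-Z]*,/log4j.rootCategory=$level,/" "$conf_dir/log4j.properties"
}
```

Usage: `configure_spark_log4j "$SPARK_HOME/conf"` (GNU `sed -i`, as on CentOS), then start `spark-shell` again.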


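Author: 往心.

Post link: https://www.cnblogs.com/lx06/p/15793593.html

About the blogger: I reply to comments and private messages as soon as I can, or just message me directly.

Copyright notice: please credit the source when reposting from this blog.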
