基于kubernetes平台微服务的部署

基于kubernetes平台微服务的部署

首先下载插件:

kubernetes Continuous Deploy

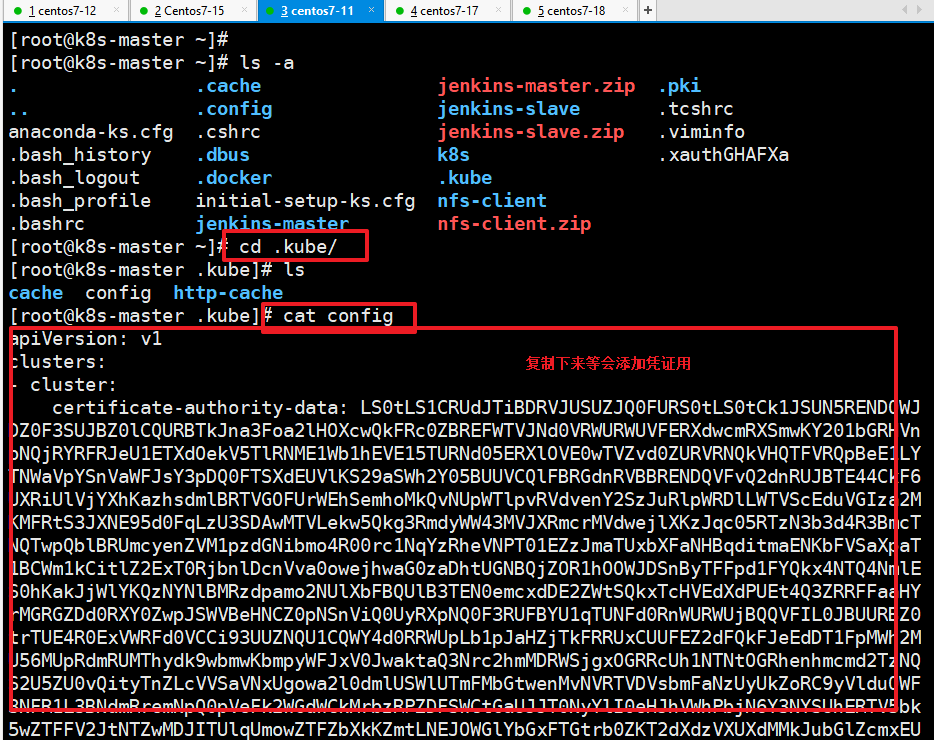

然后去找 .kube/ 里的config 复制里面的内容

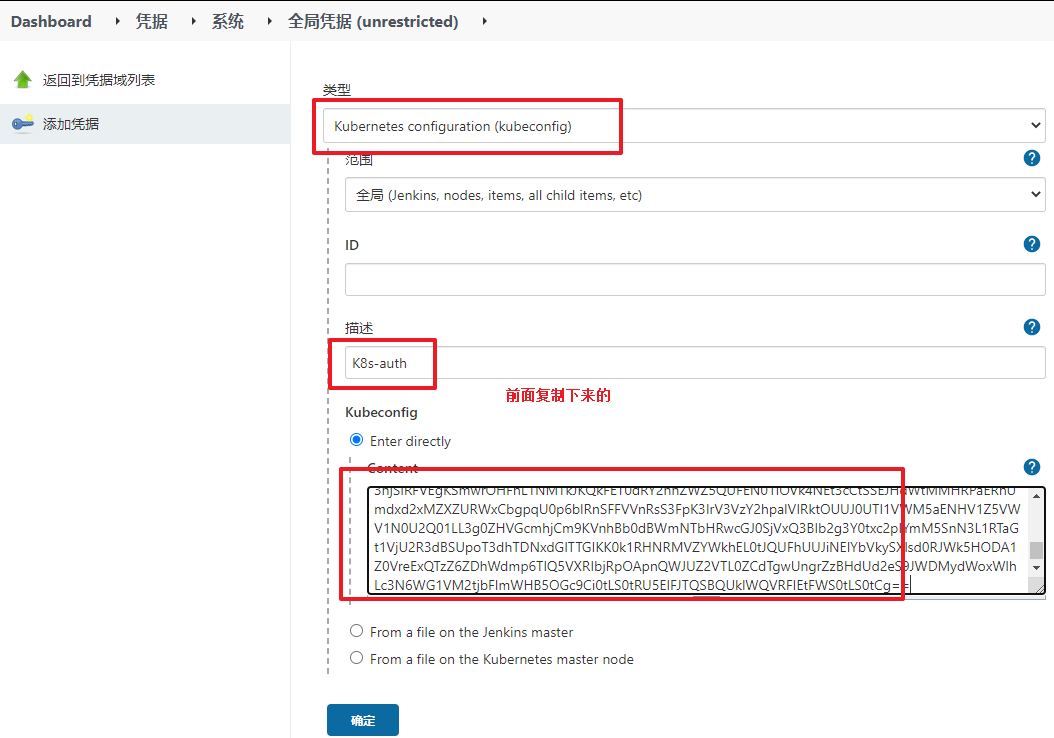

去添加凭据:

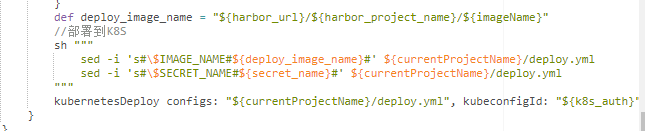

然后就是脚本更新:

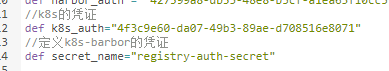

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 | def git_address = "http://20.0.0.20:82/root/tensquare_back.git"def git_auth = "904eff5d-41c8-44ad-ba24-7f539a0edb96"//构建版本的名称def tag = "latest"//Harbor私服地址def harbor_url = "20.0.0.50:85"//Harbor的项目名称def harbor_project_name = "tensquare"//Harbor的凭证def harbor_auth = "427399a8-db35-48e8-b5cf-a1ea63f10cc5"//k8s的凭证def k8s_auth="4f3c9e60-da07-49b3-89ae-d708516e8071"//定义k8s-barbor的凭证def secret_name="registry-auth-secret"podTemplate(label: 'jenkins-slave', cloud: 'kubernetes', containers: [ containerTemplate( name: 'jnlp', image: "20.0.0.50:85/library/jenkins-slave-maven:latest" ), containerTemplate( name: 'docker', image: "docker:stable", ttyEnabled: true, command: 'cat' ), ], volumes: [ hostPathVolume(mountPath: '/var/run/docker.sock', hostPath: '/var/run/docker.sock'), nfsVolume(mountPath: '/usr/local/apache-maven/repo', serverAddress: '20.0.0.10' , serverPath: '/opt/nfs/maven'), ],){node("jenkins-slave"){ // 第一步 stage('pull code'){ checkout([$class: 'GitSCM', branches: [[name: '*/master']], extensions: [], userRemoteConfigs: [[credentialsId: "${git_auth}", url: "${git_address}"]]]) } // 第二步 stage('make public sub project'){ //编译并安装公共工程 sh "mvn -f tensquare_common clean install" } // 第三步 stage('make image'){ //把选择的项目信息转为数组 def selectedProjects = "${project_name}".split(',') for(int i=0;i<selectedProjects.size();i++){ //取出每个项目的名称和端口 def currentProject = selectedProjects[i]; //项目名称 def currentProjectName = currentProject.split('@')[0] //项目启动端口 def currentProjectPort = currentProject.split('@')[1] //定义镜像名称 def imageName = "${currentProjectName}:${tag}" //编译,构建本地镜像 sh "mvn -f ${currentProjectName} clean package dockerfile:build" container('docker') { //给镜像打标签 sh "docker tag ${imageName} ${harbor_url}/${harbor_project_name}/${imageName}" //登录Harbor,并上传镜像 withCredentials([usernamePassword(credentialsId: "${harbor_auth}", passwordVariable: 'password', usernameVariable: 'username')]) { //登录 sh "docker login -u ${username} -p ${password} ${harbor_url}" //上传镜像 sh "docker push ${harbor_url}/${harbor_project_name}/${imageName}" } //删除本地镜像 sh "docker rmi -f ${imageName}" sh "docker rmi -f ${harbor_url}/${harbor_project_name}/${imageName}" } def deploy_image_name = "${harbor_url}/${harbor_project_name}/${imageName}" //部署到K8S sh """ sed -i 's#\$IMAGE_NAME#${deploy_image_name}#' ${currentProjectName}/deploy.yml sed -i 's#\$SECRET_NAME#${secret_name}#' ${currentProjectName}/deploy.yml """ kubernetesDeploy configs: "${currentProjectName}/deploy.yml", kubeconfigId: "${k8s_auth}" } }}} |

要更改的就是:

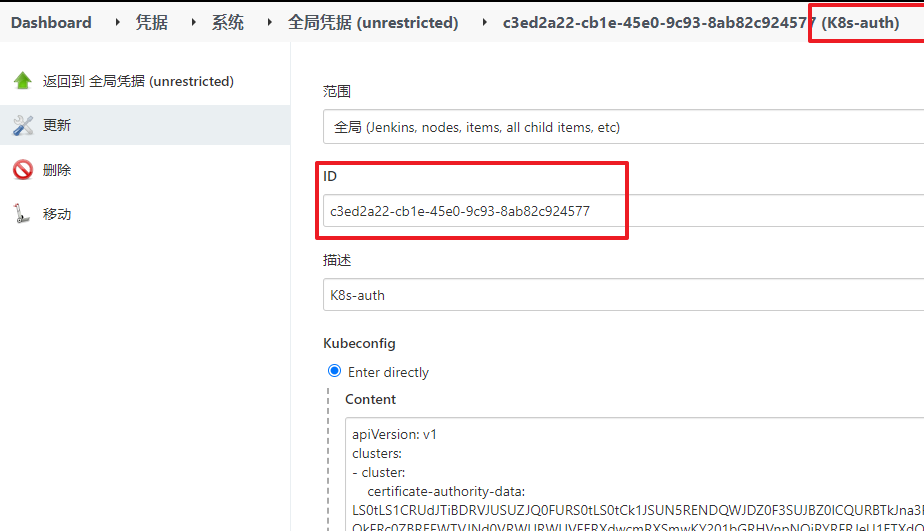

添加k8s的凭证:

然后在eureka 目录下创建deploy文件:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 | metadata: name: eurekaspec: serviceName: "eureka" replicas: 2 selector: matchLabels: app: eureka template: metadata: labels: app: eureka spec: imagePullSecrets: - name: $SECRET_NAME containers: - name: eureka image: $IMAGE_NAME ports: - containerPort: 10086 env: - name: MY_POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: EUREKA_SERVER value: "http://eureka-0.eureka:10086/eureka/,http://eureka- 1.eureka:10086/eureka/" - name: EUREKA_INSTANCE_HOSTNAME value: ${MY_POD_NAME}.eureka podManagementPolicy: "Parallel" |

里面的application.yml配置文件更改如下:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 | server: port: ${PORT:10086}spring: application: name: eurekaeureka: server: # 续期时间,即扫描失效服务的间隔时间(缺省为60*1000ms) eviction-interval-timer-in-ms: 5000 enable-self-preservation: false use-read-only-response-cache: false client: # eureka client间隔多久去拉取服务注册信息 默认30s registry-fetch-interval-seconds: 5 serviceUrl: defaultZone: ${EUREKA_SERVER:http://127.0.0.1:${server.port}/eureka/} instance: # 心跳间隔时间,即发送一次心跳之后,多久在发起下一次(缺省为30s) lease-renewal-interval-in-seconds: 5 # 在收到一次心跳之后,等待下一次心跳的空档时间,大于心跳间隔即可,即服务续约到期时间(缺省为90s) lease-expiration-duration-in-seconds: 10 instance-id: ${EUREKA_INSTANCE_HOSTNAME:${spring.application.name}}:${server.port}@${random.l ong(1000000,9999999)} hostname: ${EUREKA_INSTANCE_HOSTNAME:${spring.application.name}} |

然后在提交前在k8s所有主机上操作

1 | docker login -u lvbu -p Lvbu1234 20.0.0.50:85 |

1 | kubectl create secret docker-registry registry-auth-secret --docker-server=20.0.0.50:85 --docker-username=lvbu --docker-password=Lvbu1234 -- docker-email=lbu@qq.com |

然后去提交之前的修改的配置

提交完之后就可以构建了!

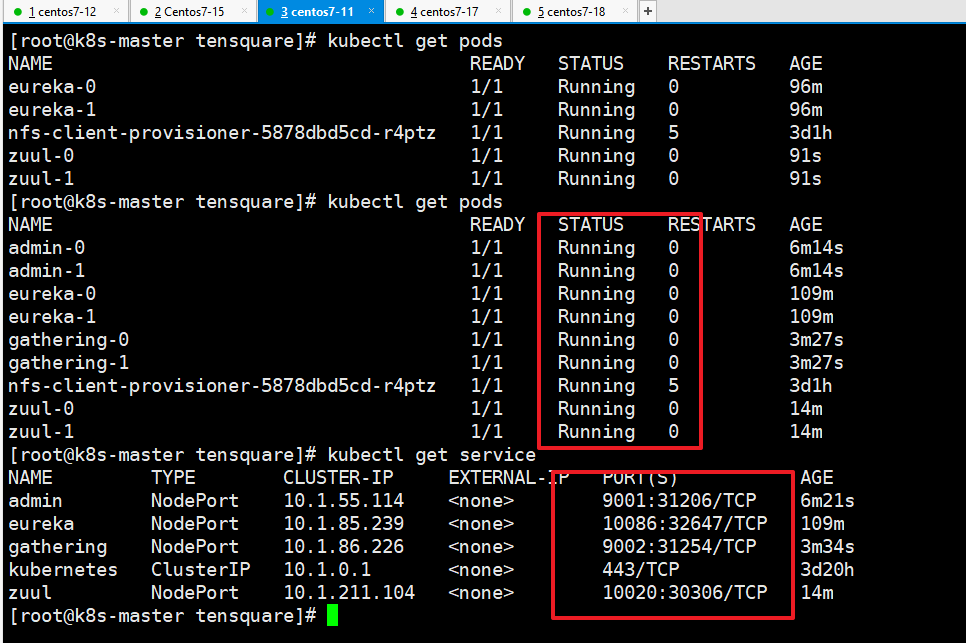

然后去查看:

1 | kubectl get secrets |

1 | kubectl get pods |

1 | kubectl get servicef 查看能看到端口 访问node节点的端口 就会发现注册中心有了 |

然后部署服务网关:

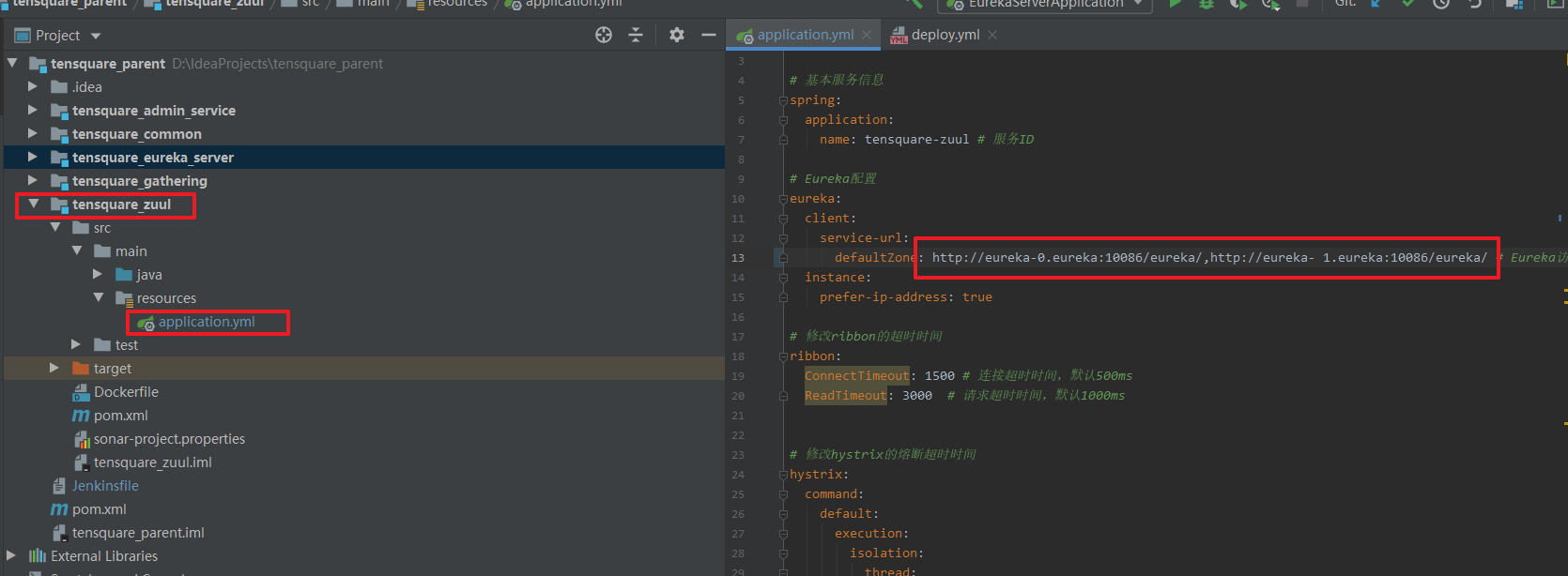

同理更改配置文件中的eureka集群地址:

1 | http://eureka-0.eureka:10086/eureka/,http://eureka- 1.eureka:10086/eureka/ |

所有的都要更改!

然后一样的操作在网关低下创建deploy.yml文件:

内容如下:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 | ---apiVersion: v1kind: Servicemetadata: name: zuul labels: app: zuulspec: type: NodePort ports: - port: 10020 name: zuul targetPort: 10020 selector: app: zuul---apiVersion: apps/v1kind: StatefulSetmetadata: name: zuulspec: serviceName: "zuul" replicas: 2 selector: matchLabels: app: zuul template: metadata: labels: app: zuul spec: imagePullSecrets: - name: $SECRET_NAME containers: - name: zuul image: $IMAGE_NAME ports: - containerPort: 10020 podManagementPolicy: "Parallel" |

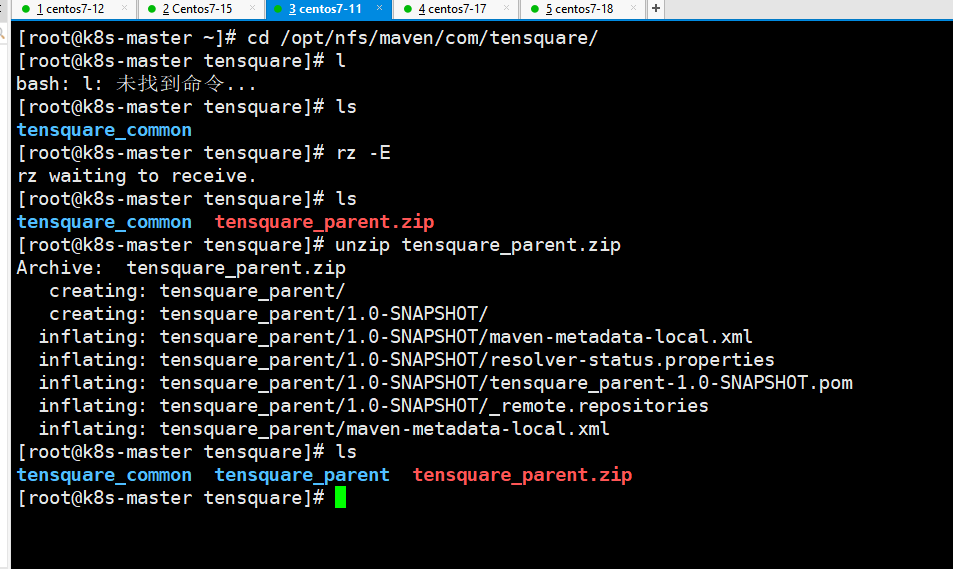

在提交前先去手动上传父工程依赖到NFS的maven共享仓库目录中:

然后在构建!

然后就是部署admin_service:

也是创建deploy.yml:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 | ---apiVersion: v1kind: Servicemetadata: name: admin labels: app: adminspec: type: NodePort ports: - port: 9001 name: admin targetPort: 9001 selector: app: admin---apiVersion: apps/v1kind: StatefulSetmetadata: name: adminspec: serviceName: "admin" replicas: 2 selector: matchLabels: app: admin template: metadata: labels: app: admin spec: imagePullSecrets: - name: $SECRET_NAME containers: - name: admin image: $IMAGE_NAME ports: - containerPort: 9001 podManagementPolicy: "Parallel" |

然后 集群地址也要更改!

接下来的部署gatjering 也是如此:

deploy.yml文件如下:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 | ---apiVersion: v1kind: Servicemetadata: name: gathering labels: app: gatheringspec: type: NodePort ports: - port: 9002 name: gathering targetPort: 9002 selector: app: gathering---apiVersion: apps/v1kind: StatefulSetmetadata: name: gatheringspec: serviceName: "gathering" replicas: 2 selector: matchLabels: app: gathering template: metadata: labels: app: gathering spec: imagePullSecrets: - name: $SECRET_NAME containers: - name: gathering image: $IMAGE_NAME ports: - containerPort: 9002 podManagementPolicy: "Parallel" |

也要修改集群地址eureka!

然后就可以一次性提交代码然后一次性构建!

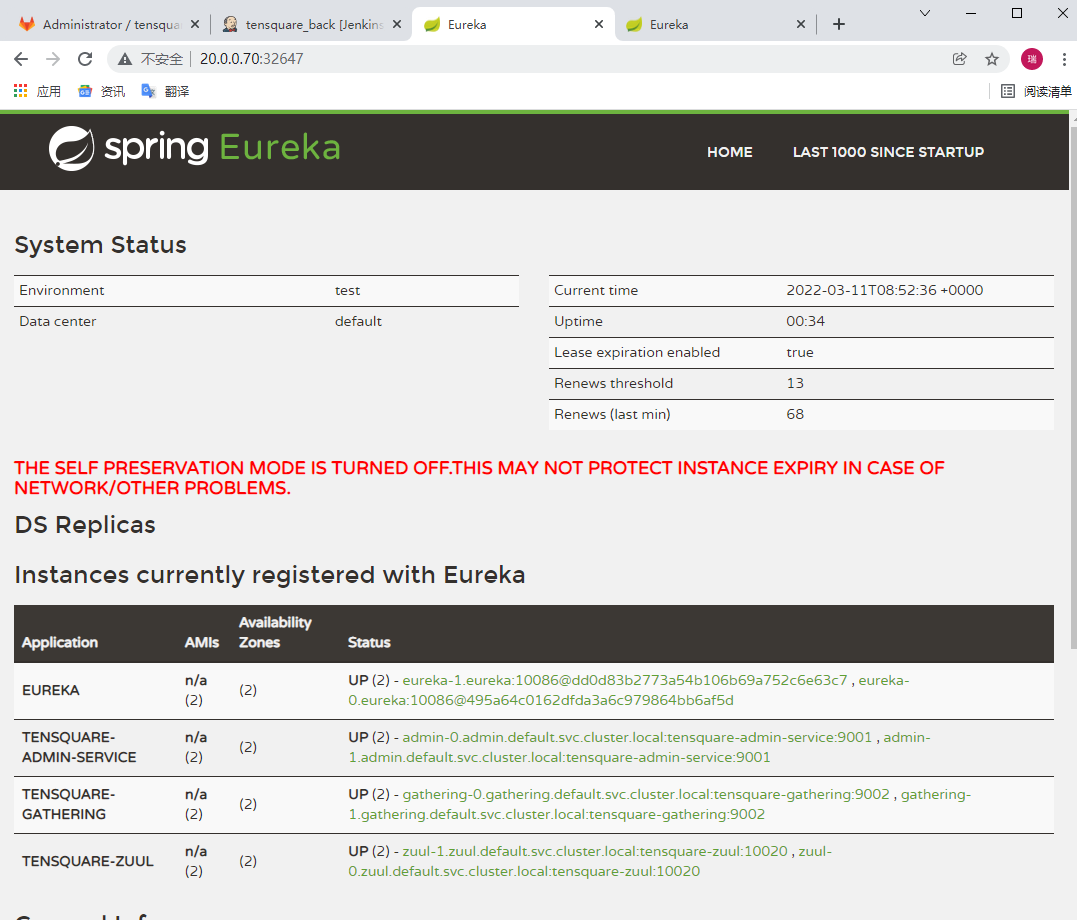

结果如下:

自古英雄多磨难

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 从 HTTP 原因短语缺失研究 HTTP/2 和 HTTP/3 的设计差异

· AI与.NET技术实操系列:向量存储与相似性搜索在 .NET 中的实现

· 基于Microsoft.Extensions.AI核心库实现RAG应用

· Linux系列:如何用heaptrack跟踪.NET程序的非托管内存泄露

· 开发者必知的日志记录最佳实践

· TypeScript + Deepseek 打造卜卦网站:技术与玄学的结合

· Manus的开源复刻OpenManus初探

· 写一个简单的SQL生成工具

· AI 智能体引爆开源社区「GitHub 热点速览」

· C#/.NET/.NET Core技术前沿周刊 | 第 29 期(2025年3.1-3.9)