Using Kafka

1. Topics

Create a topic:

kafka-topics.sh --create --zookeeper 192.168.217.4:2181,192.168.217.5:2181,192.168.217.6:2181 --replication-factor 1 --partitions 1 --topic demo_message

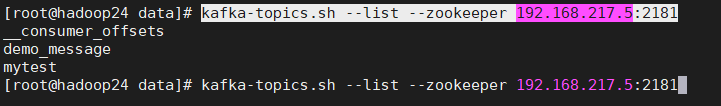

View topics through ZooKeeper

List the topics:

kafka-topics.sh --list --zookeeper 192.168.217.5:2181

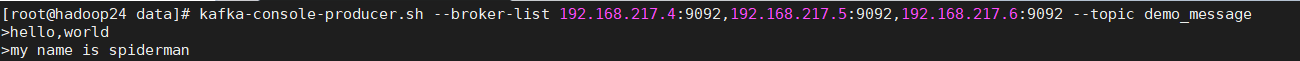

2. Producer

Start a console producer client:

kafka-console-producer.sh --broker-list 192.168.217.4:9092,192.168.217.5:9092,192.168.217.6:9092 --topic demo_message

3. Consumer

Start a console consumer client:

kafka-console-consumer.sh --bootstrap-server 192.168.217.4:9092,192.168.217.5:9092,192.168.217.6:9092 --topic demo_message --from-beginning

4. Demo

Maven dependency:

    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka-clients</artifactId>
        <version>0.10.2.0</version>
    </dependency>

Producer:

    package com.luyizhou.demo;

    import org.apache.kafka.clients.producer.KafkaProducer;
    import org.apache.kafka.clients.producer.ProducerConfig;
    import org.apache.kafka.clients.producer.ProducerRecord;
    import org.apache.kafka.common.serialization.StringSerializer;

    import java.util.Properties;
    import java.util.Random;

    public class KafkaProducerDemo {

        private static final String TOPIC = "demo_message";

        public static void main(String[] args) {
            try {
                produce();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // restore the interrupt flag
                e.printStackTrace();
            }
        }

        // Sends a random greeting to the topic every 500 ms until interrupted.
        public static void produce() throws InterruptedException {
            Properties properties = new Properties();
            properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.217.4:9092,192.168.217.5:9092,192.168.217.6:9092");
            properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class);
            properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class);
            KafkaProducer<String, String> kafkaProducer = new KafkaProducer<>(properties);
            Random random = new Random(); // one generator reused across iterations
            try {
                while (true) {
                    String msg = "hello, " + random.nextInt(100);
                    ProducerRecord<String, String> record = new ProducerRecord<>(TOPIC, msg);
                    // send() is asynchronous: it queues the record and returns immediately,
                    // so the line printed below does not confirm delivery to the broker.
                    kafkaProducer.send(record);
                    System.out.println("Message sent: " + msg);
                    Thread.sleep(500);
                }
            } finally {
                // Flushes any buffered records and releases the producer's resources.
                kafkaProducer.close();
            }
        }
    }
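The producer above hardcodes its settings through the `ProducerConfig` constants. Those constants resolve to plain string keys (`bootstrap.servers`, `key.serializer`, `value.serializer`), and the serializer settings also accept a fully qualified class name as a string. The following is a minimal sketch, using only the JDK, of building the same configuration from a list of broker hosts. The class `ProducerPropsSketch` and its helper methods are hypothetical names introduced for illustration and are not part of the Kafka client.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Properties;

// Hypothetical helper class; not part of kafka-clients.
final class ProducerPropsSketch {

    // Joins broker hosts into the comma-separated host:port list
    // expected by the "bootstrap.servers" setting.
    static String bootstrapServers(List<String> hosts, int port) {
        StringBuilder sb = new StringBuilder();
        for (String host : hosts) {
            if (sb.length() > 0) {
                sb.append(',');
            }
            sb.append(host).append(':').append(port);
        }
        return sb.toString();
    }

    // Builds the same three settings the demo producer uses,
    // keyed by their plain string names.
    static Properties producerProps(List<String> hosts, int port) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers(hosts, port));
        props.setProperty("key.serializer",
                "org.apache.kafka.common.serialization.StringSerializer");
        props.setProperty("value.serializer",
                "org.apache.kafka.common.serialization.StringSerializer");
        return props;
    }

    public static void main(String[] args) {
        // Broker addresses taken from the demo; adjust for your own cluster.
        Properties props = producerProps(
                Arrays.asList("192.168.217.4", "192.168.217.5", "192.168.217.6"), 9092);
        props.list(System.out);
    }
}
```

The resulting `Properties` object could be passed to the `KafkaProducer` constructor in place of the one built inline, which keeps the broker list in one place if several clients share it.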

Consumer:

    package com.luyizhou.demo;

    import org.apache.kafka.clients.consumer.ConsumerConfig;
    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.apache.kafka.clients.consumer.ConsumerRecords;
    import org.apache.kafka.clients.consumer.KafkaConsumer;
    import org.apache.kafka.common.serialization.StringDeserializer;

    import java.util.Collections;
    import java.util.Properties;

    public class KafkaConsumerDemo {

        public static void main(String[] args) {
            consumerDemo();
        }

        // Subscribes to the demo topic and prints every message value it receives.
        public static void consumerDemo() {
            Properties properties = new Properties();
            properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.217.4:9092,192.168.217.5:9092,192.168.217.6:9092");
            properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);
            properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);
            properties.put(ConsumerConfig.GROUP_ID_CONFIG, "demo_message");
            KafkaConsumer<String, String> consumer = new KafkaConsumer<>(properties);
            try {
                consumer.subscribe(Collections.singletonList("demo_message"));
                while (true) {
                    // poll(long) waits up to 100 ms for records (the kafka-clients 0.10.x signature).
                    ConsumerRecords<String, String> records = consumer.poll(100);
                    for (ConsumerRecord<String, String> record : records) {
                        System.out.println(record.value());
                        // System.out.println(String.format("topic:%s,offset:%d,message:%s", record.topic(), record.offset(), record.value()));
                    }
                }
            } finally {
                // Leaves the consumer group cleanly if the loop exits with an exception.
                consumer.close();
            }
        }
    }
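The console consumer in section 3 passes `--from-beginning`, whereas the Java consumer above leaves `auto.offset.reset` at its default of `latest`, so a consumer group with no committed offsets only sees messages produced after it starts. The sketch below, using only the JDK, shows one way to build consumer settings that read from the earliest available offset instead. The class `ConsumerPropsSketch`, its method, and the group id `demo_message_replay` are hypothetical names chosen for illustration. The string keys are the values behind the corresponding `ConsumerConfig` constants.

```java
import java.util.Properties;

// Hypothetical helper class; not part of kafka-clients.
final class ConsumerPropsSketch {

    // Builds consumer settings keyed by their plain string names.
    static Properties consumerProps(String bootstrapServers, String groupId, boolean fromBeginning) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        props.setProperty("group.id", groupId);
        props.setProperty("key.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        props.setProperty("value.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        // This setting only applies when the group has no committed offset
        // for a partition; an existing group resumes from its committed offset.
        props.setProperty("auto.offset.reset", fromBeginning ? "earliest" : "latest");
        return props;
    }

    public static void main(String[] args) {
        // A fresh (hypothetical) group id, so that no committed offsets exist yet.
        Properties props = consumerProps(
                "192.168.217.4:9092,192.168.217.5:9092,192.168.217.6:9092",
                "demo_message_replay",
                true);
        props.list(System.out);
    }
}
```

Passing these properties to the `KafkaConsumer` constructor should make a new group start from the oldest retained message, which is roughly what `--from-beginning` does for the console consumer.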