Exploring How to Generate Parquet Files Queryable by Both Impala and Hive: Partitions (Part 2)

1. It helps to read the first article first:

https://www.cnblogs.com/luxj/p/14144972.html

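2. Benefits of partitioning (for example, partitioning the table by year, month and day): a query that filters on the partition columns only has to read the matching partition directories instead of scanning the whole table.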
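To make that benefit concrete, here is a toy Java sketch of partition pruning. It is only an illustration, not how Hive or Impala implement it: each partition is a directory named after its year/month/day values, and a filter on those columns lets the engine choose directories without opening any data files. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Toy model of partition pruning (illustration only, not Hive/Impala code).
public class PartitionPruningDemo {

    // One partition directory of the table, e.g. year=2015/month=7/day=16.
    static final class Partition {
        final int year, month, day;

        Partition(int year, int month, int day) {
            this.year = year;
            this.month = month;
            this.day = day;
        }

        String dir() {
            return "year=" + year + "/month=" + month + "/day=" + day;
        }
    }

    // Returns the directories that satisfy "year = y AND month = m";
    // every other directory is skipped without being read.
    static List<String> prune(List<Partition> all, int y, int m) {
        List<String> selected = new ArrayList<String>();
        for (Partition p : all) {
            if (p.year == y && p.month == m) {
                selected.add(p.dir());
            }
        }
        return selected;
    }

    public static void main(String[] args) {
        List<Partition> all = Arrays.asList(
                new Partition(2015, 7, 15),
                new Partition(2015, 7, 16),
                new Partition(2015, 8, 1));
        // Prints [year=2015/month=7/day=15, year=2015/month=7/day=16]
        System.out.println(prune(all, 2015, 7));
    }
}
```

A query on `year=2015 and month=7` would therefore read two of the three directories in this example.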

3. Create the table as in the first article; the only difference is the partition clause:

```sql
PARTITIONED BY (year INT, month INT, day INT)
```

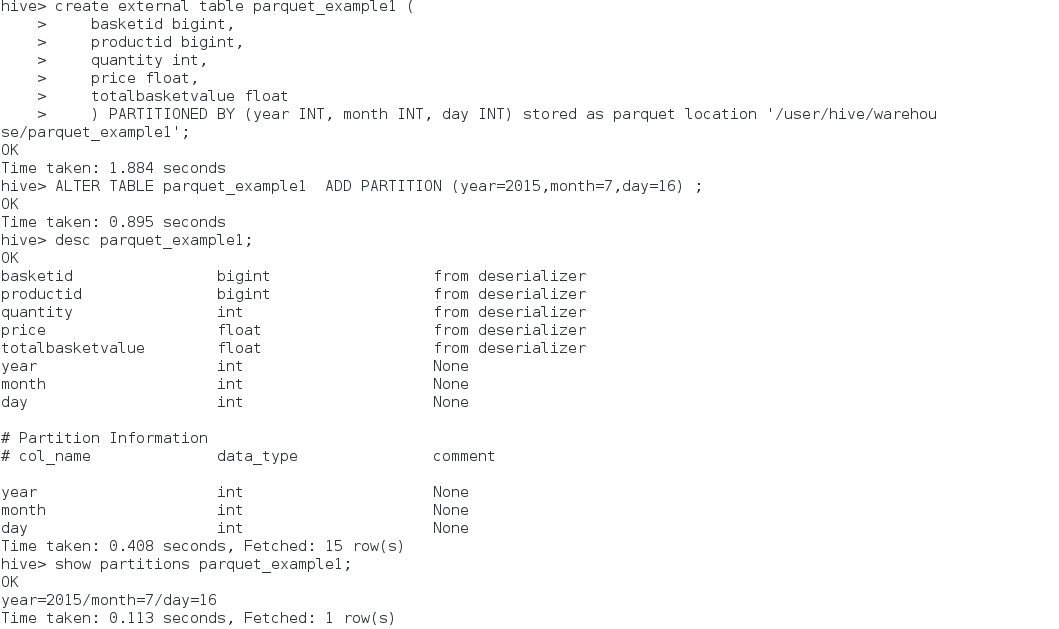

```sql
create external table parquet_example1 (
  basketid bigint,
  productid bigint,
  quantity int,
  price float,
  totalbasketvalue float
)
PARTITIONED BY (year INT, month INT, day INT)
stored as parquet
location '/user/hive/warehouse/parquet_example1';
```
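The Parquet files written later must carry a schema that matches the table's regular columns. The sketch below uses hypothetical helper names and maps only the three Hive types this table uses (bigint to int64, int to int32, float to float). It derives that schema string from the column list. Note that year, month and day are deliberately left out: partition column values come from the directory path, not from the Parquet files.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: builds the Parquet message schema for the
// non-partition columns of parquet_example1.
public class HiveToParquetSchema {

    // Only the Hive types used by this table are mapped.
    static String parquetType(String hiveType) {
        switch (hiveType) {
            case "bigint": return "int64";
            case "int":    return "int32";
            case "float":  return "float";
            default: throw new IllegalArgumentException("unmapped type: " + hiveType);
        }
    }

    // Partition columns (year, month, day) are not passed in: their values
    // live in the directory name, not inside the data file.
    static String messageSchema(String name, Map<String, String> columns) {
        StringBuilder sb = new StringBuilder("message " + name + " { ");
        for (Map.Entry<String, String> c : columns.entrySet()) {
            sb.append("required ").append(parquetType(c.getValue()))
              .append(' ').append(c.getKey()).append("; ");
        }
        return sb.append('}').toString();
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps the column order of the CREATE TABLE statement.
        Map<String, String> cols = new LinkedHashMap<String, String>();
        cols.put("basketid", "bigint");
        cols.put("productid", "bigint");
        cols.put("quantity", "int");
        cols.put("price", "float");
        cols.put("totalbasketvalue", "float");
        System.out.println(messageSchema("basket", cols));
    }
}
```

Running `main` prints the same schema string that the Java writer in step 6 passes to `MessageTypeParser`.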

4. Add a partition to the table

```sql
ALTER TABLE parquet_example1 ADD PARTITION (year=2015,month=7,day=16);
```
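When no LOCATION is given, Hive places a new partition under the table location using its `column=value` directory convention. Below is a small hypothetical helper that builds that path. It is the directory the Java writer in step 6 has to target so that Hive finds the files.

```java
// Hypothetical helper: builds the default HDFS directory of a partition,
// following Hive's key=value directory naming.
public class PartitionPath {

    static String partitionDir(String tableLocation, int year, int month, int day) {
        // Integers are written as-is (month=7, not month=07), matching
        // ADD PARTITION (year=2015,month=7,day=16).
        return tableLocation + "/year=" + year + "/month=" + month + "/day=" + day;
    }

    public static void main(String[] args) {
        // Prints /user/hive/warehouse/parquet_example1/year=2015/month=7/day=16
        System.out.println(partitionDir("/user/hive/warehouse/parquet_example1", 2015, 7, 16));
    }
}
```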

5. List the table's partitions

```sql
show partitions parquet_example1;
```
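In Hive, `show partitions` prints one line per partition in the same `year=2015/month=7/day=16` form. If you script against that output, a hypothetical parser like the one below turns each line into column/value pairs. It assumes values contain no `/` or `=`; Hive escapes such characters in directory names, and this sketch does not unescape them.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical parser for one line of Hive's SHOW PARTITIONS output.
public class PartitionSpecParser {

    static Map<String, String> parse(String spec) {
        Map<String, String> parts = new LinkedHashMap<String, String>();
        for (String kv : spec.split("/")) {
            int eq = kv.indexOf('=');
            if (eq < 0) {
                throw new IllegalArgumentException("not key=value: " + kv);
            }
            // Column name before '=', raw (still escaped) value after it.
            parts.put(kv.substring(0, eq), kv.substring(eq + 1));
        }
        return parts;
    }

    public static void main(String[] args) {
        // Prints {year=2015, month=7, day=16}
        System.out.println(parse("year=2015/month=7/day=16"));
    }
}
```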

6. The Java code (it writes a Parquet file directly into the partition directory):

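```java
import java.io.IOException;
import java.text.DateFormat;
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Random;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.hive.ql.io.parquet.write.DataWritableWriteSupport;
import org.apache.hadoop.io.ArrayWritable;
import org.apache.hadoop.io.FloatWritable;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Writable;

// Assumes Hive 0.13/0.14-era jars: pre-Apache "parquet.*" packages, and a
// DataWritableWriteSupport that writes ArrayWritable records.
import parquet.hadoop.ParquetWriter;
import parquet.hadoop.metadata.CompressionCodecName;
import parquet.schema.MessageType;
import parquet.schema.MessageTypeParser;

public class BasketParquetWriter {

    public static void main(String[] args) throws IOException {
        // "yyyy" = calendar year; "YYYY" (week year) is wrong in late December.
        DateFormat dateFormat = new SimpleDateFormat("yyyyMMddHHmmss");
        new BasketParquetWriter().generateBasketData("part_" + dateFormat.format(new Date()));
    }

    private void generateBasketData(String outFilePath) throws IOException {
        // Parquet schema of the non-partition columns.
        final MessageType schema = MessageTypeParser.parseMessageType(
                "message basket { required int64 basketid; required int64 productid; "
                + "required int32 quantity; required float price; required float totalbasketvalue; }");
        Configuration config = new Configuration();
        DataWritableWriteSupport.setSchema(schema, config);

        // Write straight into the year=2015/month=7/day=16 partition directory.
        Path outDirPath = new Path("hdfs://192.168.0.80/user/hive/warehouse/parquet_example1/year=2015/month=7/day=16/" + outFilePath);
        ParquetWriter<ArrayWritable> writer = new ParquetWriter<ArrayWritable>(outDirPath,
                new DataWritableWriteSupport() {
                    @Override
                    public WriteContext init(Configuration configuration) {
                        // The writer uses its own Configuration, so inject the schema here.
                        if (configuration.get(DataWritableWriteSupport.PARQUET_HIVE_SCHEMA) == null) {
                            configuration.set(DataWritableWriteSupport.PARQUET_HIVE_SCHEMA, schema.toString());
                        }
                        return super.init(configuration);
                    }
                },
                CompressionCodecName.SNAPPY, 256 * 1024 * 1024, 100 * 1024); // 256 MB blocks, 100 KB pages

        try {
            int numBaskets = 1000000;
            Random numProdsRandom = new Random();
            Random quantityRandom = new Random();
            Random priceRandom = new Random();
            Random prodRandom = new Random();
            for (int i = 0; i < numBaskets; i++) {
                // 7 to 29 products per basket; basket total in [10, 100).
                int numProdsInBasket = Math.max(7, numProdsRandom.nextInt(30));
                float totalPrice = (float) Math.max(0.1, priceRandom.nextFloat()) * 100;
                for (int j = 0; j < numProdsInBasket; j++) {
                    // One row, fields in schema order.
                    Writable[] values = new Writable[5];
                    values[0] = new LongWritable(i);
                    values[1] = new LongWritable(prodRandom.nextInt(200000));
                    values[2] = new IntWritable(quantityRandom.nextInt(10));
                    values[3] = new FloatWritable(priceRandom.nextFloat());
                    values[4] = new FloatWritable(totalPrice);
                    writer.write(new ArrayWritable(Writable.class, values));
                }
            }
        } finally {
            writer.close();
        }
    }
}
```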
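The timestamp in the file name is where a `YYYY` pattern goes wrong. In `SimpleDateFormat`, `Y` is the week year and `y` the calendar year; they differ for late-December dates that fall into week 1 of the next year. This self-contained check uses a fixed date, `Locale.US` week rules and UTC (all chosen here for reproducibility) to show the difference:

```java
import java.text.SimpleDateFormat;
import java.util.Calendar;
import java.util.Date;
import java.util.GregorianCalendar;
import java.util.Locale;
import java.util.TimeZone;

// Demonstrates "YYYY" (week year) vs "yyyy" (calendar year).
public class WeekYearPitfall {

    static String format(String pattern, Date d) {
        SimpleDateFormat f = new SimpleDateFormat(pattern, Locale.US);
        f.setTimeZone(TimeZone.getTimeZone("UTC"));
        return f.format(d);
    }

    public static void main(String[] args) {
        Calendar c = new GregorianCalendar(TimeZone.getTimeZone("UTC"), Locale.US);
        c.clear();
        c.set(2015, Calendar.DECEMBER, 28); // in the US week containing Jan 1, 2016
        Date d = c.getTime();
        System.out.println(format("YYYYMMdd", d)); // 20161228 (week year)
        System.out.println(format("yyyyMMdd", d)); // 20151228 (calendar year)
    }
}
```

With `YYYY`, a file written on 28 December 2015 would get a `part_2016...` name, which is why the writer uses `yyyy`.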

Steps 3 to 6, as run in Hive, are as follows:

7. The data can then be queried through the Hive interface.
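As a sanity check on a `count(*)` over the `year=2015, month=7, day=16` partition: each run of the generator writes 1,000,000 baskets, and each basket gets `max(7, r)` rows with `r` uniform on 0..29. So a basket has 7 rows with probability 8/30 (r = 0..7) and k rows with probability 1/30 for each k = 8..29. The expected number of rows per basket is

$$
E[n] = \frac{7 \cdot 7 + \sum_{k=7}^{29} k}{30} = \frac{49 + 414}{30} = \frac{463}{30} \approx 15.43
$$

so one run should add roughly 15.4 million rows, and always between 7 and 29 million. Each run writes a new `part_` file, so repeated runs add up.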

Source code:

https://github.com/wangxuehui/writeparquet/

Reposted from: https://my.oschina.net/skyim/blog/479252