News Classification with PyTorch and LSTM

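RNN (recurrent neural network): the features from the previous time step influence the next one, because the hidden state produced at step t-1 is kept and fed in together with the input at step t. LSTM is a variant of the RNN. Its gates let it filter out intermediate features that are not needed, which effectively mitigates the vanishing (and, to a lesser extent, exploding) gradient problems of a plain RNN.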
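
To make the filtering idea concrete, here is a minimal sketch of a single LSTM step on scalar values, following the standard gate equations. The function name `lstm_step` and the layout of the weight dictionary are illustrative assumptions, not part of this article's code; a real layer such as `nn.LSTM` uses weight matrices instead of scalars.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, w):
    """One LSTM time step on scalars.

    w maps each gate name ('i', 'f', 'g', 'o') to a hypothetical
    (w_x, w_h, b) triple of scalar weights.
    """
    def pre(gate):
        w_x, w_h, b = w[gate]
        return w_x * x + w_h * h_prev + b

    i = sigmoid(pre('i'))    # input gate: how much new information to write
    f = sigmoid(pre('f'))    # forget gate: how much of the old cell state to keep
    g = math.tanh(pre('g'))  # candidate values
    o = sigmoid(pre('o'))    # output gate: how much of the cell state to expose
    c = f * c_prev + i * g   # new cell state
    h = o * math.tanh(c)     # new hidden state
    return h, c


# Toy weights: a strongly positive forget bias and a strongly negative input
# bias, so the cell keeps its old state and ignores the new input.
weights = {'i': (0.0, 0.0, -20.0), 'f': (0.0, 0.0, 20.0),
           'g': (1.0, 1.0, 0.0), 'o': (0.0, 0.0, 20.0)}
h, c = lstm_step(x=5.0, h_prev=0.0, c_prev=0.7, w=weights)
print(round(c, 4), round(h, 4))
```

With these toy weights the cell state stays at about 0.7 even though the input is large, which is the "discard what is not needed" behaviour described above.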

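Steps:

- This article uses an LSTM network to classify news headlines into 10 categories. First, preprocess the data: split each headline into individual characters and convert them to index values using a vocabulary; these indices are later used to look up the corresponding vectors in the embedding file. Next, build a network made of an embedding layer, an LSTM layer and a fully connected layer. Finally, feed in the word vectors and train the network.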

1. LSTM implementation

1. Configuration parameters

import numpy as np
import torch


class Config(object):
    """Configuration parameters."""

    def __init__(self, dataset, embedding):
        self.model_name = 'TextRNN'
        self.train_path = dataset + '/data/train.txt'  # training set
        self.dev_path = dataset + '/data/dev.txt'  # validation set
        self.test_path = dataset + '/data/test.txt'  # test set
        with open(dataset + '/data/class.txt', encoding='UTF-8') as f:
            self.class_list = [x.strip() for x in f.readlines()]  # class names: 10 classes
        self.vocab_path = dataset + '/data/vocab.pkl'  # vocabulary: each token maps to an index
        self.save_path = dataset + '/saved_dict/' + self.model_name + '.ckpt'  # where the trained model is saved
        self.log_path = dataset + '/log/' + self.model_name
        # Pre-trained embeddings: loaded with NumPy and converted to a tensor
        self.embedding_pretrained = torch.tensor(
            np.load(dataset + '/data/' + embedding)["embeddings"].astype('float32'))\
            if embedding != 'random' else None
        self.device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')  # device
        self.dropout = 0.5  # dropout rate
        self.require_improvement = 1000  # stop early if there is no improvement for more than 1000 batches
        self.num_classes = len(self.class_list)  # number of classes: 10
        self.n_vocab = 0  # vocabulary size, assigned at run time
        self.num_epochs = 10  # number of epochs
        self.batch_size = 128  # mini-batch size
        self.pad_size = 32  # length every sentence is brought to (pad short ones, truncate long ones)
        self.learning_rate = 1e-3  # learning rate
        # Character-vector dimension: taken from the pre-trained embeddings if used, otherwise 300
        self.embed = self.embedding_pretrained.size(1)\
            if self.embedding_pretrained is not None else 300
        self.hidden_size = 128  # LSTM hidden size
        self.num_layers = 2  # number of LSTM layers

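2. Build the vocabulary: each character maps to an index

from tqdm import tqdm


# UNK and PAD (the special tokens) are module-level constants defined elsewhere (not shown here).
def build_vocab(file_path, tokenizer, max_size, min_freq):
    vocab_dic = {}
    with open(file_path, 'r', encoding='UTF-8') as f:
        for line in tqdm(f):
            lin = line.strip()
            if not lin:
                continue
            content = lin.split('\t')[0]  # the text is the first tab-separated field
            for word in tokenizer(content):
                vocab_dic[word] = vocab_dic.get(word, 0) + 1  # count occurrences
    # Keep tokens seen at least min_freq times, most frequent first, at most max_size of them
    vocab_list = sorted([_ for _ in vocab_dic.items() if _[1] >= min_freq], key=lambda x: x[1], reverse=True)[:max_size]
    vocab_dic = {word_count[0]: idx for idx, word_count in enumerate(vocab_list)}
    # Append the special tokens last, so PAD receives the highest index
    vocab_dic.update({UNK: len(vocab_dic), PAD: len(vocab_dic) + 1})
    return vocab_dic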
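
The counting and indexing logic of build_vocab can be tried on a few in-memory lines. The sketch below is a simplified variant that assumes the special tokens are the strings '<UNK>' and '<PAD>' (the article does not show their definitions) and uses a hypothetical helper name, `build_vocab_from_lines`, so that no file is needed.

```python
from collections import Counter

UNK, PAD = '<UNK>', '<PAD>'  # assumed special-token strings


def build_vocab_from_lines(lines, tokenizer, max_size, min_freq):
    """Same counting/sorting idea as build_vocab, but over in-memory lines."""
    counts = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue
        content = line.split('\t')[0]  # text before the tab, label after it
        counts.update(tokenizer(content))
    kept = [(tok, n) for tok, n in counts.items() if n >= min_freq]
    kept.sort(key=lambda pair: pair[1], reverse=True)  # most frequent first
    vocab = {tok: idx for idx, (tok, _) in enumerate(kept[:max_size])}
    vocab.update({UNK: len(vocab), PAD: len(vocab) + 1})
    return vocab


lines = ["abca\t0", "", "bcad\t1"]  # toy "text<TAB>label" records
vocab = build_vocab_from_lines(lines, tokenizer=list, max_size=10, min_freq=2)
print(vocab)  # {'a': 0, 'b': 1, 'c': 2, '<UNK>': 3, '<PAD>': 4}
```

The character 'd' appears only once, so min_freq=2 drops it; at lookup time it would map to the UNK index.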

import os
import pickle as pkl


# MAX_VOCAB_SIZE is a module-level constant defined elsewhere (not shown here).
def build_dataset(config, ues_word):
    if ues_word:
        tokenizer = lambda x: x.split(' ')  # split on spaces: word-level
    else:
        tokenizer = lambda x: [y for y in x]  # character-level
    if os.path.exists(config.vocab_path):
        # Reuse the saved character-level vocabulary (one index per character, 4762 entries)
        with open(config.vocab_path, 'rb') as f:
            vocab = pkl.load(f)
    else:
        vocab = build_vocab(config.train_path, tokenizer=tokenizer, max_size=MAX_VOCAB_SIZE, min_freq=1)
        with open(config.vocab_path, 'wb') as f:
            pkl.dump(vocab, f)
    print(f"Vocab size: {len(vocab)}")

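3. Convert characters to indices using the vocabulary

    # This code continues the body of build_dataset; tokenizer and vocab come from the enclosing scope.
    def load_dataset(path, pad_size=32):  # read one data file
        contents = []
        with open(path, 'r', encoding='UTF-8') as f:
            for line in tqdm(f):  # read one sentence per line
                lin = line.strip()
                if not lin:
                    continue
                content, label = lin.split('\t')  # split text and label on the tab
                words_line = []
                token = tokenizer(content)  # split into individual characters
                seq_len = len(token)  # length after splitting
                if pad_size:  # pad or truncate (enabled by default)
                    if len(token) < pad_size:  # shorter than 32
                        # Pad with the PAD token itself (not its index), so the lookup below maps it to PAD's index
                        token.extend([PAD] * (pad_size - len(token)))
                    else:
                        token = token[:pad_size]  # longer: truncate to 32
                        seq_len = pad_size
                # word to id
                for word in token:
                    # Look up each token's index, falling back to UNK (the indices are later used to fetch embedding vectors)
                    words_line.append(vocab.get(word, vocab.get(UNK)))
                contents.append((words_line, int(label), seq_len))  # everything is now an index
        return contents  # [([...], 0, seq_len), ([...], 1, seq_len), ...]

    train = load_dataset(config.train_path, config.pad_size)  # read the data set (configured path, maximum sentence length)
    dev = load_dataset(config.dev_path, config.pad_size)
    test = load_dataset(config.test_path, config.pad_size)
    return vocab, train, dev, test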
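
The pad-or-truncate step and the index lookup can be sketched on a single sample. This is a minimal illustration under assumptions: the special tokens are taken to be '<UNK>' and '<PAD>', the toy vocabulary is made up, and `encode` is a hypothetical helper name rather than a function from the article.

```python
UNK, PAD = '<UNK>', '<PAD>'  # assumed special-token strings


def encode(text, vocab, pad_size):
    """Return (indices, seq_len) for one character-level sample."""
    tokens = list(text)
    seq_len = min(len(tokens), pad_size)  # real length, capped at pad_size
    if len(tokens) < pad_size:
        tokens = tokens + [PAD] * (pad_size - len(tokens))  # pad short inputs
    else:
        tokens = tokens[:pad_size]  # truncate long inputs
    ids = [vocab.get(tok, vocab[UNK]) for tok in tokens]  # unknown chars -> UNK
    return ids, seq_len


toy_vocab = {'a': 0, 'b': 1, 'c': 2, UNK: 3, PAD: 4}
print(encode("abz", toy_vocab, pad_size=5))      # ([0, 1, 3, 4, 4], 3)
print(encode("abcabca", toy_vocab, pad_size=5))  # ([0, 1, 2, 0, 1], 5)
```

Padding with the token and only then mapping to indices keeps PAD and UNK distinct: 'z' becomes 3 (UNK) while the filler positions become 4 (PAD).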

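4. Load the embedding file

When the network is built in the next step, each index is looked up in the embedding file and replaced by the corresponding vector (typically 300-dimensional).

# Sogou News: embedding_SougouNews.npz, Tencent: embedding_Tencent.npz, random initialization: random
# args is assumed to hold the parsed command-line arguments (parsing not shown here)
embedding = 'embedding_SougouNews.npz'  # pre-trained embedding file with one vector per token (trained by others; similar tokens get similar vectors)
if args.embedding == 'random':
    embedding = 'random'
model_name = args.model  # TextCNN, TextRNN, ...
if model_name == 'FastText':
    from utils_fasttext import build_dataset, build_iterator, get_time_dif
    embedding = 'random'
else:
    from utils import build_dataset, build_iterator, get_time_dif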
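
The snippet above reads `args.model` and `args.embedding` without showing where `args` comes from. A plausible sketch, assuming the script uses `argparse` with `--model` and `--embedding` flags (the flag names, defaults and the helper `pick_embedding` are assumptions for illustration), could look like this:

```python
import argparse

parser = argparse.ArgumentParser(description='Text classification')
parser.add_argument('--model', type=str, required=True,
                    help='model name, e.g. TextCNN or TextRNN')
parser.add_argument('--embedding', default='pre_trained', type=str,
                    help='"random" or "pre_trained"')


def pick_embedding(args):
    """Mirror the selection logic above and return the embedding setting."""
    embedding = 'embedding_SougouNews.npz'
    if args.embedding == 'random' or args.model == 'FastText':
        embedding = 'random'
    return embedding


# An explicit argument list is passed here instead of reading sys.argv.
args = parser.parse_args(['--model', 'TextRNN'])
print(pick_embedding(args))  # embedding_SougouNews.npz
```

Passing `--embedding random`, or choosing the FastText model, switches the result to 'random', which in Config means no pre-trained tensor is loaded.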

5. Build the network model (embedding layer, LSTM layer, fully connected layer)

import torch.nn as nn


class Model(nn.Module):  # LSTM network
    def __init__(self, config):
        super(Model, self).__init__()
        if config.embedding_pretrained is not None:
            # Turn indices into vectors using the pre-trained table; freeze=False keeps it trainable
            self.embedding = nn.Embedding.from_pretrained(config.embedding_pretrained, freeze=False)
        else:
            self.embedding = nn.Embedding(config.n_vocab, config.embed, padding_idx=config.n_vocab - 1)
        # config.embed: 300-dimensional input; config.hidden_size: 128 hidden units; config.num_layers: 2 layers
        # bidirectional=True: run front-to-back and back-to-front, then concatenate the two outputs
        # batch_first=True: the first dimension of the input is the batch
        self.lstm = nn.LSTM(config.embed, config.hidden_size, config.num_layers,
                            bidirectional=True, batch_first=True, dropout=config.dropout)
        # Fully connected layer: hidden_size * 2 (bidirectional, 128 * 2) inputs, num_classes (10) outputs
        self.fc = nn.Linear(config.hidden_size * 2, config.num_classes)

    def forward(self, x):  # forward pass
        x, _ = x  # the input is a pair; only the first element (the token indices) is used
        out = self.embedding(x)  # embedding layer: [batch_size, seq_len, embedding] = [128, 32, 300]
        out, _ = self.lstm(out)  # LSTM layer: [batch_size, seq_len, hidden_size * 2]
        # Fully connected layer on the output at the last time step (-1);
        # gives each sample in the batch a score for each of the 10 classes
        out = self.fc(out[:, -1, :])
        return out
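
The shape comments in forward can be checked on toy sizes. The sketch below is only an illustration under assumptions: the sizes (vocabulary 50, embedding 8, hidden 16, batch 4) are made up to keep it fast, and it rebuilds the three layers directly instead of going through the article's Config and Model classes.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
n_vocab, embed, hidden, num_classes = 50, 8, 16, 10  # toy sizes (assumed)
batch_size, pad_size = 4, 32

embedding = nn.Embedding(n_vocab, embed, padding_idx=n_vocab - 1)
lstm = nn.LSTM(embed, hidden, num_layers=2,
               bidirectional=True, batch_first=True, dropout=0.5)
fc = nn.Linear(hidden * 2, num_classes)

x = torch.randint(0, n_vocab, (batch_size, pad_size))  # fake token indices
emb = embedding(x)             # [batch, seq_len, embed]
out, (h_n, c_n) = lstm(emb)    # out: [batch, seq_len, hidden * 2]
logits = fc(out[:, -1, :])     # [batch, num_classes]

print(emb.shape, out.shape, h_n.shape, logits.shape)

# The forward half of the last time step is the forward direction's final
# state; the backward direction's final state sits at time step 0.
print(torch.allclose(out[:, -1, :hidden], h_n[-2]))
print(torch.allclose(out[:, 0, hidden:], h_n[-1]))
```

One consequence worth knowing: with a bidirectional LSTM, `out[:, -1, :]` pairs the forward direction's final state with the backward direction's state after it has read only the last token. Concatenating `h_n[-2]` and `h_n[-1]` is a common alternative when both final states are wanted.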

6. Train the network

import time

import torch
import torch.nn.functional as F
from sklearn import metrics


def train(config, model, train_iter, dev_iter, test_iter, writer):
    start_time = time.time()
    model.train()  # training mode
    optimizer = torch.optim.Adam(model.parameters(), lr=config.learning_rate)  # optimizer
    # Exponential learning-rate decay, once per epoch: lr = gamma * lr
    # scheduler = torch.optim.lr_scheduler.ExponentialLR(optimizer, gamma=0.9)
    total_batch = 0  # number of batches processed so far
    dev_best_loss = float('inf')  # best validation loss so far
    last_improve = 0  # batch count at the last validation-loss improvement
    flag = False  # whether there has been no improvement for a long time
    # writer = SummaryWriter(log_dir=config.log_path + '/' + time.strftime('%m-%d_%H.%M', time.localtime()))  # logs results for later visualization
    for epoch in range(config.num_epochs):  # each epoch
        print('Epoch [{}/{}]'.format(epoch + 1, config.num_epochs))
        # scheduler.step()  # learning-rate decay
        for i, (trains, labels) in enumerate(train_iter):
            # print(trains[0].shape)
            outputs = model(trains)  # forward pass
            model.zero_grad()  # reset gradients
            loss = F.cross_entropy(outputs, labels)  # cross-entropy loss
            loss.backward()  # backward pass
            optimizer.step()  # update parameters
            if total_batch % 100 == 0:  # evaluate every 100 batches
                # Report performance on the current training batch and on the validation set
                true = labels.data.cpu()
                predic = torch.max(outputs.data, 1)[1].cpu()  # class with the highest score is the prediction
                train_acc = metrics.accuracy_score(true, predic)  # accuracy via sklearn.metrics
                dev_acc, dev_loss = evaluate(config, model, dev_iter)
                if dev_loss < dev_best_loss:  # is the validation loss better than the best so far?
                    dev_best_loss = dev_loss  # update the best loss
                    torch.save(model.state_dict(), config.save_path)  # save the model
                    improve = '*'
                    last_improve = total_batch
                else:
                    improve = ''
                time_dif = get_time_dif(start_time)
                msg = 'Iter: {0:>6}, Train Loss: {1:>5.2}, Train Acc: {2:>6.2%}, Val Loss: {3:>5.2}, Val Acc: {4:>6.2%}, Time: {5} {6}'
                print(msg.format(total_batch, loss.item(), train_acc, dev_loss, dev_acc, time_dif, improve))
                writer.add_scalar("loss/train", loss.item(), total_batch)  # log the results
                writer.add_scalar("loss/dev", dev_loss, total_batch)
                writer.add_scalar("acc/train", train_acc, total_batch)
                writer.add_scalar("acc/dev", dev_acc, total_batch)
                model.train()  # evaluate() switched to eval mode; switch back
            total_batch += 1
            if total_batch - last_improve > config.require_improvement:
                # Validation loss has not dropped for more than 1000 batches: stop training
                print("No optimization for a long time, auto-stopping...")
                flag = True
                break
        if flag:
            break
    writer.close()
    test(config, model, test_iter)
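
The early-stopping bookkeeping in train (total_batch, last_improve, require_improvement) can be isolated from the model. The following sketch replays a list of made-up validation losses through the same counters; the function name `simulate_early_stopping` and the loss values are assumptions for illustration.

```python
def simulate_early_stopping(dev_losses, eval_every=100, patience=1000):
    """Return (stop_batch, best_loss) for a sequence of validation losses.

    dev_losses[k] is the validation loss measured at batch k * eval_every.
    stop_batch is None if training runs to the end without stopping early.
    """
    best_loss = float('inf')
    last_improve = 0
    total_batch = 0
    num_batches = len(dev_losses) * eval_every
    while total_batch < num_batches:
        if total_batch % eval_every == 0:
            dev_loss = dev_losses[total_batch // eval_every]
            if dev_loss < best_loss:
                best_loss = dev_loss        # a checkpoint would be saved here
                last_improve = total_batch
        total_batch += 1
        if total_batch - last_improve > patience:
            return total_batch, best_loss   # no improvement for too long
    return None, best_loss


# Loss improves at batches 0 and 100, then stays flat at 0.9.
losses = [1.0, 0.8] + [0.9] * 21
print(simulate_early_stopping(losses))                 # (1101, 0.8)
print(simulate_early_stopping([1.0, 0.9, 0.8]))        # (None, 0.8)
```

Because the best model is saved whenever the validation loss improves, stopping at batch 1101 still leaves the checkpoint from batch 100 on disk, which is what test later reloads.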

7. Test and evaluate the network

import numpy as np


def test(config, model, test_iter):
    # Reload the best checkpoint saved during training, then evaluate on the test set
    model.load_state_dict(torch.load(config.save_path))
    model.eval()
    start_time = time.time()
    test_acc, test_loss, test_report, test_confusion = evaluate(config, model, test_iter, test=True)
    msg = 'Test Loss: {0:>5.2}, Test Acc: {1:>6.2%}'
    print(msg.format(test_loss, test_acc))
    print("Precision, Recall and F1-Score...")
    print(test_report)
    print("Confusion Matrix...")
    print(test_confusion)
    time_dif = get_time_dif(start_time)
    print("Time usage:", time_dif)


def evaluate(config, model, data_iter, test=False):  # compute accuracy, loss, etc. on a data set
    model.eval()
    loss_total = 0
    predict_all = np.array([], dtype=int)
    labels_all = np.array([], dtype=int)
    with torch.no_grad():
        for texts, labels in data_iter:
            outputs = model(texts)
            loss = F.cross_entropy(outputs, labels)
            loss_total += loss.item()  # accumulate a Python float rather than a tensor
            labels = labels.data.cpu().numpy()
            predic = torch.max(outputs.data, 1)[1].cpu().numpy()
            labels_all = np.append(labels_all, labels)
            predict_all = np.append(predict_all, predic)
    acc = metrics.accuracy_score(labels_all, predict_all)
    if test:
        report = metrics.classification_report(labels_all, predict_all, target_names=config.class_list, digits=4)
        confusion = metrics.confusion_matrix(labels_all, predict_all)
        return acc, loss_total / len(data_iter), report, confusion
    return acc, loss_total / len(data_iter)  # accuracy and mean loss per batch
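
For readers who want to see what the accuracy and confusion-matrix numbers mean, here is a dependency-free sketch of the same quantities on made-up labels. It is not how sklearn implements them, only an illustration; rows are true classes and columns are predicted classes, the same orientation `metrics.confusion_matrix` uses.

```python
def accuracy(labels, preds):
    """Fraction of samples whose prediction equals the true label."""
    correct = sum(1 for y, p in zip(labels, preds) if y == p)
    return correct / len(labels)


def confusion_matrix(labels, preds, num_classes):
    """matrix[i][j] counts samples with true class i and predicted class j."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for y, p in zip(labels, preds):
        matrix[y][p] += 1
    return matrix


labels = [0, 0, 1, 1, 2, 2]  # toy ground truth
preds = [0, 1, 1, 1, 2, 0]   # toy predictions
print(accuracy(labels, preds))
print(confusion_matrix(labels, preds, num_classes=3))  # [[1, 1, 0], [0, 2, 0], [1, 0, 1]]
```

The diagonal holds the correct predictions (4 of 6 here), and each off-diagonal cell shows which class a sample was mistaken for.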

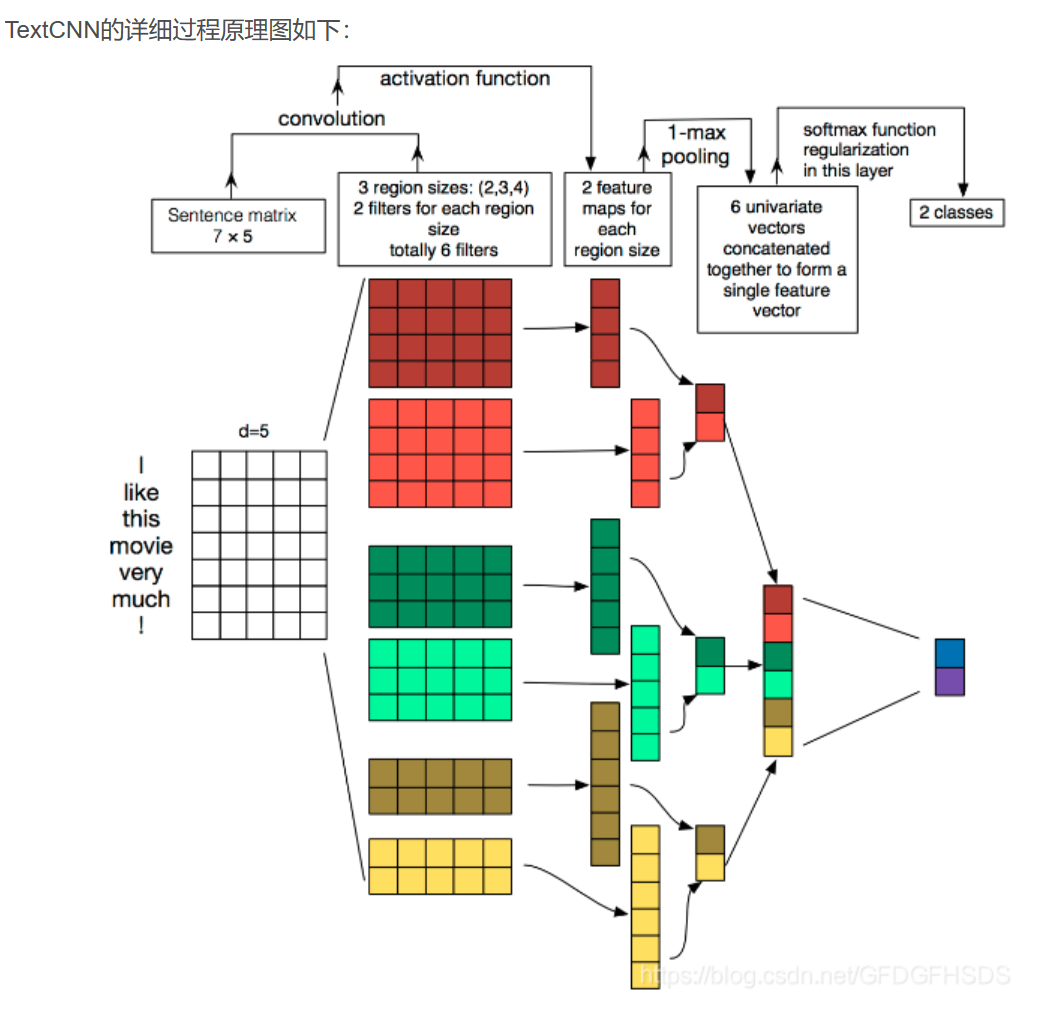

2. Convolutional network (CNN) implementation

import torch
import torch.nn as nn
import torch.nn.functional as F


class Model(nn.Module):
    def __init__(self, config):
        super(Model, self).__init__()
        if config.embedding_pretrained is not None:
            # Turn indices into vectors using the pre-trained table
            self.embedding = nn.Embedding.from_pretrained(config.embedding_pretrained, freeze=False)
        else:
            self.embedding = nn.Embedding(config.n_vocab, config.embed, padding_idx=config.n_vocab - 1)
        # Convolution layers, one per kernel size: 2*300, 3*300, 4*300
        self.convs = nn.ModuleList(
            [nn.Conv2d(1, config.num_filters, (k, config.embed)) for k in config.filter_sizes])
        self.dropout = nn.Dropout(config.dropout)
        # Fully connected layer: num_filters * len(filter_sizes) = 256 * 3 inputs
        self.fc = nn.Linear(config.num_filters * len(config.filter_sizes), config.num_classes)

    def conv_and_pool(self, x, conv):
        # print(x.shape)  # 128, 1, 32, 300
        x = F.relu(conv(x)).squeeze(3)
        # print(x.shape)  # 128, 256, 31 for kernel size 2 (256 feature maps, each of length 31 after convolution)
        x = F.max_pool1d(x, x.size(2)).squeeze(2)  # 1-D max pooling over the whole length (x.size(2) is 31 here)
        # print(x.shape)  # 128, 256 (the 31 values are reduced to one feature value)
        return x

    def forward(self, x):
        # print(x[0].shape)
        out = self.embedding(x[0])
        # print(out.shape)  # 128, 32, 300
        out = out.unsqueeze(1)  # add a channel dimension of size 1
        # print(out.shape)  # 128, 1, 32, 300
        out = torch.cat([self.conv_and_pool(out, conv) for conv in self.convs], 1)  # apply every kernel size and concatenate
        # print(out.shape)  # 128, 768 (the 256 features from kernel sizes 2, 3 and 4 joined end to end)
        out = self.dropout(out)
        # print(out.shape)  # 128, 768
        out = self.fc(out)
        # print(out.shape)  # 128, 10
        return out
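
The numbers in the shape comments (31 and 768) follow from simple arithmetic. The sketch below reproduces them, assuming the values mentioned in the comments: pad_size 32, kernel sizes 2, 3 and 4, and 256 filters per size. The helper name `textcnn_shapes` is hypothetical.

```python
def textcnn_shapes(pad_size, filter_sizes, num_filters):
    """Return per-kernel convolution output lengths and the fc input size."""
    # A (k, embed) kernel with stride 1 and no padding fits at
    # pad_size - k + 1 positions along the sequence.
    conv_lengths = {k: pad_size - k + 1 for k in filter_sizes}
    # Max pooling over the whole length leaves one value per filter, and the
    # pooled vectors of all kernel sizes are concatenated.
    fc_in_features = num_filters * len(filter_sizes)
    return conv_lengths, fc_in_features


lengths, features = textcnn_shapes(pad_size=32, filter_sizes=(2, 3, 4), num_filters=256)
print(lengths)   # {2: 31, 3: 30, 4: 29}
print(features)  # 768
```

The different convolution lengths (31, 30, 29) are why each branch is max-pooled to a single value per filter before concatenation: after pooling, every branch contributes exactly 256 features.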
