Implementing a Linear Regression Model with PyTorch

A first look at PyTorch: this post builds the most basic neural network in PyTorch to implement a linear regression model.

(1) Construct a set of input data X and the corresponding labels y

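import numpy as np

x_values = [i for i in range(11)]
x_train = np.array(x_values, dtype=np.float32)  # convert to a NumPy array
x_train = x_train.reshape(-1, 1)  # reshape into an (11, 1) matrix, one sample per row, to avoid shape errors
y_values = [2*i + 1 for i in x_values]  # targets follow the regression equation y = 2x + 1
y_train = np.array(y_values, dtype=np.float32)  # convert to a NumPy array
y_train = y_train.reshape(-1, 1)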
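The reshape step matters because `nn.Linear` expects a 2-D input of shape (number of samples, number of features). The following is a minimal sketch that only inspects shapes; the name `x_flat` is introduced here for illustration and is not part of the original code.

```python
import numpy as np

x_values = [i for i in range(11)]
x_flat = np.array(x_values, dtype=np.float32)  # 1-D array (x_flat is an illustrative name)
x_train = x_flat.reshape(-1, 1)                # -1 lets NumPy infer the row count

print(x_flat.shape)   # (11,)
print(x_train.shape)  # (11, 1)
print(x_train.dtype)  # float32
```

Each row of `x_train` is now one sample with a single feature, which matches `input_dim = 1` used later.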

(2) Build the model

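import torch
import torch.nn as nn

class LinearRegressionModel(nn.Module):  # subclass nn.Module; you only need to declare which layers to use
    def __init__(self, input_dim, output_dim):  # constructor: declare the layers the model uses
        super(LinearRegressionModel, self).__init__()
        self.linear = nn.Linear(input_dim, output_dim)  # fully connected layer; takes the input and output dimensions

    def forward(self, x):  # forward pass: defines how the layers are applied
        out = self.linear(x)  # feed x through the fully connected layer
        return out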
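To see what the model actually contains, one can list its parameters and compare the forward pass against the underlying formula `x @ W.T + b`. This is a minimal sketch; the input value `3.0` and the names `sample`, `manual`, and `names` are chosen here purely for illustration.

```python
import torch
import torch.nn as nn

class LinearRegressionModel(nn.Module):
    def __init__(self, input_dim, output_dim):
        super().__init__()
        self.linear = nn.Linear(input_dim, output_dim)

    def forward(self, x):
        return self.linear(x)

model = LinearRegressionModel(1, 1)

# nn.Linear(1, 1) holds one weight of shape (1, 1) and one bias of shape (1,).
names = [name for name, _ in model.named_parameters()]
for name, param in model.named_parameters():
    print(name, tuple(param.shape))

sample = torch.tensor([[3.0]])  # illustrative input: one sample, one feature
out = model(sample)
manual = sample @ model.linear.weight.T + model.linear.bias
print(torch.allclose(out, manual))  # the layer computes x @ W.T + b
```

The weight and bias are initialized randomly, so the printed prediction is meaningless until the model is trained.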

(3) Specify the hyperparameters and the loss function

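input_dim = 1
output_dim = 1
model = LinearRegressionModel(input_dim, output_dim)  # instantiate the model
epochs = 1000  # 1000 training iterations in total
learning_rate = 0.01  # learning rate
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)  # SGD optimizer; takes the parameters to optimize and the learning rate
criterion = nn.MSELoss()  # loss function: classification commonly uses cross-entropy, regression commonly uses mean squared error (MSE)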
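With its default settings, `nn.MSELoss` averages the squared differences between predictions and labels. A small worked sketch follows, using made-up values for `outputs` and `labels` that are not from the original post.

```python
import torch
import torch.nn as nn

criterion = nn.MSELoss()

# Illustrative values only: errors are 0, -1, -1, so squared errors are 0, 1, 1.
outputs = torch.tensor([[1.0], [2.0], [4.0]])
labels = torch.tensor([[1.0], [3.0], [5.0]])

loss = criterion(outputs, labels)
manual = ((outputs - labels) ** 2).mean()  # same quantity computed by hand

print(loss.item())    # about 0.6667, i.e. (0 + 1 + 1) / 3
print(manual.item())
```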

(4) Train the model

for epoch in range(epochs):#遍历1000次

epoch += 1

# np.array格式不能直接进行训练,转换成tensor格式

inputs = torch.from_numpy(x_train)

labels = torch.from_numpy(y_train)

# 梯度要清零每一次迭代

optimizer.zero_grad() #如果不清空梯度会累加起来

# 前向传播

outputs = model(inputs)

# 计算损失

loss = criterion(outputs, labels)

# 返向传播

loss.backward()

# 更新权重参数

optimizer.step()

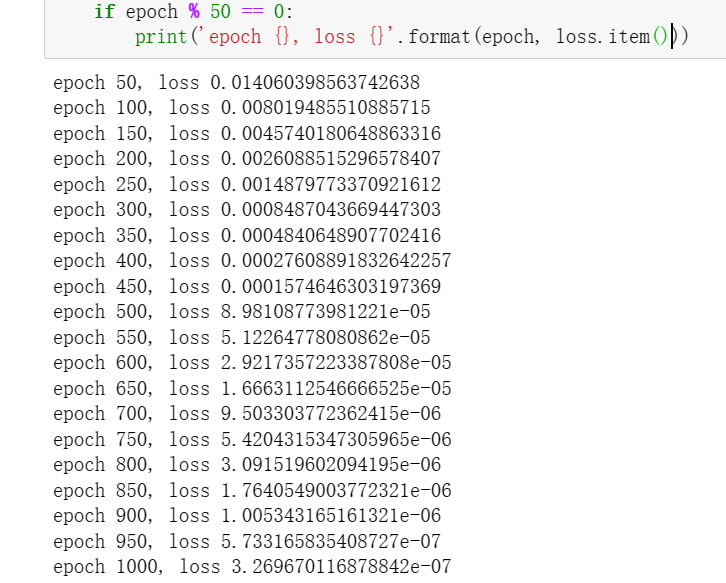

if epoch % 50 == 0:

print('epoch {}, loss {}'.format(epoch, loss.item()))

Result:

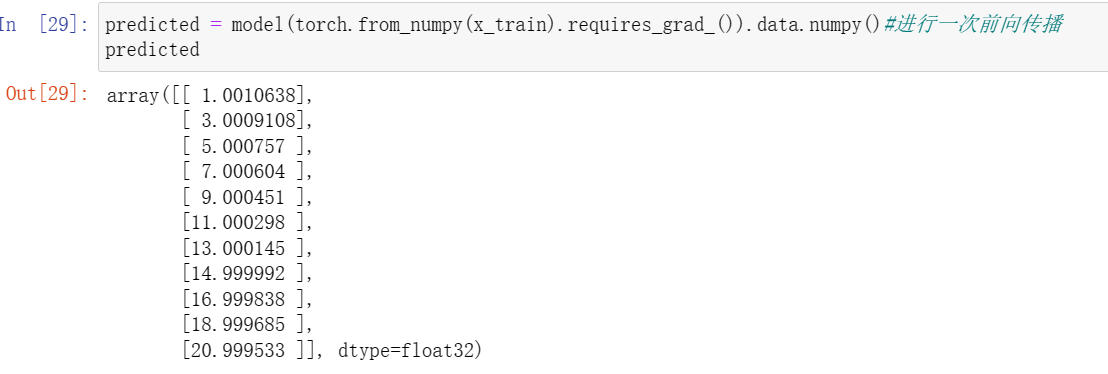
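The training loop clears the gradients on every iteration because `backward()` adds to the existing `.grad` values instead of overwriting them. The sketch below illustrates this on a single hand-made tensor; `w` and `x` are illustrative names, not part of the model above.

```python
import torch

w = torch.tensor([1.0], requires_grad=True)  # illustrative parameter
x = torch.tensor([3.0])                      # illustrative input

(w * x).sum().backward()
first = w.grad.clone()         # d(w*x)/dw = 3

(w * x).sum().backward()       # no zeroing in between
accumulated = w.grad.clone()   # 3 + 3 = 6, the gradients were summed

w.grad.zero_()                 # what optimizer.zero_grad() does for each parameter
(w * x).sum().backward()
after_reset = w.grad.clone()   # back to 3

print(first.item(), accumulated.item(), after_reset.item())
```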

(5) Test the model's predictions

with torch.no_grad():  # gradients are not needed for inference
    predicted = model(torch.from_numpy(x_train)).numpy()  # run one forward pass

Result:
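Because the labels follow y = 2x + 1, the predictions should land close to 1, 3, 5, ..., 21, and the learned weight and bias should be close to 2 and 1. The following is a minimal self-contained sketch of that check. It uses a bare `nn.Linear(1, 1)` as a stand-in for `LinearRegressionModel(1, 1)` and fixes the random seed; both are assumptions made here for brevity and reproducibility.

```python
import numpy as np
import torch
import torch.nn as nn

torch.manual_seed(0)  # assumption: fixed seed for a reproducible run

x_train = np.arange(11, dtype=np.float32).reshape(-1, 1)
y_train = 2 * x_train + 1

model = nn.Linear(1, 1)  # stand-in for LinearRegressionModel(1, 1)
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
criterion = nn.MSELoss()

inputs = torch.from_numpy(x_train)
labels = torch.from_numpy(y_train)
for _ in range(1000):
    optimizer.zero_grad()
    loss = criterion(model(inputs), labels)
    loss.backward()
    optimizer.step()

with torch.no_grad():
    predicted = model(inputs).numpy()

weight = model.weight.item()
bias = model.bias.item()
max_error = float(np.abs(predicted - y_train).max())
print(weight, bias, max_error)  # weight near 2, bias near 1, small max error
```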

(6) Saving and loading the model

torch.save(model.state_dict(), 'model.pkl')  # model.state_dict() holds the model's weights and biases
model.load_state_dict(torch.load('model.pkl'))  # load them back
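A quick way to confirm that saving and loading works is a round trip into a freshly created model. This sketch again uses a bare `nn.Linear(1, 1)` as a stand-in for the model above and writes to a temporary directory; both choices are assumptions for illustration.

```python
import os
import tempfile

import torch
import torch.nn as nn

model = nn.Linear(1, 1)  # stand-in for the trained model

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "model.pkl")
    torch.save(model.state_dict(), path)

    restored = nn.Linear(1, 1)  # fresh model with different random weights
    restored.load_state_dict(torch.load(path))

print(list(model.state_dict().keys()))  # ['weight', 'bias']
same = all(
    torch.equal(a, b)
    for a, b in zip(model.state_dict().values(), restored.state_dict().values())
)
print(same)  # True: the restored model carries the saved parameters
```

Note that `state_dict()` stores only the parameters, so the model class must be constructed first before the weights can be loaded into it.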

(7) Training on a GPU

All you need to do is move the data and the model to CUDA. The code differs from the CPU version in two ways, both marked with comments.

import torch
import torch.nn as nn
import numpy as np

class LinearRegressionModel(nn.Module):
    def __init__(self, input_dim, output_dim):
        super(LinearRegressionModel, self).__init__()
        self.linear = nn.Linear(input_dim, output_dim)

    def forward(self, x):
        out = self.linear(x)
        return out

input_dim = 1
output_dim = 1
model = LinearRegressionModel(input_dim, output_dim)
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")  # use CUDA if it is available, otherwise fall back to the CPU
model.to(device)  # move the model to the device
criterion = nn.MSELoss()
learning_rate = 0.01
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)
epochs = 1000

inputs = torch.from_numpy(x_train).to(device)  # move the inputs x to the device
labels = torch.from_numpy(y_train).to(device)  # move the labels y to the device

for epoch in range(1, epochs + 1):
    optimizer.zero_grad()
    outputs = model(inputs)
    loss = criterion(outputs, labels)
    loss.backward()
    optimizer.step()
    if epoch % 50 == 0:
        print('epoch {}, loss {}'.format(epoch, loss.item()))
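One practical detail when predicting with a model that lives on the GPU: a CUDA tensor cannot be converted to NumPy directly, so the result has to be moved back to the CPU first. The sketch below shows this pattern; it falls back to the CPU when CUDA is unavailable and uses an untrained `nn.Linear(1, 1)` as a stand-in for the model, both assumptions for illustration.

```python
import numpy as np
import torch
import torch.nn as nn

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
model = nn.Linear(1, 1).to(device)  # untrained stand-in for the model above

x_train = np.arange(11, dtype=np.float32).reshape(-1, 1)

with torch.no_grad():
    outputs = model(torch.from_numpy(x_train).to(device))  # input on the same device as the model

predicted = outputs.cpu().numpy()  # .cpu() is a no-op on the CPU and a copy from the GPU
print(type(predicted), predicted.shape)
```

The model and its inputs must be on the same device; mixing a CPU tensor with a CUDA model raises a runtime error.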
