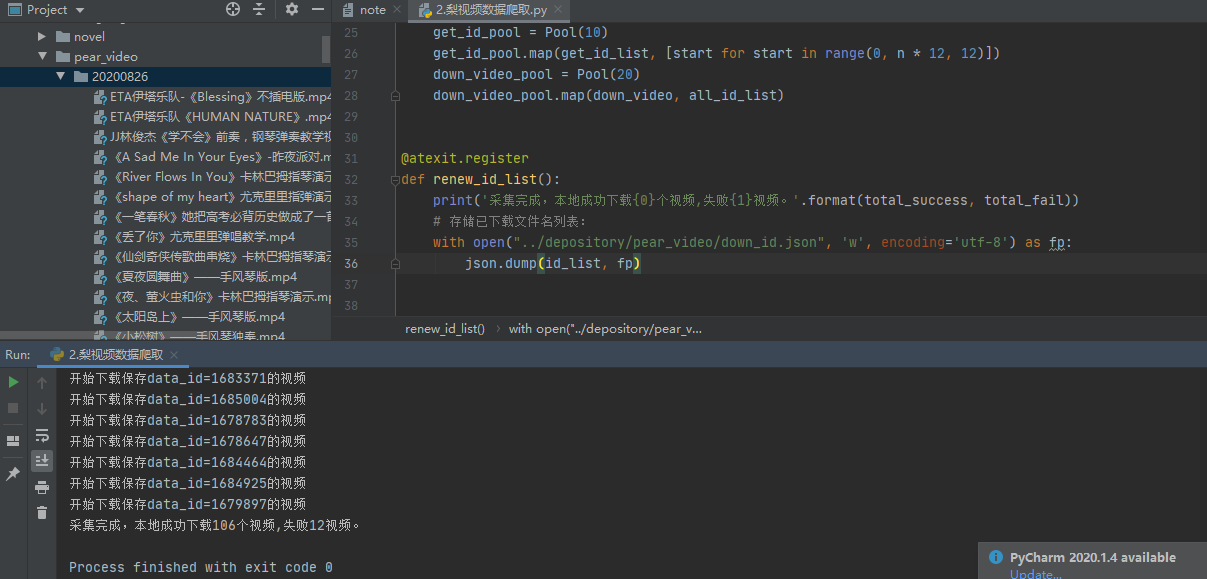

Example: scraping Pear Video with multiprocessing.dummy (multithreaded)
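The `dummy` in the title refers to `multiprocessing.dummy`, which replicates the `multiprocessing` API as a thin wrapper around `threading`, so `Pool(4)` is a pool of four threads, not processes. Threads fit a scraper because nearly all the time goes to waiting on the network, and CPython releases the GIL during that wait. The minimal sketch below assumes nothing about Pear Video: the `fake_download` function and the IDs are hypothetical, and `time.sleep` stands in for a request. With 8 tasks of 0.2 s and 4 threads it should finish in roughly 0.4 s instead of about 1.6 s sequentially.

```python
import threading
import time
from multiprocessing.dummy import Pool  # thread-backed Pool with the multiprocessing.Pool API


def fake_download(video_id: str) -> tuple[str, str]:
    """Hypothetical stand-in for an I/O-bound request: sleeps instead of downloading."""
    time.sleep(0.2)  # the GIL is released while a thread sleeps or waits on a socket
    return video_id, threading.current_thread().name


def run(ids: list[str], workers: int) -> tuple[list[tuple[str, str]], float]:
    """Run fake_download over ids on a thread pool; return results and elapsed seconds."""
    start = time.perf_counter()
    with Pool(workers) as pool:
        # map() blocks until every task is done and returns results in input order
        results = pool.map(fake_download, ids)
    return results, time.perf_counter() - start


if __name__ == "__main__":
    ids = [str(1700000 + i) for i in range(8)]  # hypothetical video IDs
    results, elapsed = run(ids, workers=4)
    threads_used = len({name for _, name in results})
    print(f"{len(results)} tasks in {elapsed:.2f}s on {threads_used} threads")
```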

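```python
# _*_ coding:utf-8 _*_
"""
@FileName   : 2.梨视频数据爬取.py
@CreateTime : 2020/8/26 15:26
@Author     : Lurker Zhang
@E-mail     : 289735192@qq.com
@Desc.      : Scrape videos from the Pear Video music section: https://www.pearvideo.com/popular_59
"""
import atexit
import json
import os
import re
import threading
import time
from multiprocessing.dummy import Pool  # same API as multiprocessing.Pool, backed by threads

import requests
from lxml import etree

# The original script imported `headers` from a local module (from setting.config import *).
# Assumed stand-in so the script runs on its own; replace with your own headers if needed.
headers = {'User-Agent': 'Mozilla/5.0'}

BASE_DIR = '../depository/pear_video/'
ID_FILE = BASE_DIR + 'down_id.json'  # IDs of videos downloaded in earlier runs

# Worker threads share the counters and lists below, so updates go through a lock
lock = threading.Lock()


def main():
    # Number of listing pages to fetch; each page lists 12 videos, so n * 12 in total
    n = 1
    with Pool(4) as get_id_pool:
        get_id_pool.map(get_id_list, range(0, n * 12, 12))
    with Pool(4) as down_video_pool:
        down_video_pool.map(down_video, all_id_list)


def renew_id_list():
    print('Finished: {0} videos downloaded, {1} skipped, {2} failed.'.format(
        total_success, total_skip, total_fail))
    # Save the list of downloaded video IDs so the next run can skip them
    with open(ID_FILE, 'w', encoding='utf-8') as fp:
        json.dump(id_list, fp)


def get_id_list(start):
    """
    Collect the IDs of the 12 videos on one listing page.
    :param start: offset of the first video on the page
    :return: None; the IDs are appended to the global all_id_list
    """
    print('Parsing the 12 video IDs from start={}'.format(start))
    url = 'https://www.pearvideo.com/category_loading.jsp?reqType=5&categoryId=59&start={}'.format(start)
    # Fetch the listing fragment, which returns 12 videos beginning at `start`
    video_list_page = requests.get(url=url, headers=headers, timeout=10).text
    tree = etree.HTML(video_list_page)
    # Each link looks like "video_<id>"; keep the part after the underscore
    ids = [href.split('_')[1] for href in tree.xpath('/html/body/li/div/a/@href')]
    with lock:
        all_id_list.extend(ids)


def down_video(data_id):
    """
    Download one video by its ID.
    :param data_id: ID of the video to download
    :return: None
    """
    global total_success, total_skip, total_fail
    if data_id in id_list:
        with lock:
            total_skip += 1
        print(data_id, 'was already downloaded, skipping.')
        return
    print('Downloading video data_id={}'.format(data_id))
    url = 'https://www.pearvideo.com/video_{}'.format(data_id)
    # The video is loaded dynamically: its source URL is in inline JavaScript,
    # not in the static markup, so it is extracted with re instead of XPath
    url_ex = 'srcUrl="(.*?)"'
    title_ex = '"video-tt">(.*?)</h1>'
    try:
        video_preview_page = requests.get(url=url, headers=headers, timeout=10).text
        video_url = re.findall(url_ex, video_preview_page)[0]
        video_title = re.findall(title_ex, video_preview_page)[0]
        # Titles become file names, so replace characters invalid on Windows or POSIX
        video_title = re.sub(r'[\\/:*?"<>|]', '_', video_title)
        # Download the video and write it to today's folder
        video_content = requests.get(url=video_url, headers=headers, timeout=30).content
        with open(os.path.join(path, video_title + '.mp4'), 'wb') as fp:
            fp.write(video_content)
    except (IndexError, requests.RequestException, OSError) as e:
        # IndexError: a pattern did not match; the others: network or file errors
        with lock:
            total_fail += 1
        print(data_id, 'failed:', e)
    else:
        with lock:
            id_list.append(data_id)
            total_success += 1


if __name__ == '__main__':
    # Load the IDs of previously downloaded videos (create the file on the first run)
    os.makedirs(BASE_DIR, exist_ok=True)
    if not os.path.exists(ID_FILE):
        with open(ID_FILE, 'w', encoding='utf-8') as fp:
            json.dump([], fp)
    with open(ID_FILE, 'r', encoding='utf-8') as fp:
        id_list = json.load(fp)
    # Save videos into one folder per day, e.g. ../depository/pear_video/20200826/
    path = BASE_DIR + time.strftime('%Y%m%d', time.localtime()) + '/'
    os.makedirs(path, exist_ok=True)
    # Counters for the videos handled in this run
    total_success = 0
    total_skip = 0
    total_fail = 0
    all_id_list = []  # IDs of the videos to download in this run
    # Registered with atexit so the ID list is saved even if the run is interrupted
    atexit.register(renew_id_list)
    main()
```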
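The key step in `down_video` is that the video URL is not in the static markup but in inline JavaScript, so it is pulled out with a regular expression rather than XPath. The standalone sketch below reuses the script's two patterns (`srcUrl="(.*?)"` and `"video-tt">(.*?)</h1>`) on a hypothetical page fragment; the sample markup and the `example.com` URL are invented for illustration, not captured from pearvideo.com. Because the title is used directly as a file name, the sketch also replaces characters that Windows or POSIX reject in file names.

```python
import re
from typing import Optional

# Same patterns as the scraper: srcUrl="..." in inline JavaScript, title in <h1 class="video-tt">
SRC_URL_RE = re.compile(r'srcUrl="(.*?)"')
TITLE_RE = re.compile(r'"video-tt">(.*?)</h1>')
# Characters that Windows rejects in file names ("/" is also invalid on POSIX)
UNSAFE_CHARS_RE = re.compile(r'[\\/:*?"<>|]')


def parse_video_page(html: str) -> Optional[tuple[str, str]]:
    """Return (video_url, safe_title), or None if either field is missing."""
    url_match = SRC_URL_RE.search(html)
    title_match = TITLE_RE.search(html)
    if url_match is None or title_match is None:
        return None
    safe_title = UNSAFE_CHARS_RE.sub("_", title_match.group(1)).strip()
    return url_match.group(1), safe_title


# Hypothetical page fragment shaped like the markup the patterns expect
sample = '''
<h1 class="video-tt">Live: Song/Remix?</h1>
<script>var contId="1234567",srcUrl="https://video.example.com/1234567.mp4",vdoUrl=srcUrl;</script>
'''
print(parse_video_page(sample))
# ('https://video.example.com/1234567.mp4', 'Live_ Song_Remix_')
```

Returning `None` instead of raising keeps the "skip this video" decision with the caller, which in the scraper corresponds to counting a failure and moving on.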