Python Scraper: Douban Movie/Book Short Reviews and Their Visualization

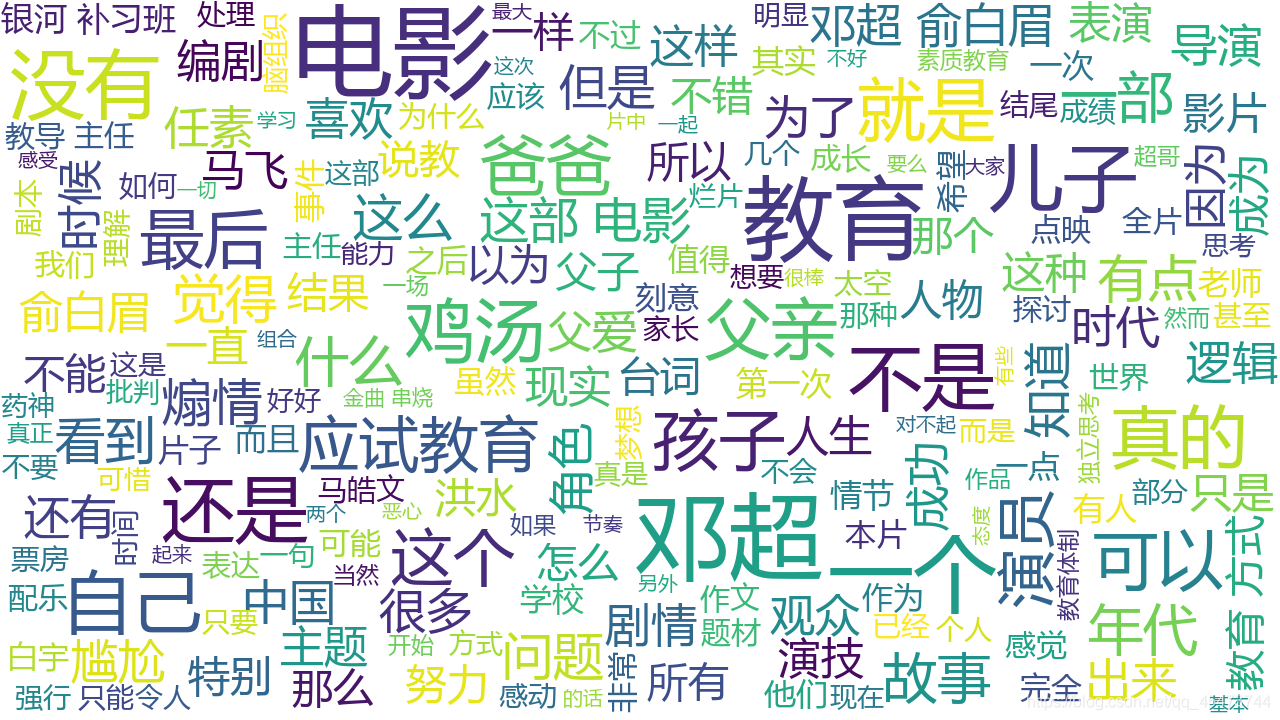

This scraper collects the short reviews of movies and books on Douban and visualizes the data.

When the class is instantiated, it asks for the category (`movie` or `book`) and the corresponding Douban subject ID.
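The script reads both values with `input()` and turns them into a list of paging URLs. As a hedged sketch, that URL construction can be pulled out into a plain function so it can be reused without interactive prompts. `build_comment_urls`, `BOOK_URL` and `MOVIE_URL` are hypothetical names; the URL patterns and page ranges are copied from the script, and rejecting unknown categories is an added assumption (the script treats anything other than `book` as `movie`).

```python
from typing import List

# URL patterns taken from the script; placeholders are filled per page
BOOK_URL = 'https://book.douban.com/subject/{id}/comments/hot?p={page}'
MOVIE_URL = ('https://movie.douban.com/subject/{id}/comments'
             '?start={start}&limit=20&sort=new_score&status=P')


def build_comment_urls(kind: str, subject_id: str) -> List[str]:
    """Return the comment-page URLs to walk through (hypothetical helper)."""
    kind = kind.strip().lower()
    if kind == 'book':
        # Book comments are paged by page number: p=1..99
        return [BOOK_URL.format(id=subject_id, page=p) for p in range(1, 100)]
    if kind == 'movie':
        # Movie comments are paged by offset, 20 per page: start=0, 20, ..., 980
        return [MOVIE_URL.format(id=subject_id, start=s) for s in range(0, 1000, 20)]
    raise ValueError(f'unknown category {kind!r}; expected "movie" or "book"')
```

Failing fast on a typo such as `moive` avoids silently scraping the movie site with a book ID.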

The charts give a rough picture of how a movie or book has been received (for reference only).

Pages are fetched mainly with the `requests` library.
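Fetching a page comes down to one `get` call with a browser-like `User-Agent`. The sketch below is one way to write that helper as a standalone function, assuming a 10-second timeout and a `raise_for_status()` check, neither of which is required by `requests` itself. The optional `session` argument is a hypothetical hook: it accepts the `requests` module, a `requests.Session`, or any stub with the same `get` method, which is how the helper can be exercised without network access.

```python
HEADERS = {
    # Same browser User-Agent string as the script
    'User-Agent': ('Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
                   '(KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36'),
}


def get_html(url, session=None, timeout=10):
    """Fetch url and return the decoded HTML (sketch; timeout is an assumed value)."""
    if session is None:
        # Both the requests module and a requests.Session provide get()
        import requests
        session = requests
    r = session.get(url, headers=HEADERS, timeout=timeout)
    r.raise_for_status()  # turn 4xx/5xx responses into exceptions
    r.encoding = 'utf-8'  # decode the body as UTF-8 regardless of response headers
    return r.text
```

Passing `session=requests.Session()` lets all pages share one connection pool instead of opening a new connection per request.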

Data is extracted with regular expressions (`re`).
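To show what the two patterns capture, the sketch runs the script's regular expressions on hand-written fragments. The fragments only imitate the markup the patterns expect; they are not copied from Douban. `re.S` (DOTALL) lets `.` match newlines, so a review spanning several lines is still captured, and the second fragment shows why pairing the two result lists with `zip()` goes wrong when a review has no rating.

```python
import re

# The same patterns the script compiles in parse_html
RE_SHORT = re.compile('<span class="short">(.*?)</span>', re.S)
RE_STAR = re.compile('rating" title="(.*?)"></span>')

# Illustrative fragment: two rated reviews, the first one spanning two lines
SAMPLE_HTML = (
    '<span class="allstar50 rating" title="力荐"></span>'
    '<span class="short">剧情紧凑\n演员很好</span>'
    '<span class="allstar30 rating" title="还行"></span>'
    '<span class="short">一般般</span>'
)

shorts = RE_SHORT.findall(SAMPLE_HTML)
stars = RE_STAR.findall(SAMPLE_HTML)
pairs = list(zip(shorts, stars))

# Without re.S, '.' stops at the newline and the two-line review is missed
without_dotall = re.findall('<span class="short">(.*?)</span>', SAMPLE_HTML)

# Illustrative fragment: the first review has no rating span
UNRATED_HTML = (
    '<span class="short">没打分</span>'
    '<span class="allstar40 rating" title="推荐"></span>'
    '<span class="short">不错</span>'
)
# zip() pairs by position, so the second review's rating lands on the first
shifted = list(zip(RE_SHORT.findall(UNRATED_HTML), RE_STAR.findall(UNRATED_HTML)))
# shifted == [('没打分', '推荐')]
```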

A 3-second sleep is added between page requests.
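The delay sits inside the paging loop: fetch a page, stop at the first page with no reviews, otherwise keep the results and sleep before the next request. A minimal sketch of that loop follows; `crawl` is a hypothetical name, and passing `fetch`, `parse` and `sleep` in as functions is an assumption made so the loop can be tested without the network or real waiting.

```python
import time
from typing import Callable, List


def crawl(urls: List[str],
          fetch: Callable[[str], str],
          parse: Callable[[str], list],
          delay: float = 3.0,
          sleep: Callable[[float], None] = time.sleep) -> list:
    """Fetch pages in order, stop at the first empty page, pause after each kept page."""
    results = []
    for page_no, url in enumerate(urls, start=1):
        items = parse(fetch(url))
        if not items:  # first page without reviews: stop paging
            break
        print(f'page {page_no}: {len(items)} items')
        results.extend(items)
        sleep(delay)  # wait before the next request
    return results
```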

`pandas` handles simple data analysis and counting.
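The analysis step is a CSV round trip followed by `value_counts()`, which counts each rating label and sorts the counts in descending order. In this sketch, `save_and_count` is a hypothetical helper and the file paths are temporary. It also shows why the write mode matters: `to_csv(..., mode='a')` on an existing file appends another header row, which `read_csv` then returns as an extra "stars" rating.

```python
import os
import tempfile

import pandas as pd


def save_and_count(stars, shorts, path, mode='w'):
    """Write the reviews to CSV, read them back and count each rating label."""
    data = pd.DataFrame({'stars': stars, 'shorts': shorts})
    # mode='w' overwrites; mode='a' appends another header row on every call
    data.to_csv(path, index=False, encoding='utf-8', mode=mode)
    df = pd.read_csv(path)
    return df['stars'].value_counts()  # sorted by count, most frequent first


with tempfile.TemporaryDirectory() as tmp:
    stars = ['力荐', '推荐', '力荐']
    shorts = ['好看', '不错', '很棒']
    # Overwrite twice: the file always holds exactly these three rows
    overwrite_path = os.path.join(tmp, 'overwrite.csv')
    save_and_count(stars, shorts, overwrite_path)
    counts = save_and_count(stars, shorts, overwrite_path)
    # Append twice: the second header row is read back as a bogus 'stars' label
    append_path = os.path.join(tmp, 'append.csv')
    save_and_count(stars, shorts, append_path, mode='a')
    appended = save_and_count(stars, shorts, append_path, mode='a')
```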

`matplotlib` draws the charts.
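The script draws its rating chart as a donut: giving `wedgeprops` a `width` smaller than the radius turns each pie wedge into a ring segment. The sketch below reproduces that with plain matplotlib. To keep it light, it uses matplotlib's default colors instead of the script's seaborn `husl` palette, ASCII labels instead of Chinese ones (which need a CJK font), and the `Agg` backend so it runs without a display. `donut` is a hypothetical name.

```python
import io

import matplotlib
matplotlib.use('Agg')  # headless backend: render to files or buffers, no window
import matplotlib.pyplot as plt


def donut(counts, title, width=0.5):
    """Draw a donut chart from a {label: count} mapping; returns (fig, wedges)."""
    fig, ax = plt.subplots(figsize=(6, 6), dpi=100)
    wedges, _texts, _autotexts = ax.pie(
        list(counts.values()),
        labels=list(counts.keys()),
        startangle=90,     # first wedge starts at 12 o'clock
        autopct='%.1f%%',  # percentage label on each wedge
        pctdistance=0.75,  # percentages sit in the middle of the ring
        wedgeprops=dict(width=width, edgecolor='w'),  # ring width < radius -> donut
    )
    ax.set_title(title, fontsize=15)
    return fig, wedges


fig, wedges = donut({'5 stars': 6, '4 stars': 3, '3 stars': 1}, 'Rating share')
buf = io.BytesIO()
fig.savefig(buf, format='png')
plt.close(fig)
```

With a ring running from radius 0.5 to 1, `pctdistance=0.75` centers the percentages in the ring.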

The short reviews are segmented with jieba to generate a word cloud.
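The script joins all of jieba's tokens with spaces and passes the string to `WordCloud.generate()`. One alternative that makes the filtering explicit is to count the tokens yourself and call `WordCloud.generate_from_frequencies()`. In this sketch, `word_frequencies` and `render_word_cloud` are hypothetical helpers, the stopword list is a tiny placeholder, and `msyh.ttc` is the same font file the script assumes (any font with Chinese glyphs works). `cut` defaults to `jieba.lcut` but can be swapped for a plain splitter when testing.

```python
from collections import Counter

# Hypothetical, deliberately tiny stopword list; a real run would load a fuller one
STOPWORDS = {'的', '了', '是', '我', '也', '就', '都', '很', '还', '这个'}


def word_frequencies(texts, cut=None, stopwords=STOPWORDS):
    """Segment each review and count words, skipping stopwords and single characters."""
    if cut is None:
        import jieba  # jieba.lcut returns the segmented words as a list
        cut = jieba.lcut
    counts = Counter()
    for text in texts:
        for word in cut(text):
            word = word.strip()
            if len(word) > 1 and word not in stopwords:
                counts[word] += 1
    return counts


def render_word_cloud(freqs, out_path, font_path='msyh.ttc'):
    """Draw the word cloud from precomputed frequencies (needs a font with Chinese glyphs)."""
    from wordcloud import WordCloud
    wc = WordCloud(background_color='white', width=1280, height=720,
                   font_path=font_path, max_words=200, max_font_size=100)
    wc.generate_from_frequencies(freqs)
    wc.to_file(out_path)
```

With the script's CSV, a call could look like `render_word_cloud(word_frequencies(df['shorts'].dropna()), 'wordcloud.png')`.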

All data and images are saved in the current directory.

```python
# -*- coding: utf-8 -*-
# @Author : LuoXian
# @Date : 2020/2/11 22:07
# Software : PyCharm
# version: Python 3.8
# @File : demo.py

# Import the required libraries
import re
import time

import jieba  # jieba Chinese word segmentation
import matplotlib.pyplot as plt  # charts
import pandas as pd  # data analysis
import requests
import seaborn as sns
from wordcloud import WordCloud  # word cloud


# Class that scrapes and analyzes Douban short reviews
class DouBanshorts(object):
    headers = {
        # Adjacent string literals are joined into one User-Agent string
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
                      '(KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36',
    }

    def __init__(self):
        self.douban_type = input('Enter the type to fetch (movie/book): ').strip()
        self.douban_id = input('Enter the movie/book id: ').strip()
        print('\tStarting analysis of Douban short reviews\n-------------------------------\n')

    # Request a page and return its HTML
    @staticmethod
    def get_html(url):
        r = requests.get(url, headers=DouBanshorts.headers, timeout=10)
        r.raise_for_status()  # raise on 403/404 instead of parsing an error page
        r.encoding = 'utf-8'
        return r.text

    # Get the book/movie title
    def get_book_name(self):
        if self.douban_type == 'book':
            url = f'https://book.douban.com/subject/{self.douban_id}/comments/hot?p=1'
        else:
            url = (f'https://movie.douban.com/subject/{self.douban_id}/comments'
                   '?start=0&limit=20&sort=new_score&status=P')
        html = DouBanshorts.get_html(url)
        # The pattern expects a heading like "<h1>Title 短评</h1>" (短评 = short reviews)
        book_name = re.findall('<h1>(.*?) 短评</h1>', html)[0]
        return book_name

    # Extract the short reviews and their star ratings
    def parse_html(self, book_name):
        print(book_name)
        if self.douban_type == 'book':
            urls = [f'https://book.douban.com/subject/{self.douban_id}/comments/hot?p={i}'
                    for i in range(1, 100)]
        else:
            urls = [f'https://movie.douban.com/subject/{self.douban_id}/comments'
                    f'?start={i}&limit=20&sort=new_score&status=P' for i in range(0, 1000, 20)]
        shorts, stars = [], []
        # Compile the regular expressions once
        re_short = re.compile('<span class="short">(.*?)</span>', re.S)
        re_star = re.compile('rating" title="(.*?)"></span>')
        # Loop over the pages, collecting reviews and stars
        for count, url in enumerate(urls, start=1):
            print(f'Extracting reviews from page {count}...')
            try:
                html = DouBanshorts.get_html(url)
            except requests.RequestException as err:
                print(f'Request failed ({err}); keeping what was collected so far')
                break
            short = re_short.findall(html)
            eva = re_star.findall(html)
            # Stop at the first page with no reviews
            if not short:
                break
            print(short[0])
            # zip() pairs reviews and stars by position: if a review has no rating,
            # later stars shift onto the wrong reviews and the extra review is dropped
            for review, star in zip(short, eva):
                shorts.append(review)
                stars.append(star)
            time.sleep(3)  # 3-second pause between requests
        print('\nData extraction finished\n')
        self.pandas_data(book_name, stars, shorts)

    # Save the data as CSV with pandas
    def pandas_data(self, book_name, stars, shorts):
        data = pd.DataFrame({'stars': stars, 'shorts': shorts})
        # Overwrite (mode='w') so a re-run does not append a second header row
        data.to_csv(f'{book_name}.csv', index=False, encoding='utf-8', mode='w')

    # Draw a donut-style pie chart
    def pie(self, data=None, title=None, book_name='pie', length=6, height=6, dpi=100):
        sns.set_style('ticks')  # plotting style
        plt.rcParams['font.sans-serif'] = ['SimHei']  # show Chinese labels (needs the SimHei font)
        plt.rcParams['axes.unicode_minus'] = False  # keep '-' from rendering as a box in saved images
        fig, ax = plt.subplots(figsize=(length, height), dpi=dpi)
        size = 0.5
        labels = data.index
        ax.pie(data, labels=labels,
               startangle=90, autopct='%.1f%%', colors=sns.color_palette('husl', len(data)),
               radius=1,  # pie radius (default 1)
               pctdistance=0.75,  # position of the percentage labels
               wedgeprops=dict(width=size, edgecolor='w'),  # ring width and edge color (the donut)
               textprops=dict(fontsize=10))  # label font size
        ax.set_title(title, fontsize=15)
        plt.savefig(f'{book_name}_visualization.png')  # save the image
        plt.show()

    # Show the pie chart of star ratings
    def show_chart(self, book_name):
        df = pd.read_csv(f'{book_name}.csv')
        stars = df['stars'].value_counts()  # already sorted by count, descending
        print(stars)
        print('Generating pie chart...')
        stars_title = f'{book_name} Stars Show:'
        self.pie(stars, stars_title, book_name)

    # Generate the word cloud
    def show_words(self, book_name):
        print('Generating word cloud...')
        df = pd.read_csv(f'{book_name}.csv')
        # Also keep the raw reviews as a text file
        df['shorts'].to_csv(f'{book_name}.txt', encoding='utf-8', index=False, header=False)
        content = '\n'.join(df['shorts'].dropna().astype(str))
        words = jieba.lcut(content)  # segment the Chinese text into words
        all_words = ' '.join(words)
        # font_path must point to a font with Chinese glyphs (msyh.ttc = Microsoft YaHei)
        word_cloud = WordCloud(background_color='white', width=1280, height=720, font_path='msyh.ttc',
                               max_words=200, max_font_size=100).generate(all_words)
        word_cloud.to_file(f'{book_name}_wordcloud.png')

    # Fetch, save and visualize the data for the entered id
    def run(self):
        book_name = self.get_book_name()
        self.parse_html(book_name)
        self.show_chart(book_name)
        self.show_words(book_name)


# Instantiate and run
if __name__ == '__main__':
    douban = DouBanshorts()
    douban.run()
```
