Docker Basics, Part 6: Networking (4): Weave

weave

Installation (reaching git.io may require a proxy or VPN):

curl -L git.io/weave -o /usr/local/bin/weave

chmod a+x /usr/local/bin/weave

Run the following command on nas4:

weave launch --no-detect-tls

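This starts the Weave services. The Weave components run as containers.

The older Weave 1.8.2 ran three containers:

weave is the main program; it builds the Weave network, sends and receives data, and provides the DNS service.

weaveplugin is a libnetwork CNM driver that implements the Docker network.

weaveproxy provides a proxy for docker commands; when a user creates a container with the docker CLI, it automatically attaches the container to the Weave network.

Current version: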

[root@nas4 ~]# weave version

weave script 2.5.2

weave 2.5.2

List the Weave-related containers:

[root@nas4 ~]# docker ps -a

CONTAINER ID   IMAGE                        COMMAND                  CREATED       STATUS          PORTS   NAMES

e4833cab904b   weaveworks/weave:2.5.2       "/home/weave/weaver …"   5 hours ago   Up 24 minutes           weave

6399794ec9eb   weaveworks/weaveexec:2.5.2   "data-only"              5 hours ago   Created                 weavevolumes-2.5.2

6715af48c9d5   weaveworks/weavedb:latest    "data-only"              5 hours ago   Created                 weavedb

Weave 2.5.2 creates three containers, but only one of them (weave) is actually running. The process inside that container is:

/home/weave/weaver --port 6783 --nickname nas4 --host-root=/host --docker-bridge docker0 --weave-bridge weave --datapath datapath --ipalloc-range 10.32.0.0/12 --dns-listen-address 10.5.4.1:53 --http-addr 127.0.0.1:6784 --status-addr 127.0.0.1:6782 --resolv-conf /var/run/weave/etc/resolv.conf -H unix:///var/run/weave/weave.sock --plugin --proxy
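The flags on this command line carry most of the configuration discussed in the rest of this post (address range, DNS listen address, proxy socket). As a reading aid, here is a minimal Python sketch that splits the command line into a dictionary. The `parse_flags` helper is hypothetical and only handles the three flag shapes visible above (`--key value`, `--key=value`, and bare switches); it is not how weaver itself parses its options.

```python
import shlex

# The weaver command line copied from the process listing above.
CMDLINE = (
    "/home/weave/weaver --port 6783 --nickname nas4 --host-root=/host "
    "--docker-bridge docker0 --weave-bridge weave --datapath datapath "
    "--ipalloc-range 10.32.0.0/12 --dns-listen-address 10.5.4.1:53 "
    "--http-addr 127.0.0.1:6784 --status-addr 127.0.0.1:6782 "
    "--resolv-conf /var/run/weave/etc/resolv.conf "
    "-H unix:///var/run/weave/weave.sock --plugin --proxy"
)

def parse_flags(cmdline):
    """Hypothetical helper: map flag names to values (True for bare switches)."""
    tokens = shlex.split(cmdline)[1:]  # drop the program path
    flags = {}
    i = 0
    while i < len(tokens):
        name = tokens[i].lstrip("-")
        if "=" in name:
            # --key=value form
            key, value = name.split("=", 1)
            flags[key] = value
            i += 1
        elif i + 1 < len(tokens) and not tokens[i + 1].startswith("-"):
            # --key value form
            flags[name] = tokens[i + 1]
            i += 2
        else:
            # bare switch such as --plugin or --proxy
            flags[name] = True
            i += 1
    return flags

flags = parse_flags(CMDLINE)
for key in ("ipalloc-range", "dns-listen-address", "H", "plugin", "proxy"):
    print(key, "=", flags[key])
```

The `plugin` and `proxy` switches on the single weaver process suggest that the roles of the old weaveplugin and weaveproxy containers are now handled inside the weave container.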

Weave creates a new Docker network named weave:

[root@nas4 ~]# docker network ls

NETWORK ID     NAME     DRIVER      SCOPE

14d77facdfd3   bridge   bridge      local

d2847ceac9a3   host     host        local

11b44b36f998   none     null        local

26875ffedd52   weave    weavemesh   local

Its driver is weavemesh and its IP range is 10.32.0.0/12:

[root@nas4 ~]# docker network inspect weave

[

{

"Name": "weave",

"Id": "26875ffedd52eb7fcc03965f768170b57cb65c62a191126ce8d86ce10452009a",

"Created": "2019-08-26T11:49:46.656245729+08:00",

"Scope": "local",

"Driver": "weavemesh",

"EnableIPv6": false,

"IPAM": {

"Driver": "weavemesh",

"Options": null,

"Config": [

{

"Subnet": "10.32.0.0/12"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {},

"Options": {

"works.weave.multicast": "true"

},

"Labels": {}

}

]
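To get a feel for how large the 10.32.0.0/12 range is, the following small sketch uses Python's standard `ipaddress` module. It is only an illustration of the arithmetic behind the subnet reported by `docker network inspect`; nothing in it talks to Docker or Weave.

```python
import ipaddress

# Subnet taken from the "Subnet" field of the inspect output above.
weave_net = ipaddress.ip_network("10.32.0.0/12")

print(weave_net.network_address)    # first address of the range
print(weave_net.broadcast_address)  # last address of the range
print(weave_net.num_addresses)      # total number of addresses in a /12
```

The broadcast address computed here, 10.47.255.255, is the same `brd` value that appears on the containers' ethwe interfaces later in this post.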

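Before running containers:

[root@nas4 ~]# echo $(weave env)

export DOCKER_HOST=unix:///var/run/weave/weave.sock ORIG_DOCKER_HOST=

[root@nas4 ~]# eval $(weave env)

This directs all subsequent docker commands to the Weave Docker API proxy listening on /var/run/weave/weave.sock (the separate weaveproxy container in older releases; in 2.5.2 the weaver process in the weave container is started with --proxy, as the process listing above shows). To restore the original environment afterwards, run eval $(weave env --restore).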
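To make explicit what the `eval` step changes in the shell, here is a minimal Python sketch that parses the `weave env` output shown above into variable names and values. The `parse_env_exports` helper is hypothetical and exists only for illustration; the shell itself does this work when it evaluates the `export` statement.

```python
import shlex

def parse_env_exports(line):
    """Hypothetical helper: turn 'export A=1 B=' into {'A': '1', 'B': ''}."""
    tokens = shlex.split(line)
    if tokens and tokens[0] == "export":
        tokens = tokens[1:]
    env = {}
    for token in tokens:
        name, _, value = token.partition("=")
        env[name] = value
    return env

# Output of `weave env` as shown above.
weave_env = parse_env_exports(
    "export DOCKER_HOST=unix:///var/run/weave/weave.sock ORIG_DOCKER_HOST="
)
for name, value in weave_env.items():
    print(f"{name}={value!r}")
```

`DOCKER_HOST` now points at the Weave socket, while `ORIG_DOCKER_HOST` records the previous value (empty here, since none was set before).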

Run the first container on nas4:

[root@nas4 ~]# docker run --name bbox1 -itd busybox

4fe6729d8cb1c72e3aa382e560111721f1772147d6ab620228acc72664f8b966

Check the network configuration of bbox1:

[root@nas4 ~]# docker exec bbox1 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

14: eth0@if15: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:0a:05:04:02 brd ff:ff:ff:ff:ff:ff

inet 10.5.4.2/24 brd 10.5.4.255 scope global eth0

valid_lft forever preferred_lft forever

16: ethwe@if17: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1376 qdisc noqueue

link/ether 2a:6d:18:f4:d2:c1 brd ff:ff:ff:ff:ff:ff

inet 10.32.0.1/12 brd 10.47.255.255 scope global ethwe

valid_lft forever preferred_lft forever

bbox1 has two network interfaces, eth0 and ethwe.

First, eth0 is connected to the default bridge network, that is, the docker0 bridge:

[root@nas4 ~]# ip a |grep 15:

15: veth6df5fc7@if14: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

[root@nas4 ~]# brctl show docker0

bridge name   bridge id           STP enabled   interfaces

docker0       8000.0242877f9a3f   no            veth6df5fc7

Second, ethwe and vethwepl8554 form a veth pair, and vethwepl8554 is attached to the Linux bridge weave on nas4:

[root@nas4 ~]# ip a |grep 17:

17: vethwepl8554@if16: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1376 qdisc noqueue master weave state UP group default

[root@nas4 ~]# brctl show weave

bridge name   bridge id           STP enabled   interfaces

weave         8000.ba793b554f35   no            vethwe-bridge

                                                vethwepl8554
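The pairing is read off the interface indexes: `16: ethwe@if17` inside the container and `17: vethwepl8554@if16` on the host point at each other. The sketch below shows that check in Python. The helper names `parse_link` and `is_veth_pair` are hypothetical, and the regular expression only covers the `N: name@ifM:` form that `ip a` prints when the peer lives in another network namespace.

```python
import re

# Matches the start of an `ip a` line such as "16: ethwe@if17: <...>".
LINK_RE = re.compile(r"^(\d+):\s+([^@:\s]+)@if(\d+):")

def parse_link(line):
    """Return (ifindex, name, peer_ifindex) for one `ip a` header line."""
    match = LINK_RE.match(line.strip())
    if match is None:
        raise ValueError(f"not an 'N: name@ifM:' link line: {line!r}")
    return int(match.group(1)), match.group(2), int(match.group(3))

def is_veth_pair(line_a, line_b):
    """Two interfaces are a pair when each one names the other's index."""
    index_a, _, peer_a = parse_link(line_a)
    index_b, _, peer_b = parse_link(line_b)
    return index_a == peer_b and index_b == peer_a

# Header lines copied from the outputs above.
bbox1_ethwe = "16: ethwe@if17: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1376 qdisc noqueue"
bbox1_eth0 = "14: eth0@if15: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue"
host_vethwepl = "17: vethwepl8554@if16: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1376 qdisc noqueue master weave"

print(is_veth_pair(bbox1_ethwe, host_vethwepl))
print(is_veth_pair(bbox1_eth0, host_vethwepl))
```

The same index check pairs eth0 (14, peer 15) with veth6df5fc7 (15, peer 14) on docker0.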

The weave bridge also has vethwe-bridge attached:

[root@nas4 ~]# ip a |grep vethwe-bridge

11: vethwe-datapath@vethwe-bridge: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1376 qdisc noqueue master datapath state UP group default

12: vethwe-bridge@vethwe-datapath: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1376 qdisc noqueue master weave state UP group default

vethwe-bridge and vethwe-datapath are a veth pair.

The parent device (master) of vethwe-datapath is datapath:

[root@nas4 ~]# ip a |grep datapath

6: datapath: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1376 qdisc noqueue state UNKNOWN group default qlen 1000

11: vethwe-datapath@vethwe-bridge: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1376 qdisc noqueue master datapath state UP group default

12: vethwe-bridge@vethwe-datapath: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1376 qdisc noqueue master weave state UP group default

13: vxlan-6784: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65535 qdisc noqueue master datapath state UNKNOWN group default qlen 1000

datapath is an Open vSwitch device:

[root@nas4 ~]# ip -d link |grep -A 2 " datapath: "

6: datapath: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1376 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/ether 4e:2b:53:e0:4b:d6 brd ff:ff:ff:ff:ff:ff promiscuity 1

openvswitch addrgenmode eui64 numtxqueues 1 numrxqueues 1 gso_max_size 65536 gso_max_segs 65535

vxlan-6784 is a VXLAN interface whose master is also datapath. Weave hosts communicate with each other over VXLAN.

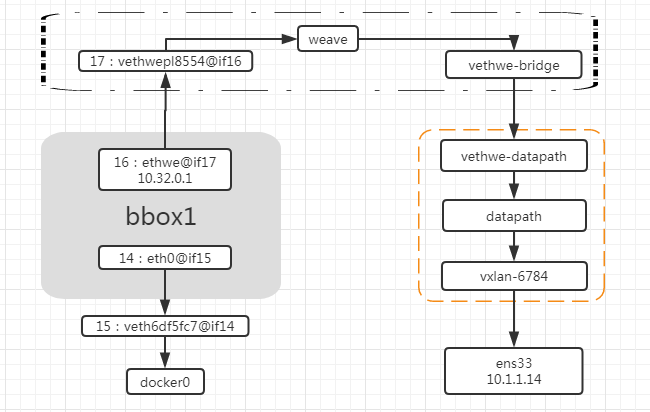

nas4的网络结构图如下:

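The Weave network contains two virtual switches: the Linux bridge weave and the Open vSwitch datapath.

The veth pair vethwe-bridge and vethwe-datapath connects the two. They have different jobs: weave attaches containers to the Weave network, while datapath sends and receives data through the VXLAN tunnels between hosts.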
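The chain of devices described so far can be written down as a small graph, which makes it easy to see the path a frame takes from a container to the VXLAN interface. The sketch below is only a model built from the `ip a` and `brctl show` outputs above; the node label `bbox1:ethwe` and the helper functions are made up for the illustration.

```python
from collections import deque

# Undirected links read off the command outputs above.
LINKS = [
    ("bbox1:ethwe", "vethwepl8554"),       # veth pair (container side, host side)
    ("vethwepl8554", "weave"),             # port on the Linux bridge weave
    ("weave", "vethwe-bridge"),            # port on the Linux bridge weave
    ("vethwe-bridge", "vethwe-datapath"),  # veth pair joining the two switches
    ("vethwe-datapath", "datapath"),       # port on the Open vSwitch datapath
    ("datapath", "vxlan-6784"),            # VXLAN port on datapath
]

def build_graph(links):
    """Build an adjacency map from a list of undirected links."""
    graph = {}
    for a, b in links:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph

def shortest_path(graph, start, goal):
    """Breadth-first search returning the list of devices from start to goal."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in sorted(graph.get(path[-1], ())):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None

path = shortest_path(build_graph(LINKS), "bbox1:ethwe", "vxlan-6784")
print(" -> ".join(path))
```

Every container added later contributes one more `ethwe`/`vethwepl...` pair hanging off weave; the part of the chain from weave to vxlan-6784 is shared.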

Run another container, bbox2:

docker run --name bbox2 -itd busybox

[root@nas4 ~]# docker exec -it bbox2 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

20: eth0@if21: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:0a:05:04:03 brd ff:ff:ff:ff:ff:ff

inet 10.5.4.3/24 brd 10.5.4.255 scope global eth0

valid_lft forever preferred_lft forever

22: ethwe@if23: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1376 qdisc noqueue

link/ether 9a:ad:bb:6a:32:b1 brd ff:ff:ff:ff:ff:ff

inet 10.32.0.2/12 brd 10.47.255.255 scope global ethwe

valid_lft forever preferred_lft forever

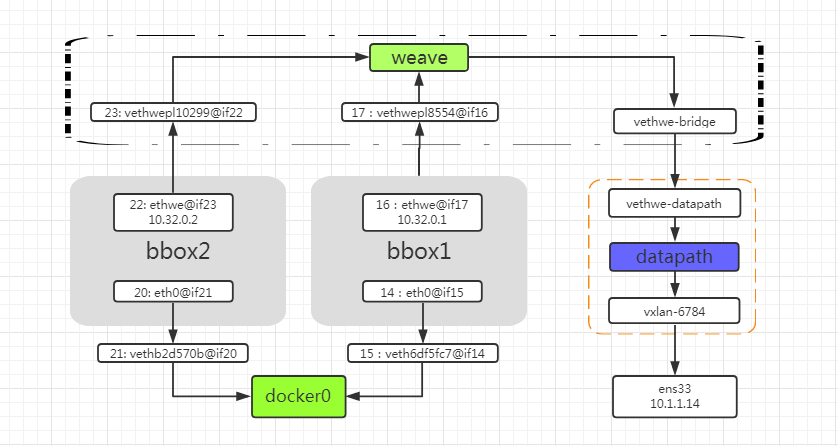

Check how the network has changed:

[root@nas4 ~]# brctl show docker0

bridge name   bridge id           STP enabled   interfaces

docker0       8000.0242877f9a3f   no            veth6df5fc7

                                                vethb2d570b

[root@nas4 ~]# ip a |grep 21:

21: vethb2d570b@if20: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

[root@nas4 ~]# ip a |grep 23:

23: vethwepl10299@if22: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1376 qdisc noqueue master weave state UP group default

Weave DNS registers containers under the default domain weave.local, so bbox1 can reach bbox2 directly by hostname:

[root@nas4 ~]# docker exec bbox1 hostname

bbox1

[root@nas4 ~]# docker exec bbox2 hostname

bbox2

[root@nas4 ~]# docker exec bbox1 ping -c 2 bbox2

PING bbox2 (10.32.0.2): 56 data bytes

64 bytes from 10.32.0.2: seq=0 ttl=64 time=0.181 ms

64 bytes from 10.32.0.2: seq=1 ttl=64 time=0.188 ms

--- bbox2 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.181/0.184/0.188 ms

The current network structure of nas4 is shown below:

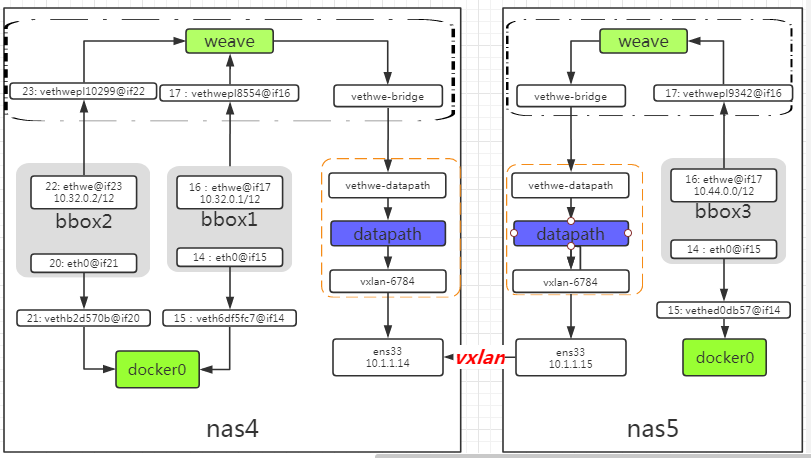

Deploying nas5 and analyzing Weave connectivity and isolation

weave launch 10.1.1.14 --no-detect-tls

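The IP of an existing peer (here 10.1.1.14, nas4) must be given when launching Weave on nas5; only then do the Weave networks on the two hosts join into a single network.

Run a container on nas5: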

[root@nas5 ~]# eval $(weave env)

[root@nas5 ~]# docker run -itd --name bbox3 busybox

991edd3726ea088bb9025550ce1569b860a7bac1cca44edb02081e9ab6a06c31

[root@nas5 ~]#

[root@nas5 ~]# docker exec bbox3 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

14: eth0@if15: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue

link/ether 02:42:0a:05:38:02 brd ff:ff:ff:ff:ff:ff

inet 10.5.56.2/24 brd 10.5.56.255 scope global eth0

valid_lft forever preferred_lft forever

16: ethwe@if17: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1376 qdisc noqueue

link/ether 56:b7:40:d7:3f:f5 brd ff:ff:ff:ff:ff:ff

inet 10.44.0.0/12 brd 10.47.255.255 scope global ethwe

valid_lft forever preferred_lft forever

[root@nas5 ~]# docker exec bbox3 ping -c 2 bbox1

PING bbox1 (10.32.0.1): 56 data bytes

64 bytes from 10.32.0.1: seq=0 ttl=64 time=7.822 ms

64 bytes from 10.32.0.1: seq=1 ttl=64 time=1.068 ms

--- bbox1 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 1.068/4.445/7.822 ms

[root@nas5 ~]# docker exec bbox3 ping -c 2 bbox2

PING bbox2 (10.32.0.2): 56 data bytes

64 bytes from 10.32.0.2: seq=0 ttl=64 time=8.946 ms

64 bytes from 10.32.0.2: seq=1 ttl=64 time=1.308 ms

--- bbox2 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 1.308/5.127/8.946 ms

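The IPs of bbox1, bbox2, and bbox3 are 10.32.0.1/12, 10.32.0.2/12, and 10.44.0.0/12. With a 12-bit mask, all three addresses belong to the same subnet, 10.32.0.0/12. Through the VXLAN tunnel between nas4 and nas5, the three containers are logically on the same LAN, so they can communicate directly. The traffic analysis that follows traces a ping from bbox3 to bbox1.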
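That 10.44.0.0/12 and 10.32.0.1/12 share a subnet is not obvious at a glance, so here is a quick check with Python's standard `ipaddress` module. It only uses the addresses from the `ip a` outputs above and is meant as an illustration of the mask arithmetic, nothing more.

```python
import ipaddress

# ethwe addresses as reported by `ip a` in each container.
containers = {
    "bbox1": "10.32.0.1/12",
    "bbox2": "10.32.0.2/12",
    "bbox3": "10.44.0.0/12",
}

# ip_interface keeps the host address and derives the enclosing network.
networks = {
    name: ipaddress.ip_interface(addr).network
    for name, addr in containers.items()
}

for name, net in networks.items():
    print(name, "->", net)

same_subnet = len(set(networks.values())) == 1
print("same subnet:", same_subnet)
```

A /12 mask fixes only the first 12 bits, so the second octet can range from 32 to 47 within 10.32.0.0/12, which is why 10.44.0.0 is an ordinary host address here.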

Traffic analysis:

1) The destination address of the packet is 10.32.0.1. According to the routing table of bbox3, the packet is sent out through ethwe.

[root@nas5 ~]# docker exec bbox3 route -n

Kernel IP routing table

Destination     Gateway         Genmask         Flags Metric Ref    Use Iface

0.0.0.0         10.5.56.1       0.0.0.0         UG    0      0        0 eth0

10.5.56.0       0.0.0.0         255.255.255.0   U     0      0        0 eth0

10.32.0.0       0.0.0.0         255.240.0.0     U     0      0        0 ethwe

224.0.0.0       0.0.0.0         240.0.0.0       U     0      0        0 ethwe

2) Weave on nas5 looks up the destination host and sends the data to nas4 over VXLAN.

3) nas4 receives the data and forwards it to bbox1 according to the destination IP.
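Step 1 relies on the kernel picking the most specific matching route. The following sketch reproduces that longest-prefix-match decision over the routing table of bbox3 in Python. The table is transcribed from the `route -n` output (netmasks converted to prefix lengths), and the `lookup` function is a simplified, hypothetical model that ignores metrics and policy routing.

```python
import ipaddress

# (destination prefix, gateway or None for on-link, interface), from `route -n` in bbox3.
BBOX3_ROUTES = [
    ("0.0.0.0/0", "10.5.56.1", "eth0"),
    ("10.5.56.0/24", None, "eth0"),
    ("10.32.0.0/12", None, "ethwe"),
    ("224.0.0.0/4", None, "ethwe"),
]

def lookup(routes, destination):
    """Return (gateway, interface) of the longest matching prefix, or None."""
    dst = ipaddress.ip_address(destination)
    best = None
    for prefix, gateway, iface in routes:
        net = ipaddress.ip_network(prefix)
        if dst in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, gateway, iface)
    if best is None:
        return None
    return best[1], best[2]

print(lookup(BBOX3_ROUTES, "10.32.0.1"))  # bbox1, reached on-link via ethwe
print(lookup(BBOX3_ROUTES, "8.8.8.8"))    # anything else uses the default route
```

Traffic for other Weave containers leaves on ethwe without a gateway, while everything else goes through docker0 via eth0.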

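Weave network isolation

With the default configuration, Weave uses a single subnet (10.32.0.0/12), and containers on all hosts are assigned IPs from this address space. Because they belong to the same subnet, the containers can communicate directly. To isolate containers from each other, use the WEAVE_CIDR environment variable to assign them IPs from different subnets.

Assign a container an IP from a different subnet (the -e option sets the environment variable):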

[root@nas5 ~]# docker run -e WEAVE_CIDR=net:10.32.2.0/24 -itd --name bbox4 busybox

f931ab9b44a2e449aeb0ac23145563d8bf00aed711b8f0a27664b061b36f9a07

[root@nas5 ~]# docker exec bbox4 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

18: eth0@if19: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue

link/ether 02:42:0a:05:38:03 brd ff:ff:ff:ff:ff:ff

inet 10.5.56.3/24 brd 10.5.56.255 scope global eth0

valid_lft forever preferred_lft forever

20: ethwe@if21: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1376 qdisc noqueue

link/ether 86:17:14:3b:6a:1d brd ff:ff:ff:ff:ff:ff

inet 10.32.2.128/24 brd 10.32.2.255 scope global ethwe

valid_lft forever preferred_lft forever

[root@nas5 ~]# docker exec bbox4 ping -c 2 bbox3

PING bbox3 (10.44.0.0): 56 data bytes

--- bbox3 ping statistics ---

2 packets transmitted, 0 packets received, 100% packet loss

[root@nas5 ~]# docker exec bbox4 route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 10.5.56.1 0.0.0.0 UG 0 0 0 eth0

10.5.56.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

10.32.2.0 0.0.0.0 255.255.255.0 U 0 0 0 ethwe

224.0.0.0 0.0.0.0 240.0.0.0 U 0 0 0 ethwe

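bbox3 and bbox4 are configured in different subnets, 10.32.0.0/12 and 10.32.2.0/24, so they cannot reach each other. The routing table of bbox4 sends only 10.32.2.0/24 out through ethwe; a packet for 10.44.0.0 (bbox3) matches only the default route and leaves through eth0 instead of the Weave network.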
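The two ranges overlap numerically, so what actually separates the containers is the mask each one was given. The sketch below checks, from each container's point of view, whether the other address counts as on-link. It is a simplified model based only on the addresses above (the `on_link` helper is hypothetical), and Weave may enforce isolation by other means as well.

```python
import ipaddress

bbox3 = ipaddress.ip_interface("10.44.0.0/12")    # default Weave subnet
bbox4 = ipaddress.ip_interface("10.32.2.128/24")  # assigned via WEAVE_CIDR=net:10.32.2.0/24

def on_link(source, target):
    """True if target's address falls inside the subnet configured on source."""
    return target.ip in source.network

print("ranges overlap:", bbox4.network.overlaps(bbox3.network))
print("bbox4 sees bbox3 on-link:", on_link(bbox4, bbox3))
print("bbox3 sees bbox4 on-link:", on_link(bbox3, bbox4))
```

From bbox4 the address 10.44.0.0 is off-link, which matches the ping above failing with 100% packet loss.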

Assign a specific IP to a container:

[root@nas5 ~]# docker run -e WEAVE_CIDR=ip:10.32.6.6/24 -itd --name=bbox5 busybox

97d6b68b91434c5b3735d19312cc7719573b2f4d9ebe2a7a366b6e271440f308

[root@nas5 ~]# docker exec bbox5 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

22: eth0@if23: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue

link/ether 02:42:0a:05:38:04 brd ff:ff:ff:ff:ff:ff

inet 10.5.56.4/24 brd 10.5.56.255 scope global eth0

valid_lft forever preferred_lft forever

24: ethwe@if25: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1376 qdisc noqueue

link/ether 46:65:07:93:9a:93 brd ff:ff:ff:ff:ff:ff

inet 10.32.6.6/24 brd 10.32.6.255 scope global ethwe

valid_lft forever preferred_lft forever
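The two WEAVE_CIDR forms used above differ in what they ask for: `net:` names a subnet and lets Weave pick an address in it, while `ip:` pins the exact address. The following Python sketch parses just these two forms to make the difference concrete. `parse_weave_cidr` is a hypothetical helper, and it deliberately rejects anything other than the two forms shown in this post; Weave itself may accept more.

```python
import ipaddress

def parse_weave_cidr(value):
    """Hypothetical parser for the 'net:<cidr>' and 'ip:<addr>/<len>' forms."""
    kind, sep, rest = value.partition(":")
    if not sep or kind not in ("net", "ip"):
        raise ValueError(f"unsupported WEAVE_CIDR form: {value!r}")
    if kind == "net":
        # A whole subnet; the concrete address is chosen by the allocator.
        return kind, ipaddress.ip_network(rest)
    # A fixed address together with its prefix length.
    return kind, ipaddress.ip_interface(rest)

kind4, net4 = parse_weave_cidr("net:10.32.2.0/24")
kind5, if5 = parse_weave_cidr("ip:10.32.6.6/24")

# bbox4 received 10.32.2.128, which lies inside the requested subnet.
print(kind4, net4, ipaddress.ip_address("10.32.2.128") in net4)
# bbox5 received exactly the requested address.
print(kind5, if5.ip, if5.network)
```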

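Connectivity between Weave and external networks

Weave is a private VXLAN network and is isolated from external networks by default. The weave bridge on the host has no IPv4 address: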

[root@nas4 ~]# ip a show weave

8: weave: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1376 qdisc noqueue state UP group default qlen 1000

link/ether ba:79:3b:55:4f:35 brd ff:ff:ff:ff:ff:ff

inet6 fe80::b879:3bff:fe55:4f35/64 scope link

valid_lft forever preferred_lft forever

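How can external networks reach the containers in the Weave network?

Add the host to the Weave network, then use the host as the gateway into the Weave network.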

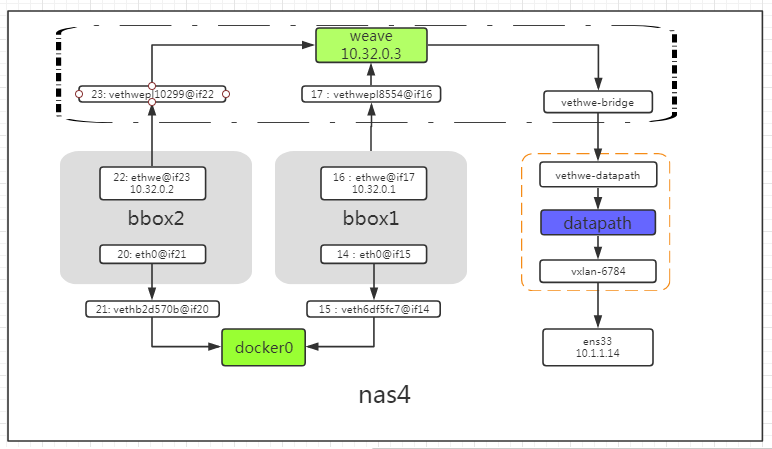

[root@nas4 ~]# weave expose 10.32.0.3

The IP 10.32.0.3 is configured on the weave bridge of nas4:

[root@nas4 ~]# ip a show weave

8: weave: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1376 qdisc noqueue state UP group default qlen 1000

link/ether ba:79:3b:55:4f:35 brd ff:ff:ff:ff:ff:ff

inet 10.32.0.3/12 brd 10.47.255.255 scope global weave

valid_lft forever preferred_lft forever

inet6 fe80::b879:3bff:fe55:4f35/64 scope link

valid_lft forever preferred_lft forever

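The network structure of nas4 is now as follows.

The weave bridge lives in the root namespace and is responsible for attaching containers to the Weave network. Giving weave an IP in the same subnet effectively attaches nas4 itself to the Weave network. nas4 can now communicate directly with containers in the same Weave network, whether or not they run on nas4.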

Test: from nas4, ping bbox1 on the same host:

[root@nas4 ~]# ping -c 2 10.32.0.1

PING 10.32.0.1 (10.32.0.1) 56(84) bytes of data.

64 bytes from 10.32.0.1: icmp_seq=1 ttl=64 time=0.271 ms

64 bytes from 10.32.0.1: icmp_seq=2 ttl=64 time=0.095 ms

--- 10.32.0.1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1000ms

rtt min/avg/max/mdev = 0.095/0.183/0.271/0.088 ms

Ping bbox3 on nas5:

[root@nas4 ~]# ping -c 2 10.44.0.0

PING 10.44.0.0 (10.44.0.0) 56(84) bytes of data.

64 bytes from 10.44.0.0: icmp_seq=1 ttl=64 time=8.23 ms

64 bytes from 10.44.0.0: icmp_seq=2 ttl=64 time=0.895 ms

--- 10.44.0.0 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1002ms

rtt min/avg/max/mdev = 0.895/4.567/8.239/3.672 ms

For other hosts to reach bbox1 and bbox3, add a route for 10.32.0.0/12 whose gateway is the exposed Weave host (10.1.1.14 in the nas5 example below):

[root@nas5 ~]# route add -net 10.32.0.0 netmask 255.240.0.0 gw 10.1.1.14

[root@nas5 ~]# route -n

Kernel IP routing table

Destination     Gateway         Genmask         Flags Metric Ref    Use Iface

0.0.0.0         192.168.200.1   0.0.0.0         UG    0      0        0 ens37

10.1.1.0        0.0.0.0         255.255.255.0   U     0      0        0 ens33

10.5.56.0       0.0.0.0         255.255.255.0   U     0      0        0 docker0

10.32.0.0       10.1.1.14       255.240.0.0     UG    0      0        0 ens33

169.254.0.0     0.0.0.0         255.255.0.0     U     1002   0        0 ens33

169.254.0.0     0.0.0.0         255.255.0.0     U     1003   0        0 ens37

192.168.200.0   0.0.0.0         255.255.255.0   U     0      0        0 ens37

[root@node12 ~]# ip route

default via 192.168.2.1 dev ens33 proto dhcp metric 100

10.32.0.0/12 via 192.168.2.215 dev ens33

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1

192.168.2.0/24 dev ens33 proto kernel scope link src 192.168.2.102 metric 100

[root@nas5 ~]# ping -c 2 10.32.0.1

PING 10.32.0.1 (10.32.0.1) 56(84) bytes of data.

64 bytes from 10.32.0.1: icmp_seq=1 ttl=63 time=2.23 ms

64 bytes from 10.32.0.1: icmp_seq=2 ttl=63 time=0.631 ms

--- 10.32.0.1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1002ms

rtt min/avg/max/mdev = 0.631/1.431/2.232/0.801 ms

[root@nas5 ~]# ping -c 2 10.44.0.0

PING 10.44.0.0 (10.44.0.0) 56(84) bytes of data.

64 bytes from 10.44.0.0: icmp_seq=1 ttl=63 time=5.01 ms

64 bytes from 10.44.0.0: icmp_seq=2 ttl=63 time=2.07 ms

--- 10.44.0.0 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1002ms

rtt min/avg/max/mdev = 2.074/3.545/5.016/1.471 ms
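The `route add` command writes the Weave range as a network plus dotted netmask, while `ip route` on node12 shows the same range in prefix notation. The short sketch below confirms with Python's `ipaddress` module that the two are the same network and that both container addresses are covered by the route. It is purely an arithmetic illustration.

```python
import ipaddress

# Same arguments as `route add -net 10.32.0.0 netmask 255.240.0.0`.
route = ipaddress.ip_network("10.32.0.0/255.240.0.0")
print(route.with_prefixlen)

# bbox1 and bbox3 addresses pinged above.
for target in ("10.32.0.1", "10.44.0.0"):
    print(target, "covered:", ipaddress.ip_address(target) in route)
```

The replies above arrive with ttl=63 rather than the ttl=64 seen from nas4 itself, which is consistent with the packets being routed through one extra hop, the gateway.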

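Adding the route above enables traffic from the external network into Weave. What about the opposite direction?

Each container is also attached to the default bridge network through eth0, and docker0 already provides NAT, so containers can reach external networks that way.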

IPAM

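10.32.0.0/12 is the default subnet used by the Weave network. If this address space conflicts with existing IPs, a different subnet can be assigned with --ipalloc-range:

weave launch --ipalloc-range 10.2.0.0/16

Make sure that all hosts use the same subnet.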
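Before choosing a value for `--ipalloc-range`, it helps to check the candidate against the networks a host already uses. Here is a minimal sketch of such a check in Python. The `conflicts` helper is hypothetical, the host networks are taken from the `route -n` output of nas5 earlier in this post, and the 10.0.0.0/8 candidate is an invented example of a bad choice.

```python
import ipaddress

# Directly connected networks of nas5, from its `route -n` output.
HOST_NETWORKS = ["10.1.1.0/24", "10.5.56.0/24", "192.168.200.0/24"]

def conflicts(candidate, existing):
    """Return the existing networks that overlap the candidate range."""
    cand = ipaddress.ip_network(candidate)
    return [net for net in existing if cand.overlaps(ipaddress.ip_network(net))]

print(conflicts("10.32.0.0/12", HOST_NETWORKS))  # Weave default
print(conflicts("10.2.0.0/16", HOST_NETWORKS))   # range from the command above
print(conflicts("10.0.0.0/8", HOST_NETWORKS))    # invented example that collides
```

An empty list means the candidate range does not collide with any listed network on that host; the same check would need to be repeated with each host's own networks.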

