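Reading notes on "Kubernetes进阶实战": the ReplicaSet controller

I. About Pod controllers

1. The problem with bare Pods

What happens when a Pod object is deleted by accident, or when the worker node it runs on fails?

kubelet is the node agent of a Kubernetes cluster, and one instance of it runs on every worker node. When a worker node fails, its kubelet becomes unavailable as well, so the liveness of the Pods on that node generally has to be guaranteed by a Pod controller that lives outside the worker node. In fact, restoring a Pod that was deleted by accident also depends on its controller.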

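2. Overview of Pod controllers

kube-apiserver: only stores resources in etcd and notifies the relevant client programs of changes to them, such as kubelet, kube-controller-manager, kube-scheduler and kube-proxy.

kube-scheduler: when it observes a Pod object that is not yet bound to a node, the scheduler picks a suitable worker node for it. However, one of the core functions of Kubernetes is to make the current state of each resource object match the state the user expects, managing applications by continuously "reconciling" the current state toward the desired state. That work is the job of kube-controller-manager.

kube-controller-manager: a standalone, monolithic daemon that nevertheless contains many controller types with different functions, each used for a different kind of reconciliation task.

Once created as a concrete controller object, each controller continuously monitors the current state of its resource objects through the interface provided by the apiserver. When a failure, an update or some other cause changes the state of the system, the controller tries to move the current state of the resource toward the desired state.

In short, each controller object runs a reconciliation loop that is responsible for reconciling state, and writes the current state of the target resource object into its status field. The figure below shows the controller's reconciliation loop.

List-Watch is one of the core mechanisms implemented by Kubernetes. When the state of a resource object changes, the apiserver writes the change to etcd and actively notifies the relevant client programs through a level-triggered mechanism, to ensure that they do not miss any event.

A controller uses the watch interface of the apiserver to monitor changes to its target resource objects in real time and to carry out reconciliation. It does not interact with other controllers in any way, and controllers are not even aware of each other's existence.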
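The reconciliation loop described above can be sketched as a small toy model. This is only an illustration in Python, not how kube-controller-manager is implemented: `Resource`, `reconcile` and `handle_events` are hypothetical names, and an integer stands in for the real cluster state.

```python
from dataclasses import dataclass, field


@dataclass
class Resource:
    name: str
    desired: int                  # spec: what the user asked for
    actual: int = 0               # stand-in for the real state of the cluster
    status: dict = field(default_factory=dict)


def reconcile(res: Resource) -> bool:
    """Take one step toward the desired state; return True once converged."""
    if res.actual < res.desired:
        res.actual += 1
    elif res.actual > res.desired:
        res.actual -= 1
    res.status["observed"] = res.actual   # report the current state in status
    return res.actual == res.desired


def handle_events(events, store):
    """Process watch notifications; each one only names the object that changed."""
    for name in events:
        res = store[name]         # re-read the current state instead of trusting the event
        while not reconcile(res):
            pass
```

Because each notification only triggers a fresh comparison of current and desired state, a duplicated notification is harmless in this model: the second pass finds nothing left to do.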

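3. Controllers and Pod objects

A Pod controller resource continuously monitors the Pod objects running in the cluster to make sure the resources it manages strictly match the state the user expects.

For example, the number of replicas must match the expected number. In general, a Pod controller resource contains at least three basic parts:

1. Label selector: matches and associates Pod objects, and is the basis for counting the Pods under the controller's management.

2. Desired replica count: the exact number of Pod objects expected to be running across the whole cluster.

3. Pod template: the Pod template resource used to create new Pod objects.

4. Pod template resources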

II. The ReplicaSet controller

1. ReplicaSet overview

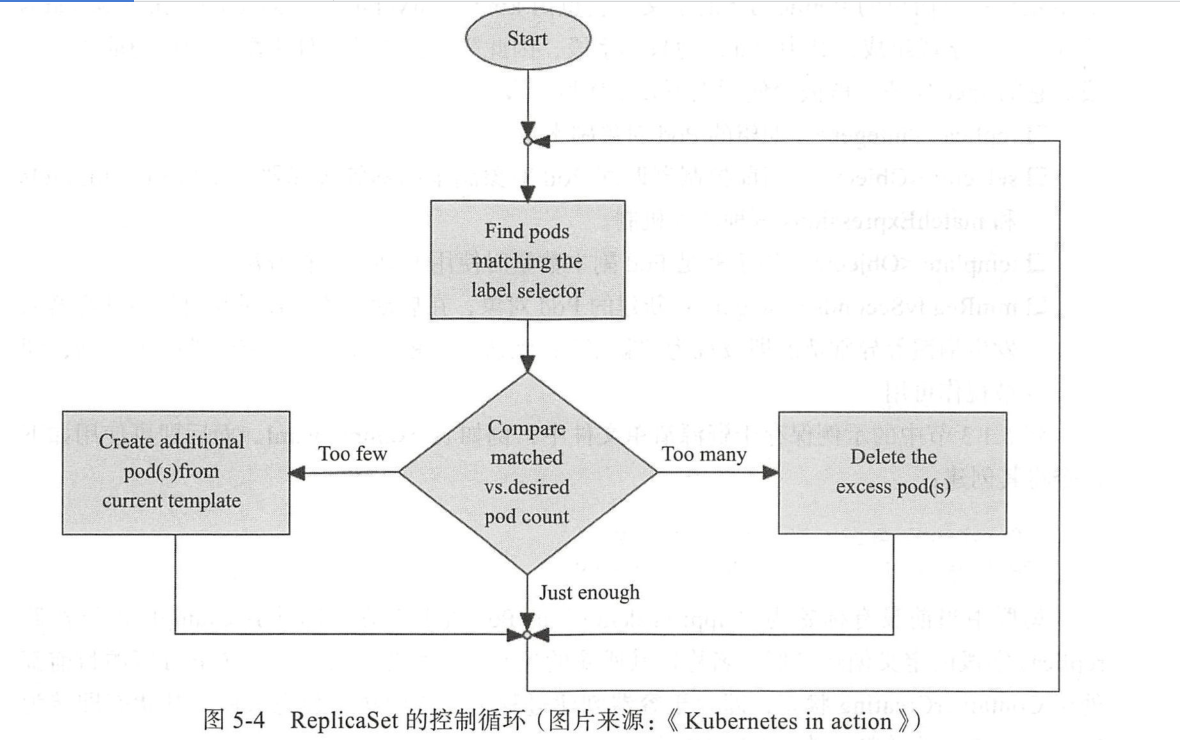

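A ReplicaSet ensures that the number of Pod replicas under its control exactly matches the desired number at any moment.

Once started, a ReplicaSet controller resource looks up the Pod objects in the cluster that match its label selector. If the number of currently active objects differs from the desired number, it deletes the surplus or creates the missing ones from the Pod template.

When the number of Pod replicas matches the desired value, it moves on to the next round of the reconciliation loop.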
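One pass of that comparison can be sketched as follows. This is a toy model under stated assumptions, not the real controller: `Pod`, `ReplicaSet`, `matches` and `reconcile` are hypothetical names, only `matchLabels`-style selectors are handled, new Pods get a simple counter suffix instead of a random one, and the choice of which surplus Pod to drop is arbitrary.

```python
import itertools
from dataclasses import dataclass

_suffix = itertools.count(1)      # hypothetical name generator for new Pods


@dataclass
class Pod:
    name: str
    labels: dict


@dataclass
class ReplicaSet:
    name: str
    replicas: int                 # desired replica count
    selector: dict                # matchLabels-style selector
    template_labels: dict         # labels stamped on Pods built from the template


def matches(selector: dict, labels: dict) -> bool:
    """True when every selector key is present in labels with the same value."""
    return all(labels.get(k) == v for k, v in selector.items())


def reconcile(rs: ReplicaSet, pods: list) -> list:
    """Return the Pod list after one reconciliation pass."""
    owned = [p for p in pods if matches(rs.selector, p.labels)]
    others = [p for p in pods if not matches(rs.selector, p.labels)]
    diff = rs.replicas - len(owned)
    if diff > 0:
        # Too few: create the missing Pods from the template.
        for _ in range(diff):
            owned.append(Pod(f"{rs.name}-{next(_suffix)}", dict(rs.template_labels)))
    elif diff < 0:
        # Too many: drop the surplus (which ones is an arbitrary choice here).
        owned = owned[:rs.replicas]
    return others + owned
```

Pods that do not match the selector pass through untouched, which mirrors the point that a controller only counts and manages what its selector matches.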

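Changes to the Pod template only affect Pod replicas created afterwards. Compared with creating and managing Pod resources by hand, a ReplicaSet provides the following:

Ensures the number of Pod objects exactly reflects the desired value: a ReplicaSet must make sure that the number of Pod replicas it runs exactly matches the desired value defined in its configuration; otherwise it automatically creates the missing ones or terminates the surplus.

Ensures Pods run healthily: when it detects that a Pod under its control has become unavailable because its worker node failed, it automatically asks the scheduler to create the missing Pod replica on another worker node.

Elastic scaling: business load often fluctuates noticeably for various reasons; during peaks or troughs, the number of related Pod objects can be adjusted dynamically through the ReplicaSet controller.

In addition, when necessary, the HPA controller can be used to scale Pod resources automatically.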

III. Creating a ReplicaSet

1. Meaning of the rs.spec fields

    [root@master ~]# kubectl explain rs.spec
    KIND:     ReplicaSet
    VERSION:  apps/v1

    RESOURCE: spec <Object>

    DESCRIPTION:
         Spec defines the specification of the desired behavior of the ReplicaSet.
         More info:
         https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

         ReplicaSetSpec is the specification of a ReplicaSet.

    FIELDS:
       minReadySeconds <integer>    # how long a new Pod must run after starting, without container crashes or similar failures, before it counts as available
         Minimum number of seconds for which a newly created pod should be ready
         without any of its container crashing, for it to be considered available.
         Defaults to 0 (pod will be considered available as soon as it is ready)

       replicas <integer>    # desired number of Pod replicas
         Replicas is the number of desired replicas. This is a pointer to
         distinguish between explicit zero and unspecified. Defaults to 1. More
         info:
         https://kubernetes.io/docs/concepts/workloads/controllers/replicationcontroller/#what-is-a-replicationcontroller

       selector <Object> -required-    # label selector this controller uses to match Pod replicas; supports both matchLabels and matchExpressions
         Selector is a label query over pods that should match the replica count.
         Label keys and values that must match in order to be controlled by this
         replica set. It must match the pod template's labels. More info:
         https://kubernetes.io/docs/concepts/overview/working-with-objects/labels/#label-selectors

       template <Object>    # Pod template used when the replica count has to be topped up
         Template is the object that describes the pod that will be created if
         insufficient replicas are detected. More info:
         https://kubernetes.io/docs/concepts/workloads/controllers/replicationcontroller#pod-template
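The two matching mechanisms mentioned for `selector` can be illustrated with a small matcher. It is a sketch of the documented semantics written as plain Python over dictionaries, not the code Kubernetes runs; `selector_matches` is a hypothetical helper. It assumes the four set-based operators `In`, `NotIn`, `Exists` and `DoesNotExist`, with all requirements combined by logical AND.

```python
def selector_matches(selector: dict, labels: dict) -> bool:
    """Evaluate a selector with matchLabels and/or matchExpressions against labels."""
    # matchLabels: every key must be present with exactly the given value.
    for key, value in selector.get("matchLabels", {}).items():
        if labels.get(key) != value:
            return False

    # matchExpressions: every expression must hold.
    for expr in selector.get("matchExpressions", []):
        key, op = expr["key"], expr["operator"]
        values = expr.get("values", [])
        if op == "In":
            ok = key in labels and labels[key] in values
        elif op == "NotIn":
            ok = key not in labels or labels[key] not in values
        elif op == "Exists":
            ok = key in labels
        elif op == "DoesNotExist":
            ok = key not in labels
        else:
            raise ValueError(f"unknown operator: {op}")
        if not ok:
            return False
    return True
```

Note that a label set to the empty string still exists as a key, so it satisfies `Exists` but not a `matchLabels` entry that expects a non-empty value.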

2. The rs manifest

    [root@master chapter5]# cat rs-example.yaml
    apiVersion: apps/v1
    kind: ReplicaSet
    metadata:
      name: myapp-rs
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: myapp-pod
      template:
        metadata:
          labels:
            app: myapp-pod
        spec:
          containers:
          - name: myapp
            image: ikubernetes/myapp:v1
            ports:
            - name: http
              containerPort: 80
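The field description above says the selector "must match the pod template's labels". A quick way to sanity-check a manifest before applying it is sketched below. The manifest is written as a Python dictionary that mirrors rs-example.yaml so the snippet needs no YAML library; `template_satisfies_selector` is a hypothetical helper and only looks at `matchLabels`.

```python
manifest = {
    "apiVersion": "apps/v1",
    "kind": "ReplicaSet",
    "metadata": {"name": "myapp-rs"},
    "spec": {
        "replicas": 2,
        "selector": {"matchLabels": {"app": "myapp-pod"}},
        "template": {
            "metadata": {"labels": {"app": "myapp-pod"}},
            "spec": {
                "containers": [
                    {
                        "name": "myapp",
                        "image": "ikubernetes/myapp:v1",
                        "ports": [{"name": "http", "containerPort": 80}],
                    }
                ]
            },
        },
    },
}


def template_satisfies_selector(doc: dict) -> bool:
    """Check that Pods built from the template would be selected by the ReplicaSet."""
    spec = doc["spec"]
    wanted = spec["selector"].get("matchLabels", {})
    labels = spec["template"]["metadata"].get("labels", {})
    return all(labels.get(key) == value for key, value in wanted.items())
```

If the check fails, Pods created from the template would never be counted by the controller that created them, which is why the mismatch is worth catching early.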

3. Create and run

    [root@master chapter5]# kubectl apply -f rs-example.yaml
    replicaset.apps/myapp-rs created

4. Verify the result

    [root@master chapter5]# kubectl get rs
    NAME       DESIRED   CURRENT   READY   AGE
    myapp-rs   2         2         2       118s

    [root@master chapter5]# kubectl get rs -o wide
    NAME       DESIRED   CURRENT   READY   AGE     CONTAINERS   IMAGES                 SELECTOR
    myapp-rs   2         2         2       2m48s   myapp        ikubernetes/myapp:v1   app=myapp-pod

    [root@master chapter5]# kubectl get pods -l app=myapp-pod
    NAME             READY   STATUS    RESTARTS   AGE
    myapp-rs-qdn78   1/1     Running   0          110s
    myapp-rs-x5d58   1/1     Running   0          110s

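IV. Pod objects under ReplicaSet control

In practice, however, there are quite a few ways for the number of Pod objects to drift away from the desired value:

1. Changes to Pod labels: an existing Pod no longer matches the controller's label selector, or the labels of an outside Pod change so that it now matches the controller's label selector.

2. Changes to the controller's label selector, or even worker node failures.

The controller's reconciliation loop detects such anomalies as they occur and starts reconciliation promptly.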

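1. Missing replicas

1. Manual deletion

Whatever the reason a related Pod object is lost, the ReplicaSet controller replaces it automatically. For example, manually delete one of the Pod objects listed above with the following commands: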

    [root@master ~]# kubectl get pods -l app=myapp-pod -o wide
    NAME             READY   STATUS    RESTARTS   AGE     IP                NODE    NOMINATED NODE   READINESS GATES
    myapp-rs-fkmsl   1/1     Running   0          3m13s   192.168.166.147   node1   <none>           <none>
    myapp-rs-rqdqh   1/1     Running   0          2m36s   192.168.166.148   node1   <none>           <none>

    [root@master ~]# kubectl delete pods myapp-rs-fkmsl
    pod "myapp-rs-fkmsl" deleted

Watch the replacement Pod being created:

    [root@master ~]# kubectl get pods -l app=myapp-pod -o wide -w
    NAME             READY   STATUS              RESTARTS   AGE     IP                NODE     NOMINATED NODE   READINESS GATES
    myapp-rs-fkmsl   1/1     Running             0          3m49s   192.168.166.147   node1    <none>           <none>
    myapp-rs-rqdqh   1/1     Running             0          3m12s   192.168.166.148   node1    <none>           <none>
    myapp-rs-fkmsl   1/1     Terminating         0          4m5s    192.168.166.147   node1    <none>           <none>
    myapp-rs-ktns6   0/1     Pending             0          0s      <none>            <none>   <none>           <none>
    myapp-rs-ktns6   0/1     Pending             0          0s      <none>            node2    <none>           <none>
    myapp-rs-ktns6   0/1     ContainerCreating   0          0s      <none>            node2    <none>           <none>
    myapp-rs-fkmsl   0/1     Terminating         0          4m6s    192.168.166.147   node1    <none>           <none>
    myapp-rs-ktns6   0/1     ContainerCreating   0          1s      <none>            node2    <none>           <none>
    myapp-rs-ktns6   1/1     Running             0          2s      192.168.104.14    node2    <none>           <none>
    myapp-rs-fkmsl   0/1     Terminating         0          4m10s   192.168.166.147   node1    <none>           <none>
    myapp-rs-fkmsl   0/1     Terminating         0          4m10s   192.168.166.147   node1    <none>           <none>

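2. Forcibly changing the label of a Pod that belongs to the controller myapp-rs (defined in rs-example.yaml) also triggers the replace-the-missing mechanism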

    [root@master ~]# kubectl get pods -l app=myapp-pod -o wide
    NAME             READY   STATUS    RESTARTS   AGE     IP                NODE    NOMINATED NODE   READINESS GATES
    myapp-rs-ktns6   1/1     Running   0          90s     192.168.104.14    node2   <none>           <none>
    myapp-rs-rqdqh   1/1     Running   0          4m58s   192.168.166.148   node1   <none>           <none>

    [root@master ~]# kubectl label pods myapp-rs-ktns6 app= --overwrite
    pod/myapp-rs-ktns6 labeled

    [root@master ~]# kubectl get pods -l app=myapp-pod -o wide -w
    NAME             READY   STATUS              RESTARTS   AGE     IP                NODE     NOMINATED NODE   READINESS GATES
    myapp-rs-ktns6   1/1     Running             0          98s     192.168.104.14    node2    <none>           <none>
    myapp-rs-rqdqh   1/1     Running             0          5m6s    192.168.166.148   node1    <none>           <none>
    myapp-rs-ktns6   1/1     Running             0          2m39s   192.168.104.14    node2    <none>           <none>
    myapp-rs-mbgxz   0/1     Pending             0          0s      <none>            <none>   <none>           <none>
    myapp-rs-mbgxz   0/1     Pending             0          0s      <none>            node1    <none>           <none>
    myapp-rs-mbgxz   0/1     ContainerCreating   0          0s      <none>            node1    <none>           <none>
    myapp-rs-mbgxz   0/1     ContainerCreating   0          1s      <none>            node1    <none>           <none>
    myapp-rs-mbgxz   1/1     Running             0          2s      192.168.166.149   node1    <none>           <none>

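2. Surplus Pod replicas

As soon as the number of Pods matched by the label selector exceeds the desired value for any reason, the controller automatically deletes the surplus.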

    [root@master ~]# kubectl get pods -l app=myapp-pod -o wide
    NAME             READY   STATUS    RESTARTS   AGE    IP                NODE    NOMINATED NODE   READINESS GATES
    myapp-rs-mbgxz   1/1     Running   0          4m4s   192.168.166.149   node1   <none>           <none>
    myapp-rs-rqdqh   1/1     Running   0          10m    192.168.166.148   node1   <none>           <none>

    [root@master ~]# kubectl label pods liveness-exec app=myapp-pod
    pod/liveness-exec labeled

    [root@master ~]# kubectl get pods -l app=myapp-pod -o wide -w
    NAME             READY   STATUS        RESTARTS   AGE    IP                NODE    NOMINATED NODE   READINESS GATES
    myapp-rs-mbgxz   1/1     Running       0          4m4s   192.168.166.149   node1   <none>           <none>
    myapp-rs-rqdqh   1/1     Running       0          10m    192.168.166.148   node1   <none>           <none>
    liveness-exec    1/1     Running       946        3d     192.168.104.9     node2   <none>           <none>
    liveness-exec    1/1     Running       946        3d     192.168.104.9     node2   <none>           <none>
    liveness-exec    1/1     Terminating   946        3d     192.168.104.9     node2   <none>           <none>
    liveness-exec    0/1     Terminating   946        3d     <none>            node2   <none>           <none>
    liveness-exec    0/1     Terminating   946        3d     <none>            node2   <none>           <none>
    liveness-exec    0/1     Terminating   946        3d     <none>            node2   <none>           <none>
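Both label scenarios in this section come down to the controller re-evaluating which Pods belong to it. The sketch below models that step only. It is a simplification under stated assumptions: the `owner` field stands in for the Pod's owner reference, `sync_ownership` is a hypothetical name, and creating or deleting Pods afterwards is left out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Pod:
    name: str
    labels: dict
    owner: Optional[str] = None   # name of the controlling ReplicaSet, if any


def sync_ownership(rs_name: str, selector: dict, pods: list) -> list:
    """Release owned Pods that stopped matching; adopt matching Pods with no owner."""
    for pod in pods:
        is_match = all(pod.labels.get(k) == v for k, v in selector.items())
        if pod.owner == rs_name and not is_match:
            pod.owner = None          # released: keeps running, no longer counted
        elif pod.owner is None and is_match:
            pod.owner = rs_name       # adopted: now counts toward the replica total
    return [p for p in pods if p.owner == rs_name]
```

With the Pods from the transcripts, relabelling `myapp-rs-ktns6` to `app=` releases it (so one replica is missing), while labelling `liveness-exec` with `app=myapp-pod` gets it adopted (so one replica is surplus).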

3. View the events related to Pod changes

    [root@master ~]# kubectl describe replicasets/myapp-rs
    Name:         myapp-rs
    Namespace:    default
    Selector:     app=myapp-pod
    Labels:       <none>
    ......
    Events:
      Type    Reason            Age   From                   Message
      ----    ------            ----  ----                   -------
      Normal  SuccessfulCreate  23m   replicaset-controller  Created pod: myapp-rs-wcjmv
      Normal  SuccessfulCreate  22m   replicaset-controller  Created pod: myapp-rs-fkmsl
      Normal  SuccessfulCreate  22m   replicaset-controller  Created pod: myapp-rs-rqdqh
      Normal  SuccessfulCreate  18m   replicaset-controller  Created pod: myapp-rs-ktns6
      Normal  SuccessfulCreate  16m   replicaset-controller  Created pod: myapp-rs-mbgxz
      Normal  SuccessfulDelete  11m   replicaset-controller  Deleted pod: liveness-exec

V. Updating the ReplicaSet controller

1. Update the Pod template to upgrade the application

    [root@master chapter5]# cat rs-example.yaml|grep image
            image: ikubernetes/myapp:v2

    [root@master chapter5]# kubectl replace -f rs-example.yaml
    replicaset.apps/myapp-rs replaced

    [root@master chapter5]# kubectl get pods -l app=myapp-pod -o \
    custom-columns=Name:metadata.name,Image:spec.containers[0].image
    Name             Image
    myapp-rs-mbgxz   ikubernetes/myapp:v1
    myapp-rs-rqdqh   ikubernetes/myapp:v1

2. Update by manual deletion (delete all Pod replicas managed by myapp-rs at once)

    [root@master chapter5]# kubectl delete pods -l app=myapp-pod
    pod "myapp-rs-mbgxz" deleted
    pod "myapp-rs-rqdqh" deleted

    [root@master chapter5]# kubectl get pods -l app=myapp-pod -o \
    custom-columns=Name:metadata.name,Image:spec.containers[0].image
    Name             Image
    myapp-rs-l42sm   ikubernetes/myapp:v2
    myapp-rs-wg6ws   ikubernetes/myapp:v2

    [root@master ~]# kubectl get pods -l app=myapp-pod -o wide -w
    NAME             READY   STATUS        RESTARTS   AGE    IP                NODE    NOMINATED NODE   READINESS GATES
    myapp-rs-mbgxz   1/1     Running       0          4m4s   192.168.166.149   node1   <none>           <none>
    myapp-rs-rqdqh   1/1     Running       0          10m    192.168.166.148   node1   <none>           <none>
    ......
    myapp-rs-mbgxz   0/1     Terminating   0          32m    192.168.166.149   node1   <none>           <none>
    myapp-rs-rqdqh   0/1     Terminating   0          38m    <none>            node1   <none>           <none>
    myapp-rs-rqdqh   0/1     Terminating   0          38m    <none>            node1   <none>           <none>
    myapp-rs-mbgxz   0/1     Terminating   0          32m    192.168.166.149   node1   <none>           <none>
    myapp-rs-mbgxz   0/1     Terminating   0          32m    192.168.166.149   node1   <none>           <none>
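The two transcripts above show that replacing the template leaves the running v1 Pods alone, and that v2 only appears once those Pods are deleted and recreated. A toy model makes the reason visible: the template is consulted only at creation time. `ToyReplicaSet` and its methods are hypothetical names used for illustration, and Pod names use a simple counter.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    image: str


class ToyReplicaSet:
    def __init__(self, replicas: int, image: str):
        self.replicas = replicas
        self.image = image            # the only part of the template modelled here
        self.pods = []
        self._created = 0
        self.reconcile()

    def reconcile(self) -> None:
        """Create Pods from the current template until the count is met."""
        while len(self.pods) < self.replicas:
            self._created += 1
            self.pods.append(Pod(f"pod-{self._created}", self.image))

    def delete_all_pods(self) -> None:
        """Model 'kubectl delete pods -l ...' followed by the controller's reaction."""
        self.pods.clear()
        self.reconcile()
```

Changing `image` and calling `reconcile()` does nothing while the replica count is already satisfied; only `delete_all_pods()` forces new Pods to be built from the updated template.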

3. Scaling up and down

1. Scale up

    [root@master chapter5]# kubectl scale replicasets myapp-rs --replicas=5
    replicaset.apps/myapp-rs scaled

    [root@master chapter5]# kubectl get rs myapp-rs
    NAME       DESIRED   CURRENT   READY   AGE
    myapp-rs   5         5         2       47h

2. Scale down

    [root@master chapter5]# kubectl scale replicasets myapp-rs --replicas=3
    replicaset.apps/myapp-rs scaled

    [root@master chapter5]# kubectl get rs myapp-rs
    NAME       DESIRED   CURRENT   READY   AGE
    myapp-rs   3         3         3       47h

    [root@master chapter5]# kubectl scale replicasets myapp-rs --current-replicas=2 --replicas=4
    error: Expected replicas to be 2, was 3
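The last command fails because `--current-replicas` acts as a precondition: the scale is applied only if the current replica count equals the stated value. The sketch below imitates that check on a plain dictionary. `scale` and `PreconditionError` are hypothetical names, and the error text is copied from the transcript rather than taken from kubectl's source.

```python
from typing import Optional


class PreconditionError(Exception):
    """Raised when the observed replica count differs from the expected one."""


def scale(state: dict, replicas: int, current_replicas: Optional[int] = None) -> dict:
    """Return a new state with the replica count changed, honouring the precondition."""
    if current_replicas is not None and state["replicas"] != current_replicas:
        raise PreconditionError(
            f"Expected replicas to be {current_replicas}, was {state['replicas']}"
        )
    return {**state, "replicas": replicas}
```

A guard like this is useful when several people or scripts may scale the same controller: a change based on stale information is refused instead of silently overriding someone else's update.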

4. Deleting the ReplicaSet controller resource

    [root@master chapter5]# kubectl delete replicasets myapp-rs --cascade=false
    replicaset.apps "myapp-rs" deleted
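With `--cascade=false` the ReplicaSet is removed but its Pods are left running without an owner; newer kubectl releases spell the same behaviour `--cascade=orphan`. The difference between the two deletion modes can be sketched as follows. This is a toy model over dictionaries: `delete_replicaset` is a hypothetical helper and the `owner` key stands in for the Pod's owner reference.

```python
def delete_replicaset(rs_name: str, pods: list, orphan: bool) -> list:
    """Return the Pods that remain after deleting the named ReplicaSet.

    pods is a list of dicts with 'name' and 'owner' keys.
    """
    if orphan:
        # Keep the Pods, but clear the ownership link to the deleted controller.
        return [{**p, "owner": None} if p["owner"] == rs_name else p for p in pods]
    # Cascading delete: Pods owned by the controller go away with it.
    return [p for p in pods if p["owner"] != rs_name]
```

Orphaned Pods keep their labels, so a ReplicaSet created later with a matching selector could pick them up again.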
