Reading Notes on Kubernetes进阶实战 (Advanced Kubernetes in Practice): Service Basics, Discovery, and Exposure

I. Basic use of the Service resource

1. Service resource manifests

```yaml
# myapp-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deploy
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp
        image: ikubernetes/myapp:v1
        ports:
        - containerPort: 80
          name: http
---
# myapp-svc.yaml
kind: Service
apiVersion: v1
metadata:
  name: myapp-svc
spec:
  selector:
    app: myapp
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
```
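The Service finds its backends through `spec.selector`: a Pod is selected when every key/value pair in the selector also appears in the Pod's labels. The following is a minimal Python sketch of that equality-based matching, assuming Pods are represented as plain dicts of labels; the Pod names and extra labels are hypothetical, and this is not the endpoints controller's actual code.

```python
from typing import Dict, List


def selector_matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """Equality-based match: every selector key must exist with the same value.

    Note: an empty selector matches everything in this sketch; a real Service
    without a selector is handled differently (no Endpoints are generated).
    """
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(selector: Dict[str, str], pods: Dict[str, Dict[str, str]]) -> List[str]:
    """Return the sorted names of the pods whose labels satisfy the selector."""
    return sorted(name for name, labels in pods.items() if selector_matches(selector, labels))


if __name__ == "__main__":
    # Hypothetical pods: only the first two carry app=myapp.
    pods = {
        "myapp-deploy-a": {"app": "myapp", "pod-template-hash": "5cbd66595b"},
        "myapp-deploy-b": {"app": "myapp", "pod-template-hash": "5cbd66595b"},
        "busybox": {"run": "busybox"},
    }
    print(select_pods({"app": "myapp"}, pods))
```

Extra labels on a Pod (such as `pod-template-hash`) do not prevent a match; only the selector's own keys are compared.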

2. Create the resources

```
[root@master chapter5]# kubectl apply -f myapp-deploy.yaml
deployment.apps/myapp-deploy created
[root@master chapter6]# kubectl apply -f myapp-svc.yaml
service/myapp-svc created
```

3. Verify

```
[root@master chapter6]# kubectl get svc
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP   18d
myapp-svc    ClusterIP   10.111.104.25   <none>        80/TCP    6s
[root@master chapter6]# kubectl get endpoints myapp-svc
NAME        ENDPOINTS                                       AGE
myapp-svc   10.244.0.72:80,10.244.0.73:80,10.244.2.154:80   71s
```

4. Send requests to the Service object

```
[root@master ~]# kubectl exec -it busybox sh
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl kubectl exec [POD] -- [COMMAND] instead.
/home # curl http://10.111.104.25:80
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
/home # for loop in 1 2 3 4;do curl http://10.111.104.25:80/hostname.html;done
/home # for loop in `seq 10`;do curl http://10.111.104.25:80/hostname.html;done
myapp-deploy-5cbd66595b-7s94h
myapp-deploy-5cbd66595b-fzgcr
myapp-deploy-5cbd66595b-fzgcr
myapp-deploy-5cbd66595b-fzgcr
myapp-deploy-5cbd66595b-fzgcr
myapp-deploy-5cbd66595b-fzgcr
myapp-deploy-5cbd66595b-fzgcr
myapp-deploy-5cbd66595b-nlpxq
myapp-deploy-5cbd66595b-nlpxq
myapp-deploy-5cbd66595b-nlpxq
```
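The uneven spread in the ten responses (one Pod answered once, another six times) is what random selection looks like over a small sample. Assuming kube-proxy runs in iptables mode, the rules for a Service with n endpoints match the first endpoint with probability 1/n, the next with 1/(n-1), and so on, with the last rule matching unconditionally; overall each endpoint gets an equal share. The Python sketch below mimics that cascade as a simplified illustration (IPVS mode instead uses its own scheduler, round robin by default).

```python
import random
from collections import Counter
from typing import List


def cascade_probabilities(n: int) -> List[float]:
    """Per-rule match probabilities: 1/n, 1/(n-1), ..., and 1.0 for the last rule."""
    return [1.0 / (n - i) for i in range(n)]


def pick_endpoint(endpoints: List[str], rng: random.Random) -> str:
    """Walk the rules in order; each rule 'matches' with its own probability."""
    for endpoint, p in zip(endpoints, cascade_probabilities(len(endpoints))):
        if rng.random() < p:
            return endpoint
    return endpoints[-1]  # not reached: the last probability is 1.0


if __name__ == "__main__":
    endpoints = ["10.244.0.72:80", "10.244.0.73:80", "10.244.2.154:80"]
    rng = random.Random(0)
    # Ten requests, as in the curl loop: the counts are usually uneven.
    print(Counter(pick_endpoint(endpoints, rng) for _ in range(10)))
```

For three endpoints the overall chances are 1/3, (2/3)(1/2) = 1/3 and (2/3)(1/2)(1) = 1/3, so the distribution only evens out over many requests.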

II. Session affinity

1. Meaning of the sessionAffinity field

```
[root@master chapter6]# kubectl explain svc.spec.sessionAffinity
KIND:     Service
VERSION:  v1

FIELD:    sessionAffinity <string>

DESCRIPTION:
     Supports "ClientIP" and "None". Used to maintain session affinity. Enable
     client IP based session affinity. Must be ClientIP or None. Defaults to
     None. More info:
     https://kubernetes.io/docs/concepts/services-networking/service/#virtual-ips-and-service-proxies
```

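None: session affinity is not used. This is the default.

ClientIP: identifies clients by their source IP address and keeps sending requests from the same source IP to the same Pod, until the affinity entry times out (`sessionAffinityConfig.clientIP.timeoutSeconds`, 10800 seconds by default).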
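A toy Python model of ClientIP affinity: the first request from a client IP picks a backend, and later requests reuse it while the entry is fresh. The class and method names are hypothetical; time is passed in explicitly, the initial choice is delegated to a caller-supplied picker, and the timer is assumed to be refreshed on every request. It illustrates the behaviour, not kube-proxy's implementation.

```python
from typing import Callable, Dict, List, Tuple


class ClientIPAffinity:
    """Toy model of Service sessionAffinity: ClientIP (not kube-proxy's code)."""

    def __init__(self, backends: List[str], pick: Callable[[List[str]], str],
                 timeout_seconds: float = 10800.0) -> None:
        self.backends = backends
        self.pick = pick                 # used only when no live entry exists
        self.timeout = timeout_seconds   # mirrors the 10800-second default
        self.table: Dict[str, Tuple[str, float]] = {}  # client IP -> (backend, last seen)

    def route(self, client_ip: str, now: float) -> str:
        entry = self.table.get(client_ip)
        if entry is not None and now - entry[1] <= self.timeout:
            backend = entry[0]                   # sticky: reuse the remembered backend
        else:
            backend = self.pick(self.backends)   # new or expired: choose afresh
        self.table[client_ip] = (backend, now)   # refresh the timer on every request
        return backend


if __name__ == "__main__":
    import random

    aff = ClientIPAffinity(["pod-a", "pod-b", "pod-c"], random.choice)
    # Ten requests from one client within the timeout: the set holds a single backend.
    print({aff.route("10.244.0.90", t) for t in range(10)})
```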

2. Switch the existing myapp-svc to session affinity

```
[root@master chapter6]# kubectl patch service myapp-svc -p '{"spec": {"sessionAffinity": "ClientIP"}}'
service/myapp-svc patched
```

3. Verify the session affinity

```
[root@master chapter6]# kubectl exec -it busybox sh
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl kubectl exec [POD] -- [COMMAND] instead.
/home # for loop in 1 2 3 4;do curl http://10.111.104.25:80/hostname.html;done
myapp-deploy-5cbd66595b-nlpxq
myapp-deploy-5cbd66595b-nlpxq
myapp-deploy-5cbd66595b-nlpxq
myapp-deploy-5cbd66595b-nlpxq
/home # for loop in `seq 10`;do curl http://10.111.104.25:80/hostname.html;done
myapp-deploy-5cbd66595b-nlpxq
myapp-deploy-5cbd66595b-nlpxq
myapp-deploy-5cbd66595b-nlpxq
myapp-deploy-5cbd66595b-nlpxq
myapp-deploy-5cbd66595b-nlpxq
myapp-deploy-5cbd66595b-nlpxq
myapp-deploy-5cbd66595b-nlpxq
myapp-deploy-5cbd66595b-nlpxq
myapp-deploy-5cbd66595b-nlpxq
myapp-deploy-5cbd66595b-nlpxq
```

III. Service discovery

1. Service discovery via environment variables

1. Kubernetes Service environment variables

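For every Service, Kubernetes generates a set of environment variables, including ones of the following form. Pods created in the same namespace (after the Service exists) automatically receive these variables.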

```
{SVCNAME}_SERVICE_HOST
{SVCNAME}_SERVICE_PORT
```

SVCNAME is the Service name in upper case; if it contains hyphens, Kubernetes converts them to underscores when defining the variables.
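The naming rule can be expressed as a small Python function for a single-port Service. This is a minimal sketch; the function name is hypothetical, and the example values (10.98.57.156, port 80) are taken from the `printenv` output in this section.

```python
from typing import Dict


def service_env_vars(service_name: str, cluster_ip: str, port: int) -> Dict[str, str]:
    """Build the {SVCNAME}_SERVICE_HOST / {SVCNAME}_SERVICE_PORT pair (single-port Service)."""
    prefix = service_name.upper().replace("-", "_")  # myapp-svc -> MYAPP_SVC
    return {
        f"{prefix}_SERVICE_HOST": cluster_ip,
        f"{prefix}_SERVICE_PORT": str(port),
    }


if __name__ == "__main__":
    print(service_env_vars("myapp-svc", "10.98.57.156", 80))
    # {'MYAPP_SVC_SERVICE_HOST': '10.98.57.156', 'MYAPP_SVC_SERVICE_PORT': '80'}
```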

2. Docker-link-style environment variables

```
/ # printenv | grep MYAPP
MYAPP_SVC_PORT_80_TCP_ADDR=10.98.57.156
MYAPP_SVC_PORT_80_TCP_PORT=80
MYAPP_SVC_PORT_80_TCP_PROTO=tcp
MYAPP_SVC_PORT_80_TCP=tcp://10.98.57.156:80
MYAPP_SVC_SERVICE_HOST=10.98.57.156
MYAPP_SVC_PORT=tcp://10.98.57.156:80
MYAPP_SVC_SERVICE_PORT=80
```
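The seven `MYAPP_SVC_*` variables above follow a fixed pattern built from the Service name, port number, and protocol. The Python sketch below reproduces them for a single-port TCP Service; it illustrates the pattern seen in this output and is not kubelet source code, and the function name is hypothetical.

```python
from typing import Dict


def docker_link_env_vars(service_name: str, ip: str, port: int, proto: str = "tcp") -> Dict[str, str]:
    """Docker-link-compatible variables for one Service port, plus the SERVICE_* pair."""
    prefix = service_name.upper().replace("-", "_")      # MYAPP_SVC
    url = f"{proto}://{ip}:{port}"                        # tcp://10.98.57.156:80
    port_prefix = f"{prefix}_PORT_{port}_{proto.upper()}"  # MYAPP_SVC_PORT_80_TCP
    return {
        f"{prefix}_SERVICE_HOST": ip,
        f"{prefix}_SERVICE_PORT": str(port),
        f"{prefix}_PORT": url,
        port_prefix: url,
        f"{port_prefix}_PROTO": proto,
        f"{port_prefix}_PORT": str(port),
        f"{port_prefix}_ADDR": ip,
    }


if __name__ == "__main__":
    for key, value in sorted(docker_link_env_vars("myapp-svc", "10.98.57.156", 80).items()):
        print(f"{key}={value}")
```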

2. ClusterDNS and service discovery

Log excerpt from this cluster, in which queries sent from 10.244.0.82 to the upstream DNS servers 218.30.19.40 and 8.8.8.8 on port 53 time out:

```
2020-07-30T02:08:45.27078922Z  alertmanager-main-1.alertmanager-operated. A: read udp 10.244.0.82:39751->218.30.19.40:53: i/o timeout
2020-07-30T02:08:46.290351841Z alertmanager-main-2.alertmanager-operated. AAAA: read udp 10.244.0.82:39147->218.30.19.40:53: i/o timeout
2020-07-30T02:08:47.040067334Z alertmanager-main-1.alertmanager-operated. A: read udp 10.244.0.82:55091->218.30.19.40:53: i/o timeout
2020-07-30T02:08:47.04012648Z  alertmanager-main-1.alertmanager-operated. AAAA: read udp 10.244.0.82:50521->218.30.19.40:53: i/o timeout
2020-07-30T02:08:47.273682812Z alertmanager-main-1.alertmanager-operated. A: read udp 10.244.0.82:59715->218.30.19.40:53: i/o timeout
2020-07-30T02:08:47.291473366Z alertmanager-main-1.alertmanager-operated. ANY: dial tcp 218.30.19.40:53: i/o timeout
2020-07-30T02:08:49.278965657Z alertmanager-main-2.alertmanager-operated. A: read udp 10.244.0.82:56171->218.30.19.40:53: i/o timeout
2020-07-30T02:08:49.295755243Z alertmanager-main-1.alertmanager-operated. AAAA: read udp 10.244.0.82:47290->218.30.19.40:53: i/o timeout
2020-07-30T02:08:51.05192443Z  alertmanager-main-2.alertmanager-operated. A: read udp 10.244.0.82:46048->218.30.19.40:53: i/o timeout
2020-07-30T02:08:51.297235485Z alertmanager-main-1.alertmanager-operated. AAAA: read udp 10.244.0.82:55961->218.30.19.40:53: i/o timeout
2020-07-30T02:08:53.053631874Z alertmanager-main-2.alertmanager-operated. A: read udp 10.244.0.82:47002->218.30.19.40:53: i/o timeout
2020-07-30T02:08:53.303883147Z alertmanager-main-2.alertmanager-operated. AAAA: read udp 10.244.0.82:41021->218.30.19.40:53: i/o timeout
2020-07-30T02:08:55.305017334Z alertmanager-main-2.alertmanager-operated. AAAA: read udp 10.244.0.82:58909->218.30.19.40:53: i/o timeout
2020-07-30T02:08:57.312243213Z alertmanager-main-1.alertmanager-operated. AAAA: read udp 10.244.0.82:37589->218.30.19.40:53: i/o timeout
2020-07-30T02:08:59.314302823Z alertmanager-main-1.alertmanager-operated. AAAA: read udp 10.244.0.82:40354->218.30.19.40:53: i/o timeout
2020-07-30T02:09:12.972172135Z alertmanager-main-2.alertmanager-operated. ANY: dial tcp 8.8.8.8:53: i/o timeout
2020-07-30T02:09:13.175673423Z alertmanager-main-1.alertmanager-operated. ANY: dial tcp 8.8.8.8:53: i/o timeout
2020-07-30T02:10:56.443739327Z common-service-weave-scope.weave-scope.svc. AAAA: read udp 10.244.0.82:38018->218.30.19.40:53: i/o timeout
2020-07-30T02:13:06.47535098Z  common-service-weave-scope.weave-scope.svc. AAAA: read udp 10.244.0.82:50209->218.30.19.40:53: i/o timeout
2020-07-30T02:13:06.475834378Z common-service-weave-scope.weave-scope.svc. A: read udp 10.244.0.82:37913->218.30.19.40:53: i/o timeout
2020-07-30T02:13:11.475643832Z common-service-weave-scope.weave-scope.svc. AAAA: read udp 10.244.0.82:40603->218.30.19.40:53: i/o timeout
2020-07-30T02:15:56.563127798Z common-service-weave-scope.weave-scope.svc. A: read udp 10.244.0.82:55579->218.30.19.40:53: i/o timeout
2020-07-30T02:17:50.49961549Z  grafana.com. A: read udp 10.244.0.82:32968->218.30.19.40:53: i/o timeout
2020-07-30T02:17:55.879321934Z raw.githubusercontent.com. AAAA: read udp 10.244.0.82:37557->8.8.8.8:53: i/o timeout
2020-07-30T02:17:58.879968186Z raw.githubusercontent.com. AAAA: read udp 10.244.0.82:56239->218.30.19.40:53: i/o timeout
2020-07-30T02:18:41.588908206Z common-service-weave-scope.weave-scope.svc. A: read udp 10.244.0.82:55239->218.30.19.40:53: i/o timeout
2020-07-30T02:18:46.588852056Z common-service-weave-scope.weave-scope.svc. AAAA: read udp 10.244.0.82:37380->218.30.19.40:53: i/o timeout
2020-07-30T02:18:52.583971405Z 1.0.244.10.in-addr.arpa. PTR: read udp 10.244.0.82:54808->218.30.19.40:53: i/o timeout
2020-07-30T02:20:56.628149749Z common-service-weave-scope.weave-scope.svc. AAAA: read udp 10.244.0.82:47436->218.30.19.40:53: i/o timeout
2020-07-30T02:21:36.631153451Z common-service-weave-scope.weave-scope.svc. A: read udp 10.244.0.82:46755->218.30.19.40:53: i/o timeout
2020-07-30T02:21:41.63077241Z  common-service-weave-scope.weave-scope.svc. AAAA: read udp 10.244.0.82:46023->218.30.19.40:53: i/o timeout
2020-07-30T02:21:41.63119581Z  common-service-weave-scope.weave-scope.svc. A: read udp 10.244.0.82:56997->218.30.19.40:53: i/o timeout
2020-07-30T02:22:41.64384947Z  common-service-weave-scope.weave-scope.svc. A: read udp 10.244.0.82:40320->218.30.19.40:53: i/o timeout
2020-07-30T02:23:16.654371006Z common-service-weave-scope.weave-scope.svc. AAAA: read udp 10.244.0.82:55737->218.30.19.40:53: i/o timeout
2020-07-30T02:23:21.652466109Z common-service-weave-scope.weave-scope.svc. A: read udp 10.244.0.82:38601->218.30.19.40:53: i/o timeout
2020-07-30T02:24:18.520335799Z 109.1.244.10.in-addr.arpa. PTR: read udp 10.244.0.82:32915->218.30.19.40:53: i/o timeout
```

3. Service discovery via DNS

```
/home # cat /etc/resolv.conf
nameserver 10.96.0.10
search default.svc.cluster.local svc.cluster.local cluster.local
options ndots:5
/home # nslookup myapp-svc.default
Server:    10.96.0.10
Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name:      myapp-svc.default
Address 1: 10.111.104.25 myapp-svc.default.svc.cluster.local
/home # nslookup prometheus-operator.monitoring
Server:    10.96.0.10
Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name:      prometheus-operator.monitoring
Address 1: 10.244.1.16 10-244-1-16.prometheus-operator.monitoring.svc.cluster.local
/home # nslookup kube-state-metrics.monitoring
Server:    10.96.0.10
Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name:      kube-state-metrics.monitoring
Address 1: 10.244.1.17 10-244-1-17.kube-state-metrics.monitoring.svc.cluster.local
```
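The `search` and `ndots:5` lines explain why a short name such as `myapp-svc.default` resolves: it has fewer than five dots, so the resolver first tries it with each search domain appended, and the second candidate matches the Service record `<service>.<namespace>.svc.cluster.local`. The Python sketch below lists the candidates in order; it is a simplified model of resolv.conf(5) search/ndots semantics, and real resolver libraries (glibc, musl) differ in details.

```python
from typing import List


def candidate_names(name: str, search: List[str], ndots: int = 5) -> List[str]:
    """Order in which a resolver following resolv.conf(5) semantics tries a name.

    - A trailing dot marks the name as fully qualified: no search expansion.
    - Fewer than `ndots` dots: search domains first, then the name as-is.
    - Otherwise: the name as-is first, then the search domains.
    """
    if name.endswith("."):
        return [name]
    expanded = [f"{name}.{domain}." for domain in search]
    absolute = f"{name}."
    if name.count(".") < ndots:
        return expanded + [absolute]
    return [absolute] + expanded


if __name__ == "__main__":
    search = ["default.svc.cluster.local", "svc.cluster.local", "cluster.local"]
    for fqdn in candidate_names("myapp-svc.default", search):
        print(fqdn)
    # myapp-svc.default.default.svc.cluster.local.
    # myapp-svc.default.svc.cluster.local.   <- matches the Service A record
    # myapp-svc.default.cluster.local.
    # myapp-svc.default.
```

The same rule is why `ndots:5` can cost several extra queries for external names; writing a trailing dot (for example `www.kubernetes.io.`) skips the expansion.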

IV. The four Service types

1、ClusterIP

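Exposes the Service on a cluster-internal IP address. This address is reachable only from inside the cluster and cannot be accessed by clients outside it, as shown in Figure 6-8. ClusterIP is the default Service type.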

```
[root@master chapter6]# cat myapp-svc.yaml
kind: Service
apiVersion: v1
metadata:
  name: myapp-svc
spec:
  selector:
    app: myapp
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
[root@master chapter6]# kubectl apply -f myapp-svc.yaml
service/myapp-svc unchanged
[root@master chapter6]# kubectl get svc myapp-svc
NAME        TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
myapp-svc   ClusterIP   10.111.104.25   <none>        80/TCP    2d4h
```

2、NodePort

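Built on top of ClusterIP: it exposes the Service on a static port of every node's IP address. It therefore still allocates a cluster IP address for the Service and uses it as the routing target of the NodePort.

In short, a NodePort Service selects a port on the worker nodes' IP addresses and uses it to forward requests from users outside the cluster to the target Service's ClusterIP and port.

As a result, a Service of this type can be accessed by client Pods inside the cluster just like a ClusterIP Service, and also by clients outside the cluster through the socket <NodeIP>:<NodePort>.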
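A nodePort must fall inside the API server's node-port range (30000-32767 unless kube-apiserver's `--service-node-port-range` is changed), and the same port is opened on every node. The Python sketch below checks that rule and lists the `<NodeIP>:<NodePort>` sockets external clients could use; the node IPs are hypothetical placeholders and the function name is illustrative.

```python
from typing import List

# Default value of kube-apiserver --service-node-port-range (inclusive bounds).
NODE_PORT_MIN, NODE_PORT_MAX = 30000, 32767


def node_port_addresses(node_ips: List[str], node_port: int) -> List[str]:
    """Return the <NodeIP>:<NodePort> sockets through which the Service is reachable."""
    if not NODE_PORT_MIN <= node_port <= NODE_PORT_MAX:
        raise ValueError(f"nodePort {node_port} outside {NODE_PORT_MIN}-{NODE_PORT_MAX}")
    return [f"{ip}:{node_port}" for ip in node_ips]


if __name__ == "__main__":
    # Hypothetical node addresses: every node accepts traffic on the same port.
    nodes = ["192.168.0.11", "192.168.0.12", "192.168.0.13"]
    print(node_port_addresses(nodes, 30008))
```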

1. Create and verify the resource:

```
[root@master chapter6]# cat myapp-svc-nodeport.yaml
kind: Service
apiVersion: v1
metadata:
  name: myapp-svc-nodeport
spec:
  type: NodePort
  selector:
    app: myapp
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 30008
[root@master chapter6]# kubectl apply -f myapp-svc-nodeport.yaml
service/myapp-svc-nodeport created
[root@master chapter6]# kubectl get svc myapp-svc-nodeport
NAME                 TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
myapp-svc-nodeport   NodePort   10.107.241.246   <none>        80:30008/TCP   21s
```

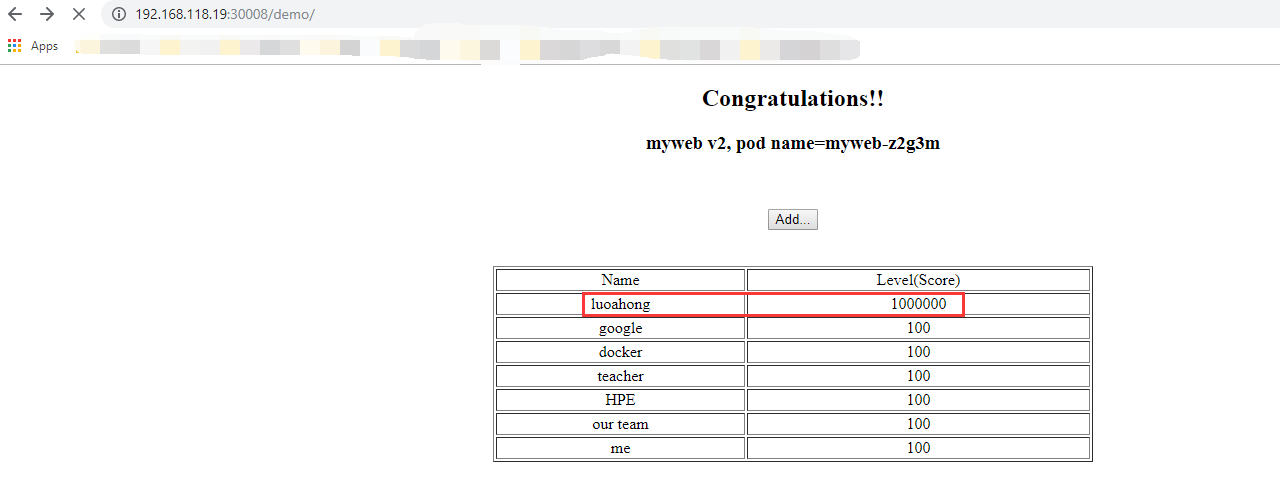

2、浏览器访问

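3. LoadBalancer (e.g., Alibaba Cloud SLB)

Points to a real load-balancing device that exists outside the cluster; that device sends request traffic into the cluster through the NodePort on the worker nodes.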

```
[root@master chapter6]# cat myapp-svc-lb.yaml
kind: Service
apiVersion: v1
metadata:
  name: myapp-svc-lb
spec:
  type: LoadBalancer   # required; without it the Service defaults to ClusterIP
  selector:
    app: myapp
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
```

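For example, a Server Load Balancer (SLB) instance on Alibaba Cloud is this kind of load-balancing device. The advantage of this type is that the load balancer distributes requests from clients outside the cluster across the NodePorts of all nodes, instead of relying on the client to decide which node to connect to. This avoids service outages caused by the failure of the particular node a client chose.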
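The benefit described above, that the load balancer rather than the client chooses a node and can skip a failed one, can be sketched in Python as a round-robin balancer over `<NodeIP>:<NodePort>` targets with a simple health flag. This is a hypothetical model (class name, node IPs, and the round-robin policy are all assumptions); real cloud load balancers run their own health checks and scheduling algorithms.

```python
from typing import Dict, List


class NodePortBalancer:
    """Toy external load balancer that round-robins over healthy nodes' NodePorts."""

    def __init__(self, node_ips: List[str], node_port: int) -> None:
        self.targets = [f"{ip}:{node_port}" for ip in node_ips]
        self.healthy: Dict[str, bool] = {t: True for t in self.targets}
        self._next = 0

    def mark_down(self, target: str) -> None:
        self.healthy[target] = False  # e.g. after a failed health check

    def pick(self) -> str:
        # Try each target at most once per call, starting after the last choice.
        for _ in range(len(self.targets)):
            target = self.targets[self._next]
            self._next = (self._next + 1) % len(self.targets)
            if self.healthy[target]:
                return target
        raise RuntimeError("no healthy node available")


if __name__ == "__main__":
    lb = NodePortBalancer(["192.168.0.11", "192.168.0.12", "192.168.0.13"], 30008)
    lb.mark_down("192.168.0.12:30008")
    print([lb.pick() for _ in range(4)])
    # ['192.168.0.11:30008', '192.168.0.13:30008', '192.168.0.11:30008', '192.168.0.13:30008']
```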

4. ExternalName: letting Pods inside the cluster access external resources

1. Resource manifest

```
[root@master chapter6]# cat external-redis.yaml
kind: Service
apiVersion: v1
metadata:
  name: external-www-svc
spec:
  type: ExternalName
  externalName: www.kubernetes.io
  ports:
  - protocol: TCP
    port: 6379
    targetPort: 6379
    nodePort: 0
  selector: {}
```

2. Create

```
[root@master chapter6]# kubectl apply -f external-redis.yaml
service/external-www-svc created
```

3. Verify

```
[root@master chapter6]# kubectl get svc
NAME               TYPE           CLUSTER-IP      EXTERNAL-IP         PORT(S)    AGE
external-www-svc   ExternalName   <none>          www.kubernetes.io   6379/TCP   7s
kubernetes         ClusterIP      10.96.0.1       <none>              443/TCP    19d
myapp-svc          ClusterIP      10.111.104.25   <none>              80/TCP     95m
```

4. Verify name resolution

```
/home # nslookup external-www-svc
Server:    10.96.0.10
Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name:      external-www-svc
Address 1: 147.75.40.148

[root@master chapter6]# ping www.kubernetes.io
PING kubernetes.io (147.75.40.148) 56(84) bytes of data.
64 bytes from 147.75.40.148 (147.75.40.148): icmp_seq=1 ttl=49 time=180 ms
64 bytes from 147.75.40.148 (147.75.40.148): icmp_seq=2 ttl=49 time=180 ms
64 bytes from 147.75.40.148 (147.75.40.148): icmp_seq=3 ttl=49 time=180 ms
```
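An ExternalName Service gets no ClusterIP; cluster DNS answers for the Service name with a CNAME pointing at `spec.externalName`, and the client's resolver follows that CNAME to an address, which is why `nslookup external-www-svc` returned the same IP as `ping www.kubernetes.io`. A toy Python resolver over hypothetical in-memory record tables illustrates the two-step lookup; the FQDN assumes the Service lives in the `default` namespace and the cluster domain is `cluster.local`.

```python
from typing import Dict, List


def resolve(name: str, cnames: Dict[str, str], a_records: Dict[str, List[str]],
            max_hops: int = 8) -> List[str]:
    """Follow CNAME records until an A record is found (loop-guarded)."""
    for _ in range(max_hops):
        if name in a_records:
            return a_records[name]
        if name not in cnames:
            raise LookupError(f"NXDOMAIN: {name}")
        name = cnames[name]  # e.g. the Service name -> spec.externalName
    raise LookupError("too many CNAME hops")


if __name__ == "__main__":
    # Hypothetical record tables mirroring the output above.
    cnames = {"external-www-svc.default.svc.cluster.local": "www.kubernetes.io"}
    a_records = {"www.kubernetes.io": ["147.75.40.148"]}
    print(resolve("external-www-svc.default.svc.cluster.local", cnames, a_records))
    # ['147.75.40.148']
```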

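V. Headless Services: reaching Pods directly instead of through the Service

When a client needs to access every Pod behind a Service directly, the IP address of each Pod should be exposed to the client instead of the ClusterIP of the intermediate Service object. A Service of this kind is called a headless Service.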

1. Create the resource

```
[root@master chapter6]# cat myapp-headless-svc.yaml
kind: Service
apiVersion: v1
metadata:
  name: myapp-headless-svc
spec:
  clusterIP: None   # just set the clusterIP field to "None"
  selector:
    app: myapp
  ports:
  - port: 80
    targetPort: 80
    name: httpport
```

All that is needed is to set the value of the clusterIP field to "None".

2. Create it

```
[root@master chapter6]# kubectl apply -f myapp-headless-svc.yaml
service/myapp-headless-svc created
```

3. Key point: check whether it has a ClusterIP

```
[root@master chapter6]# kubectl describe svc myapp-headless-svc
.....
Endpoints:         10.244.0.81:80,10.244.0.83:80
.....
```

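The difference from the other types is that there is no ClusterIP.

Because a headless Service object has no ClusterIP, kube-proxy does not need to handle requests for it, so there is nothing to load-balance or proxy. When the frontend application has its own service discovery mechanism, a headless Service removes the need to define a ClusterIP.

How IP addresses are configured in DNS for such a Service depends on whether it defines a label selector.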

VI. Pod discovery

```
/home # nslookup myapp-headless-svc
Server:    10.96.0.10
Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name:      myapp-headless-svc
Address 1: 10.244.0.81 10-244-0-81.myapp-svc.default.svc.cluster.local
Address 2: 10.244.0.83 10-244-0-83.myapp-headless-svc.default.svc.cluster.local
```
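In these answers each Pod address appears with a name of the form `<a-b-c-d>.<service>.<namespace>.svc.cluster.local`, i.e. the dotted IP with its dots replaced by hyphens. A small hypothetical Python helper, assuming the cluster domain `cluster.local`, builds such names.

```python
import ipaddress


def pod_dns_name(pod_ip: str, service: str, namespace: str, domain: str = "cluster.local") -> str:
    """Dashed-IP name as seen in the nslookup output, e.g. 10-244-0-83.<svc>.<ns>.svc.<domain>."""
    ip = ipaddress.IPv4Address(pod_ip)  # raises ValueError for a malformed address
    dashed = str(ip).replace(".", "-")
    return f"{dashed}.{service}.{namespace}.svc.{domain}"


if __name__ == "__main__":
    print(pod_dns_name("10.244.0.83", "myapp-headless-svc", "default"))
    # 10-244-0-83.myapp-headless-svc.default.svc.cluster.local
```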

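With a label selector: the endpoints controller creates an Endpoints record for the Service in the API, and the A records in ClusterDNS resolve directly to the IP addresses of the Pods behind the Service.

Without a label selector: the endpoints controller does not create an Endpoints record for the Service in the API, and ClusterDNS is configured in one of two ways:

1. For an ExternalName Service, a CNAME record is created.

2. For the other three types, a record is created for every Endpoints object that shares its name with the Service.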
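The rules above can be condensed into a small Python function that, given a Service and the addresses in its same-named Endpoints object (generated by the endpoints controller when there is a selector, or created by hand when there is not), returns the records cluster DNS would serve. It is a hypothetical sketch: it ignores SRV and PTR records, assumes the cluster domain `cluster.local`, and models a headless Service as `cluster_ip=None`.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Service:
    name: str
    namespace: str
    svc_type: str = "ClusterIP"          # ClusterIP, NodePort, LoadBalancer or ExternalName
    cluster_ip: Optional[str] = None     # None models a headless Service (clusterIP: None)
    external_name: Optional[str] = None  # only meaningful for ExternalName


def dns_records(svc: Service, endpoint_ips: List[str],
                domain: str = "cluster.local") -> List[Tuple[str, str, str]]:
    """Return (name, record type, value) tuples for the Service's DNS name."""
    fqdn = f"{svc.name}.{svc.namespace}.svc.{domain}"
    if svc.svc_type == "ExternalName":
        return [(fqdn, "CNAME", svc.external_name or "")]
    if svc.cluster_ip is not None:
        # Normal Service: a single A record for the ClusterIP.
        return [(fqdn, "A", svc.cluster_ip)]
    # Headless: one A record per address in the same-named Endpoints object.
    return [(fqdn, "A", ip) for ip in endpoint_ips]


if __name__ == "__main__":
    headless = Service("myapp-headless-svc", "default")
    for record in dns_records(headless, ["10.244.0.81", "10.244.0.83"]):
        print(record)
```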
