Kubernetes Cloud Platform Management in Practice: Cluster Deployment (CentOS 7.8 + Docker 1.13 + kubectl 1.5.2)

一、环境规划

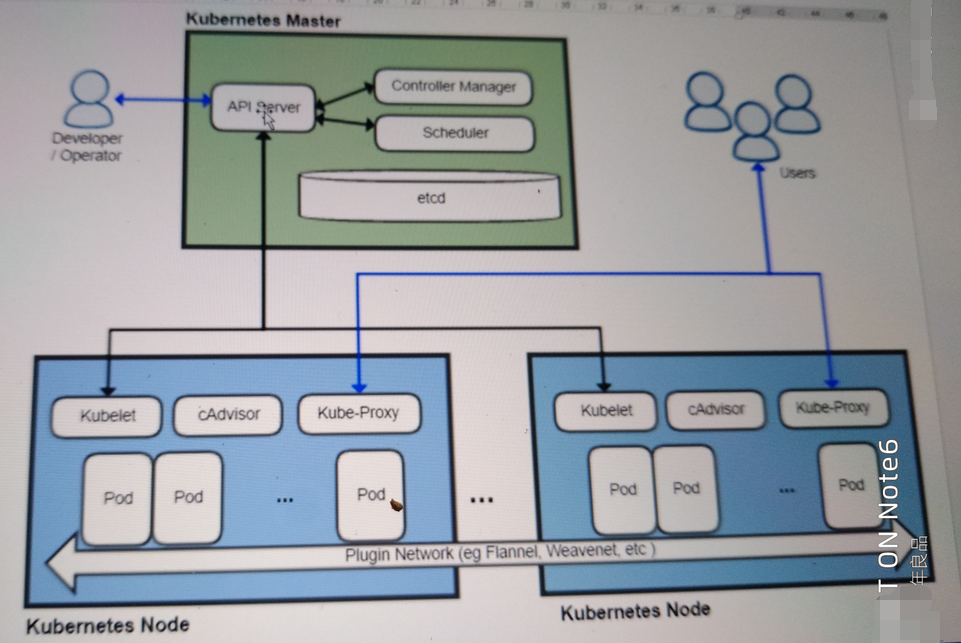

1、架构拓扑图

2、主机规划

master 192.168.118.18

node01 192.168.118.19

node02 192.168.118.20

192.168.118.18 serves as both the master and a node.

3. Software versions

    [root@master ~]# cat /etc/redhat-release
    CentOS Linux release 7.8.2003 (Core)
    [root@master ~]# docker version
    Client:
     Version:         1.13.1
     API version:     1.26
     Package version: docker-1.13.1-161.git64e9980.el7_8.x86_64
     Go version:      go1.10.3
     Git commit:      64e9980/1.13.1
     Built:           Tue Apr 28 14:43:01 2020
     OS/Arch:         linux/amd64

    Server:
     Version:         1.13.1
     API version:     1.26 (minimum version 1.12)
     Package version: docker-1.13.1-161.git64e9980.el7_8.x86_64
     Go version:      go1.10.3
     Git commit:      64e9980/1.13.1
     Built:           Tue Apr 28 14:43:01 2020
     OS/Arch:         linux/amd64
     Experimental:    false
    [root@master ~]# kubectl version
    Client Version: version.Info{Major:"1", Minor:"5", GitVersion:"v1.5.2", GitCommit:"269f928217957e7126dc87e6adfa82242bfe5b1e", GitTreeState:"clean", BuildDate:"2017-07-03T15:31:10Z", GoVersion:"go1.7.4", Compiler:"gc", Platform:"linux/amd64"}
    Server Version: version.Info{Major:"1", Minor:"5", GitVersion:"v1.5.2", GitCommit:"269f928217957e7126dc87e6adfa82242bfe5b1e", GitTreeState:"clean", BuildDate:"2017-07-03T15:31:10Z", GoVersion:"go1.7.4", Compiler:"gc", Platform:"linux/amd64"}
    [root@master ~]# kubectl cluster-info
    Kubernetes master is running at http://localhost:8080
    Heapster is running at http://localhost:8080/api/v1/proxy/namespaces/kube-system/services/heapster
    KubeDNS is running at http://localhost:8080/api/v1/proxy/namespaces/kube-system/services/kube-dns
    kubernetes-dashboard is running at http://localhost:8080/api/v1/proxy/namespaces/kube-system/services/kubernetes-dashboard
    monitoring-grafana is running at http://localhost:8080/api/v1/proxy/namespaces/kube-system/services/monitoring-grafana

    To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.
    [root@master ~]# kubectl get node
    NAME      STATUS    AGE
    master    Ready     5d
    node01    Ready     7d
    node02    Ready     7d

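4. Set hostnames and hosts resolution

1. Set the hostname

Run the matching command on each machine:

    hostnamectl set-hostname master    # on 192.168.118.18
    hostnamectl set-hostname node01    # on 192.168.118.19
    hostnamectl set-hostname node02    # on 192.168.118.20

2. hosts resolution

Each entry in /etc/hosts is the IP address followed by the hostname. Edit the file on the master, then copy it to the other two machines:

    [root@master ~]# cat /etc/hosts
    127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.118.18 master
    192.168.118.19 node01
    192.168.118.20 node02

    [root@master ~]# scp -rp /etc/hosts 192.168.118.19:/etc/hosts
    [root@master ~]# scp -rp /etc/hosts 192.168.118.20:/etc/hosts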
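If you prefer not to edit the file by hand, the hosts entries can be appended with a small script. This is only a minimal sketch: `add_host_entry` and the `HOSTS_FILE` variable are hypothetical helpers written for this post, not part of any tool. An entry is appended only when the hostname is not already listed, so the script is safe to run more than once.

```shell
# Hypothetical helper: append "IP hostname" to a hosts file unless the
# hostname is already listed. HOSTS_FILE defaults to /etc/hosts.
HOSTS_FILE="${HOSTS_FILE:-/etc/hosts}"

add_host_entry() {
    ip="$1"
    name="$2"
    # Look for the hostname as a whole field preceded by whitespace.
    if grep -Eq "[[:space:]]${name}([[:space:]]|\$)" "$HOSTS_FILE" 2>/dev/null; then
        echo "skip: ${name} already in ${HOSTS_FILE}"
    else
        printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
        echo "added: ${ip} ${name}"
    fi
}

# Usage (as root, on each machine):
#   add_host_entry 192.168.118.18 master
#   add_host_entry 192.168.118.19 node01
#   add_host_entry 192.168.118.20 node02
```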

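II. Install etcd on the master node

etcd is the key-value database in which Kubernetes stores its data; it supports clustering natively.

1. Install

    yum install etcd.x86_64 -y

2. Configure

    vim /etc/etcd/etcd.conf
    # change the following two lines:
    ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"
    ETCD_ADVERTISE_CLIENT_URLS="http://192.168.118.18:2379"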
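The same two settings can be applied without opening an editor. The sketch below assumes GNU sed (as shipped with CentOS 7) and a config file made of `KEY="VALUE"` lines; `set_conf_value` is a hypothetical helper name, not something provided by etcd.

```shell
# Hypothetical helper: set KEY="VALUE" in a shell-style config file.
# An existing line (commented out or not) is replaced in place;
# if the key is missing, a new line is appended.
set_conf_value() {
    file="$1"; key="$2"; value="$3"
    if grep -Eq "^#?${key}=" "$file"; then
        sed -i "s|^#\{0,1\}${key}=.*|${key}=\"${value}\"|" "$file"
    else
        printf '%s="%s"\n' "$key" "$value" >> "$file"
    fi
}

# Usage on the master:
#   set_conf_value /etc/etcd/etcd.conf ETCD_LISTEN_CLIENT_URLS "http://0.0.0.0:2379"
#   set_conf_value /etc/etcd/etcd.conf ETCD_ADVERTISE_CLIENT_URLS "http://192.168.118.18:2379"
```

The `|` delimiter in the sed expression is chosen because the values are URLs that contain `/`.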

3. Start

    systemctl start etcd.service
    systemctl enable etcd.service

4. Health check

Confirm that etcd is listening on port 2379, write and read back a test key, then check the cluster health while stopping and restarting the service:

    [root@master ~]# netstat -lntup
    Active Internet connections (only servers)
    Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
    tcp        0      0 127.0.0.1:2380          0.0.0.0:*               LISTEN      1396/etcd
    tcp        0      0 0.0.0.0:22              0.0.0.0:*               LISTEN      1162/sshd
    tcp6       0      0 :::2379                 :::*                    LISTEN      1396/etcd
    tcp6       0      0 :::22                   :::*                    LISTEN      1162/sshd
    udp        0      0 0.0.0.0:30430           0.0.0.0:*                           1106/dhclient
    udp        0      0 0.0.0.0:68              0.0.0.0:*                           1106/dhclient
    udp        0      0 127.0.0.1:323           0.0.0.0:*                           869/chronyd
    udp6       0      0 :::42997                :::*                                1106/dhclient
    udp6       0      0 ::1:323                 :::*                                869/chronyd
    [root@master ~]# etcdctl set tesstdir/testkey0 0
    0
    [root@master ~]# etcdctl get tesstdir/testkey0
    0
    [root@master ~]# etcdctl -C http://192.168.118.18:2379 cluster-health
    member 8e9e05c52164694d is healthy: got healthy result from http://192.168.118.18:2379
    cluster is healthy
    [root@master ~]# systemctl stop etcd.service
    [root@master ~]# etcdctl -C http://192.168.118.18:2379 cluster-health
    cluster may be unhealthy: failed to list members
    Error:  client: etcd cluster is unavailable or misconfigured; error #0: dial tcp 192.168.118.18:2379: getsockopt: connection refused
    error #0: dial tcp 192.168.118.18:2379: getsockopt: connection refused
    [root@master ~]# systemctl start etcd.service
    [root@master ~]# etcdctl -C http://192.168.118.18:2379 cluster-health
    member 8e9e05c52164694d is healthy: got healthy result from http://192.168.118.18:2379
    cluster is healthy
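Right after a restart etcd may need a moment before it answers, so a script that goes on to start the API server can wait for the health check to pass first. The following is a rough sketch of a generic retry loop; `wait_until` is a hypothetical helper, and the usage line relies only on the "cluster is healthy" text shown in the output above.

```shell
# Hypothetical helper: run a command until it succeeds, up to a maximum
# number of attempts, sleeping a fixed number of seconds after each failure.
wait_until() {
    attempts="$1"; delay="$2"; shift 2
    i=1
    while [ "$i" -le "$attempts" ]; do
        if "$@" >/dev/null 2>&1; then
            echo "ok after ${i} attempt(s)"
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    echo "gave up after ${attempts} attempt(s)" >&2
    return 1
}

# Usage: wait up to 10 x 2 seconds for etcd to report a healthy cluster.
#   wait_until 10 2 sh -c \
#     'etcdctl -C http://192.168.118.18:2379 cluster-health | grep -q "cluster is healthy"'
```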

III. Install Kubernetes (k8s) on the master node

1. Install

    [root@master ~]# yum install kubernetes-master.x86_64 -y

2. Configure

    [root@master ~]# vim /etc/kubernetes/apiserver
    # change as follows:
    KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"
    KUBE_API_PORT="--port=8080"
    KUBELET_PORT="--kubelet-port=10250"
    KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.118.18:2379"
    KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

    [root@master ~]# vim /etc/kubernetes/config
    # change as follows:
    KUBE_MASTER="--master=http://192.168.118.18:8080"

3. Start

    systemctl start kube-apiserver.service
    systemctl start kube-controller-manager.service
    systemctl start kube-scheduler.service
    systemctl enable kube-apiserver.service
    systemctl enable kube-controller-manager.service
    systemctl enable kube-scheduler.service
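The six commands above follow one pattern, so they can be folded into a loop. A minimal sketch, in which `start_and_enable` is a hypothetical helper name; it stops at the first unit that fails so that a broken service is noticed immediately.

```shell
# Start and enable each listed systemd unit, stopping at the first failure.
start_and_enable() {
    for unit in "$@"; do
        systemctl start "$unit" || return 1
        systemctl enable "$unit" || return 1
        echo "${unit}: started and enabled"
    done
}

# Usage on the master:
#   start_and_enable kube-apiserver.service \
#                    kube-controller-manager.service \
#                    kube-scheduler.service
```

The same helper works for the node services (kubelet.service and kube-proxy.service).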

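4. Component roles

api-server: accepts and responds to user requests

controller: the controller manager; makes sure containers are always kept running

scheduler: the scheduler; chooses the node on which a container is started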
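Once the three services are up, their health can be read from the API server with `kubectl get componentstatus`. The sketch below wraps that command; `check_components` is a hypothetical helper, and it assumes that the second column of the output is the status and reads `Healthy` for a working component.

```shell
# Print every component whose STATUS column is not "Healthy" and return
# non-zero if at least one is found. Assumes the first output line is a
# header and the second column is the status.
check_components() {
    kubectl get componentstatus | awk '
        NR > 1 && $2 != "Healthy" { print "unhealthy: " $1; bad = 1 }
        END { exit (bad ? 1 : 0) }
    '
}

# Usage on the master:
#   check_components && echo "control plane looks healthy"
```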

IV. Install Kubernetes on the node machines

1. Install

    [root@node01 ~]# yum install kubernetes-node.x86_64 -y

2. Configure

    [root@node01 ~]# vim /etc/kubernetes/config
    # change as follows:
    KUBE_MASTER="--master=http://192.168.118.18:8080"

    [root@node01 ~]# vim /etc/kubernetes/kubelet
    # change as follows:
    KUBELET_ADDRESS="--address=0.0.0.0"
    KUBELET_PORT="--port=10250"
    KUBELET_HOSTNAME="--hostname-override=node01"
    KUBELET_API_SERVER="--api-servers=http://192.168.118.18:8080"

Repeat the same steps on node02, with --hostname-override=node02.
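Only the hostname override differs from one node to the next. As an illustration, the four kubelet settings can be printed for any node from a template; `render_kubelet_conf` is a hypothetical helper that only prints the values for comparison and does not write /etc/kubernetes/kubelet.

```shell
# Hypothetical helper: print the four kubelet settings for one node.
# $1 = node name used for --hostname-override, $2 = master IP address.
render_kubelet_conf() {
    node_name="$1"
    master_ip="$2"
    cat <<EOF
KUBELET_ADDRESS="--address=0.0.0.0"
KUBELET_PORT="--port=10250"
KUBELET_HOSTNAME="--hostname-override=${node_name}"
KUBELET_API_SERVER="--api-servers=http://${master_ip}:8080"
EOF
}

# Usage: show the values expected on node02.
#   render_kubelet_conf node02 192.168.118.18
```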

3. Start

    [root@node01 ~]# systemctl enable kubelet.service
    Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.
    [root@node01 ~]# systemctl start kubelet.service
    [root@node01 ~]# systemctl enable kube-proxy.service
    [root@node01 ~]# systemctl start kube-proxy.service

4. Component roles

kubelet: calls docker to manage the container lifecycle (by analogy, nova-compute in OpenStack calls libvirt to manage the virtual machine lifecycle)

kube-proxy: provides network access to containers

V. Configure the flannel network on all nodes

1. master node

1. Install

    [root@master ~]# yum install flannel -y

2. Configure

Point flanneld at the etcd server, then store the network range that flannel will use in etcd:

    [root@master ~]# sed -i 's#http://127.0.0.1:2379#http://192.168.118.18:2379#g' /etc/sysconfig/flanneld
    [root@master ~]# etcdctl mk /atomic.io/network/config '{ "Network":"172.16.0.0/16" }'
    { "Network":"172.16.0.0/16" }

3. Start

    [root@master ~]# systemctl enable flanneld.service
    Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.
    Created symlink from /etc/systemd/system/docker.service.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.
    [root@master ~]# systemctl start flanneld.service

4. Verify the installation

    [root@master ~]# systemctl restart docker
    [root@master ~]# systemctl restart kube-apiserver.service
    [root@master ~]# systemctl restart kube-controller-manager.service
    [root@master ~]# systemctl restart kube-scheduler.service
    [root@master ~]# ifconfig flannel0
    flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST>  mtu 1472
            inet 172.16.56.0  netmask 255.255.0.0  destination 172.16.56.0
            inet6 fe80::c35d:3f3e:55a2:b2b  prefixlen 64  scopeid 0x20<link>
            unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00  txqueuelen 500  (UNSPEC)
            RX packets 2514602  bytes 562235712 (536.1 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 3058595  bytes 212519408 (202.6 MiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

2. node machines

1. Install

    [root@node01 ~]# yum install flannel -y
    [root@k8s-node2 ~]# yum install flannel -y

2. Configure

    [root@node01 ~]# sed -i 's#http://127.0.0.1:2379#http://192.168.118.18:2379#g' /etc/sysconfig/flanneld
    [root@k8s-node2 ~]# sed -i 's#http://127.0.0.1:2379#http://192.168.118.18:2379#g' /etc/sysconfig/flanneld

3. Start

    [root@node01 ~]# systemctl enable flanneld.service
    Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.
    Created symlink from /etc/systemd/system/docker.service.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.
    [root@node01 ~]# systemctl start flanneld.service
    [root@k8s-node2 ~]# systemctl enable flanneld.service
    Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.
    Created symlink from /etc/systemd/system/docker.service.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.
    [root@k8s-node2 ~]# systemctl start flanneld.service

4. Verify the installation

    [root@node01 ~]# ifconfig flannel0
    flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST>  mtu 1472
            inet 172.16.60.0  netmask 255.255.0.0  destination 172.16.60.0
            inet6 fe80::deee:4e2f:e3c3:bee8  prefixlen 64  scopeid 0x20<link>
            unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00  txqueuelen 500  (UNSPEC)
            RX packets 2978758  bytes 205823520 (196.2 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 2527914  bytes 567379746 (541.0 MiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    [root@node02 xiaoniao]# ifconfig flannel0
    flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST>  mtu 1472
            inet 172.16.99.0  netmask 255.255.0.0  destination 172.16.99.0
            inet6 fe80::65c9:9772:ba49:b765  prefixlen 64  scopeid 0x20<link>
            unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00  txqueuelen 500  (UNSPEC)
            RX packets 837  bytes 78994 (77.1 KiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 1004  bytes 145863 (142.4 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
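Besides looking at the flannel0 interface, the subnet leased by a host can be read from the environment file that flanneld writes when it starts. The sketch below assumes the default location /run/flannel/subnet.env and a `FLANNEL_SUBNET=` line in that file; `flannel_subnet` is a hypothetical helper name.

```shell
# Print the subnet that flanneld leased for this host.
# $1 = path of the environment file (assumed default: /run/flannel/subnet.env).
flannel_subnet() {
    env_file="${1:-/run/flannel/subnet.env}"
    sed -n 's/^FLANNEL_SUBNET=//p' "$env_file"
}

# Usage on any node:
#   flannel_subnet
```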

3. Container network connectivity test

1. Start a container on every node and get its IP address

    [root@master ~]# kubectl get nodes
    NAME        STATUS     AGE
    node01      Ready      10h
    k8s-node2   NotReady   9h

    [root@master ~]# docker run -it busybox /bin/sh
    Unable to find image 'busybox:latest' locally
    Trying to pull repository docker.io/library/busybox ...
    latest: Pulling from docker.io/library/busybox
    57c14dd66db0: Pull complete
    Digest: sha256:7964ad52e396a6e045c39b5a44438424ac52e12e4d5a25d94895f2058cb863a0
    / # ifconfig|grep eth0
    eth0      Link encap:Ethernet  HWaddr 02:42:AC:10:30:02
              inet addr:172.16.48.2  Bcast:0.0.0.0  Mask:255.255.255.0

    [root@node01 ~]# docker run -it busybox /bin/sh
    Unable to find image 'busybox:latest' locally
    Trying to pull repository docker.io/library/busybox ...
    latest: Pulling from docker.io/library/busybox
    57c14dd66db0: Pull complete
    Digest: sha256:7964ad52e396a6e045c39b5a44438424ac52e12e4d5a25d94895f2058cb863a0
    / # ifconfig|grep eth0
    eth0      Link encap:Ethernet  HWaddr 02:42:AC:10:0A:02
              inet addr:172.16.10.2  Bcast:0.0.0.0  Mask:255.255.255.0

    [root@k8s-node2 ~]# docker run -it busybox /bin/sh
    Unable to find image 'busybox:latest' locally
    Trying to pull repository docker.io/library/busybox ...
    latest: Pulling from docker.io/library/busybox
    57c14dd66db0: Pull complete
    Digest: sha256:7964ad52e396a6e045c39b5a44438424ac52e12e4d5a25d94895f2058cb863a0
    / # ifconfig|grep eth0
    eth0      Link encap:Ethernet  HWaddr 02:42:AC:10:30:02
              inet addr:172.16.48.2  Bcast:0.0.0.0  Mask:255.255.255.0
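A container that received its address through flannel should sit inside the 172.16.0.0/16 network configured in etcd; an address from docker's own default bridge range would mean docker was not restarted after flanneld came up. The check is plain integer arithmetic and can be sketched in shell; `ip_to_int` and `in_network` are hypothetical helpers.

```shell
# Convert a dotted IPv4 address to an integer. The function body runs in
# a subshell so that the IFS change does not leak to the caller.
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Return 0 if address $1 lies inside CIDR network $2 (e.g. 172.16.0.0/16).
in_network() {
    addr="$(ip_to_int "$1")"
    net="$(ip_to_int "${2%/*}")"
    bits="${2#*/}"
    # Build the netmask: the top $bits bits set, the remaining bits cleared.
    mask=$(( (0xFFFFFFFF << (32 - $bits)) & 0xFFFFFFFF ))
    [ $(( $addr & $mask )) -eq $(( $net & $mask )) ]
}

# Usage:
#   in_network 172.16.10.2 172.16.0.0/16 && echo "address is in the flannel network"
```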

2. Connectivity test

From inside one container, ping the addresses of the containers on the other hosts:

    [root@k8s-node2 ~]# docker run -it busybox /bin/sh
    Unable to find image 'busybox:latest' locally
    Trying to pull repository docker.io/library/busybox ...
    latest: Pulling from docker.io/library/busybox
    57c14dd66db0: Pull complete
    Digest: sha256:7964ad52e396a6e045c39b5a44438424ac52e12e4d5a25d94895f2058cb863a0
    / # ifconfig|grep eth0
    eth0      Link encap:Ethernet  HWaddr 02:42:AC:10:30:02
              inet addr:172.16.48.2  Bcast:0.0.0.0  Mask:255.255.255.0

    / # ping 172.16.10.2
    PING 172.16.10.2 (172.16.10.2): 56 data bytes
    64 bytes from 172.16.10.2: seq=0 ttl=60 time=5.212 ms
    64 bytes from 172.16.10.2: seq=1 ttl=60 time=1.076 ms
    ^C
    --- 172.16.10.2 ping statistics ---
    4 packets transmitted, 4 packets received, 0% packet loss
    round-trip min/avg/max = 1.076/2.118/5.212 ms
    / # ping 172.16.48.2
    PING 172.16.48.2 (172.16.48.2): 56 data bytes
    64 bytes from 172.16.48.2: seq=0 ttl=60 time=5.717 ms
    64 bytes from 172.16.48.2: seq=1 ttl=60 time=1.108 ms
    ^C
    --- 172.16.48.2 ping statistics ---
    7 packets transmitted, 7 packets received, 0% packet loss
    round-trip min/avg/max = 1.108/1.904/5.717 ms
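With more than a couple of containers, pinging each address by hand gets tedious. Here is a small sketch that loops over a list of addresses and reports which ones answer; `check_reachable` is a hypothetical helper, and the `-c` (count) and `-W` (timeout in seconds) options are assumed to be supported by the ping in use, which is the case for both busybox and iputils.

```shell
# Ping each address three times and report the result.
# Returns non-zero if any address is unreachable.
check_reachable() {
    failed=0
    for addr in "$@"; do
        if ping -c 3 -W 1 "$addr" >/dev/null 2>&1; then
            echo "${addr}: reachable"
        else
            echo "${addr}: UNREACHABLE"
            failed=1
        fi
    done
    return "$failed"
}

# Usage inside a container, with the addresses collected in the previous step:
#   check_reachable 172.16.10.2 172.16.48.2
```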

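4. Pitfalls encountered:

With docker 1.13, containers on different hosts may be unable to reach each other, because docker 1.13 can set the default policy of the iptables FORWARD chain to DROP. The fix is as follows. The ExecStartPost line reapplies the ACCEPT policy every time docker starts; the manual iptables command applies it immediately.

    [root@node02 ~]# vim /usr/lib/systemd/system/docker.service
    # add the following line to the [Service] section:
    ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT

    [root@node02 ~]# iptables -P FORWARD ACCEPT
    [root@node02 ~]# systemctl daemon-reload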
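To find out whether a host is affected before changing anything, the current policy can be read from `iptables -S FORWARD`, whose first line has the form `-P FORWARD <policy>`. A minimal sketch follows; `forward_policy` and `ensure_forward_accept` are hypothetical helpers and need to run as root.

```shell
# Print the default policy of the FORWARD chain (ACCEPT or DROP).
forward_policy() {
    iptables -S FORWARD | awk '$1 == "-P" && $2 == "FORWARD" { print $3 }'
}

# Set the policy to ACCEPT only when it is something else.
ensure_forward_accept() {
    if [ "$(forward_policy)" != "ACCEPT" ]; then
        iptables -P FORWARD ACCEPT
        echo "FORWARD policy changed to ACCEPT"
    else
        echo "FORWARD policy already ACCEPT"
    fi
}

# Usage on each node:
#   ensure_forward_accept
```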

VI. Configure the master as an image registry

1. master node

1. Configure

    vim /etc/sysconfig/docker
    # change as follows:
    OPTIONS='--selinux-enabled --log-driver=journald --signature-verification=false --registry-mirror=https://registry.docker-cn.com --insecure-registry=192.168.118.18:5000'

    systemctl restart docker

2. Start the private registry

    [root@master ~]# docker run -d -p 5000:5000 --restart=always --name registry -v /opt/myregistry:/var/lib/registry registry
    Unable to find image 'registry:latest' locally
    Trying to pull repository docker.io/library/registry ...
    latest: Pulling from docker.io/library/registry
    cd784148e348: Pull complete
    0ecb9b11388e: Pull complete
    918b3ddb9613: Pull complete
    5aa847785533: Pull complete
    adee6f546269: Pull complete
    Digest: sha256:1cd9409a311350c3072fe510b52046f104416376c126a479cef9a4dfe692cf57
    ba8d9b958c7c0867d5443ee23825f842a580331c87f6555678709dfc8899ff17
    [root@master ~]# docker ps -a
    CONTAINER ID        IMAGE               COMMAND                  CREATED             STATUS                      PORTS                    NAMES
    ba8d9b958c7c        registry            "/entrypoint.sh /etc/"   11 seconds ago      Up 9 seconds                0.0.0.0:5000->5000/tcp   registry
    15340ee09614        busybox             "/bin/sh"                3 hours ago         Exited (1) 19 minutes ago                            sleepy_mccarthy

3. Test pushing an image

    [root@master ~]# docker tag docker.io/busybox:latest 192.168.118.18:5000/busybox:latest
    [root@master ~]# docker push 192.168.118.18:5000/busybox:latest
    The push refers to a repository [192.168.118.18:5000/busybox]
    683f499823be: Pushed
    latest: digest: sha256:bbb143159af9eabdf45511fd5aab4fd2475d4c0e7fd4a5e154b98e838488e510 size: 527
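After a push, the registry itself can confirm that the repository arrived. The sketch below queries the `/v2/_catalog` endpoint of the Docker Registry HTTP API v2 with curl and looks for the repository name in the JSON reply; `registry_has_repo` is a hypothetical helper, and it assumes the registry is served over plain HTTP as configured above.

```shell
# Return 0 if repository $2 is listed in the catalog of registry $1.
# $1 = host:port of the registry, $2 = repository name.
registry_has_repo() {
    curl -s "http://$1/v2/_catalog" | grep -q "\"$2\""
}

# Usage from any machine that can reach the master:
#   registry_has_repo 192.168.118.18:5000 busybox && echo "busybox is in the registry"
```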

2. node machines

1. Configure

    vim /etc/sysconfig/docker
    # change as follows:
    OPTIONS='--selinux-enabled --log-driver=journald --signature-verification=false --registry-mirror=https://registry.docker-cn.com --insecure-registry=192.168.118.18:5000'

    systemctl restart docker

2. Test

    [root@node01 ~]# docker pull 192.168.118.18:5000/busybox:latest
    Trying to pull repository 192.168.118.18:5000/busybox ...
    latest: Pulling from 192.168.118.18:5000/busybox
    Digest: sha256:bbb143159af9eabdf45511fd5aab4fd2475d4c0e7fd4a5e154b98e838488e510

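Author: 罗阿红

Source: http://www.cnblogs.com/luoahong/

The copyright of this article is shared by the author and 博客园 (cnblogs). Reposting is welcome, but unless the author agrees otherwise, this notice must be retained and a link to the original article must be placed in a prominent position on the page.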

