Machine Learning Notes (7): Ridge Regression (sklearn)

This post is for my own study only, not for distribution or teaching. It mainly records notes that I myself can follow.

The material, resources and data come from the bilibili course 机器学习算法基础-覃秉丰 (Machine Learning Algorithm Fundamentals).

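Ridge regression is a regression method derived from regularization. Regularization aims to prevent overfitting and keep the fitted curve as smooth as possible, so a penalty term $\lambda\sum_{j=1}^{n}\theta_j^2$ is added to the loss function. The λ here is the `alpha` parameter of scikit-learn's ridge regression class `Ridge`.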

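To see what the penalty does, here is a minimal sketch on synthetic data (the data and the alpha grid are my own choices for illustration, not from the course): as `alpha` grows, scikit-learn's `Ridge` pulls the coefficients toward zero, so the norm of `coef_` shrinks.

```python
import numpy as np
from sklearn.linear_model import Ridge

# Synthetic data, assumed for illustration only (not the Longley data used below)
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 5))
y = X @ np.array([3.0, -2.0, 1.0, 0.5, 0.0]) + rng.normal(scale=0.3, size=50)

norms = []
for alpha in [0.01, 1.0, 10.0, 100.0, 1000.0]:
    model = Ridge(alpha=alpha).fit(X, y)  # alpha plays the role of lambda
    norms.append(np.linalg.norm(model.coef_))
    print(f"alpha={alpha:>7}: ||w|| = {norms[-1]:.4f}")
```

A larger penalty trades a slightly worse fit on the training data for smaller, more stable coefficients.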

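Writing the loss in matrix form and setting its gradient to zero gives the closed-form solution:

$$\theta = (X^TX + \lambda I)^{-1}X^Ty$$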


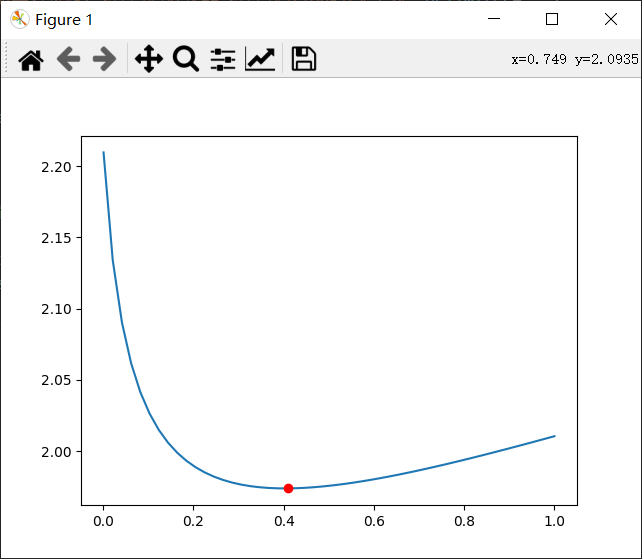
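As a check on the matrix formula, here is a minimal NumPy sketch on synthetic data (the data and the value of `lam` are illustrative assumptions) that solves $(X^TX + \lambda I)\theta = X^Ty$ directly and compares the result with scikit-learn's `Ridge`. The intercept is switched off, because scikit-learn does not penalize the intercept while the plain formula has no intercept term.

```python
import numpy as np
from sklearn.linear_model import Ridge

# Synthetic data, assumed for illustration
rng = np.random.default_rng(1)
X = rng.normal(size=(40, 3))
y = X @ np.array([2.0, -1.0, 0.5]) + rng.normal(scale=0.1, size=40)
lam = 0.5

# Closed-form ridge solution theta = (X^T X + lambda I)^(-1) X^T y,
# computed with np.linalg.solve instead of an explicit matrix inverse
theta = np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

# sklearn minimizes ||y - Xw||^2 + alpha * ||w||^2; without an intercept
# this has the same minimizer as the formula above
sk = Ridge(alpha=lam, fit_intercept=False, solver="cholesky").fit(X, y)
print(theta)
print(sk.coef_)
```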

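scikit-learn provides the `RidgeCV` class, which uses cross-validation to choose, from a list of candidate alphas, the one with the smallest average loss. With the default `cv=None` it runs efficient leave-one-out cross-validation, so on a dataset with 16 samples every alpha is evaluated 16 times. The `store_cv_values` parameter decides whether the per-sample cross-validation results are kept in the `cv_values_` attribute; it only works with `cv=None`. In scikit-learn 1.5 and later these names were changed to `store_cv_results` and `cv_results_`.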
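The leave-one-out selection that `RidgeCV` performs can be sketched by hand: refit `Ridge` with each sample held out, average the squared errors for each alpha, and keep the alpha with the smallest mean. This is a minimal sketch on synthetic data (the data, the `alphas` grid and the helper `loo_mse` are hypothetical, for illustration only). Both models use `fit_intercept=False` so that the manual loop and `RidgeCV` compute exactly the same quantity.

```python
import numpy as np
from sklearn.linear_model import Ridge, RidgeCV

# Synthetic data, assumed for illustration
rng = np.random.default_rng(0)
X = rng.normal(size=(30, 4))
y = X @ np.array([1.5, -2.0, 0.0, 0.5]) + rng.normal(scale=0.5, size=30)
alphas = np.logspace(-3, 3, 13)

def loo_mse(alpha):
    """Hypothetical helper: mean leave-one-out squared error for one alpha."""
    errors = []
    for i in range(len(y)):
        mask = np.arange(len(y)) != i  # hold out sample i
        model = Ridge(alpha=alpha, fit_intercept=False, solver="cholesky")
        model.fit(X[mask], y[mask])
        pred = model.predict(X[i:i + 1])[0]
        errors.append((y[i] - pred) ** 2)
    return np.mean(errors)

manual = np.array([loo_mse(a) for a in alphas])
best_manual = alphas[np.argmin(manual)]  # alpha with the lowest mean LOO loss

cv_model = RidgeCV(alphas=alphas, fit_intercept=False).fit(X, y)
print(best_manual, cv_model.alpha_)
```

`RidgeCV` reaches the same choice without refitting once per held-out sample, because it computes the leave-one-out residuals with a closed-form shortcut.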

Finally, plot the loss against alpha.

The data and related blog posts are listed at the bottom of this post.

The Python code:

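```python
import matplotlib.pyplot as plt
import numpy as np
from sklearn import linear_model

# Load the data; the header row and the quoted first column are parsed as NaN
data = np.genfromtxt('C:/Users/Lenovo/Desktop/学习/机器学习资料/线性回归以及非线性回归/longley.csv', delimiter=',')
x_data = data[1:, 2:]  # features: columns GNP through Employed
y_data = data[1:, 1]   # target: GNP.deflator

als = np.linspace(0.001, 1)  # 50 evenly spaced values from 0.001 to 1
# Cross-validation picks the alpha with the smallest mean loss.
# In scikit-learn >= 1.5 use store_cv_results=True and model.cv_results_ instead.
model = linear_model.RidgeCV(alphas=als, store_cv_values=True)
model.fit(x_data, y_data)
print(model.alpha_)
print(model.cv_values_.shape)  # 50 alphas, each cross-validated 16 times: 16 x 50 losses

mean_loss = model.cv_values_.mean(axis=0)  # average the 16 losses of each alpha
plt.plot(als, mean_loss)
plt.plot(model.alpha_, mean_loss.min(), 'ro')  # the lowest point
plt.xlabel('alpha')
plt.ylabel('mean loss')

print(y_data[2])
print(model.predict(x_data[2, np.newaxis]))  # compare the prediction with the true value
plt.show()
```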

Output:

```
0.40875510204081633
(16, 50)
88.2
[88.11216213]
```
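The slicing `data[1:, 2:]` and `data[1:, 1]` relies on how `np.genfromtxt` reads this file: with the default float dtype, the header row and the quoted year labels in the first column cannot be converted and come back as NaN. A minimal sketch, reading the first three data rows of the listing below from an in-memory string instead of the local path:

```python
import io

import numpy as np

# Header plus the first three rows of longley.csv, copied from the data listing in this post
csv_text = '''"","GNP.deflator","GNP","Unemployed","Armed.Forces","Population","Year","Employed"
"1947",83,234.289,235.6,159,107.608,1947,60.323
"1948",88.5,259.426,232.5,145.6,108.632,1948,61.122
"1949",88.2,258.054,368.2,161.6,109.773,1949,60.171
'''
data = np.genfromtxt(io.StringIO(csv_text), delimiter=',')
print(data.shape)                   # header row + 3 data rows, 8 columns
print(np.isnan(data[0]).all())      # header row: all NaN
print(np.isnan(data[1:, 0]).all())  # quoted year labels: NaN

x_data = data[1:, 2:]  # drop the header row and the first two columns
y_data = data[1:, 1]   # GNP.deflator
print(x_data.shape, y_data)
```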

Data used (`longley.csv`):

```
"","GNP.deflator","GNP","Unemployed","Armed.Forces","Population","Year","Employed"
"1947",83,234.289,235.6,159,107.608,1947,60.323
"1948",88.5,259.426,232.5,145.6,108.632,1948,61.122
"1949",88.2,258.054,368.2,161.6,109.773,1949,60.171
"1950",89.5,284.599,335.1,165,110.929,1950,61.187
"1951",96.2,328.975,209.9,309.9,112.075,1951,63.221
"1952",98.1,346.999,193.2,359.4,113.27,1952,63.639
"1953",99,365.385,187,354.7,115.094,1953,64.989
"1954",100,363.112,357.8,335,116.219,1954,63.761
"1955",101.2,397.469,290.4,304.8,117.388,1955,66.019
"1956",104.6,419.18,282.2,285.7,118.734,1956,67.857
"1957",108.4,442.769,293.6,279.8,120.445,1957,68.169
"1958",110.8,444.546,468.1,263.7,121.95,1958,66.513
"1959",112.6,482.704,381.3,255.2,123.366,1959,68.655
"1960",114.2,502.601,393.1,251.4,125.368,1960,69.564
"1961",115.7,518.173,480.6,257.2,127.852,1961,69.331
"1962",116.9,554.894,400.7,282.7,130.081,1962,70.551
```

Reference blog posts: