【Azure 事件中心】开启 Apache Flink 制造者 Producer 示例代码中的日志输出 (连接 Azure Event Hub Kafka 终结点)

问题描述

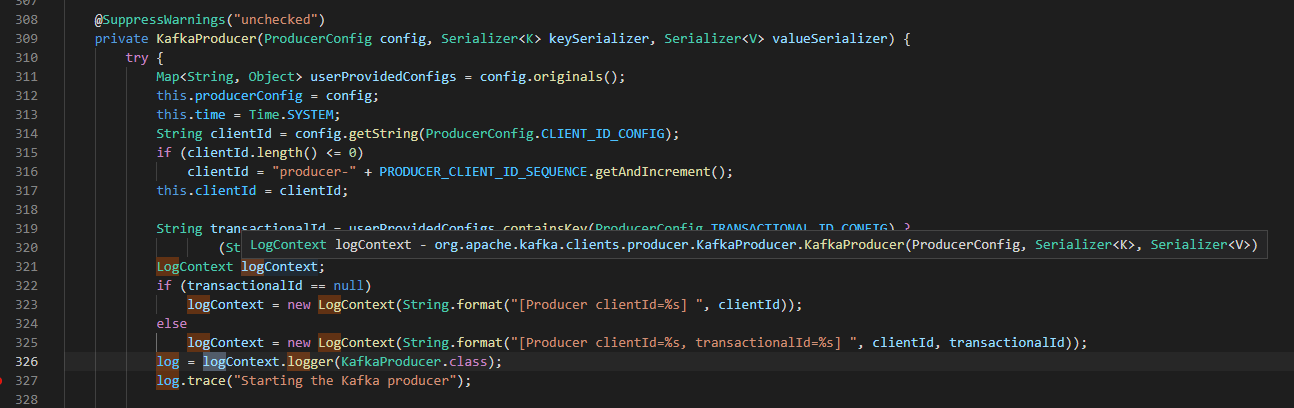

Azure Event Hub 在标准版以上就默认启用的Kafka终结点,所以可以通过Apache Kafka协议连接到Event Hub进行消息的生产和消费。通过示例代码下载到本地运行后,发现没有 Kafka Producer 的详细日志输出。当查看SDK源码中,发现使用的是 org.slf4j.Logger 输出日志,如:

但是,当运行 Producer 代码后,得到的输出取没有包含连接的详细信息,对出现连接问题的Debug没有任何帮助。

SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder". SLF4J: Defaulting to no-operation (NOP) logger implementation SLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details. Test Data #0 from thread #18 org.apache.kafka.common.errors.IllegalSaslStateException: Invalid SASL mechanism response, server may be expecting a different protocol

那么如何来输出更加详细的日志呢?

问题解决

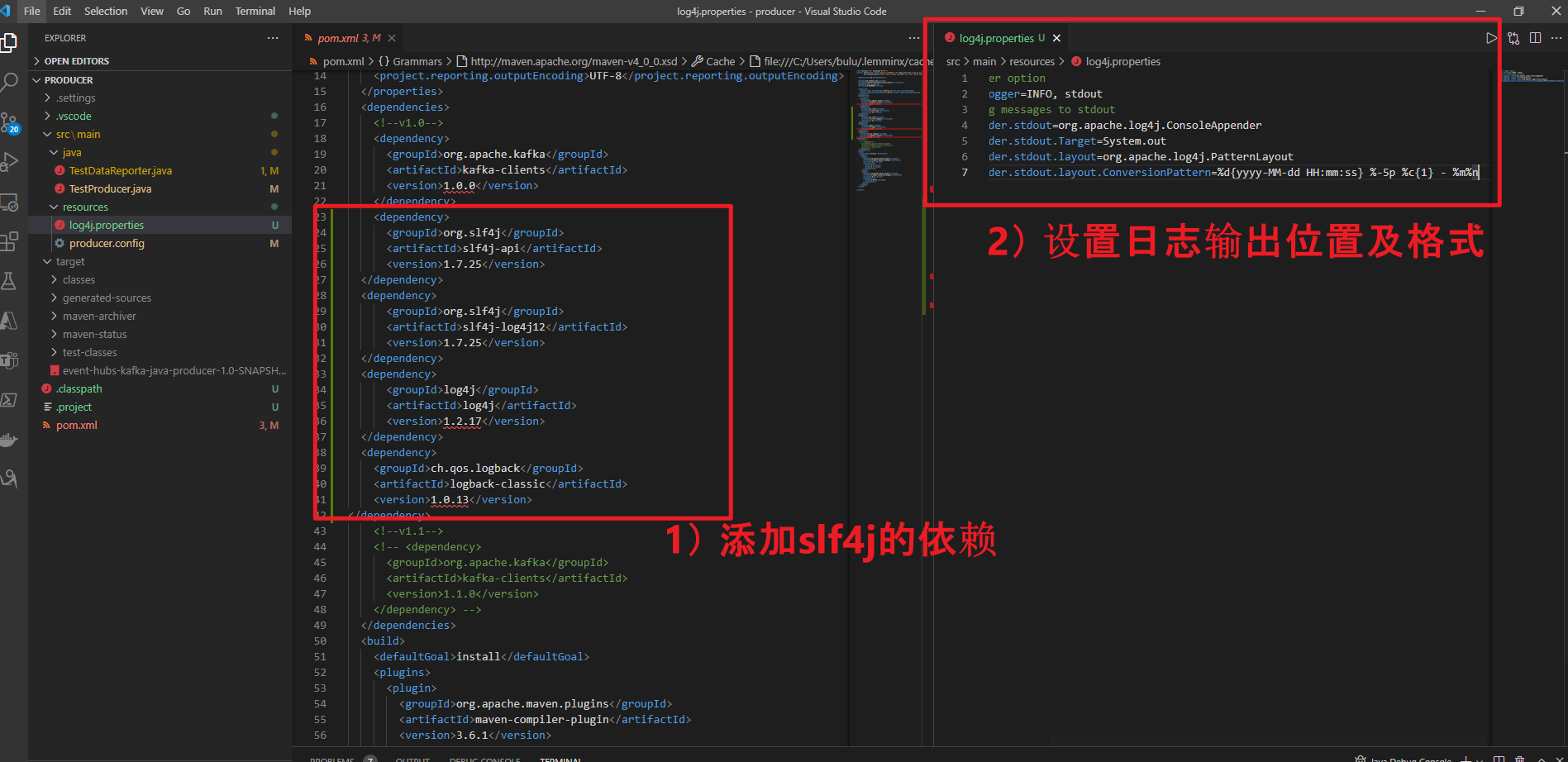

根据日志显示, SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder". 明确指出是因为没有加载到 org.slf4j.impl.StaticLoggerBinder 类,因为在程序执行的过程中,必须提供实际的日志记录实现,否则SLF4J讲忽略所有日志信息,SLF4J API 通过 SLF4J 绑定与实际的日志记录实现进行通信Log4j。所以需要在pom.xml中引入 org.slf4j 的相关依赖。

在pom.xml中加入

<dependency> <groupId>org.slf4j</groupId> <artifactId>slf4j-api</artifactId> <version>1.7.25</version> </dependency> <dependency> <groupId>org.slf4j</groupId> <artifactId>slf4j-log4j12</artifactId> <version>1.7.25</version> </dependency> <dependency> <groupId>log4j</groupId> <artifactId>log4j</artifactId> <version>1.2.17</version> </dependency> <dependency> <groupId>ch.qos.logback</groupId> <artifactId>logback-classic</artifactId> <version>1.0.13</version>

然后,添加上log4j的配置文件,在resources文件夹下添加名为 log4j.properties文件,内容为:

# Root logger option

log4j.rootLogger=INFO, stdout

# Direct log messages to stdout

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.Target=System.out

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern=%d{yyyy-MM-dd HH:mm:ss} %-5p %c{1} - %m%n

修改后的文件内容如截图所示:

修改完成,运行得到完整日志

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/C:/Users/.m2/repository/org/slf4j/slf4j-log4j12/1.7.25/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/C:/Users/.m2/repository/ch/qos/logback/logback-classic/1.0.13/logback-classic-1.0.13.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

2022-01-11 20:03:12 INFO ProducerConfig - ProducerConfig values:

acks = 1

batch.size = 16384

bootstrap.servers = [testeventxxxxxx.servicebus.chinacloudapi.cn:9093]

buffer.memory = 33554432

client.id = KafkaExampleProducer

compression.type = none

connections.max.idle.ms = 540000

enable.idempotence = false

interceptor.classes = null

key.serializer = class org.apache.kafka.common.serialization.LongSerializer

linger.ms = 0

max.block.ms = 60000

max.in.flight.requests.per.connection = 5

max.request.size = 1048576

metadata.max.age.ms = 300000

metric.reporters = []

metrics.num.samples = 2

metrics.recording.level = INFO

metrics.sample.window.ms = 30000

security.protocol = SASL_SSL

send.buffer.bytes = 131072

ssl.cipher.suites = null

ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1]

ssl.endpoint.identification.algorithm = null

ssl.key.password = null

ssl.keymanager.algorithm = SunX509

ssl.keystore.location = null

ssl.keystore.password = null

ssl.keystore.type = JKS

ssl.protocol = TLS

ssl.provider = null

ssl.secure.random.implementation = null

ssl.trustmanager.algorithm = PKIX

ssl.truststore.location = null

ssl.truststore.password = null

ssl.truststore.type = JKS

transaction.timeout.ms = 60000

transactional.id = null

value.serializer = class org.apache.kafka.common.serialization.StringSerializer

2022-01-11 20:03:16 INFO AbstractLogin - Successfully logged in.

2022-01-11 20:03:17 INFO AppInfoParser - Kafka version : 1.0.0

2022-01-11 20:03:17 INFO AppInfoParser - Kafka commitId : aaa7af6d4a11b29d

2022-01-11 20:03:21 INFO TestProducer - test java logs : info

2022-01-11 20:03:21 ERROR TestProducer - test java logs : error

2022-01-11 20:03:21 WARN TestProducer - test java logs : warn

Test Data #0 from thread #18

2022-01-11 20:03:22 ERROR NetworkClient - [Producer clientId=KafkaExampleProducer] Connection to node -1 failed authentication due to: Invalid SASL mechanism response, server may be expecting a different protocol

org.apache.kafka.common.errors.IllegalSaslStateException: Invalid SASL mechanism response, server may be expecting a different protocol

org.apache.kafka.clients.producer.KafkaProducer@47dec663

参考资料

Slf4j Configuration File Example:https://examples.javacodegeeks.com/enterprise-java/slf4j/slf4j-configuration-file-example/

将 Apache Flink 与适用于 Apache Kafka 的 Azure 事件中心配合使用: https://docs.azure.cn/zh-cn/event-hubs/event-hubs-kafka-flink-tutorial

在微软云中国区 (Mooncake) 上实验以Apache Kafka协议方式发送/接受Event Hubs消息 (Java版) : https://www.cnblogs.com/lulight/p/14375190.html

当在复杂的环境中面临问题,格物之道需:浊而静之徐清,安以动之徐生。 云中,恰是如此!

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· DeepSeek 开源周回顾「GitHub 热点速览」

· 物流快递公司核心技术能力-地址解析分单基础技术分享

· .NET 10首个预览版发布:重大改进与新特性概览!

· AI与.NET技术实操系列(二):开始使用ML.NET

· 单线程的Redis速度为什么快?