1.问题描述

-

7月4号,18:53分,个人在值班时,发现别的开发组的服务WMS-SEVICE出现服务负载飙高的告警。

- 自动生成的Dump

2.问题分析排查过程:

-

看下服务监控

- 如下图,在18:50~18:55分这段时间内,确实服务CUP很高。

为什么这么高啊?有大的网络IO操作吗?继续排查。

-

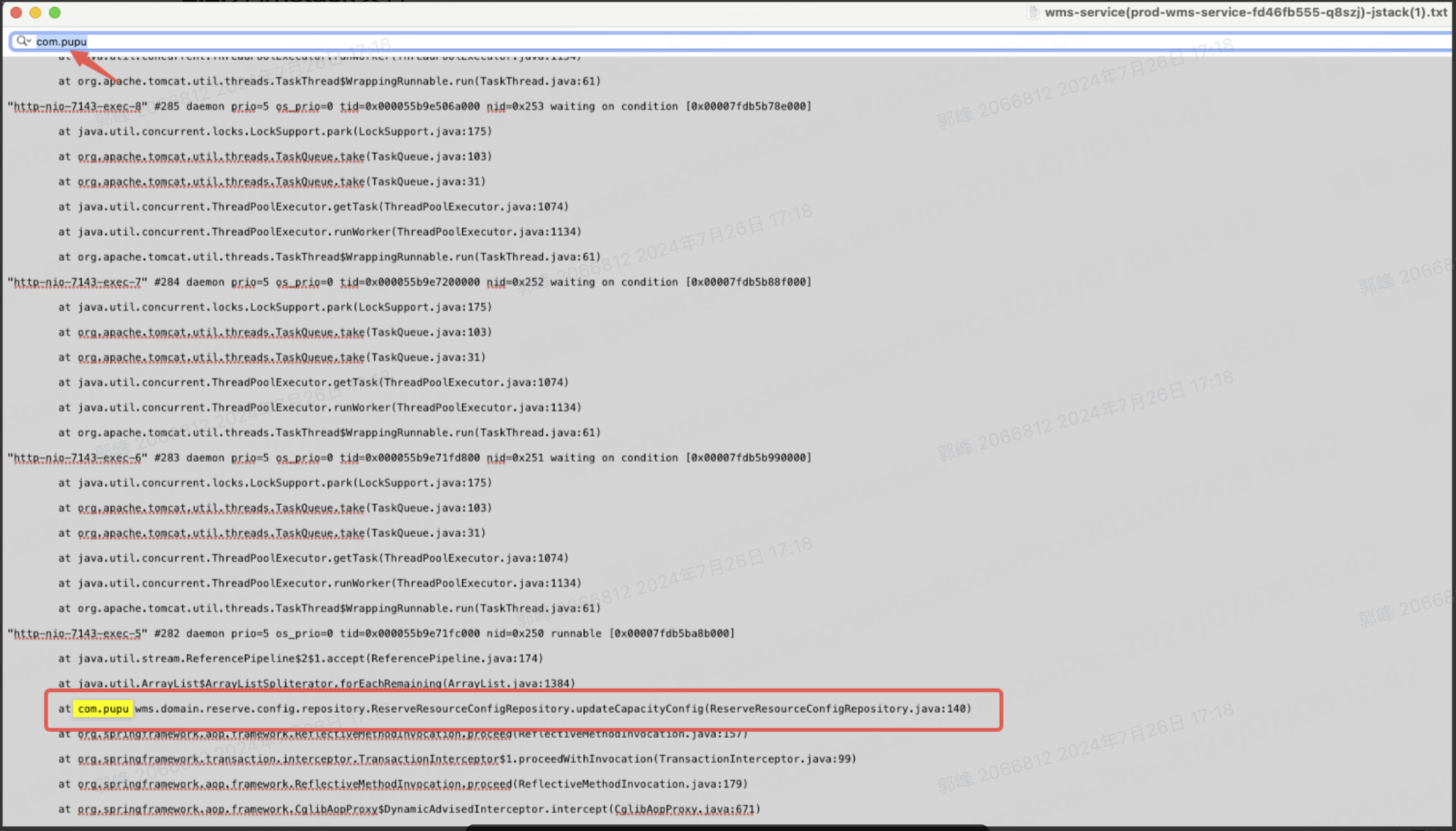

分析jstack文件

- 打开jstack文件,搜索"com.pupu",发现是截图所示的代码可能导致的这个问题

"http-nio-7143-exec-5" #282 daemon prio=5 os_prio=0 tid=0x000055b9e71fc000 nid=0x250 runnable [0x00007fdb5ba8b000] java.lang.Thread.State: RUNNABLE at java.util.stream.ReferencePipeline$2$1.accept(ReferencePipeline.java:174) at java.util.ArrayList$ArrayListSpliterator.forEachRemaining(ArrayList.java:1384) at java.util.stream.AbstractPipeline.copyInto(AbstractPipeline.java:482) at java.util.stream.AbstractPipeline.wrapAndCopyInto(AbstractPipeline.java:472) at java.util.stream.ReduceOps$ReduceOp.evaluateSequential(ReduceOps.java:708) at java.util.stream.AbstractPipeline.evaluate(AbstractPipeline.java:234) at java.util.stream.ReferencePipeline.collect(ReferencePipeline.java:566) at com.pupu.wms.domain.reserve.config.repository.ReserveResourceConfigRepository.updateCapacityConfig(ReserveResourceConfigRepository.java:140)

- 线程正在执行updateCapacityConfig方法.

- 该方法内部使用了java流(java.util.stream包)来处理数据。

- 流操作涉及到了accept方法(在ReferencePipeline$2$1类中),这通常与流的forEach,map,filter等中间操作相关。

- 流正在处理一个ArrayList(在ArrayList$ArrayListSpliterator类中),并且正在执行forEachRemaining方法来遍历列表中的元素。

- 最终,流操作会导致数据的收集(collect方法),这可能是将流中的元素聚合到一个新的集合中。

简单理解就是updateCapacityConfig()方法中流操作导致的。

找到对应的代码:

@Transactional(rollbackFor = Exception.class)

public Integer updateCapacityConfig(List<ReserveReceivingCapacityConfigPO> pos) {

if (CollectionUtils.isEmpty(pos)) {

return 0;

}

List<ReserveReceivingCapacityConfig> poReserveReceivingCapacityConfigs = ConverterUtil.convert(pos, ReserveReceivingCapacityConfig.class);

List<String> storeIds = poReserveReceivingCapacityConfigs.stream().map(ReserveReceivingCapacityConfig::getStoreId).distinct().collect(Collectors.toList());

List<Integer> dates = poReserveReceivingCapacityConfigs.stream().map(ReserveReceivingCapacityConfig::getConfigDate).distinct().sorted().collect(Collectors.toList());

LambdaQueryWrapper<ReserveReceivingCapacityConfig> query = Wrappers.lambdaQuery();

query.eq(ReserveReceivingCapacityConfig :: getHasDeleted,false);

query.in(ReserveReceivingCapacityConfig :: getStoreId,storeIds);

query.between(ReserveReceivingCapacityConfig :: getConfigDate,dates.get(0),dates.get(dates.size() - 1));

List<ReserveReceivingCapacityConfig> reserveReceivingCapacityConfigs = reserveReceivingCapacityConfigMapper.selectList(query);

Iterator<ReserveReceivingCapacityConfig> iterator = poReserveReceivingCapacityConfigs.iterator();

List<Long> removeIds = Lists.newArrayList();

while (iterator.hasNext()) {

ReserveReceivingCapacityConfig po = iterator.next();

List<ReserveReceivingCapacityConfig> list = reserveReceivingCapacityConfigs.stream().filter(r -> r.getStoreId().equals(po.getStoreId()) &&

r.getConfigDate().equals(po.getConfigDate()) &&

r.getConfigHour().equals(po.getConfigHour()))

.collect(Collectors.toList());

if (CollectionUtils.isNotEmpty(list)) {

// 如果完全相同则不更新

List<ReserveReceivingCapacityConfig> removes = list.stream().filter(r -> r.getSkuCount().equals(po.getSkuCount()) && r.getProductCount().equals(po.getProductCount())).collect(Collectors.toList());

if (CollectionUtils.isNotEmpty(removes)) {

iterator.remove();

} else {

// 如果配置发生变化,则移除旧的保存新的

removeIds.addAll(list.stream().map(ReserveReceivingCapacityConfig::getId).distinct().collect(Collectors.toList()));

}

}

}

if (CollectionUtils.isNotEmpty(removeIds)) {

log.warn("更新预约容量配置:removeIds = {}", JSONObject.toJSONString(removeIds));

LambdaUpdateWrapper<ReserveReceivingCapacityConfig> updateWrapper = Wrappers.lambdaUpdate();

updateWrapper

.in(ReserveReceivingCapacityConfig::getId, removeIds)

.set(ReserveReceivingCapacityConfig::getHasDeleted, true)

.set(ReserveReceivingCapacityConfig::getTimeUpdate,System.currentTimeMillis());

reserveReceivingCapacityConfigMapper.update(updateWrapper);

}

if (CollectionUtils.isNotEmpty(poReserveReceivingCapacityConfigs)) {

log.warn("更新预约容量配置:pos = {}", JSONObject.toJSONString(poReserveReceivingCapacityConfigs));

return reserveReceivingCapacityConfigMapper.insertBatchSomeColumn(poReserveReceivingCapacityConfigs);

}

return 0;

}

jstack文件提示的updateCapacityConfig方法中的140行如下:

List<ReserveReceivingCapacityConfig> list = reserveReceivingCapacityConfigs.stream().filter(r -> r.getStoreId().equals(po.getStoreId()) && r.getConfigDate().equals(po.getConfigDate()) && r.getConfigHour().equals(po.getConfigHour())) .collect(Collectors.toList());

确实是stream流操作.继续往下走.

看下这段代码的调用流程.下面是这段代码所在的方法,从该方法可以看到该代码位于循环内,且循环会遍历每个po对象。

假设下面的poReserveReceivingCapacityConfigs集合有200条数据,查询结果reserveReceivingCapacityConfigs集合有100条数据,

这段代码在每次批量调用中可能会被执行高达2万次(200个po对象 * 每次查询返回的约100条记录)

@Transactional(rollbackFor = Exception.class)

public Integer updateCapacityConfig(List<ReserveReceivingCapacityConfigPO> pos) {

if (CollectionUtils.isEmpty(pos)) {

return 0;

}

List<ReserveReceivingCapacityConfig> poReserveReceivingCapacityConfigs = ConverterUtil.convert(pos, ReserveReceivingCapacityConfig.class);

List<String> storeIds = poReserveReceivingCapacityConfigs.stream().map(ReserveReceivingCapacityConfig::getStoreId).distinct().collect(Collectors.toList());

List<Integer> dates = poReserveReceivingCapacityConfigs.stream().map(ReserveReceivingCapacityConfig::getConfigDate).distinct().sorted().collect(Collectors.toList());

LambdaQueryWrapper<ReserveReceivingCapacityConfig> query = Wrappers.lambdaQuery();

query.eq(ReserveReceivingCapacityConfig :: getHasDeleted,false);

query.in(ReserveReceivingCapacityConfig :: getStoreId,storeIds);

query.between(ReserveReceivingCapacityConfig :: getConfigDate,dates.get(0),dates.get(dates.size() - 1));

List<ReserveReceivingCapacityConfig> reserveReceivingCapacityConfigs = reserveReceivingCapacityConfigMapper.selectList(query);

Iterator<ReserveReceivingCapacityConfig> iterator = poReserveReceivingCapacityConfigs.iterator();

List<Long> removeIds = Lists.newArrayList();

while (iterator.hasNext()) {

ReserveReceivingCapacityConfig po = iterator.next();

List<ReserveReceivingCapacityConfig> list = reserveReceivingCapacityConfigs.stream().filter(r -> r.getStoreId().equals(po.getStoreId()) &&

r.getConfigDate().equals(po.getConfigDate()) &&

r.getConfigHour().equals(po.getConfigHour()))

.collect(Collectors.toList());

if (CollectionUtils.isNotEmpty(list)) {

// 如果完全相同则不更新

List<ReserveReceivingCapacityConfig> removes = list.stream().filter(r -> r.getSkuCount().equals(po.getSkuCount()) && r.getProductCount().equals(po.getProductCount())).collect(Collectors.toList());

if (CollectionUtils.isNotEmpty(removes)) {

iterator.remove();

} else {

// 如果配置发生变化,则移除旧的保存新的

removeIds.addAll(list.stream().map(ReserveReceivingCapacityConfig::getId).distinct().collect(Collectors.toList()));

}

}

}

if (CollectionUtils.isNotEmpty(removeIds)) {

log.warn("更新预约容量配置:removeIds = {}", JSONObject.toJSONString(removeIds));

LambdaUpdateWrapper<ReserveReceivingCapacityConfig> updateWrapper = Wrappers.lambdaUpdate();

updateWrapper

.in(ReserveReceivingCapacityConfig::getId, removeIds)

.set(ReserveReceivingCapacityConfig::getHasDeleted, true)

.set(ReserveReceivingCapacityConfig::getTimeUpdate,System.currentTimeMillis());

reserveReceivingCapacityConfigMapper.update(updateWrapper);

}

if (CollectionUtils.isNotEmpty(poReserveReceivingCapacityConfigs)) {

log.warn("更新预约容量配置:pos = {}", JSONObject.toJSONString(poReserveReceivingCapacityConfigs));

return reserveReceivingCapacityConfigMapper.insertBatchSomeColumn(poReserveReceivingCapacityConfigs);

}

return 0;

}

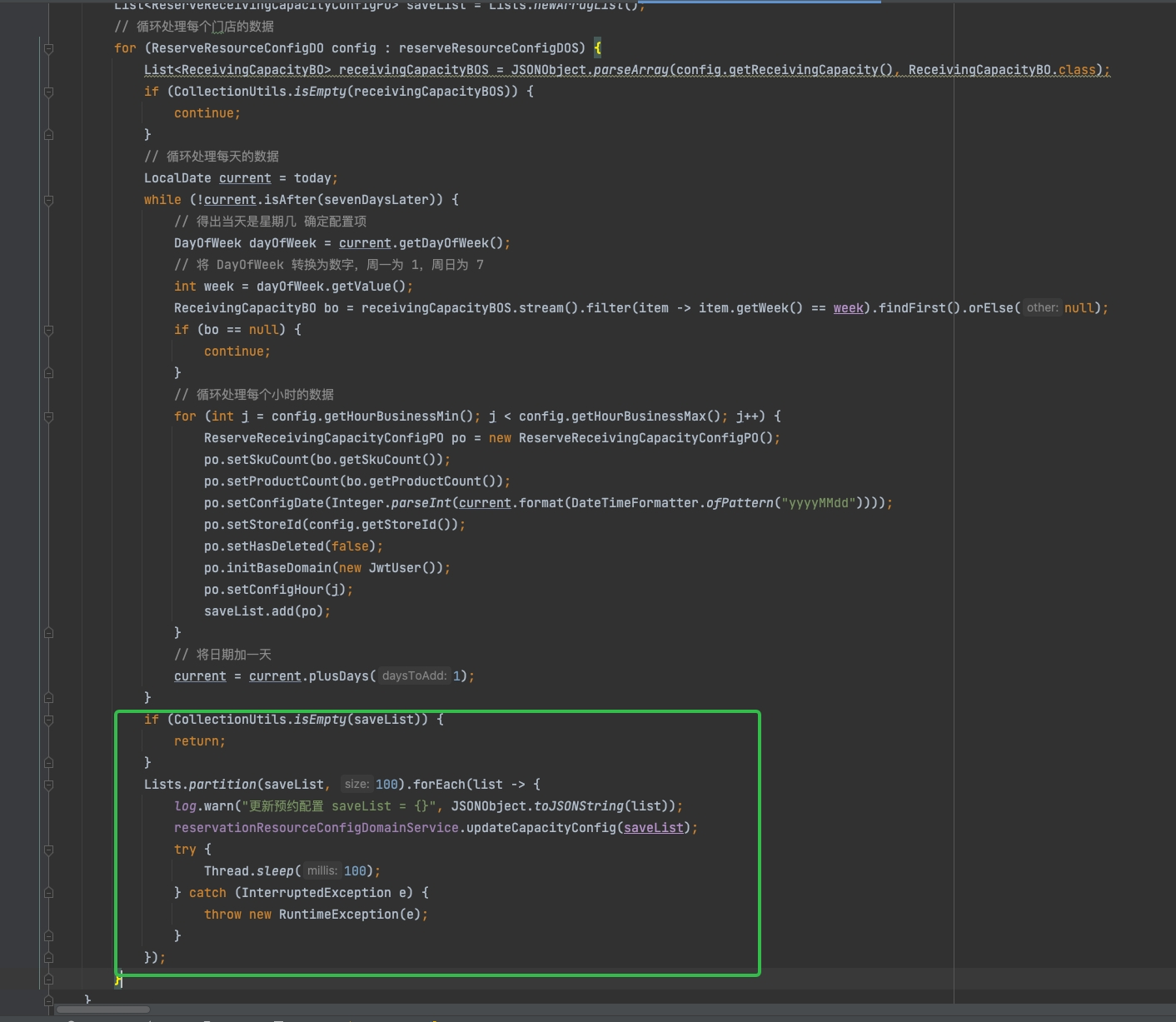

看下这个方法在哪里调用的?如下截图所示,每100分批调用的.分批调用了多少次?根据log.warn("更新预约配置")的日志,查询到调用了75次。意思就是这个要分批的saveList集合总共有7500条.其实这个方法主要干的事情就是做数据的更新操作.

再回到调用的代码处,由上面的分析得出,updateCapacityConfig(List<ReserveReceivingCapacityConfigPO> pos)这个方法被调用了75次,每次调用方法入参集合是100条数据,reserveReceivingCapacityConfigMapper.selectList(query)我抽取了3次调用的结果,看了一下返回结果集合,有返回100条的,有返回95条的,有返回97条的,这3次调用就取个平均值97.reserveReceivingCapacityConfigs集合大小可以看做是97.

@Transactional(rollbackFor = Exception.class)

public Integer updateCapacityConfig(List<ReserveReceivingCapacityConfigPO> pos) {

if (CollectionUtils.isEmpty(pos)) {

return 0;

}

List<ReserveReceivingCapacityConfig> poReserveReceivingCapacityConfigs = ConverterUtil.convert(pos, ReserveReceivingCapacityConfig.class);

List<String> storeIds = poReserveReceivingCapacityConfigs.stream().map(ReserveReceivingCapacityConfig::getStoreId).distinct().collect(Collectors.toList());

List<Integer> dates = poReserveReceivingCapacityConfigs.stream().map(ReserveReceivingCapacityConfig::getConfigDate).distinct().sorted().collect(Collectors.toList());

LambdaQueryWrapper<ReserveReceivingCapacityConfig> query = Wrappers.lambdaQuery();

query.eq(ReserveReceivingCapacityConfig :: getHasDeleted,false);

query.in(ReserveReceivingCapacityConfig :: getStoreId,storeIds);

query.between(ReserveReceivingCapacityConfig :: getConfigDate,dates.get(0),dates.get(dates.size() - 1));

List<ReserveReceivingCapacityConfig> reserveReceivingCapacityConfigs = reserveReceivingCapacityConfigMapper.selectList(query);

Iterator<ReserveReceivingCapacityConfig> iterator = poReserveReceivingCapacityConfigs.iterator();

List<Long> removeIds = Lists.newArrayList();

while (iterator.hasNext()) {

ReserveReceivingCapacityConfig po = iterator.next();

List<ReserveReceivingCapacityConfig> list = reserveReceivingCapacityConfigs.stream().filter(r -> r.getStoreId().equals(po.getStoreId()) &&

r.getConfigDate().equals(po.getConfigDate()) &&

r.getConfigHour().equals(po.getConfigHour()))

.collect(Collectors.toList());

if (CollectionUtils.isNotEmpty(list)) {

// 如果完全相同则不更新

List<ReserveReceivingCapacityConfig> removes = list.stream().filter(r -> r.getSkuCount().equals(po.getSkuCount()) && r.getProductCount().equals(po.getProductCount())).collect(Collectors.toList());

if (CollectionUtils.isNotEmpty(removes)) {

iterator.remove();

} else {

// 如果配置发生变化,则移除旧的保存新的

removeIds.addAll(list.stream().map(ReserveReceivingCapacityConfig::getId).distinct().collect(Collectors.toList()));

}

}

}

if (CollectionUtils.isNotEmpty(removeIds)) {

log.warn("更新预约容量配置:removeIds = {}", JSONObject.toJSONString(removeIds));

LambdaUpdateWrapper<ReserveReceivingCapacityConfig> updateWrapper = Wrappers.lambdaUpdate();

updateWrapper

.in(ReserveReceivingCapacityConfig::getId, removeIds)

.set(ReserveReceivingCapacityConfig::getHasDeleted, true)

.set(ReserveReceivingCapacityConfig::getTimeUpdate,System.currentTimeMillis());

reserveReceivingCapacityConfigMapper.update(updateWrapper);

}

if (CollectionUtils.isNotEmpty(poReserveReceivingCapacityConfigs)) {

log.warn("更新预约容量配置:pos = {}", JSONObject.toJSONString(poReserveReceivingCapacityConfigs));

return reserveReceivingCapacityConfigMapper.insertBatchSomeColumn(poReserveReceivingCapacityConfigs);

}

return 0;

}

那结果就是在每次循环中,都会对reserveReceivingCapacityConfigs集合列表执行一次过滤操作。由于集合列表大小约为97条记录,且每次批量调用都会执行100次循环,因此这段代码在每次调用中可能会被执行高达9700次过滤操作(100次循环 * 97条记录).

List<ReserveReceivingCapacityConfig> list = reserveReceivingCapacityConfigs.stream().filter(r -> r.getStoreId().equals(po.getStoreId()) &&

r.getConfigDate().equals(po.getConfigDate()) &&

r.getConfigHour().equals(po.getConfigHour()))

.collect(Collectors.toList());

-

调用链路

- 整个链路调用,总共耗时619908毫秒.

-

数据库DB监控

-

看下出问题的时间段的数据库DB监控:证明这段时间“reservecapacityconfigs”这个表,确实有大量的数据库更新查询操作.

-

-

jmap文件

- 再看看jmap文件:用"com.pupu"去搜索,产生了很多新的对象(ReserveReceivingCapacityConfig).如下图所示,其实就是出问题的那段代码导致的.每次循环产生了1个新的List<ReserveReceivingCapacityConfig>集合对象.循环了75*100=7500次.

- List<ReserveReceivingCapacityConfig> list = reserveReceivingCapacityConfigs.stream().filter(r -> r.getStoreId().equals(po.getStoreId()) && r.getConfigDate().equals(po.getConfigDate()) && r.getConfigHour().equals(po.getConfigHour())) .collect(Collectors.toList());

- 其实上面看它的执行链路,grafna上看数据库DB情况,这个只能证明出问题的确实是在updateCapacityConfig()这个方法中,匹配了这个方法的执行流程.比如这个方法中确实执行了很多次数据库的更新和查询操作,但定位这个问题需要结合jstack文件和jmap文件.jstack文件展示了这个线程堆栈跟踪的情况,jmap文件显示了Java堆中对象的统计信息,包括类名、对象数量以及占用的内存大小.

3.问题定位

- 经过上面的这些排查,大概可以确定是下面如图所示的循环下(每次调用执行9700次过滤操作)的流操作引起的.

while (iterator.hasNext()) {

ReserveReceivingCapacityConfig po = iterator.next();

List<ReserveReceivingCapacityConfig> list = reserveReceivingCapacityConfigs.stream().filter(r -> r.getStoreId().equals(po.getStoreId()) &&

r.getConfigDate().equals(po.getConfigDate()) &&

r.getConfigHour().equals(po.getConfigHour()))

.collect(Collectors.toList());

- 对于reserveReceivingCapacityConfigs列表中的每个元素,都会调用getStoreId().equals(),getConfigDate().equals()和getConfigHour().equals()方法。这些方法的执行需要CPU计算.虽然单个 equals方法的调用可能消耗的CPU资源很少,但在大量数据或频繁调用(9700次)的情况下,这种消耗会变得显著.

- 过滤后的元素需要通过collect(Collectors.toList())收集到一个新的列表中.collect方法会遍历过滤后的元素,并将它们添加到一个新的ArrayList中。这个遍历和添加操作也需要CPU资源,在大量数据或频繁调用的情况下,这种消耗会变得显著.

4.优化建议

- 因为这块代码是其他开发组的,只单纯的针对于这个问题,是否可以不要在while循环里面每次遍历reserveReceivingCapacityConfigs这整个集合,可不可以把reserveReceivingCapacityConfigs这个集合转换成1个Map,map的key,就是那些比较字段合在一起,比如:storeId_configdate_configHour,value就是对应的每个对象.然后while循环去遍历的时候,直接拼装成key,去map中取就行了,不用每次都要再遍历整个reserveReceivingCapacityConfigs集合了.

5.问题处理

- 停掉该接口对应的定时任务,不让其继续运行.

6.处理结果

- 1.确认对业务没有影响,新建的表,新写的接口,业务还没有开始使用

- 2.第二天,优化后发版上线

- 3.生产问题复盘

浙公网安备 33010602011771号

浙公网安备 33010602011771号