卷积神经网络

实现一个卷积神经网络的一些模块

import numpy as np

import h5py

import matplotlib.pyplot as plt

%matplotlib inline

plt.rcParams['figure.figsize'] = (5.0, 4.0)

plt.rcParams['image.interpolation'] = 'nearest'

plt.rcParams['image.cmap'] = 'gray'

# 在ipython里已经import过的模块修改后需要重新reload

# 在执行用户代码前,重新装入软件的扩展和模块

%load_ext autoreload

#autoreload 2:装入所有 %aimport 不包含的模块

%autoreload 2

np.random.seed(1) #指定随机种子

Convolutional Neural Networks

Zero-Padding

def zero_pad(X, pad):

"""

Pad with zeros all images of the dataset X. The padding is applied to the height and width of an image,

as illustrated in Figure 1.

Argument:

X -- python numpy array of shape (m, n_H, n_W, n_C) representing a batch of m images

pad -- integer, amount of padding around each image on vertical and horizontal dimensions

Returns:

X_pad -- padded image of shape (m, n_H + 2*pad, n_W + 2*pad, n_C)

"""

# 只是添加维数,且都是添加的0

X_pad = np.pad(X, ((0, 0), (pad, pad), (pad, pad), (0, 0)), 'constant') # constant连续一样的值填充

return X_pad

np.random.seed(1)

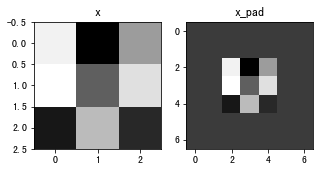

x = np.random.randn(4, 3, 3, 2)

x_pad = zero_pad(x, 2)

print ("x.shape =", x.shape)

print ("x_pad.shape =", x_pad.shape)

print ("x[1,1] =", x[1,1])

print ("x_pad[1,1] =", x_pad[1,1])

fig, axarr = plt.subplots(1, 2)

axarr[0].set_title('x')

axarr[0].imshow(x[0,:,:,0])

axarr[1].set_title('x_pad')

axarr[1].imshow(x_pad[0,:,:,0])

x.shape = (4, 3, 3, 2)

x_pad.shape = (4, 7, 7, 2)

x[1,1] = [[ 0.90085595 -0.68372786]

[-0.12289023 -0.93576943]

[-0.26788808 0.53035547]]

x_pad[1,1] = [[0. 0.]

[0. 0.]

[0. 0.]

[0. 0.]

[0. 0.]

[0. 0.]

[0. 0.]]

<matplotlib.image.AxesImage at 0x7fb98e2a9190>

Single step of convolution

def conv_single_step(a_slice_prev, W, b):

"""

Apply one filter defined by parameters W on a single slice (a_slice_prev) of the output activation

of the previous layer.

Arguments:

a_slice_prev -- slice of input data of shape (f, f, n_C_prev)

W -- Weight parameters contained in a window - matrix of shape (f, f, n_C_prev)

b -- Bias parameters contained in a window - matrix of shape (1, 1, 1)

Returns:

Z -- a scalar value, result of convolving the sliding window (W, b) on a slice x of the input data

"""

s = np.multiply(a_slice_prev, W)

Z = np.sum(s)

Z = Z + float(b)

return Z

np.random.seed(1)

a_slice_prev = np.random.randn(4, 4, 3)

W = np.random.randn(4, 4, 3)

b = np.random.randn(1, 1, 1)

Z = conv_single_step(a_slice_prev, W, b)

print("Z =", Z)

Z = -6.999089450680221

Forward pass

使用多种过滤器对输入的数据进行卷积操作,每个过滤器会产生一个2D的矩阵,把它们堆叠成高维的矩阵。

def conv_forward(A_prev, W, b, hparameters):

"""

Implements the forward propagation for a convolution function

Arguments:

A_prev -- output activations of the previous layer, numpy array of shape (m, n_H_prev, n_W_prev, n_C_prev)

W -- Weights, numpy array of shape (f, f, n_C_prev, n_C)

b -- Biases, numpy array of shape (1, 1, 1, n_C)

hparameters -- python dictionary containing "stride" and "pad"

Returns:

Z -- conv output, numpy array of shape (m, n_H, n_W, n_C)

cache -- cache of values needed for the conv_backward() function

"""

#获取来自上一层数据的基本信息

(m, n_H_prev, n_W_prev, n_C_prev) = A_prev.shape

#获取权重矩阵的基本信息

(f, f, n_C_prev, n_C) = W.shape

#获取超参数hparameters的值

stride = hparameters["stride"]

pad = hparameters["pad"]

#计算卷积后的图像的宽度高度,int()来取整

n_H = int(( n_H_prev - f + 2 * pad )/ stride) + 1

n_W = int(( n_W_prev - f + 2 * pad )/ stride) + 1

#使用0来初始化卷积输出Z

Z = np.zeros((m,n_H,n_W,n_C))

#通过A_prev创建填充过了的A_prev_pad

A_prev_pad = zero_pad(A_prev,pad)

for i in range(m): #遍历样本

a_prev_pad = A_prev_pad[i] #选择第i个样本的扩充后的激活矩阵

for h in range(n_H): #在输出的垂直轴上循环

for w in range(n_W): #在输出的水平轴上循环

for c in range(n_C): #循环遍历输出的通道

#定位当前的切片位置

vert_start = h * stride #竖向,开始的位置

vert_end = vert_start + f #竖向,结束的位置

horiz_start = w * stride #横向,开始的位置

horiz_end = horiz_start + f #横向,结束的位置

#切片位置定位后“穿透”取出来的,

a_slice_prev = a_prev_pad[vert_start:vert_end,horiz_start:horiz_end,:]

#执行单步卷积

Z[i,h,w,c] = conv_single_step(a_slice_prev,W[: ,: ,: ,c],b[0,0,0,c]) # W是矩阵,b是个数

#数据处理完毕,验证数据格式是否正确

assert(Z.shape == (m , n_H , n_W , n_C ))

#存储一些缓存值,以便于反向传播使用

cache = (A_prev,W,b,hparameters)

return (Z , cache)

np.random.seed(1)

A_prev = np.random.randn(10,4,4,3)

W = np.random.randn(2,2,3,8)

b = np.random.randn(1,1,1,8)

hparameters = {"pad" : 2,

"stride": 2}

Z, cache_conv = conv_forward(A_prev, W, b, hparameters)

print("Z's mean =", np.mean(Z))

print("Z[3,2,1] =", Z[3,2,1])

print("cache_conv[0][1][2][3] =", cache_conv[0][1][2][3])

Z's mean = 0.048995203528855794

Z[3,2,1] = [-0.61490741 -6.7439236 -2.55153897 1.75698377 3.56208902 0.53036437

5.18531798 8.75898442]

cache_conv[0][1][2][3] = [-0.20075807 0.18656139 0.41005165]

Pooling layer

池化层会减少输入的宽度和高度,这样它会较少计算量的同时也使特征检测器对其在输入中的位置更加稳定。

Forward Pooling

def pool_forward(A_prev, hparameters, mode = "max"):

"""

Implements the forward pass of the pooling layer

Arguments:

A_prev -- Input data, numpy array of shape (m, n_H_prev, n_W_prev, n_C_prev)

hparameters -- python dictionary containing "f" and "stride"

mode -- the pooling mode you would like to use, defined as a string ("max" or "average")

Returns:

A -- output of the pool layer, a numpy array of shape (m, n_H, n_W, n_C)

cache -- cache used in the backward pass of the pooling layer, contains the input and hparameters

"""

(m, n_H_prev, n_W_prev, n_C_prev) = A_prev.shape

f = hparameters['f']

stride = hparameters['stride']

n_H = int(1 + (n_H_prev - f) / stride)

n_W = int(1 + (n_W_prev - f) / stride)

n_C = n_C_prev

A = np.zeros((m, n_H, n_W, n_C))

for i in range(m):

for h in range(n_H):

for w in range(n_W):

for c in range(n_C):

vert_start = h * stride

vert_end = vert_start + f

horiz_start = w * stride

horiz_end = horiz_start + f

a_prev_slice = A_prev[i, vert_start:vert_end, horiz_start:horiz_end, c]

if mode == 'max':

A[i, h, w, c] = np.max(a_prev_slice)

elif mode == 'average':

A[i, h, w, c] = np.mean(a_prev_slice)

cache = (A_prev, hparameters)

assert(A.shape == (m, n_H, n_W, n_C))

return A, cache

np.random.seed(1)

A_prev = np.random.randn(2, 4, 4, 3)

hparameters = {"stride" : 2, "f": 3}

A, cache = pool_forward(A_prev, hparameters)

print("mode = max")

print("A =", A)

print()

A, cache = pool_forward(A_prev, hparameters, mode = "average")

print("mode = average")

print("A =", A)

mode = max

A = [[[[1.74481176 0.86540763 1.13376944]]]

[[[1.13162939 1.51981682 2.18557541]]]]

mode = average

A = [[[[ 0.02105773 -0.20328806 -0.40389855]]]

[[[-0.22154621 0.51716526 0.48155844]]]]