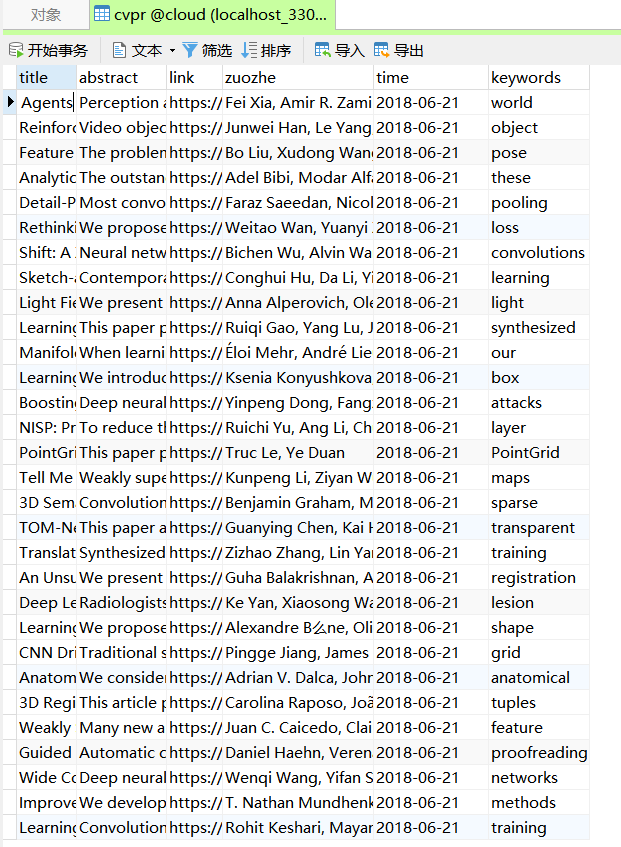

Top-Conference Hot-Word Statistics 1

With the Python environment set up, the first step is to scrape the data.

    import re
    import time

    import pymysql
    import requests
    from bs4 import BeautifulSoup
    from jieba.analyse import extract_tags
    from lxml import etree  # HTML parsing and XPath queries

    # Connect to the local MySQL database that stores the scraped papers.
    db = pymysql.connect(
        host='localhost',
        port=3306,
        user='root',
        password='liutianwen0613',
        db='cloud',
        charset='utf8'
    )
    cursor = db.cursor()

    # Spider class: downloads one day's paper listing from CVF Open Access.
    class Spider():
        def __init__(self):
            # Listing page to scrape; swap in one of the commented URLs for another day.
            self.url = 'https://openaccess.thecvf.com/CVPR2018?day=2018-06-21'
            # self.url = 'https://openaccess.thecvf.com/CVPR2018?day=2018-06-20'
            # self.url = 'https://openaccess.thecvf.com/CVPR2018?day=2018-06-19'
            # self.url = 'https://openaccess.thecvf.com/CVPR2019?day=2019-06-18'
            # self.url = 'https://openaccess.thecvf.com/CVPR2019?day=2019-06-19'
            # self.url = 'https://openaccess.thecvf.com/CVPR2019?day=2019-06-20'
            # self.url = 'https://openaccess.thecvf.com/ICCV2019?day=2019-10-29'
            # self.url = 'https://openaccess.thecvf.com/ICCV2019?day=2019-10-31'
            # self.url = 'https://openaccess.thecvf.com/CVPR2021?day=2021-06-22'
            self.headers = {
                'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.77 '
                              'Safari/537.36 '
            }
            # Fetch the listing page once and keep its HTML for parsing.
            r = requests.get(self.url, headers=self.headers)
            r.encoding = r.apparent_encoding
            self.html = r.text

        def lxml_find(self):
            """Parse the listing with lxml and store each paper in MySQL."""
            tonum = 200  # skip the first 200 entries (e.g. when resuming an earlier run)
            number = 1
            start = time.time()  # timer, originally for comparing three parsing approaches
            selector = etree.HTML(self.html)  # build an lxml element tree
            titles = selector.xpath('//dt[@class="ptitle"]/a/@href')  # returns a list of hrefs
            for each in titles[tonum:]:
                title0 = each.strip()  # strip surrounding whitespace
                # Drop the 17-character "content_cvpr_2018" prefix and rebuild the absolute URL.
                # The prefix must match the conference and year (e.g. content_CVPR_2019).
                chaolianjie = "https://openaccess.thecvf.com/content_cvpr_2018" + title0[17:]
                req = requests.get(chaolianjie, headers=self.headers)
                req.encoding = req.apparent_encoding
                selector1 = etree.HTML(req.text)
                # xpath() returns lists; join the text nodes into plain strings
                # so they can be passed as SQL parameters.
                title = ''.join(selector1.xpath('//div[@id="papertitle"]/text()')).strip()
                abst = ''.join(selector1.xpath('//div[@id="abstract"]/text()')).strip()
                hre0 = selector1.xpath('//a/@href')
                # The sixth link is taken as the paper link; its first five characters
                # (assumed to be a leading "../..") are dropped to form an absolute URL.
                hre = "https://openaccess.thecvf.com" + hre0[5][5:]
                zuozhe = ''.join(selector1.xpath('//dd/div[@id="authors"]/b/i/text()')).strip()  # authors
                # Keep the single highest-weighted keyword from the abstract.
                keywords = ''
                for keywords, weight in extract_tags(abst, topK=1, withWeight=True):
                    print('%s %s' % (keywords, weight))
                va = [title, hre, abst, zuozhe, "2018-06-21", keywords]
                sql = "insert into cvpr (title,link,abstract,zuozhe,time,keywords) values (%s,%s,%s,%s,%s,%s)"
                cursor.execute(sql, va)
                db.commit()
                print("Scraped " + str(number) + " records")
                number = number + 1
            end = time.time()
            print('Total time:', end - start)

    if __name__ == '__main__':
        spider = Spider()
        spider.lxml_find()
        cursor.close()
        db.close()
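The spider builds the detail-page and paper links by slicing a fixed number of characters off each href, and it hard-codes the conference date. One possible alternative, sketched here, is to let `urllib.parse` resolve the relative links and read the date from the `day` query parameter. The helper names `absolute_url` and `listing_day` are hypothetical, and the sample hrefs are assumptions inferred from the slicing logic rather than checked against the live site.

```python
from urllib.parse import parse_qs, urljoin, urlparse

LISTING_URL = 'https://openaccess.thecvf.com/CVPR2018?day=2018-06-21'


def absolute_url(page_url, href):
    """Resolve a (possibly relative) href against the page it appeared on."""
    return urljoin(page_url, href.strip())


def listing_day(listing_url):
    """Return the value of the `day` query parameter, or None if it is absent."""
    values = parse_qs(urlparse(listing_url).query).get('day')
    return values[0] if values else None


# Hypothetical hrefs in the shape the slicing code appears to expect.
detail_href = 'content_cvpr_2018/html/Example_Paper_CVPR_2018_paper.html'
pdf_href = '../../content_cvpr_2018/papers/Example_Paper_CVPR_2018_paper.pdf'

detail_url = absolute_url(LISTING_URL, detail_href)  # href found on the listing page
pdf_url = absolute_url(detail_url, pdf_href)         # href found on the detail page
day = listing_day(LISTING_URL)

print(detail_url)
print(pdf_url)
print(day)
```

Resolving links this way avoids editing the prefix string and slice offsets each time the conference or year changes, since only the listing URL has to be swapped.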

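`xpath()` always returns a list, and that list may be empty when a page lacks the expected element, so indexing a result directly can raise `IndexError` and stop the whole run. A minimal sketch of a defensive helper follows; `first_text` is a hypothetical name, and the sample lists stand in for real XPath results.

```python
def first_text(nodes, default=''):
    """Return the first non-empty string in `nodes`, stripped, else `default`."""
    for node in nodes:
        text = node.strip()
        if text:
            return text
    return default


# Stand-ins for the lists that selector.xpath(...) would return.
title_nodes = ['\nExample Paper Title']
abstract_nodes = []  # e.g. a page without an abstract block

title = first_text(title_nodes)
abstract = first_text(abstract_nodes, default='(no abstract)')

print(title)
print(abstract)
```

With a helper like this, a page with missing fields yields a placeholder row instead of aborting the loop partway through the listing.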