cannot assign instance of scala.Some to field org.apache.spark.scheduler.Task.appAttemptId

Problem

Running a Spark application fails with the following exception:

    Caused by: java.lang.ClassCastException: cannot assign instance of scala.Some to field org.apache.spark.scheduler.Task.appAttemptId of type scala.Option in instance of org.apache.spark.scheduler.ResultTask
        at java.io.ObjectStreamClass$FieldReflector.setObjFieldValues(ObjectStreamClass.java:2301)
        at java.io.ObjectStreamClass.setObjFieldValues(ObjectStreamClass.java:1431)
        at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2350)
        at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2268)
        at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2126)
        at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1625)
        at java.io.ObjectInputStream.readObject(ObjectInputStream.java:465)
        at java.io.ObjectInputStream.readObject(ObjectInputStream.java:423)
        at org.apache.spark.serializer.JavaDeserializationStream.readObject(JavaSerializer.scala:75)
        at org.apache.spark.serializer.JavaSerializerInstance.deserialize(JavaSerializer.scala:114)
        at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:376)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
        at java.lang.Thread.run(Thread.java:748)

Cause

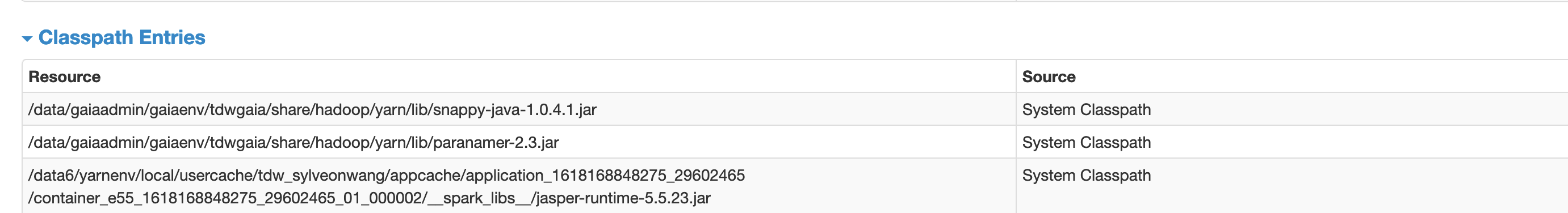

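This is usually caused by conflicting Scala SDK versions. First check the jars in the Spark cluster environment: open the Spark application's web UI, go to the Environment tab, and look at the section shown in the screenshot above (the page is too large to capture in full).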

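Use the browser's find function to search for "scala" on that page; this reveals the Scala library jars in the Spark cluster environment. Call this version A.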
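As a minimal sketch of this lookup, the function below scans classpath entries (for example, paths copied from the Environment tab, one per entry) and extracts the Scala version from jar names such as `scala-library-2.11.12.jar`. The helper names and the sample paths are hypothetical, and it assumes the cluster ships the Scala jars under their standard, unrenamed file names.

```python
import re

# Matches standard Scala jar names, e.g. "scala-library-2.11.12.jar".
# Assumption: the cluster's Scala jars keep their default Maven file names.
SCALA_JAR = re.compile(r"scala-(library|reflect|compiler)-(\d+)\.(\d+)\.(\d+)\.jar$")


def find_scala_jars(classpath_entries):
    """Return {jar path: full Scala version} for every Scala jar in the list."""
    found = {}
    for entry in classpath_entries:
        path = entry.strip()
        match = SCALA_JAR.search(path)
        if match:
            found[path] = ".".join(match.group(2, 3, 4))
    return found


def binary_version(full_version):
    """Scala 2.x is binary compatible only within one major.minor line, e.g. 2.11."""
    return ".".join(full_version.split(".")[:2])


if __name__ == "__main__":
    # Hypothetical entries, as they might appear on the Environment tab.
    entries = [
        "/opt/spark/jars/scala-library-2.11.12.jar",
        "/opt/spark/jars/scala-reflect-2.11.12.jar",
        "/opt/spark/jars/spark-core_2.11-2.4.8.jar",
    ]
    for path, version in find_scala_jars(entries).items():
        print(path, "->", version, "(binary " + binary_version(version) + ")")
```

Comparing only the major.minor part is deliberate: two different patch releases of the same Scala line are normally interchangeable, whereas 2.11 vs 2.12 is not.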

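Next, open your application jar with vim and search for "scala" to confirm that the packaged jar contains Scala library classes. Then analyze the dependencies with `mvn dependency:tree` (or the equivalent command in your build tool) to find the bundled Scala library and its version. Call this version B.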
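A jar is just a zip archive, so the vim check can also be scripted. The sketch below (the helper name `inspect_jar` is hypothetical) counts class files under `scala/` and, if present, reads `version.number` from `library.properties`, the properties file the official scala-library jar carries at its root. A fat jar that bundles scala-library usually keeps that file, but shading or merge strategies may drop or overwrite it, so treat a missing version as "unknown" rather than "absent". On the Maven side, `mvn dependency:tree -Dincludes=org.scala-lang:scala-library` narrows the tree to the Scala library.

```python
import zipfile


def inspect_jar(jar_path):
    """Report whether a jar bundles Scala library classes and, if known, their version."""
    with zipfile.ZipFile(jar_path) as jar:
        names = jar.namelist()
        scala_classes = [n for n in names if n.startswith("scala/") and n.endswith(".class")]
        version = None
        if "library.properties" in names:
            # Java properties format: "key=value" lines, e.g. "version.number=2.11.12".
            text = jar.read("library.properties").decode("utf-8", errors="replace")
            for line in text.splitlines():
                key, sep, value = line.partition("=")
                if sep and key.strip() == "version.number":
                    version = value.strip()
    return {"scala_class_count": len(scala_classes), "version": version}


if __name__ == "__main__":
    import sys

    # Usage: python inspect_jar.py path/to/app.jar [more.jar ...]
    for path in sys.argv[1:]:
        print(path, inspect_jar(path))
```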

Solution

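Settle on one of the two versions, A or B: for example, exclude the Scala library that comes in as a transitive dependency, or filter it out at packaging time. In practice this usually means keeping the cluster's version A, since the cluster's Spark itself is built against it.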
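The right place for this fix is the build configuration (an exclusion on the dependency that drags in scala-library, or a packaging filter in your build tool). Purely to illustrate what "filtering at packaging time" does to the artifact, the sketch below copies a jar while dropping the bundled Scala library. It is a hypothetical post-processing step, and it assumes the Scala classes are unshaded under `scala/` and that the cluster provides them.

```python
import zipfile


def is_scala_library_entry(name):
    # Assumption: bundled Scala classes live under scala/, plus scala-library's
    # library.properties at the jar root.
    return name.startswith("scala/") or name == "library.properties"


def strip_scala_library(src_jar, dst_jar):
    """Copy src_jar to dst_jar (must be a different path) without Scala library entries.

    Returns the number of entries removed.
    """
    removed = 0
    with zipfile.ZipFile(src_jar) as src, zipfile.ZipFile(dst_jar, "w") as dst:
        for info in src.infolist():
            if is_scala_library_entry(info.filename):
                removed += 1
                continue
            # Reuse the original ZipInfo so name, timestamp and compression are kept,
            # and entries (including META-INF/MANIFEST.MF) stay in their original order.
            dst.writestr(info, src.read(info.filename))
    return removed
```

After rebuilding (or post-processing) the jar, rerunning the jar inspection from the previous step should report no Scala classes.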