Machine Learning with sklearn: Feature Extraction, Part 1

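The feature extraction part of the scikit-learn machine learning book contains a lot of NLP material, so I got stuck halfway through. I will come back and fill in the rest after working through NLTK.

I also feel that the feature extraction chapter is not explained especially well, so I am reading it alongside other material.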

First up is DictVectorizer.

from sklearn.feature_extraction import DictVectorizer

onehot_encoder = DictVectorizer()

X = [{'city':'New York'},{'city':'San Francisco'},{'city':'Chapel Hill'}]

print(onehot_encoder.fit_transform(X).toarray())

[[0. 1. 0.]

[0. 0. 1.]

[1. 0. 0.]]

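Note the toarray method here: fit_transform returns a SciPy sparse matrix by default, and toarray converts it to a dense NumPy array. The same pattern shows up with many one-hot style encoders.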
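To see what toarray is doing, the following minimal sketch checks the type returned by fit_transform. It assumes the default sparse=True setting of DictVectorizer; the variable names are my own.

```python
from scipy.sparse import issparse
from sklearn.feature_extraction import DictVectorizer

cities = [{'city': 'New York'}, {'city': 'San Francisco'}, {'city': 'Chapel Hill'}]

# By default DictVectorizer returns a SciPy sparse matrix.
sparse_result = DictVectorizer().fit_transform(cities)
print(issparse(sparse_result))

# toarray() converts the sparse matrix into a dense NumPy array.
dense_result = sparse_result.toarray()
print(type(dense_result))

# Passing sparse=False makes the vectorizer return a dense array directly.
dense_direct = DictVectorizer(sparse=False).fit_transform(cities)
print((dense_result == dense_direct).all())
```

For small examples the dense form is easier to read; for large vocabularies the sparse form is usually the one to keep.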

Also, the input here must be a list of dicts.

measurements = [{'city':'Beijing','country':'CN','temperature':33.},{'city':'London','country':'UK','temperature':12.},{'city':'San Fransisco','country':'USA','temperature':18.}]

# Import DictVectorizer from sklearn.feature_extraction

from sklearn.feature_extraction import DictVectorizer

vec = DictVectorizer()

# Print the transformed feature matrix

print(vec.fit_transform(measurements).toarray())

# Print what each feature dimension means

print(vec.get_feature_names_out())

[[ 1. 0. 0. 1. 0. 0. 33.]

[ 0. 1. 0. 0. 1. 0. 12.]

[ 0. 0. 1. 0. 0. 1. 18.]]

['city=Beijing' 'city=London' 'city=San Fransisco' 'country=CN'

'country=UK' 'country=USA' 'temperature']

StandardScaler:

$X = \frac{x-\mu}{\sigma}$

MinMaxScaler (the minimum and maximum are taken per column, i.e. along axis=0):

$X = \frac{x-x_{min}}{x_{max}-x_{min}}$

scale:

$X = \frac{x-x_{mean}}{\sigma}$

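Alternatively, you can use RobustScaler, which centers on the median and scales by the interquartile range, so it is less sensitive to outliers.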

from sklearn import preprocessing

import numpy as np

X = np.array([[0,0,5,13,9,1],[0,0,13,15,10,15],[0,3,15,2,0,11]])

print(preprocessing.scale(X))

[[ 0. -0.70710678 -1.38873015 0.52489066 0.59299945 -1.35873244]

[ 0. -0.70710678 0.46291005 0.87481777 0.81537425 1.01904933]

[ 0. 1.41421356 0.9258201 -1.39970842 -1.4083737 0.33968311]]

Or you can import scale directly:

from sklearn.preprocessing import scale

import numpy as np

X = np.array([[0,0,5,13,9,1],[0,0,13,15,10,15],[0,3,15,2,0,11]])

sc = scale(X)

print(sc)
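As a sanity check on the formulas above, here is a minimal sketch that computes each scaling by hand with NumPy and compares it with the corresponding sklearn class. The small array X_demo is made up for illustration, and the RobustScaler line assumes its default settings (median centering, 25th to 75th percentile range).

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, RobustScaler, StandardScaler

X_demo = np.array([[1.0, 10.0], [2.0, 20.0], [3.0, 60.0]])

# StandardScaler: (x - mean) / std per column, using the population std (ddof=0).
manual_standard = (X_demo - X_demo.mean(axis=0)) / X_demo.std(axis=0)
sk_standard = StandardScaler().fit_transform(X_demo)

# MinMaxScaler: (x - min) / (max - min) per column.
col_min, col_max = X_demo.min(axis=0), X_demo.max(axis=0)
manual_minmax = (X_demo - col_min) / (col_max - col_min)
sk_minmax = MinMaxScaler().fit_transform(X_demo)

# RobustScaler (defaults): (x - median) / (75th percentile - 25th percentile).
q1, q3 = np.percentile(X_demo, [25, 75], axis=0)
manual_robust = (X_demo - np.median(X_demo, axis=0)) / (q3 - q1)
sk_robust = RobustScaler().fit_transform(X_demo)

print(np.allclose(manual_standard, sk_standard))
print(np.allclose(manual_minmax, sk_minmax))
print(np.allclose(manual_robust, sk_robust))
```

The hand-written versions would divide by zero on a constant column (such as the first column of X in the scale example), which is a case the sklearn scalers handle for you.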

The bag-of-words model

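I want to describe CountVectorizer in my own words so that it sticks.

CountVectorizer is a commonly used class for computing numeric features and is a text feature extraction method. For each training document, it considers only how often each word occurs in that document. In other words, CountVectorizer converts the words in a set of texts into a term-count matrix.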
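To make the idea concrete, here is a rough pure-Python sketch of what a bag-of-words count matrix is. It is not how sklearn implements CountVectorizer; bag_of_words is a helper name I made up, and the regular expression only approximates the default tokenization (lowercased tokens of at least two word characters).

```python
import re
from collections import Counter


def bag_of_words(docs):
    """Return (vocabulary, count matrix) for a list of strings."""
    token_pattern = re.compile(r"\b\w\w+\b")
    tokenized = [token_pattern.findall(doc.lower()) for doc in docs]

    # Assign column indices to terms in alphabetical order.
    terms = sorted({token for tokens in tokenized for token in tokens})
    vocabulary = {term: index for index, term in enumerate(terms)}

    # One row per document, one column per term, each cell a raw count.
    matrix = []
    for tokens in tokenized:
        counts = Counter(tokens)
        matrix.append([counts[term] for term in terms])
    return vocabulary, matrix


vocabulary, matrix = bag_of_words(
    ['UNC played Duke in basketball', 'duke lost the basketball game']
)
print(vocabulary)
print(matrix)
```

Word order is thrown away entirely; only the counts per document survive, which is where the name "bag" of words comes from.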

corpus = ['UNC played Duke in basketball','duke lost the basketball game']

from sklearn.feature_extraction.text import CountVectorizer

vectorizer = CountVectorizer()

print(vectorizer.fit_transform(corpus).todense())

print(vectorizer.vocabulary_)

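Note that the corpus contains both Duke and duke. CountVectorizer lowercases text by default, so both map to the same feature: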

[[1 1 0 1 0 1 0 1]

[1 1 1 0 1 0 1 0]]

{'unc': 7, 'played': 5, 'duke': 1, 'in': 3, 'basketball': 0, 'lost': 4, 'the': 6, 'game': 2}

corpus.append("I ate a sandwich")

print(vectorizer.fit_transform(corpus).todense())

print(vectorizer.vocabulary_)

[[0 1 1 0 1 0 1 0 0 1]

[0 1 1 1 0 1 0 0 1 0]

[1 0 0 0 0 0 0 1 0 0]]

{'unc': 9, 'played': 6, 'duke': 2, 'in': 4, 'basketball': 1, 'lost': 5, 'the': 8, 'game': 3, 'ate': 0, 'sandwich': 7}

Among the documents above, the first should be closer to the second than to the third. In sklearn, euclidean_distances computes the distance between vectors.

from sklearn.metrics.pairwise import euclidean_distances

from sklearn.feature_extraction.text import CountVectorizer

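corpus = ['UNC played Duke in basketball','Duke lost the basketball game','I ate a sandwich'] # the corpus

vectorizer = CountVectorizer()

# Build the count vectors for the corpus and convert them to a dense NumPy array
# (newer scikit-learn releases no longer accept the np.matrix returned by todense())

counts = vectorizer.fit_transform(corpus).toarray()

print(counts)

for x,y in [[0,1],[0,2],[1,2]]:

    # counts[[x]] keeps the row two-dimensional, as euclidean_distances expects

    dist = euclidean_distances(counts[[x]],counts[[y]])

    print('Distance between document {} and document {}: {}'.format(x,y,dist))

[[0 1 1 0 1 0 1 0 0 1]

[0 1 1 1 0 1 0 0 1 0]

[1 0 0 0 0 0 0 1 0 0]]

Distance between document 0 and document 1: [[2.44948974]]

Distance between document 0 and document 2: [[2.64575131]]

Distance between document 1 and document 2: [[2.64575131]]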
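The loop over pairs is not strictly necessary. As a small sketch, euclidean_distances can be given the whole count matrix at once (it also accepts the sparse matrix directly) and returns every pairwise distance:

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.metrics.pairwise import euclidean_distances

corpus = [
    'UNC played Duke in basketball',
    'Duke lost the basketball game',
    'I ate a sandwich',
]

# The sparse count matrix can be passed in without densifying it first.
counts = CountVectorizer().fit_transform(corpus)

# Entry [i, j] is the distance between document i and document j.
distances = euclidean_distances(counts)
print(distances.round(4))
```

The result is a symmetric 3x3 matrix with zeros on the diagonal, so the three values printed by the loop appear in the upper triangle.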

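When the dimensionality gets too high, the computational cost grows, so some form of dimensionality reduction is usually needed.

Method one was already used above: converting all uppercase letters to lowercase.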
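The effect of lowercasing on the number of features can be checked with a short sketch that toggles the lowercase parameter of CountVectorizer (variable names are my own):

```python
from sklearn.feature_extraction.text import CountVectorizer

corpus = ['UNC played Duke in basketball', 'duke lost the basketball game']

# lowercase=True is the default, so 'Duke' and 'duke' share one column.
lowercased = CountVectorizer().fit(corpus)

# With lowercase=False the two spellings become separate features.
case_sensitive = CountVectorizer(lowercase=False).fit(corpus)

print(len(lowercased.vocabulary_))      # 8
print(len(case_sensitive.vocabulary_))  # 9
print(sorted(case_sensitive.vocabulary_))
```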

Method two is stop-word filtering, i.e. passing the stop_words parameter.

vectorizer = CountVectorizer(stop_words='english')

print(vectorizer.fit_transform(corpus).todense())

print(vectorizer.vocabulary_)

[[0 1 1 0 0 1 0 1]

[0 1 1 1 1 0 0 0]

[1 0 0 0 0 0 1 0]]

{'unc': 7, 'played': 5, 'duke': 2, 'basketball': 1, 'lost': 4, 'game': 3, 'ate': 0, 'sandwich': 6}
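To see exactly which terms the stop-word filter removed, one option is to compare the vocabularies with and without stop_words. A minimal sketch on the same three-document corpus:

```python
from sklearn.feature_extraction.text import CountVectorizer

corpus = [
    'UNC played Duke in basketball',
    'Duke lost the basketball game',
    'I ate a sandwich',
]

all_terms = set(CountVectorizer().fit(corpus).vocabulary_)
kept_terms = set(CountVectorizer(stop_words='english').fit(corpus).vocabulary_)

# Terms present without filtering but missing with it.
removed = all_terms - kept_terms
print(sorted(removed))  # ['in', 'the']
print(len(all_terms), len(kept_terms))  # 10 8
```

Note that "I" and "a" never appear in either vocabulary: the default tokenizer already skips single-character tokens, independently of stop-word filtering.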

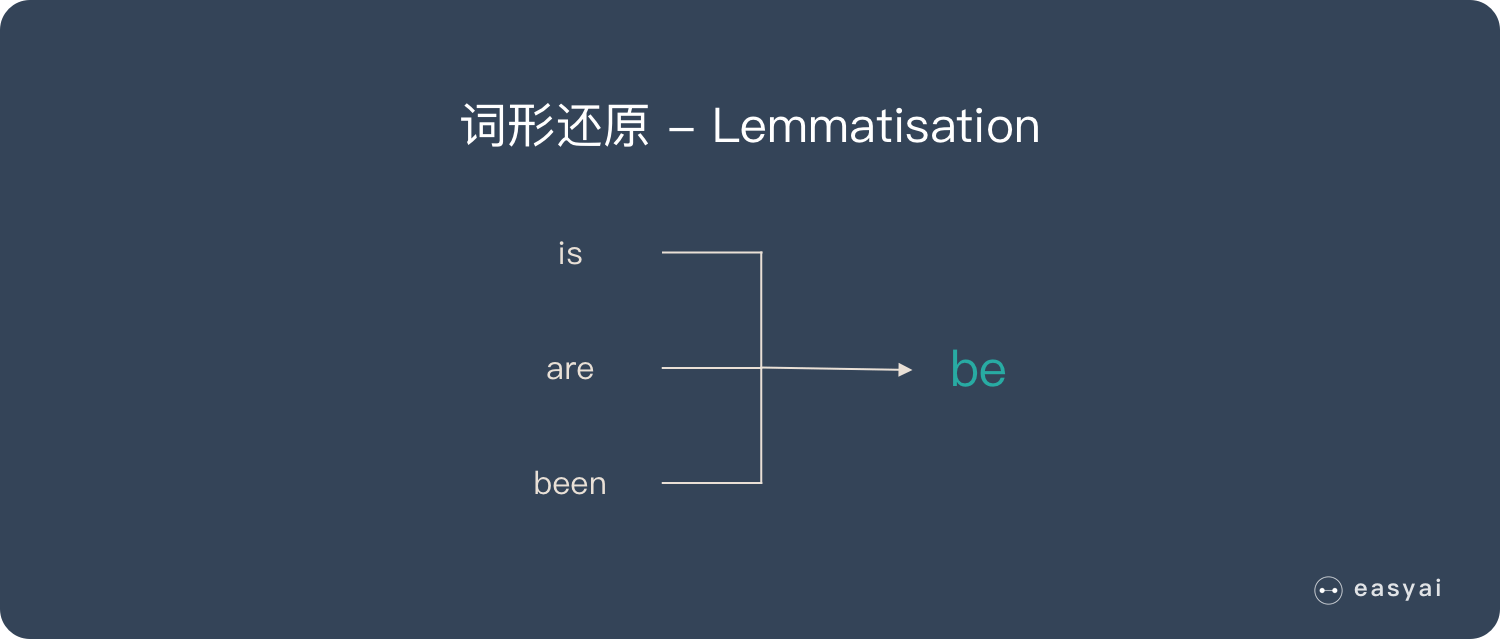

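Method three, still in the context of CountVectorizer, is stemming and lemmatization.

Stemming: strips suffixes from a word.

Lemmatization: reduces an inflected word form to its most basic form.

Main stemming algorithms: Porter, Snowball, Lancaster

Main lemmatization approach: the NLTK library together with its WordNet lemmatizer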
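The three stemmers named above are all available in NLTK and need no extra data download. The sketch below runs them on a few words taken from these notes; the word list is my own choice, and the stemmers may disagree with each other on some inputs.

```python
from nltk.stem import LancasterStemmer, PorterStemmer, SnowballStemmer

stemmers = {
    'Porter': PorterStemmer(),
    'Snowball': SnowballStemmer('english'),
    'Lancaster': LancasterStemmer(),
}

words = ['sandwiches', 'gathering', 'eaten']

# Apply every stemmer to every word and collect the results by stemmer name.
stems = {name: [stemmer.stem(word) for word in words]
         for name, stemmer in stemmers.items()}

for name, result in stems.items():
    print(name, result)
```

Stemming is purely rule-based, so it handles regular suffixes such as the plural in sandwiches but cannot relate irregular forms like ate and eaten; that is the gap lemmatization is meant to fill.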

In actual code, pay attention to the output of the following examples.

corpus = ['he ate the sandwiches ','Every sandwich was eaten by him']

vectorizer = CountVectorizer(binary=True,stop_words='english')

print(vectorizer.fit_transform(corpus).todense())

print(vectorizer.vocabulary_)

[[1 0 0 1]

[0 1 1 0]]

{'ate': 0, 'sandwiches': 3, 'sandwich': 2, 'eaten': 1}

corpus = ['he ate the sandwiches every day','Every sandwich was eaten by him']

vectorizer = CountVectorizer(binary=True,stop_words='english')

print(vectorizer.fit_transform(corpus).todense())

print(vectorizer.vocabulary_)

[[1 1 0 0 1]

[0 0 1 1 0]]

{'ate': 0, 'sandwiches': 4, 'day': 1, 'sandwich': 3, 'eaten': 2}

corpus = ['jack ate the sandwiches every day','Every sandwich was eaten by him']

vectorizer = CountVectorizer(binary=True,stop_words='english')

print(vectorizer.fit_transform(corpus).todense())

print(vectorizer.vocabulary_)

[[1 1 0 1 0 1]

[0 0 1 0 1 0]]

{'jack': 3, 'ate': 0, 'sandwiches': 5, 'day': 1, 'sandwich': 4, 'eaten': 2}

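As the outputs show, the vectorizer does more than drop duplicates: with stop_words='english' it also discards uninformative words such as he, the, every, and him, which shows how convenient CountVectorizer is. Note, however, that CountVectorizer performs no stemming or lemmatization here: sandwiches and sandwich, and likewise ate and eaten, still end up as separate features.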

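Next, let's use NLTK. The WordNet lemmatizer needs the WordNet corpus, which can be fetched once with nltk.download('wordnet').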

corpus = ['I am gathering ingredients for the sandwich.','There were many wizards at the gathering.']

from nltk.stem.wordnet import WordNetLemmatizer

lemmatizer = WordNetLemmatizer()

print(lemmatizer.lemmatize('gathering','v'))

print(lemmatizer.lemmatize('gathering','n'))

gather

gathering
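To tie this back to CountVectorizer, here is a minimal sketch that plugs word normalization into the vectorizer through a custom analyzer. It swaps in NLTK's PorterStemmer instead of the WordNet lemmatizer so that no corpus download is needed; stemmed_analyzer is a helper name I made up, not part of either library.

```python
from nltk.stem import PorterStemmer
from sklearn.feature_extraction.text import CountVectorizer

stemmer = PorterStemmer()

# Reuse sklearn's own preprocessing, tokenization and stop-word filtering.
base_analyzer = CountVectorizer(stop_words='english').build_analyzer()


def stemmed_analyzer(doc):
    """Tokenize with the default analyzer, then stem every token."""
    return [stemmer.stem(token) for token in base_analyzer(doc)]


corpus = ['he ate the sandwiches', 'Every sandwich was eaten by him']

vectorizer = CountVectorizer(analyzer=stemmed_analyzer, binary=True)
matrix = vectorizer.fit_transform(corpus).toarray()
vocabulary = vectorizer.vocabulary_

print(matrix)
print(vocabulary)
```

With this analyzer, sandwiches and sandwich share a single column, so the two documents finally have a feature in common. A lemmatizer could be substituted in the same place, but it would also need part-of-speech information, as the 'v' and 'n' arguments above suggest.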