cs231n (Part 1)

1.Nearest Neighbor Classifier

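Compute the distance (L1, L2, etc.) between an unclassified example (an image) and every labeled example in the training set, then assign the unclassified image the class of the training image with the smallest distance.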
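Here is a minimal NumPy sketch of this idea. It assumes each image has already been flattened into a row vector. The class name `NearestNeighbor` and its `train`/`predict` methods are illustrative choices, not a fixed API.

```python
import numpy as np

class NearestNeighbor:
    """1-NN classifier: memorizes the training set, predicts the label of the closest example."""

    def train(self, X, y):
        # X: (N, D) array, one flattened image per row; y: (N,) labels.
        # "Training" only stores the data.
        self.Xtr = np.asarray(X, dtype=np.float64)
        self.ytr = np.asarray(y)

    def predict(self, X, distance="l1"):
        X = np.asarray(X, dtype=np.float64)
        y_pred = np.empty(X.shape[0], dtype=self.ytr.dtype)
        for i in range(X.shape[0]):
            diff = self.Xtr - X[i]                      # broadcast against all N training rows
            if distance == "l1":
                d = np.sum(np.abs(diff), axis=1)        # L1: sum of absolute differences
            else:
                d = np.sqrt(np.sum(diff ** 2, axis=1))  # L2: Euclidean distance
            y_pred[i] = self.ytr[np.argmin(d)]          # label of the closest training example
        return y_pred

nn = NearestNeighbor()
nn.train(np.array([[0, 0], [10, 10]]), np.array([0, 1]))
print(nn.predict(np.array([[1, 2], [9, 8]])))  # [0 1]
```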

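This classifier is very sensitive to outliers: a single noisy or mislabeled training example can flip the prediction, so its results fluctuate a lot.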

2.K Nearest Neighbor Classifier

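To address this weakness of the Nearest Neighbor Classifier, we let the k training points closest to the query vote, and assign the majority class to the query. This makes the classifier more robust to outliers. k is a hyperparameter, and choosing it takes experience and a comparison of candidate values.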
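The sketch below shows k-NN voting, again assuming flattened images as rows and using L2 distance. `knn_predict` is a hypothetical helper name. The toy data puts one mislabeled point (an outlier) inside the class-0 cluster to show why voting helps.

```python
import numpy as np

def knn_predict(Xtr, ytr, X, k=3):
    """Label each row of X by majority vote among its k nearest training rows (L2 distance)."""
    Xtr = np.asarray(Xtr, dtype=np.float64)
    ytr = np.asarray(ytr)
    preds = []
    for x in np.asarray(X, dtype=np.float64):
        d = np.sqrt(np.sum((Xtr - x) ** 2, axis=1))
        nearest = np.argsort(d, kind="stable")[:k]        # indices of the k closest training rows
        labels, counts = np.unique(ytr[nearest], return_counts=True)
        preds.append(labels[np.argmax(counts)])           # on a tie, the smallest label wins
    return np.array(preds)

# Toy data: class 0 around (0.5, 0.5), class 1 around (5, 5),
# plus one mislabeled class-1 point sitting inside the class-0 cluster.
Xtr = np.array([[0, 0], [0, 1], [1, 0], [1, 1], [0.5, 0.5], [5, 5], [5, 6], [6, 5]])
ytr = np.array([0, 0, 0, 0, 1, 1, 1, 1])
query = np.array([[0.6, 0.6]])

print(knn_predict(Xtr, ytr, query, k=1))  # [1]  the single nearest point is the outlier
print(knn_predict(Xtr, ytr, query, k=3))  # [0]  two of the three nearest neighbors are class 0
```

With k=1 the outlier decides the answer. With k=3 it is outvoted, which is the robustness the paragraph above describes.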

3.Tuning hyperparameters with a validation set

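Split the training set into a training part and a validation part, and use the validation set to tune all hyperparameters. At the end, evaluate once on the test set and report that as the model's final performance.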

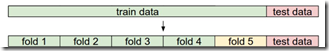
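Here is a sketch of holdout validation for choosing k. Toy 2-D Gaussian data stands in for real images. The data, the 80/20 split, and the candidate k values are all illustrative assumptions. Only after k is fixed would the model be evaluated, once, on the untouched test set (not shown).

```python
import numpy as np

def knn_predict(Xtr, ytr, X, k):
    # L2 distances from every row of X to every training row, shape (len(X), len(Xtr)).
    d = np.linalg.norm(X[:, None, :] - Xtr[None, :, :], axis=2)
    votes = ytr[np.argsort(d, axis=1)[:, :k]]           # labels of the k nearest neighbors
    # Majority vote; assumes labels are small non-negative integers.
    return np.array([np.bincount(v).argmax() for v in votes])

rng = np.random.default_rng(0)
# Hypothetical toy data: two Gaussian blobs standing in for flattened images.
X = np.vstack([rng.normal(0.0, 1.0, (100, 2)), rng.normal(2.5, 1.0, (100, 2))])
y = np.array([0] * 100 + [1] * 100)

# Hold out 20% of the *training* data as a validation set.
perm = rng.permutation(len(X))
val_idx, tr_idx = perm[:40], perm[40:]
X_tr, y_tr, X_val, y_val = X[tr_idx], y[tr_idx], X[val_idx], y[val_idx]

val_acc = {}
for k in [1, 3, 5, 7, 9]:
    val_acc[k] = float(np.mean(knn_predict(X_tr, y_tr, X_val, k) == y_val))

best_k = max(val_acc, key=val_acc.get)   # the k with the highest validation accuracy
print(val_acc, "best k:", best_k)
```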

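Cross-validation is a dedicated method for splitting the training set into training and validation parts. In k-fold cross-validation, the training set is divided evenly into k folds. In each round, k-1 folds are used for training and the remaining fold is used for validation. The validation results are averaged over the k rounds, and that average is used as the model's performance when tuning hyperparameters. Common choices are 3-fold, 5-fold, and 10-fold cross-validation. One drawback of cross-validation is its high computational cost, since the model is trained and evaluated k times for every hyperparameter setting. This makes it less suitable for training on large datasets.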
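The next sketch applies k-fold cross-validation to the same choice of k. Here `num_folds` is the number of folds (the "k" of k-fold) and `k` is the number of neighbors. The helper names and the random shuffle before splitting are assumptions of this sketch.

```python
import numpy as np

def knn_predict(Xtr, ytr, X, k):
    # L2 distances from every row of X to every training row, shape (len(X), len(Xtr)).
    d = np.linalg.norm(X[:, None, :] - Xtr[None, :, :], axis=2)
    votes = ytr[np.argsort(d, axis=1)[:, :k]]           # labels of the k nearest neighbors
    # Majority vote; assumes labels are small non-negative integers.
    return np.array([np.bincount(v).argmax() for v in votes])

def cross_validate(X, y, k, num_folds=5, seed=0):
    """Mean validation accuracy of k-NN over `num_folds` folds of (X, y)."""
    idx = np.random.default_rng(seed).permutation(len(X))
    folds = np.array_split(idx, num_folds)               # roughly equal-sized folds
    accs = []
    for i in range(num_folds):
        val_idx = folds[i]                               # fold i is the validation set
        tr_idx = np.concatenate([folds[j] for j in range(num_folds) if j != i])
        pred = knn_predict(X[tr_idx], y[tr_idx], X[val_idx], k)
        accs.append(np.mean(pred == y[val_idx]))
    return float(np.mean(accs))                          # average over the folds

rng = np.random.default_rng(1)
# Hypothetical toy data standing in for flattened images.
X = np.vstack([rng.normal(0.0, 1.0, (100, 2)), rng.normal(2.5, 1.0, (100, 2))])
y = np.array([0] * 100 + [1] * 100)

scores = {k: cross_validate(X, y, k) for k in [1, 3, 5, 7, 9]}
best_k = max(scores, key=scores.get)
print(scores, "best k:", best_k)
```

Each candidate k costs `num_folds` full k-NN evaluations. That multiplier is the computational overhead mentioned above.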

4.Pros and cons of the Nearest Neighbor Classifier

Pros: easy to implement and understand, and it takes no training time (there is essentially no training at all).

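Cons: at classification time, every query must be compared against every training example. When the training set is large, this takes a lot of computation time. In practice, we want recognition and classification at test time to be fast and efficient.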
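The following sketch shows where the test-time cost comes from. It computes all query-to-training L2 distances with the expansion ||x - t||² = ||x||² + ||t||² - 2x·t. This removes Python loops, but the work is still proportional to (#queries) × (#training examples) × (dimension), because every query is compared with every training example. The function name and the random data are illustrative assumptions.

```python
import numpy as np

def l2_distance_matrix(X, Xtr):
    """Pairwise L2 distances between query rows X (M, D) and training rows Xtr (N, D) -> (M, N)."""
    # ||x - t||^2 = ||x||^2 + ||t||^2 - 2 x.t, so the heavy part is one (M, D) @ (D, N) product.
    sq = (np.sum(X ** 2, axis=1)[:, None]
          + np.sum(Xtr ** 2, axis=1)[None, :]
          - 2.0 * X @ Xtr.T)
    return np.sqrt(np.maximum(sq, 0.0))   # clip tiny negatives caused by floating-point round-off

rng = np.random.default_rng(0)
Xtr = rng.random((1000, 3072))   # e.g. 1,000 training images of 32x32x3 pixels, flattened
X = rng.random((10, 3072))       # 10 query images
D = l2_distance_matrix(X, Xtr)
print(D.shape)                   # (10, 1000)
```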

As an aside, the computational complexity of the Nearest Neighbor classifier is an active area of research, and several Approximate Nearest Neighbor (ANN) algorithms and libraries exist that can accelerate the nearest neighbor lookup in a dataset (e.g. FLANN). These algorithms allow one to trade off the correctness of the nearest neighbor retrieval with its space/time complexity during retrieval, and usually rely on a pre-processing/indexing stage that involves building a kd-tree, or running the k-means algorithm.
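Below is a sketch of the kd-tree indexing stage mentioned above, written in plain NumPy. It performs *exact* nearest-neighbor search. ANN libraries such as FLANN typically gain speed by, for example, limiting how many tree nodes are visited, and this sketch does not attempt that. The function names and the nested-dict node layout are assumptions made for illustration. Also note that kd-trees tend to degrade toward brute-force search in high dimensions such as raw pixels.

```python
import numpy as np

def build_kdtree(points, indices=None, depth=0):
    """Recursively build a kd-tree over the rows of `points`; nodes are dicts, empty subtrees are None."""
    if indices is None:
        indices = np.arange(len(points))
    if len(indices) == 0:
        return None
    axis = depth % points.shape[1]                      # cycle through the dimensions
    order = indices[np.argsort(points[indices, axis])]
    mid = len(order) // 2                               # the median point becomes this node
    return {
        "index": order[mid],
        "axis": axis,
        "left": build_kdtree(points, order[:mid], depth + 1),       # coords <= split value
        "right": build_kdtree(points, order[mid + 1:], depth + 1),  # coords >= split value
    }

def kdtree_nearest(node, points, query, best=None):
    """Exact nearest-neighbor search; returns (index, distance) of the closest point."""
    if node is None:
        return best
    i, axis = node["index"], node["axis"]
    d = float(np.linalg.norm(points[i] - query))
    if best is None or d < best[1]:
        best = (i, d)
    diff = query[axis] - points[i][axis]
    near, far = (node["left"], node["right"]) if diff < 0 else (node["right"], node["left"])
    best = kdtree_nearest(near, points, query, best)
    # The far side can only hold a closer point if the splitting plane is nearer than the best so far.
    if abs(diff) < best[1]:
        best = kdtree_nearest(far, points, query, best)
    return best

rng = np.random.default_rng(0)
pts = rng.random((1000, 3))
tree = build_kdtree(pts)
q = rng.random(3)
print(kdtree_nearest(tree, pts, q))
```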

Deep neural networks are the opposite: training takes a long time, but once trained, they are very efficient to use. That is exactly the trade-off we want.

5.Applications

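The Nearest Neighbor Classifier may work well on some low-dimensional data, but it is of little practical use for real image classification. Why? Images are generally high-dimensional objects, and pixel-based distances on high-dimensional data (and images especially) can be very unintuitive.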

Whether two images end up close to each other depends much more on their general color distribution, or on the type of background, than on their semantic identity.
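Here is a tiny illustration of how unintuitive pixel distances can be, using hypothetical 8x8 grayscale "images". Shifting a checkerboard by one pixel leaves it visually the same pattern, yet in L2 distance the shifted copy is *farther* from the original than a flat gray image with no pattern at all. The images are synthetic assumptions chosen to make the arithmetic exact.

```python
import numpy as np

# Hypothetical 8x8 grayscale images with values in [0, 1].
checker = (np.indices((8, 8)).sum(axis=0) % 2).astype(float)  # checkerboard pattern
shifted = np.roll(checker, 1, axis=1)                         # same pattern moved one pixel right
gray = np.full((8, 8), 0.5)                                   # flat gray, same mean brightness

def l2(a, b):
    """L2 distance between two images, treating each as a flat vector of pixels."""
    return float(np.sqrt(np.sum((a - b) ** 2)))

print(l2(checker, shifted))  # 8.0  every pixel flips between 0 and 1
print(l2(checker, gray))     # 4.0  every pixel differs by only 0.5
```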

6.Summary

We saw that using L1 or L2 distances on raw pixel values is not adequate, since the distances correlate more strongly with the backgrounds and color distributions of images than with their semantic content.

浙公网安备 33010602011771号
