CDH 5.7 Hadoop Cluster Setup (Offline Installation)

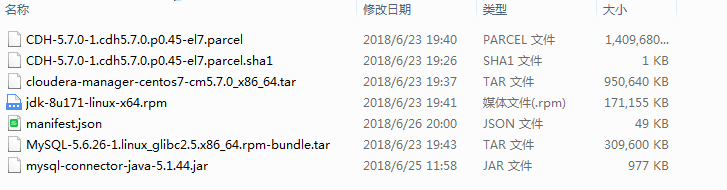

I. Preparing the installation media

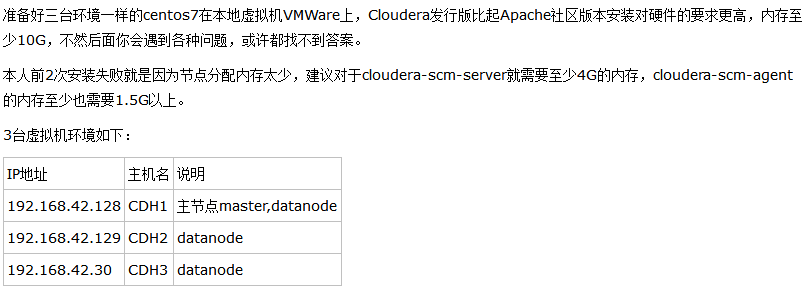

II. Preparing the operating system

III. Preparing the control node

1) Install the JDK (first uninstall the preinstalled OpenJDK)

[root@cdh1 ~]# java -version

[root@cdh1 ~]# rpm -qa | grep jdk

java-1.7.0-openjdk-1.7.0.75-2.5.4.2.el7_0.x86_64

java-1.7.0-openjdk-headless-1.7.0.75-2.5.4.2.el7_0.x86_64

[root@cdh1 ~]# yum -y remove java-1.7.0-openjdk-1.7.0.75-2.5.4.2.el7_0.x86_64

[root@cdh1 ~]# yum -y remove java-1.7.0-openjdk-headless-1.7.0.75-2.5.4.2.el7_0.x86_64

[root@cdh1 ~]# java -version

bash: /usr/bin/java: No such file or directory

[root@cdh1 ~]# rpm -ivh jdk-8u101-linux-x64.rpm

[root@cdh1 ~]# java -version

java version "1.8.0_101"

Java(TM) SE Runtime Environment (build 1.8.0_101-b13)

Java HotSpot(TM) 64-Bit Server VM (build 25.101-b13, mixed mode)
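The removal and installation steps above can be bundled into a small script to reuse on the other nodes. This is a minimal sketch, assuming the Oracle JDK RPM keeps the file name jdk-8u101-linux-x64.rpm and sits in the current directory; `openjdk_packages` and `swap_jdk` are hypothetical helper names, and the filter assumes OpenJDK packages are named java-<version>-openjdk* as in the rpm output above.

```shell
#!/usr/bin/env bash
# Sketch: replace the preinstalled OpenJDK with the Oracle JDK RPM (run as root).
# Assumption: the RPM file name below is the one used in this guide.
JDK_RPM="${JDK_RPM:-jdk-8u101-linux-x64.rpm}"

# Filter package names read on stdin, keeping only the OpenJDK ones.
openjdk_packages() {
  grep -E '^java-[0-9.]+-openjdk' || true
}

# Remove every installed OpenJDK package, then install the Oracle JDK and print its version.
swap_jdk() {
  local pkgs
  pkgs=$(rpm -qa | openjdk_packages)
  if [ -n "$pkgs" ]; then
    # Unquoted on purpose: each package name becomes its own argument.
    yum -y remove $pkgs
  fi
  rpm -ivh "$JDK_RPM" && java -version
}
```

Run `swap_jdk` as root on each node. Unlike the manual steps, it removes whatever OpenJDK packages `rpm -qa` reports instead of hard-coding the two version strings.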

2) Set NETWORKING, HOSTNAME and the hosts file (when editing, simply add the content below to the corresponding file)

[root@cdh1 ~]# vi /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=cdh1

[root@cdh1 ~]# vi /etc/hosts

127.0.0.1 localhost.cdh1

192.168.42.128 cdh1

192.168.42.129 cdh2

192.168.42.130 cdh3
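On CentOS 7, which this guide uses (note the systemctl commands), the host name is read from /etc/hostname rather than from HOSTNAME in /etc/sysconfig/network, so `hostnamectl set-hostname` is the more reliable way to set it. The minimal sketch below does that and appends the cluster entries to the hosts file only when they are missing, so it can be rerun safely. The IP/name pairs are the ones from this guide; `ensure_hosts_entries` and `set_node_name` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: set this node's host name and make sure every cluster node resolves.
# Assumption: the IP/host pairs below match your network (they are the ones used in this guide).
CLUSTER_HOSTS="192.168.42.128 cdh1
192.168.42.129 cdh2
192.168.42.130 cdh3"

# Append each "IP name" line to the hosts file unless an identical line is already present.
ensure_hosts_entries() {
  local hosts_file="$1" line
  while IFS= read -r line; do
    grep -qxF "$line" "$hosts_file" || printf '%s\n' "$line" >> "$hosts_file"
  done <<< "$CLUSTER_HOSTS"
}

# Set the host name persistently (stored in /etc/hostname on CentOS 7); run as root.
set_node_name() {
  hostnamectl set-hostname "$1"
}
```

On cdh1 this would be `set_node_name cdh1 && ensure_hosts_entries /etc/hosts`, and likewise with cdh2 and cdh3 on the data nodes.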

3) Disable SELinux, then reboot the machine

[root@cdh1 ~]# vi /etc/sysconfig/selinux

SELINUX=disabled

// reboot

[root@cdh1 ~]# reboot

// after the reboot, check that SELinux is off

[root@cdh1 ~]# sestatus -v

SELinux status: disabled

"disabled" means SELinux has been turned off.
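On CentOS 7, /etc/sysconfig/selinux is a symbolic link to /etc/selinux/config, and GNU `sed -i` replaces a symlink with a regular file, so a scripted version of this edit should target /etc/selinux/config directly. A minimal sketch; `disable_selinux_config` is a hypothetical helper name. `setenforce 0` is shown as an optional extra that stops enforcement immediately, before the reboot.

```shell
#!/usr/bin/env bash
# Sketch: disable SELinux persistently; it is fully off only after a reboot.
# Edit the real file, not the /etc/sysconfig/selinux symlink (sed -i would replace the link).
disable_selinux_config() {
  local cfg="${1:-/etc/selinux/config}"
  # Only the SELINUX= line matches; SELINUXTYPE= is left untouched.
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Optional, as root: switch the running system to permissive mode right away.
# setenforce 0
```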

4) Disable the firewall

[root@cdh1 ~]# systemctl stop firewalld

[root@cdh1 ~]# systemctl disable firewalld

rm '/etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service'

rm '/etc/systemd/system/basic.target.wants/firewalld.service'

[root@cdh1 ~]# systemctl status firewalld

firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled)

Active: inactive (dead)

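5) Configure passwordless SSH: on the control node, set up password-free login to each node

The following example sets up passwordless login from 192.168.42.128 to 192.168.42.129.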

[root@cdh1 /]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

/root/.ssh/id_rsa already exists.

Overwrite (y/n)? y

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is: 1d:e9:b4:ed:1d:e5:c6:a7:f3:23:ac:02:2b:8c:fc:ca root@cdh1

[root@cdh1 /]# ssh-copy-id 192.168.42.129

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.42.129's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '192.168.42.129'" and check to make sure that only the key(s) you wanted were added.
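The transcript above covers a single target host. A minimal sketch for all nodes, assuming it runs as root on cdh1 and that the host names resolve through /etc/hosts: it creates the key only when none exists yet (so there is no overwrite prompt), with an empty passphrase (`-N ''`), and then runs `ssh-copy-id` once per host. `ensure_ssh_key`, `distribute_key` and the `KEY_FILE` variable are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: passwordless SSH from the control node to every cluster node.
# Assumptions: run as root on cdh1; host names resolve via /etc/hosts.
KEY_FILE="${KEY_FILE:-$HOME/.ssh/id_rsa}"

# Create an RSA key pair with an empty passphrase, but only if none exists yet.
ensure_ssh_key() {
  [ -f "$KEY_FILE" ] && return 0
  mkdir -p "$(dirname "$KEY_FILE")" && chmod 700 "$(dirname "$KEY_FILE")"
  ssh-keygen -t rsa -N '' -f "$KEY_FILE"
}

# Install the public key for root on each host given as an argument.
distribute_key() {
  local host
  for host in "$@"; do
    ssh-copy-id -i "${KEY_FILE}.pub" "root@${host}" || echo "failed: ${host}" >&2
  done
}
```

Typical use is `ensure_ssh_key && distribute_key cdh1 cdh2 cdh3`; each host asks for its root password once. Including cdh1 itself lets `ssh cdh1` work without a password as well.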

6) Install MySQL

[root@cdh1 /]# rpm -qa | grep mariadb

mariadb-libs-5.5.41-2.el7_0.x86_64

[root@cdh1 /]# rpm -e --nodeps mariadb-libs-5.5.41-2.el7_0.x86_64

[root@cdh1 /]# tar -xvf MySQL-5.6.26-1.linux_glibc2.5.x86_64.rpm-bundle.tar // copy the MySQL RPM bundle to the server, then extract it

// Install the extracted RPMs with rpm -ivh in the following order:

- mysql-shared

- mysql-server

- mysql-client

- mysql-devel

[root@cdh1 /]# cp /usr/share/mysql/my-default.cnf /etc/my.cnf

[root@cdh1 /]# vi /etc/my.cnf // add the following settings to the file and save it

[mysqld]

default-storage-engine = innodb

innodb_file_per_table

collation-server = utf8_general_ci

init-connect = 'SET NAMES utf8'

character-set-server = utf8

[root@cdh1 /]# /usr/bin/mysql_install_db // initialize MySQL

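[root@cdh1 /]# service mysql restart // start MySQL

ERROR! MySQL server PID file could not be found!

Starting MySQL... SUCCESS!

The ERROR line is printed by the stop half of restart because the server was not running yet; SUCCESS confirms that MySQL started.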

[root@cdh1 /]# cat /root/.mysql_secret // show the initial MySQL root password

# The random password set for the root user at Fri Sep 22 11:13:25 2017 (local time): 9mp7uYFmgt6drdq3

[root@cdh1 /]# mysql -u root -p9mp7uYFmgt6drdq3 // log in and change the password

mysql> SET PASSWORD=PASSWORD('123456');

mysql>use mysql

mysql> update user set host='%' where user='root' and host='localhost'; // allow remote access to MySQL

Query OK, 1 row affected (0.05 sec)

Rows matched: 1 Changed: 1 Warnings: 0

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

[root@cdh1 /]# chkconfig mysql on // start MySQL at boot

[root@cdh1 /]# tar -zxvf mysql-connector-java-5.1.44.tar.gz // extract mysql-connector-java-5.1.44.tar.gz to obtain mysql-connector-java-5.1.44-bin.jar

[root@cdh1 /]# mkdir -p /usr/share/java // create the java directory on each node

[root@cdh1 /]# cp mysql-connector-java-5.1.44/mysql-connector-java-5.1.44-bin.jar /usr/share/java/mysql-connector-java.jar // copy the jar to /usr/share/java and rename it to mysql-connector-java.jar
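Because the connector has to be present on every node, the extract-and-rename steps are worth scripting. A minimal sketch, assuming the tarball name from this guide and that the archive contains one mysql-connector-java-*-bin.jar somewhere inside it; `install_connector` is a hypothetical helper. It uses `tar -zxf` (extract) and `mkdir -p`, so it does not fail when /usr/share/java already exists.

```shell
#!/usr/bin/env bash
# Sketch: unpack the MySQL JDBC driver and install it as <dest>/mysql-connector-java.jar.
# Assumption: the tarball name matches the one used in this guide.
install_connector() {
  local tarball="${1:-mysql-connector-java-5.1.44.tar.gz}"
  local dest_dir="${2:-/usr/share/java}"
  local work jar
  work=$(mktemp -d)
  tar -zxf "$tarball" -C "$work"   # -x extracts; -c would try to create an archive instead
  jar=$(find "$work" -name 'mysql-connector-java-*-bin.jar' | head -n 1)
  if [ -z "$jar" ]; then
    echo "no *-bin.jar found in $tarball" >&2
    rm -rf "$work"
    return 1
  fi
  mkdir -p "$dest_dir"
  cp "$jar" "$dest_dir/mysql-connector-java.jar"
  rm -rf "$work"
}
```

After running it on cdh1, the jar can be pushed to another node with, for example, `ssh cdh2 mkdir -p /usr/share/java && scp /usr/share/java/mysql-connector-java.jar cdh2:/usr/share/java/`.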

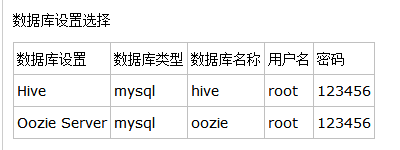

- Create the databases

create database hive DEFAULT CHARSET utf8 COLLATE utf8_general_ci;

Query OK, 1 row affected (0.00 sec)

create database amon DEFAULT CHARSET utf8 COLLATE utf8_general_ci;

Query OK, 1 row affected (0.00 sec)

create database hue DEFAULT CHARSET utf8 COLLATE utf8_general_ci;

Query OK, 1 row affected (0.00 sec)

create database monitor DEFAULT CHARSET utf8 COLLATE utf8_general_ci;

Query OK, 1 row affected (0.00 sec)

create database oozie DEFAULT CHARSET utf8 COLLATE utf8_general_ci;

Query OK, 1 row affected (0.00 sec)

grant all on *.* to root@"%" Identified by "123456";

// These databases are used in the database-selection step of the CDH installation wizard.
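The five statements differ only in the database name, so they can be generated in a loop and piped into the mysql client. A minimal sketch, assuming the database names and root password used in this guide; `create_db_sql` is a hypothetical helper, and `IF NOT EXISTS` is added so that rerunning it does not fail on databases that already exist.

```shell
#!/usr/bin/env bash
# Sketch: generate the CREATE DATABASE statements used by the CDH wizard.
# Database names are the ones created in this guide.
CM_DATABASES="hive amon hue monitor oozie"

create_db_sql() {
  local db
  for db in $CM_DATABASES; do
    printf 'CREATE DATABASE IF NOT EXISTS %s DEFAULT CHARSET utf8 COLLATE utf8_general_ci;\n' "$db"
  done
}

# Usage (assumes the MySQL root password set earlier in this guide):
# create_db_sql | mysql -uroot -p123456
```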

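7) Install Cloudera Manager

// Extract the CM tarball to the target directory on every server (or extract it on the master node and copy it with scp to the same directory on each node)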

[root@cdh1 ~]#mkdir /opt/cloudera-manager

[root@cdh1 ~]# tar -axvf cloudera-manager-centos7-cm5.7.0_x86_64.tar.gz -C /opt/cloudera-manager

// Create the cloudera-scm user (all nodes)

[root@cdh1 ~]# useradd --system --home=/opt/cloudera-manager/cm-5.7.0/run/cloudera-scm-server --no-create-home --shell=/bin/false --comment "Cloudera SCM User" cloudera-scm

// On the master node, create the local metadata directory for the Cloudera Manager server

[root@cdh1 ~]# mkdir /var/cloudera-scm-server

[root@cdh1 ~]# chown cloudera-scm:cloudera-scm /var/cloudera-scm-server

[root@cdh1 ~]# chown cloudera-scm:cloudera-scm /opt/cloudera-manager

// Point the Cloudera Manager agent (cloudera-scm-agent) on the worker nodes at the master node's server

[root@cdh1 ~]# vi /opt/cloudera-manager/cm-5.7.0/etc/cloudera-scm-agent/config.ini

Set server_host to the host name of the CMS host, i.e. cdh1.
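Because this edit has to be repeated on every node, it can also be done with `sed`. A minimal sketch, assuming config.ini contains a line beginning with `server_host=` (the setting changed by hand above); `set_server_host` is a hypothetical helper that returns non-zero when the line was not found.

```shell
#!/usr/bin/env bash
# Sketch: point a Cloudera Manager agent at the CM server host.
# Assumption: config.ini contains a line starting with "server_host=".
set_server_host() {
  local ini="$1" host="$2"
  sed -i "s/^server_host=.*/server_host=${host}/" "$ini"
  grep -q "^server_host=${host}\$" "$ini"   # fail if no such line existed
}

# Usage on every node:
# set_server_host /opt/cloudera-manager/cm-5.7.0/etc/cloudera-scm-agent/config.ini cdh1
```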

// On the master node, create the parcel-repo repository directory

[root@cdh1 ~]# mkdir -p /opt/cloudera/parcel-repo

[root@cdh1 ~]# chown cloudera-scm:cloudera-scm /opt/cloudera/parcel-repo

[root@cdh1 ~]# cp CDH-5.7.0-1.cdh5.7.0.p0.18-el7.parcel CDH-5.7.0-1.cdh5.7.0.p0.18-el7.parcel.sha manifest.json /opt/cloudera/parcel-repo

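Note: the downloaded checksum file CDH-5.7.0-1.cdh5.7.0.p0.18-el7.parcel.sha1 must be renamed by dropping the trailing 1 from its suffix, i.e. to CDH-5.7.0-1.cdh5.7.0.p0.18-el7.parcel.sha.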
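Besides the rename, it can be worth confirming that the copied parcel is intact before Cloudera Manager tries to distribute it. A minimal sketch, assuming the first field of the checksum file is the parcel's SHA-1 hex digest; `verify_parcel` is a hypothetical helper that renames a leftover .sha1 file to .sha and compares the digest with `sha1sum`.

```shell
#!/usr/bin/env bash
# Sketch: rename <parcel>.sha1 to <parcel>.sha and verify the parcel against it.
# Assumption: the first whitespace-separated field of the checksum file is the SHA-1 hex digest.
verify_parcel() {
  local parcel="$1" expected actual
  if [ -f "${parcel}.sha1" ] && [ ! -f "${parcel}.sha" ]; then
    mv "${parcel}.sha1" "${parcel}.sha"
  fi
  expected=$(awk '{print tolower($1); exit}' "${parcel}.sha")
  actual=$(sha1sum "$parcel" | awk '{print $1}')
  if [ "$expected" != "$actual" ]; then
    echo "checksum mismatch for $parcel" >&2
    return 1
  fi
}

# Usage:
# verify_parcel /opt/cloudera/parcel-repo/CDH-5.7.0-1.cdh5.7.0.p0.18-el7.parcel
```

A mismatch usually means the download or copy was incomplete, so the parcel should be fetched again.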

// Create the parcels directory on all nodes

[root@cdh1 ~]# mkdir -p /opt/cloudera/parcels

[root@cdh1 ~]# chown cloudera-scm:cloudera-scm /opt/cloudera/parcels

Explanation: Cloudera Manager takes the CDH parcels from /opt/cloudera/parcel-repo on the master node, then distributes, unpacks and activates them in /opt/cloudera/parcels on every node.

// Initialize the database with scm_prepare_database.sh (on the master node)

[root@cdh1 ~]# /opt/cloudera-manager/cm-5.7.0/share/cmf/schema/scm_prepare_database.sh mysql -hcdh1 -uroot -p123456 --scm-host cdh1 scmdbn scmdbu scmdbp

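Explanation: this script creates and configures the database that CMS needs. The arguments are:

mysql: the database type is MySQL; if Oracle was used during installation, this argument becomes oracle.

-hcdh1: the database runs on host cdh1, i.e. the master node.

-uroot: connect to MySQL as root. -p123456: the MySQL root password (123456 in this guide).

--scm-host cdh1: the CMS host, usually the same host that runs MySQL. The last three arguments are the database name, the database user name and the database password.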

If the script fails with:

ERROR com.cloudera.enterprise.dbutil.DbProvisioner - Exception when creating/dropping database with user 'root' and jdbc url 'jdbc:mysql://localhost/?useUnicode=true&characterEncoding=UTF-8'

java.sql.SQLException: Access denied for user 'root'@'cdh1' (using password: YES)

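see http://forum.spring.io/forum/spring-projects/web/57254-java-sql-sqlexception-access-denied-for-user-root-localhost-using-password-yes

and run the following statements in the MySQL client, after switching to the mysql database with use mysql: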

update user set PASSWORD=PASSWORD('123456') where user='root';

GRANT ALL PRIVILEGES ON *.* TO 'root'@'cdh1' IDENTIFIED BY '123456' WITH GRANT OPTION;

FLUSH PRIVILEGES;

// Start the Cloudera Manager server on the master node

[root@cdh1 ~]# cp /opt/cloudera-manager/cm-5.7.0/etc/init.d/cloudera-scm-server /etc/init.d/cloudera-scm-server

[root@cdh1 ~]# chkconfig cloudera-scm-server on

[root@cdh1 ~]# vi /etc/init.d/cloudera-scm-server

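Change the default path in the line CMF_DEFAULTS=${CMF_DEFAULTS:-/etc/default} to /opt/cloudera-manager/cm-5.7.0/etc/default, i.e. CMF_DEFAULTS=${CMF_DEFAULTS:-/opt/cloudera-manager/cm-5.7.0/etc/default}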
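The same edit is needed in both init scripts (server and agent) and on every node, so a scripted version may help. A minimal sketch, assuming the copied script still contains the unmodified line `CMF_DEFAULTS=${CMF_DEFAULTS:-/etc/default}`; `set_cmf_defaults` is a hypothetical helper. Keeping the `${CMF_DEFAULTS:-...}` form means an exported CMF_DEFAULTS variable can still override the path.

```shell
#!/usr/bin/env bash
# Sketch: point a copied CM init script at the tarball's etc/default directory.
# Assumption: the script contains the stock line CMF_DEFAULTS=${CMF_DEFAULTS:-/etc/default}.
set_cmf_defaults() {
  local script="$1" dir="$2"
  # "|" is the sed delimiter so the slashes in the paths need no escaping; \$ matches a literal "$".
  sed -i 's|^CMF_DEFAULTS=\${CMF_DEFAULTS:-/etc/default}|CMF_DEFAULTS=${CMF_DEFAULTS:-'"$dir"'}|' "$script"
  grep -qF 'CMF_DEFAULTS=${CMF_DEFAULTS:-'"$dir"'}' "$script"   # fail if nothing was replaced
}

# Usage:
# set_cmf_defaults /etc/init.d/cloudera-scm-server /opt/cloudera-manager/cm-5.7.0/etc/default
# set_cmf_defaults /etc/init.d/cloudera-scm-agent  /opt/cloudera-manager/cm-5.7.0/etc/default
```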

[root@cdh1 ~]# service cloudera-scm-server start
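The server needs some time after `service cloudera-scm-server start` before the web console on port 7180 (used in step V) accepts connections. A minimal sketch that polls the port through bash's /dev/tcp redirection, assuming bash was built with network redirections and that `timeout` from coreutils is available; `wait_for_port` and its defaults (60 attempts, 5 seconds apart) are hypothetical choices.

```shell
#!/usr/bin/env bash
# Sketch: wait until a TCP port accepts connections, using bash's /dev/tcp pseudo-device.
# Returns 0 as soon as a connection succeeds, 1 after the given number of attempts.
wait_for_port() {
  local host="$1" port="$2" attempts="${3:-60}" i
  for ((i = 1; i <= attempts; i++)); do
    # A child bash opens the connection and exits at once; timeout stops it hanging on unreachable hosts.
    if timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
      return 0
    fi
    if ((i < attempts)); then
      sleep 5
    fi
  done
  return 1
}

# Usage: wait_for_port cdh1 7180 && echo "Cloudera Manager web console is up"
```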

// To make sure cloudera-scm-server also starts every time the server reboots, add the command service cloudera-scm-server restart to the boot script /etc/rc.local
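On CentOS 7, /etc/rc.local is a link to /etc/rc.d/rc.local, and that file is only run at boot when it is executable, so a `chmod +x` belongs to this step. A minimal sketch that appends the command once and makes the file executable; `add_boot_command` is a hypothetical helper, and the same call works for the cloudera-scm-agent line used on every node.

```shell
#!/usr/bin/env bash
# Sketch: add a boot-time command to rc.local exactly once and make the file executable.
add_boot_command() {
  local cmd="$1" rc="${2:-/etc/rc.d/rc.local}"
  grep -qxF "$cmd" "$rc" 2>/dev/null || printf '%s\n' "$cmd" >> "$rc"
  chmod +x "$rc"   # CentOS 7 skips rc.local at boot when it is not executable
}

# Usage (as root):
# add_boot_command 'service cloudera-scm-server restart'   # on cdh1
# add_boot_command 'service cloudera-scm-agent restart'    # on every node
```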

// Start cloudera-scm-agent on all nodes

[root@cdhX ~]# mkdir /opt/cloudera-manager/cm-5.7.0/run/cloudera-scm-agent

[root@cdhX ~]# cp /opt/cloudera-manager/cm-5.7.0/etc/init.d/cloudera-scm-agent /etc/init.d/cloudera-scm-agent

[root@cdhX ~]# chkconfig cloudera-scm-agent on

[root@cdhX ~]# vi /etc/init.d/cloudera-scm-agent

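Change the default path in the line CMF_DEFAULTS=${CMF_DEFAULTS:-/etc/default} to /opt/cloudera-manager/cm-5.7.0/etc/default, i.e. CMF_DEFAULTS=${CMF_DEFAULTS:-/opt/cloudera-manager/cm-5.7.0/etc/default}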

[root@cdhX ~]# service cloudera-scm-agent start

// To make sure cloudera-scm-agent also starts every time the server reboots, add the command service cloudera-scm-agent restart to the boot script /etc/rc.local

IV. Preparing the data nodes

1) Install the JDK (first uninstall the preinstalled OpenJDK)

[root@cdh2 ~]# java -version

[root@cdh2 ~]# rpm -qa | grep jdk

java-1.7.0-openjdk-1.7.0.75-2.5.4.2.el7_0.x86_64

java-1.7.0-openjdk-headless-1.7.0.75-2.5.4.2.el7_0.x86_64

[root@cdh2 ~]# yum -y remove java-1.7.0-openjdk-1.7.0.75-2.5.4.2.el7_0.x86_64

[root@cdh2 ~]# yum -y remove java-1.7.0-openjdk-headless-1.7.0.75-2.5.4.2.el7_0.x86_64

[root@cdh2 ~]# java -version

bash: /usr/bin/java: No such file or directory

[root@cdh2 ~]# rpm -ivh jdk-8u101-linux-x64.rpm

[root@cdh2 ~]# java -version

java version "1.8.0_101"

Java(TM) SE Runtime Environment (build 1.8.0_101-b13)

Java HotSpot(TM) 64-Bit Server VM (build 25.101-b13, mixed mode)

2) Set NETWORKING, HOSTNAME and the hosts file (when editing, simply add the content below to the corresponding file)

[root@cdh2 ~]# vi /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=cdh2

[root@cdh2 ~]# vi /etc/hosts

127.0.0.1 localhost.cdh2

192.168.42.128 cdh1

192.168.42.129 cdh2

192.168.42.130 cdh3

3) Disable SELinux, then reboot the machine

[root@cdh2 ~]# vi /etc/sysconfig/selinux

SELINUX=disabled

[root@cdh2 ~]# reboot

[root@cdh2 ~]# sestatus -v

SELinux status: disabled

"disabled" means SELinux has been turned off.

4) Disable the firewall

[root@cdh2 ~]# systemctl stop firewalld

[root@cdh2 ~]# systemctl disable firewalld

rm '/etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service'

rm '/etc/systemd/system/basic.target.wants/firewalld.service'

[root@cdh2 ~]# systemctl status firewalld

firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled)

Active: inactive (dead)

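5) Install Cloudera Manager

// Extract the CM tarball to the target directory on every server (or extract it on the master node and copy it with scp to the same directory on each node)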

[root@cdh2 ~]#mkdir /opt/cloudera-manager

[root@cdh2 ~]# tar -axvf cloudera-manager-centos7-cm5.7.2_x86_64.tar.gz -C /opt/cloudera-manager

// Create the cloudera-scm user (all nodes)

[root@cdh2 ~]# useradd --system --home=/opt/cloudera-manager/cm-5.7.2/run/cloudera-scm-server --no-create-home --shell=/bin/false --comment "Cloudera SCM User" cloudera-scm

// Point the Cloudera Manager agent (cloudera-scm-agent) on the worker nodes at the master node's server

[root@cdh2 ~]# vi /opt/cloudera-manager/cm-5.7.2/etc/cloudera-scm-agent/config.ini

Set server_host to the host name of the CMS host, i.e. cdh1.

// Create the parcels directory on all nodes

[root@cdh2 ~]# mkdir -p /opt/cloudera/parcels

[root@cdh2 ~]# chown cloudera-scm:cloudera-scm /opt/cloudera/parcels

Explanation: Cloudera Manager takes the CDH parcels from /opt/cloudera/parcel-repo on the master node, then distributes, unpacks and activates them in /opt/cloudera/parcels on every node.

// Start cloudera-scm-agent on all nodes

[root@cdh2 ~]# mkdir /opt/cloudera-manager/cm-5.7.2/run/cloudera-scm-agent

[root@cdh2 ~]# cp /opt/cloudera-manager/cm-5.7.2/etc/init.d/cloudera-scm-agent /etc/init.d/cloudera-scm-agent

[root@cdh2 ~]# chkconfig cloudera-scm-agent on

[root@cdh2 ~]# vi /etc/init.d/cloudera-scm-agent

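Change the default path in the line CMF_DEFAULTS=${CMF_DEFAULTS:-/etc/default} to /opt/cloudera-manager/cm-5.7.2/etc/default, i.e. CMF_DEFAULTS=${CMF_DEFAULTS:-/opt/cloudera-manager/cm-5.7.2/etc/default}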

[root@cdh2 ~]# service cloudera-scm-agent start

// To make sure cloudera-scm-agent also starts every time the server reboots, add the command service cloudera-scm-agent restart to the boot script /etc/rc.local

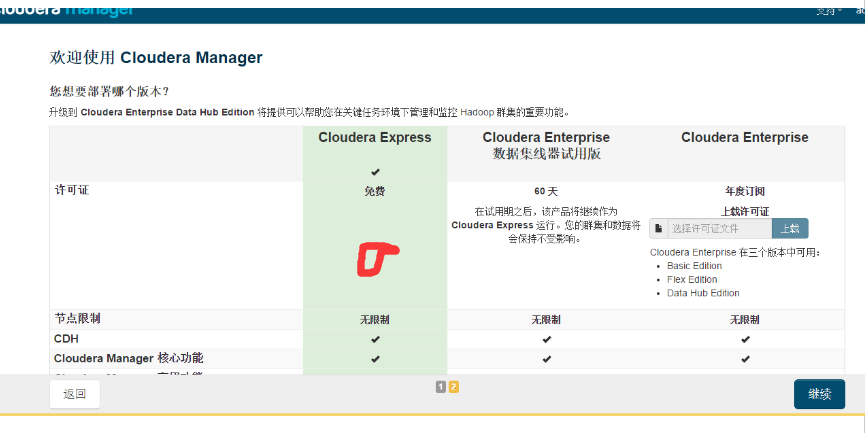

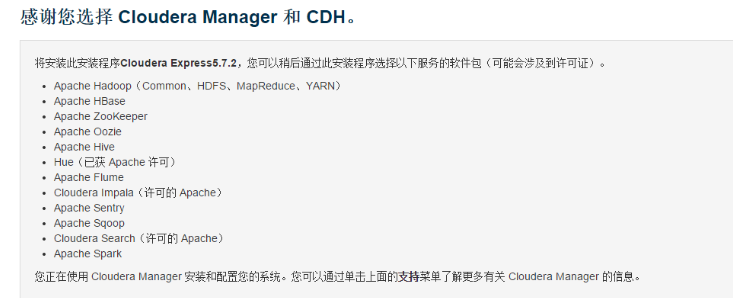

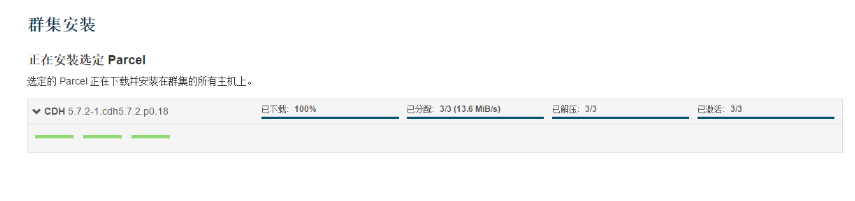

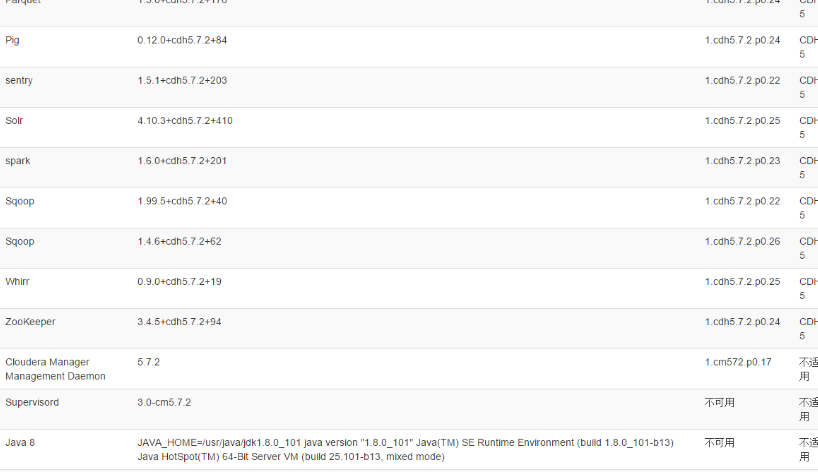

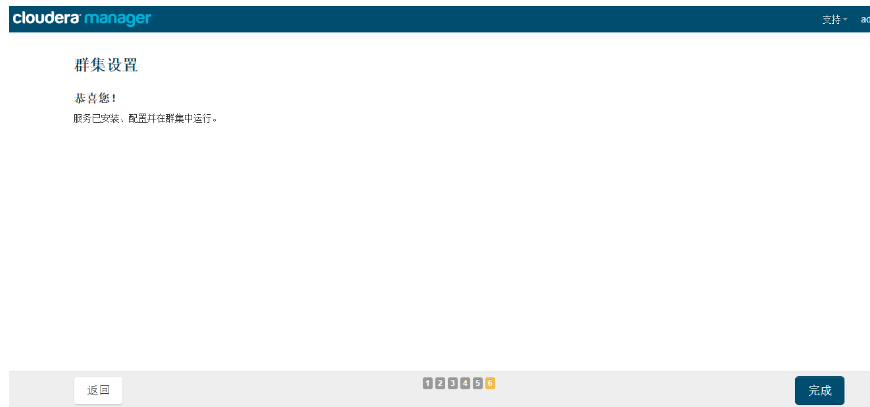

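V. Installing CDH through the web browser

1) Open 192.168.42.128:7180 in a browser and log in; both the user name and the password are admin.

2) Complete the installation by following the steps shown in the screenshots.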

VI. Testing

Run the following example program, which estimates Pi, on one of the machines in the cluster:

sudo -u hdfs hadoop jar /opt/cloudera/parcels/CDH/lib/hadoop-mapreduce/hadoop-mapreduce-examples.jar pi 10 100
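The pi example estimates π with a quasi-Monte Carlo method: points are placed in the unit square and the share that falls inside the inscribed circle of radius 0.5 (area π/4) gives π ≈ 4 · inside / total. The two arguments are the number of map tasks and the number of samples per map, so this run uses 10 × 100 = 1000 points. The awk sketch below illustrates the idea on a single machine with a two-dimensional Halton sequence (bases 2 and 3); it is an illustration under that assumption, not the Hadoop example's code, and its estimate need not match the cluster's. `estimate_pi` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: single-machine quasi-Monte Carlo estimate of pi using a 2-D Halton sequence.
estimate_pi() {
  local n="${1:-1000}"
  awk -v n="$n" '
    # Radical inverse of index i in base b: the i-th element of the Halton sequence.
    function halton(i, b,    f, r) {
      f = 1; r = 0
      while (i > 0) { f /= b; r += f * (i % b); i = int(i / b) }
      return r
    }
    BEGIN {
      inside = 0
      for (k = 1; k <= n; k++) {
        x = halton(k, 2) - 0.5   # center the unit square on the origin
        y = halton(k, 3) - 0.5
        if (x * x + y * y <= 0.25) inside++   # inside the circle of radius 0.5
      }
      printf "%.6f\n", 4 * inside / n
    }'
}

# Usage: estimate_pi 1000
```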

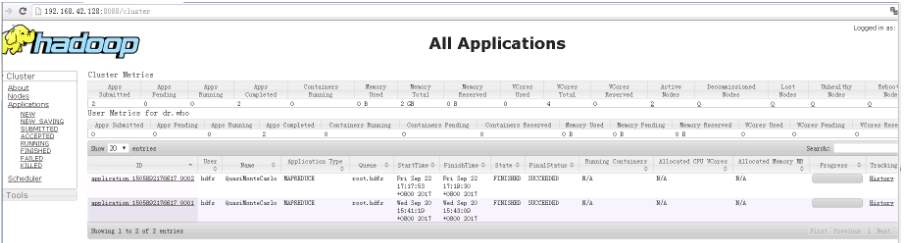

The MapReduce execution status can also be viewed in the YARN web UI.

The terminal output during the MapReduce run is as follows:

Number of Maps = 10

Samples per Map = 100

Wrote input for Map #0

Wrote input for Map #1

Wrote input for Map #2

Wrote input for Map #3

Wrote input for Map #4

Wrote input for Map #5

Wrote input for Map #6

Wrote input for Map #7

Wrote input for Map #8

Wrote input for Map #9

Starting Job

17/09/22 17:17:50 INFO client.RMProxy: Connecting to ResourceManager at cdh1/192.168.42.128:8032

17/09/22 17:17:52 INFO input.FileInputFormat: Total input paths to process : 10

17/09/22 17:17:52 INFO mapreduce.JobSubmitter: number of splits:10

17/09/22 17:17:53 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1505892176617_0002

17/09/22 17:17:53 INFO impl.YarnClientImpl: Submitted application application_1505892176617_0002

17/09/22 17:17:54 INFO mapreduce.Job: The url to track the job: http://cdh1:8088/proxy/application_1505892176617_0002/

17/09/22 17:17:54 INFO mapreduce.Job: Running job: job_1505892176617_0002

17/09/22 17:18:07 INFO mapreduce.Job: Job job_1505892176617_0002 running in uber mode : false

17/09/22 17:18:07 INFO mapreduce.Job: map 0% reduce 0%

17/09/22 17:18:22 INFO mapreduce.Job: map 10% reduce 0%

17/09/22 17:18:29 INFO mapreduce.Job: map 20% reduce 0%

17/09/22 17:18:37 INFO mapreduce.Job: map 30% reduce 0%

17/09/22 17:18:43 INFO mapreduce.Job: map 40% reduce 0%

17/09/22 17:18:49 INFO mapreduce.Job: map 50% reduce 0%

17/09/22 17:18:56 INFO mapreduce.Job: map 60% reduce 0%

17/09/22 17:19:02 INFO mapreduce.Job: map 70% reduce 0%

17/09/22 17:19:10 INFO mapreduce.Job: map 80% reduce 0%

17/09/22 17:19:16 INFO mapreduce.Job: map 90% reduce 0%

17/09/22 17:19:24 INFO mapreduce.Job: map 100% reduce 0%

17/09/22 17:19:30 INFO mapreduce.Job: map 100% reduce 100%

17/09/22 17:19:32 INFO mapreduce.Job: Job job_1505892176617_0002 completed successfully

17/09/22 17:19:32 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=91

FILE: Number of bytes written=1308980

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=2590

HDFS: Number of bytes written=215

HDFS: Number of read operations=43

HDFS: Number of large read operations=0

HDFS: Number of write operations=3

Job Counters

Launched map tasks=10

Launched reduce tasks=1

Data-local map tasks=10

Total time spent by all maps in occupied slots (ms)=58972

Total time spent by all reduces in occupied slots (ms)=5766

Total time spent by all map tasks (ms)=58972

Total time spent by all reduce tasks (ms)=5766

Total vcore-seconds taken by all map tasks=58972

Total vcore-seconds taken by all reduce tasks=5766

Total megabyte-seconds taken by all map tasks=60387328

Total megabyte-seconds taken by all reduce tasks=5904384

Map-Reduce Framework

Map input records=10

Map output records=20

Map output bytes=180

Map output materialized bytes=340

Input split bytes=1410

Combine input records=0

Combine output records=0

Reduce input groups=2

Reduce shuffle bytes=340

Reduce input records=20

Reduce output records=0

Spilled Records=40

Shuffled Maps =10

Failed Shuffles=0

Merged Map outputs=10

GC time elapsed (ms)=1509

CPU time spent (ms)=10760

Physical memory (bytes) snapshot=4541886464

Virtual memory (bytes) snapshot=30556168192

Total committed heap usage (bytes)=3937402880

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=1180

File Output Format Counters

Bytes Written=97

Job Finished in 102.286 seconds

Estimated value of Pi is 3.14800000000000000000
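Assuming the example uses the usual estimator π ≈ 4 · inside / total, the printed value can be checked by hand: with 10 maps × 100 samples there were 1000 points, so 3.148 corresponds to 787 of them landing inside the circle.

```latex
\hat{\pi} = 4 \cdot \frac{N_{\text{inside}}}{N_{\text{total}}}, \qquad
N_{\text{total}} = 10 \times 100 = 1000, \qquad
4 \cdot \frac{787}{1000} = 3.148
```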

Every chapter took a great deal of effort; please do not plagiarize.

Zhejiang Public Security network filing No. 33010602011771
