I. Using the BeautifulSoup library and its parameters

1. Introduction to Beautiful Soup

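Simply put, Beautiful Soup is a Python library whose main job is extracting data from web pages. The official description is as follows:

Beautiful Soup provides a few simple, Pythonic functions for navigating, searching and modifying a parse tree.

It is a toolkit that parses a document and gives you the data you want to extract. Because it is simple, a complete application takes very little code.

Beautiful Soup automatically converts incoming documents to Unicode and outgoing documents to UTF-8. You don't have to think about encodings unless the document does not specify one and Beautiful Soup cannot detect it. In that case, you only need to tell it the original encoding.

Beautiful Soup sits on top of popular Python parsers such as lxml and html5lib. This lets you try out different parsing strategies or trade flexibility for speed.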
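Below is a minimal sketch of that last case. It assumes a page that is GBK-encoded and does not declare its encoding; the sample bytes are made up for illustration. Passing `from_encoding` tells Beautiful Soup the original encoding. The sketch uses the built-in `html.parser` so it runs without lxml.

```python
from bs4 import BeautifulSoup

# Hypothetical page bytes: GBK-encoded, with no <meta charset> declaring it
data = "<html><head><title>哈哈</title></head></html>".encode("gbk")

# Tell Beautiful Soup the original encoding explicitly
soup = BeautifulSoup(data, "html.parser", from_encoding="gbk")

print(soup.title.string)       # 哈哈 (decoded to a Unicode str)
print(soup.original_encoding)  # the encoding Beautiful Soup used to decode the bytes
```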

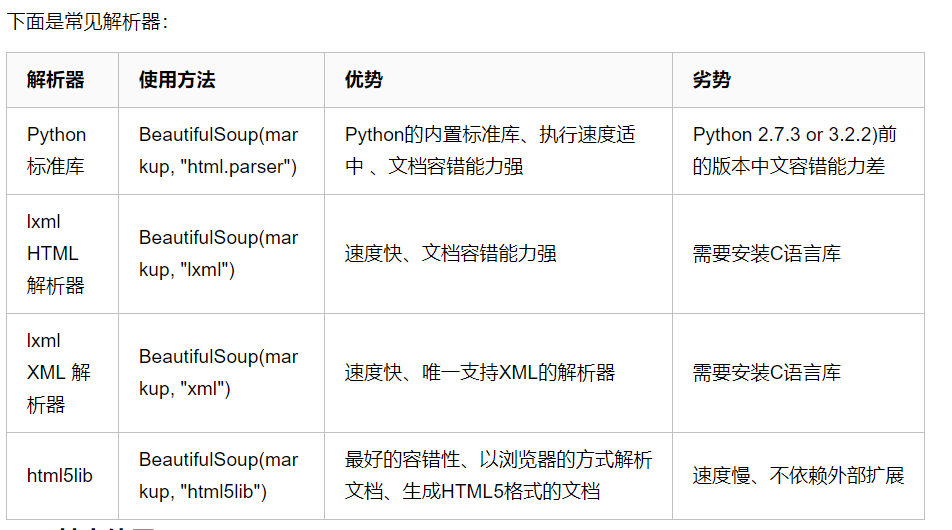

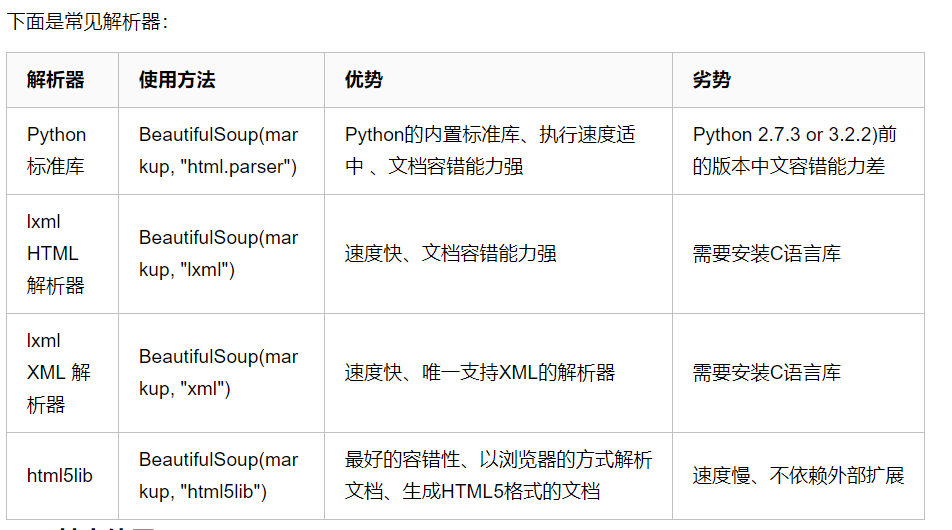

2. Common parsers

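Beautiful Soup supports the HTML parser in the Python standard library (html.parser) as well as several third-party parsers. If you don't install a third-party parser, Python's built-in parser is used.

The lxml parser is more powerful and faster, and is recommended. Install it with pip install lxml.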
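The parser is chosen by the second argument to `BeautifulSoup`. Here is a small sketch that uses lxml when it is installed and falls back to `html.parser` otherwise. `make_soup` is a hypothetical helper name. Beautiful Soup raises `FeatureNotFound` when the requested parser is missing. Parsers can also repair broken markup differently; the last line shows how `html.parser` handles a stray closing tag.

```python
from bs4 import BeautifulSoup, FeatureNotFound


def make_soup(markup):
    """Hypothetical helper: prefer lxml, fall back to Python's built-in html.parser."""
    try:
        return BeautifulSoup(markup, "lxml")
    except FeatureNotFound:
        # lxml is not installed, so use the parser that ships with Python
        return BeautifulSoup(markup, "html.parser")


soup = make_soup("<p>hello</p>")
print(soup.p.string)  # hello

# Invalid markup: html.parser simply drops the unmatched </p>
print(BeautifulSoup("<a></p>", "html.parser"))  # <a></a>
```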

3. Basic usage

```python
from bs4 import BeautifulSoup

html = '''
<html><head><title>哈哈哈哈</title></head>
<body>
<p class="title"><b>The Dormouse's story</b></p>
<p class="story">Once upon a 威威time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" id="link1">你好</a>,
<a href="http://example.com/lacie" class="sister" id="link2">天是</a> and
<a href="http://example.com/tillie" class="sister" id="link3">草泥</a>;
and they lived at the bottom of a well.</p>
<p class="story">...</p>
'''

soup = BeautifulSoup(html, 'lxml')  # create the BeautifulSoup object

print(soup.prettify())  # pretty-print the document as indented HTML
print(soup.title)  # the whole <title> tag, markup included
print(soup.title.name)  # the tag's name: title
print(soup.title.string)  # the tag's text; same as soup.title.text here because <title> holds a single string
print(soup.title.parent.name)  # name of the parent tag: head
print(soup.p)  # the first <p> tag
print(soup.p["class"])  # class of the first <p>: ['title'] (class is multi-valued, so it is a list)
print(soup.a)  # the first <a> tag
print(soup.find_all('a'))  # a list of all <a> tags
print(soup.find(id='link3'))  # the tag whose id is link3
print(soup.p.attrs)  # all attributes of the first <p>, as a dict
print(soup.p.attrs['class'])  # class of the first <p>
print(soup.find_all('p', class_='title'))  # all <p> tags whose class is title

# Get the URL of every link
for link in soup.find_all('a'):
    print(link.get('href'))  # the link URL

print(soup.get_text())  # all text in the document
```
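Building on the loop above, a common pattern is collecting links into a list. This sketch uses a cut-down version of the sample document (the third link deliberately has no href) and `html.parser`. `tag.get('href')` returns None for a tag without that attribute. Passing `href=True` to `find_all()` keeps only tags that have the attribute.

```python
from bs4 import BeautifulSoup

html = '''
<p class="story">
<a href="http://example.com/elsie" class="sister" id="link1">Elsie</a>,
<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and
<a class="sister" id="link3">Tillie</a>
</p>
'''

soup = BeautifulSoup(html, "html.parser")

# (text, URL) pairs for every <a>; .get() gives None when href is missing
links = [(a.get_text(), a.get("href")) for a in soup.find_all("a")]
for text, url in links:
    print(text, url)

# Only the tags that actually have an href attribute
urls = [a["href"] for a in soup.find_all("a", href=True)]
print(urls)
```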

4. Tag selectors

```python
from bs4 import BeautifulSoup

html = '''
<html><head><title>哈哈哈哈</title></head>
<body>
<p class="title"><b>哈哈哈哈哈哈哈哈哈</b></p>
<p class="story">Once upon a 威威time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" id="link1">你好</a>,
<a href="http://example.com/lacie" class="sister" id="link2">天是</a> and
<a href="http://example.com/tillie" class="sister" id="link3">草泥</a>;
and they lived at the bottom of a well.</p>
<p class="story">666666666666666666666</p>
'''

soup = BeautifulSoup(html, 'lxml')  # create the BeautifulSoup object

print(soup.prettify())
print(soup.title)  # <title>哈哈哈哈</title>
print(type(soup.title))  # <class 'bs4.element.Tag'>
print(soup.head)  # <head><title>哈哈哈哈</title></head>
print(soup.p)  # <p class="title"><b>哈哈哈哈哈哈哈哈哈</b></p>
```

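Writing soup.tagname (for example soup.title) returns that tag directly.

Note one catch. If the document contains several tags with that name, this shortcut returns only the first one. For example, the document above has several p tags, but soup.p returns only the first.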
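The following sketch confirms the behaviour described above, using `html.parser`. `soup.p` is shorthand for `soup.find('p')`, so it returns only the first match. Use `find_all()` to get all of them.

```python
from bs4 import BeautifulSoup

html = '<p class="title">first</p><p class="story">second</p><p class="story">third</p>'
soup = BeautifulSoup(html, "html.parser")

print(soup.p)                    # only the first p: <p class="title">first</p>
print(soup.p is soup.find("p"))  # True: the attribute shortcut calls find()

# All p tags, in document order
all_p = soup.find_all("p")
print([p.get_text() for p in all_p])  # ['first', 'second', 'third']
```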

5. Getting the tag name

```python
soup = BeautifulSoup(html, 'lxml')  # create the BeautifulSoup object

print(soup.title.name)  # title
```

soup.title.name gives the name of the title tag, which is simply title.
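`.name` is handy when you walk over many tags at once. This sketch collects the names of every tag in a small document, using `html.parser`. Passing True to `find_all()` matches every tag.

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup("<div><p>a</p><span>b</span></div>", "html.parser")

# find_all(True) matches every tag, in document order
names = [tag.name for tag in soup.find_all(True)]
print(names)  # ['div', 'p', 'span']
```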

6. Getting attributes

```python
# Getting started with BeautifulSoup
from bs4 import BeautifulSoup

# https://www.cnblogs.com/felixwang2/p/8711746.html

html = '''
<html><head><title>哈哈哈哈</title></head>
<body>
<p class="title" name="AA"><b>The Dormouse's story</b></p>
<p class="story">Once upon a 威威time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" id="link1">11111111111</a>,
<a href="http://example.com/lacie" class="sister" id="link2">222222222222</a> and
<a href="http://example.com/tillie" class="sister" id="link3">333333333333</a>;
and they lived at the bottom of a well.</p>
<p class="story">...</p>
'''

soup = BeautifulSoup(html, 'lxml')  # create the BeautifulSoup object

print(soup.p.attrs['name'])  # AA
print(soup.p['name'])  # AA
```
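`tag['name']` raises KeyError when the attribute is missing. This sketch shows the safer alternatives, using a cut-down p tag and `html.parser`. `get()` returns None (or a default you supply), and `has_attr()` tests for presence. The sketch also shows that class comes back as a list, because HTML allows several classes on one tag.

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup('<p class="title big" name="AA"><b>story</b></p>', "html.parser")
p = soup.p

print(p["name"])             # AA
print(p.get("id"))           # None: there is no id attribute
print(p.get("id", "no-id"))  # no-id: the default we supplied
print(p.has_attr("name"))    # True
print(p["class"])            # ['title', 'big']: class is multi-valued

try:
    p["id"]
except KeyError:
    print("KeyError: p has no id attribute")
```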

7. Getting content

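```python
soup = BeautifulSoup(html, 'lxml')  # create the BeautifulSoup object

# Text of the first p tag; this works because that p holds a single child (b),
# which itself holds a single string
print(soup.p.string)
# The Dormouse's story
```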
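`soup.p.string` works in the example above only because that p has a single child. This sketch (using `html.parser`) shows what happens otherwise. When a tag has more than one child, `.string` is None. `get_text()`, or `.text`, joins all the text beneath the tag instead.

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup("<p>Hello <b>world</b></p>", "html.parser")

print(soup.b.string)      # world
print(soup.p.string)      # None: p has two children ('Hello ' and <b>)
print(soup.p.get_text())  # Hello world
print(soup.p.text)        # Hello world (same as get_text())
```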

8. Nested selection

```python
soup = BeautifulSoup(html, 'lxml')  # create the BeautifulSoup object

# Tag shortcuts can be chained to walk down the tree
print(soup.head.title.string)  # 哈哈哈哈
```
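Chaining breaks as soon as one step finds nothing. The missing tag evaluates to None, and the next attribute access raises AttributeError. Below is a defensive sketch using `html.parser`; `title_text` is a hypothetical helper name.

```python
from bs4 import BeautifulSoup


def title_text(soup):
    """Hypothetical helper: the <title> text, or None if head or title is missing."""
    head = soup.head
    if head is None or head.title is None:
        return None
    return head.title.string


with_title = BeautifulSoup("<html><head><title>hi</title></head></html>", "html.parser")
without_title = BeautifulSoup("<html><head></head></html>", "html.parser")

print(title_text(with_title))     # hi
print(title_text(without_title))  # None

try:
    without_title.head.title.string  # head.title is None here
except AttributeError as exc:
    print("AttributeError:", exc)
```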

9. Child and descendant nodes: contents, children and descendants

Using contents

```python
html = """
<html>
<head>
<title>The Dormouse's story</title>
</head>
<body>
<p class="story">
Once upon a time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" id="link1">
<span>Elsie</span>
</a>
<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a>
and
<a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>
and they lived at the bottom of a well.
</p>
<p class="story">...</p>
"""

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

print(soup.p.contents)  # all direct children of the first p, as a list
```

Output (the exact whitespace inside the strings depends on how the source HTML is indented):

```
['\n Once upon a time there were three little sisters; and their names were\n ', <a class="sister" href="http://example.com/elsie" id="link1">
<span>Elsie</span>
</a>, '\n', <a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>, '\n and\n ', <a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>, '\n and they lived at the bottom of a well.\n ']
```

Using children

```python
html = """
<html>
<head>
<title>The Dormouse's story</title>
</head>
<body>
<p class="story">
Once upon a time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" id="link1">
<span>Elsie</span>
</a>
<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a>
and
<a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>
and they lived at the bottom of a well.
</p>
<p class="story">...</p>
"""

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

print(soup.p.children)  # an iterator object, not a list

for i, child in enumerate(soup.p.children):
    print(i, child)

# children yields the same nodes as contents, but as an iterator rather than a list,
# so you read it by looping over it (or by wrapping it in list())

# descendants yields children, grandchildren and so on; it is also a generator
for i, node in enumerate(soup.p.descendants):
    print(i, node)
```
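The difference between children and descendants is easiest to see on a tiny document. In this sketch (using `html.parser`), `children` stops at direct children. `descendants` also yields everything nested inside them, including text.

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup("<p>a<b>c</b></p>", "html.parser")

children = list(soup.p.children)        # direct children only
descendants = list(soup.p.descendants)  # children, grandchildren, ...

print(children)                     # ['a', <b>c</b>]
print(descendants)                  # ['a', <b>c</b>, 'c']
print(soup.p.contents == children)  # True: contents holds the same nodes as a list
```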

10. Parent and ancestor nodes

```python
html = """
<html>
<head>
<title>The Dormouse's story</title>
</head>
<body>
<p class="story">
Once upon a time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" id="link1">
<span>Elsie</span>
</a>
<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a>
and
<a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>
and they lived at the bottom of a well.
</p>
<p class="story">...</p>
"""

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

print(soup.a.parent)  # the parent of the first a tag: the whole p tag
```

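soup.a.parent returns the parent node of the first a tag.

soup.a.parents is a generator over all ancestors. list(enumerate(soup.a.parents)) turns it into a list of (index, node) pairs. The list holds the a tag's parent, then the parent's parent, and so on up to the top of the document. The last two entries are the html tag and the BeautifulSoup object itself, whose name is [document]. Printing either one shows the entire document.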
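A quick way to see the ancestor chain described above is to print only each ancestor's name. This sketch parses a minimal document with `html.parser`. The final [document] entry is the BeautifulSoup object itself.

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup("<html><body><p><a>x</a></p></body></html>", "html.parser")

# .parents is a generator, walked from the nearest ancestor upwards
names = [parent.name for parent in soup.a.parents]
print(names)               # ['p', 'body', 'html', '[document]']
print(soup.a.parent.name)  # p
```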

11. Sibling nodes

```python
html = """
<html>
<head>
<title>The Dormouse's story</title>
</head>
<body>
<p class="story">
Once upon a time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" id="link1">
<span>Elsie</span>
</a>
<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a>
and
<a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>
and they lived at the bottom of a well.
</p>
<p class="story">...</p>
"""

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

print(list(soup.a.next_siblings))  # all following siblings (next_siblings is a generator)
print(list(soup.a.previous_siblings))  # all preceding siblings (also a generator)
print(soup.a.next_sibling)  # the next sibling node, which may be a text string rather than a tag
print(soup.a.previous_sibling)  # the previous sibling node
```
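Whitespace between tags is itself a node, so `next_sibling` often returns a string such as '\n' rather than the next tag. In this sketch (using `html.parser`), `find_next_sibling('a')` skips over such strings and returns the next a tag.

```python
from bs4 import BeautifulSoup

html = '<p><a id="link1">Elsie</a>\n<a id="link2">Lacie</a>\n<a id="link3">Tillie</a></p>'
soup = BeautifulSoup(html, "html.parser")

first = soup.a
print(repr(first.next_sibling))                          # '\n': the newline between the tags
print(first.find_next_sibling("a")["id"])                # link2
print([a["id"] for a in first.find_next_siblings("a")])  # ['link2', 'link3']
```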

12. Standard selectors: find_all(name, attrs, recursive, text, limit, **kwargs) searches the document by tag name, attributes or text

find_all

```python
html = '''
<div class="panel">
    <div class="panel-heading">
        <h4>Hello</h4>
    </div>
    <div class="panel-body">
        <ul class="list" id="list-1">
            <li class="element">Foo</li>
            <li class="element">Bar</li>
            <li class="element">Jay</li>
        </ul>
        <ul class="list list-small" id="list-2">
            <li class="element">Foo</li>
            <li class="element">Bar</li>
        </ul>
    </div>
</div>
'''

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

print(soup.find_all('ul'))  # all ul tags, as a list
print(type(soup.find_all('ul')[0]))  # type of the first ul: <class 'bs4.element.Tag'>

# find_all can be called again on each result, e.g. to get the li tags inside each ul
for ul in soup.find_all('ul'):
    print(ul.find_all('li'))
```
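`find_all()` also accepts `recursive` and `limit`. In this sketch (using `html.parser`), `recursive=False` searches only direct children, and `limit` stops after that many matches.

```python
from bs4 import BeautifulSoup

html = '<div><ul id="list-1"><li>Foo</li><li>Bar</li><li>Jay</li></ul></div>'
soup = BeautifulSoup(html, "html.parser")

print(soup.div.find_all("li", recursive=False))      # []: li is not a direct child of div
print(len(soup.ul.find_all("li", recursive=False)))  # 3: li is a direct child of ul
print(soup.find_all("li", limit=2))                  # only the first two li tags
```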

attrs

```python
html = '''
<div class="panel">
    <div class="panel-heading">
        <h4>Hello</h4>
    </div>
    <div class="panel-body">
        <ul class="list" id="list-1" name="elements">
            <li class="element">Foo</li>
            <li class="element">Bar</li>
            <li class="element">Jay</li>
        </ul>
        <ul class="list list-small" id="list-2">
            <li class="element">Foo</li>
            <li class="element">Bar</li>
        </ul>
    </div>
</div>
'''

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

print(soup.find_all(attrs={'id': 'list-1'}))  # tags whose id is list-1
print(soup.find_all(attrs={'name': 'elements'}))  # tags whose name attribute is elements
```

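Note: attrs takes a dictionary of attribute names and values. class needs special care. It is a reserved word in Python, so it cannot be used as a keyword argument. Either use the keyword class_, as in soup.find_all(class_='element'), or put 'class' inside the dictionary, as in soup.find_all(attrs={'class': 'element'}) or soup.find_all('li', {'class': 'element'}).

Other attributes, such as id, can be passed as keyword arguments without attrs, for example soup.find_all(id='list-1'). The name attribute is an exception: it clashes with find_all's own name parameter, which is why the example above uses attrs={'name': 'elements'}.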
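Here are the options above side by side, on a trimmed copy of the sample lists (using `html.parser`). A class search matches any one of a tag's classes, so 'list' also finds the ul whose class is 'list list-small'.

```python
from bs4 import BeautifulSoup

html = '''
<ul class="list" id="list-1" name="elements"><li class="element">Foo</li></ul>
<ul class="list list-small" id="list-2"><li class="element">Bar</li></ul>
'''
soup = BeautifulSoup(html, "html.parser")

by_kwarg = soup.find_all(class_="list")              # class_ keyword argument
by_dict = soup.find_all(attrs={"class": "list"})     # 'class' inside the attrs dict
small = soup.find_all(class_="list-small")           # matches one of several classes
by_id = soup.find_all(id="list-2")                   # plain keyword argument
by_name = soup.find_all(attrs={"name": "elements"})  # name has to go through attrs

print(len(by_kwarg), len(by_dict), len(small))  # 2 2 1
print(by_id[0]["class"])                        # ['list', 'list-small']
print(by_name[0]["id"])                         # list-1
```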

text

```python
html = '''
<div class="panel">
    <div class="panel-heading">
        <h4>Hello</h4>
    </div>
    <div class="panel-body">
        <ul class="list" id="list-1">
            <li class="element">Foo111</li>
            <li class="element">Bar</li>
            <li class="element">Jay</li>
        </ul>
        <ul class="list list-small" id="list-2">
            <li class="element">Foo111</li>
            <li class="element">Bar</li>
        </ul>
    </div>
</div>
'''

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

print(soup.find_all(text='Foo111'))  # every string equal to 'Foo111', returned as a list
# ['Foo111', 'Foo111']
```
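Matching on text is exact: text='Foo' would not match 'Foo111'. This sketch (using `html.parser`) uses a regular expression instead. It also combines a tag name with a text filter to get the tags rather than the strings. It uses `string=`, the name this argument has had since Beautiful Soup 4.4.0; text is the older name.

```python
import re

from bs4 import BeautifulSoup

html = '<ul><li class="element">Foo111</li><li class="element">Bar</li><li class="element">Foo222</li></ul>'
soup = BeautifulSoup(html, "html.parser")

print(soup.find_all(string="Foo"))               # []: exact match only
print(soup.find_all(string=re.compile("^Foo")))  # ['Foo111', 'Foo222']

# With a tag name, find_all returns the matching tags instead of the strings
print(soup.find_all("li", string="Bar"))         # [<li class="element">Bar</li>]
```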

13. find

find(name,attrs,recursive,text,**kwargs)

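find() takes the same arguments as find_all() but returns only the first match (None if nothing matches) instead of a list.

Other similar methods:

find_parents() returns all matching ancestors; find_parent() returns the nearest matching ancestor (the direct parent when called without arguments).

find_next_siblings() returns all following siblings that match; find_next_sibling() returns the first one.

find_previous_siblings() returns all preceding siblings that match; find_previous_sibling() returns the nearest one.

find_all_next() returns all matching nodes that come after this node in the document; find_next() returns the first one.

find_all_previous() returns all matching nodes that come before this node in the document; find_previous() returns the nearest one.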
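The difference matters when nothing matches: `find()` returns None while `find_all()` returns an empty list. This sketch (using `html.parser`) also calls `find_parent()` and `find_next_sibling()` on a tag found with `find()`.

```python
from bs4 import BeautifulSoup

html = '<ul id="list-1"><li>Foo</li><li>Bar</li></ul>'
soup = BeautifulSoup(html, "html.parser")

first_li = soup.find("li")
print(first_li)                          # <li>Foo</li>
print(soup.find("table"))                # None
print(soup.find_all("table"))            # []
print(first_li.find_parent("ul")["id"])  # list-1
print(first_li.find_next_sibling("li"))  # <li>Bar</li>
```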

14. CSS selectors

```python
html = '''
<div class="panel">
    <div class="panel-heading">
        <h4>Hello</h4>
    </div>
    <div class="panel-body">
        <ul class="list" id="list-1">
            <li class="element">Foo</li>
            <li class="element">Bar</li>
            <li class="element">Jay</li>
        </ul>
        <ul class="list list-small" id="list-2">
            <li class="element">Foo</li>
            <li class="element">Bar</li>
        </ul>
    </div>
</div>
'''

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

print(soup.select('.panel .panel-heading'))  # class panel-heading inside class panel
print(soup.select('ul li'))  # all li tags inside ul tags
print(soup.select('#list-2 .element'))  # class element inside the tag with id list-2
print(type(soup.select('ul')[0]))  # <class 'bs4.element.Tag'>
```

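select() takes a CSS selector directly and returns a list of the matching tags.

If you know front-end development you probably know CSS already; the syntax is the same:

. selects by class and # selects by id

tag1,tag2 finds all tag1 tags and all tag2 tags

tag1 tag2 finds all tag2 tags inside tag1

[attr] finds all tags that have the attribute attr

[attr=value] finds tags whose attribute equals value; for example, [target=_blank] finds all tags with target=_blank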
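Here are a few of these selectors in action, using `html.parser`. The trimmed sample adds a target="_blank" link, made up for illustration. `select()` always returns a list; `select_one()` returns the first match or None.

```python
from bs4 import BeautifulSoup

html = '''
<div class="panel">
<div class="panel-heading"><h4>Hello</h4></div>
<ul class="list" id="list-1">
<li class="element"><a href="http://example.com" target="_blank">Foo</a></li>
<li class="element">Bar</li>
</ul>
</div>
'''
soup = BeautifulSoup(html, "html.parser")

print(len(soup.select("h4, li")))                    # 3: all h4 tags and all li tags
print(soup.select_one("#list-1 > li").get_text())    # Foo: first li directly under #list-1
print(len(soup.select("[target]")))                  # 1: tags that have a target attribute
print(soup.select('a[target="_blank"]')[0]["href"])  # http://example.com
print(soup.select_one("table"))                      # None
```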

15. Getting text (get_text() returns the text content)

```python
html = '''
<div class="panel">
    <div class="panel-heading">
        <h4>Hello</h4>
    </div>
    <div class="panel-body">
        <ul class="list" id="list-1">
            <li class="element">Foo</li>
            <li class="element">Bar</li>
            <li class="element">Jay</li>
        </ul>
        <ul class="list list-small" id="list-2">
            <li class="element">Foo</li>
            <li class="element">Bar</li>
        </ul>
    </div>
</div>
'''

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

for li in soup.select('li'):
    print(li.get_text())

# Foo
# Bar
# Jay
# Foo
# Bar
```
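`get_text()` also takes a separator and a strip flag. These help when a tag contains several strings with whitespace between them. In this sketch (using `html.parser`), `stripped_strings` yields the same pieces one at a time.

```python
from bs4 import BeautifulSoup

html = '<ul class="list" id="list-1">\n<li>Foo</li>\n<li>Bar</li>\n<li>Jay</li>\n</ul>'
soup = BeautifulSoup(html, "html.parser")

print(repr(soup.ul.get_text()))                     # the raw text, newlines included
print(soup.ul.get_text(separator="|", strip=True))  # Foo|Bar|Jay
print(list(soup.ul.stripped_strings))               # ['Foo', 'Bar', 'Jay']
```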

16. Getting attributes (use tag[attribute name] or tag.attrs[attribute name])

```python
html = '''
<div class="panel">
    <div class="panel-heading">
        <h4>Hello</h4>
    </div>
    <div class="panel-body">
        <ul class="list" id="list-1">
            <li class="element">Foo</li>
            <li class="element">Bar</li>
            <li class="element">Jay</li>
        </ul>
        <ul class="list list-small" id="list-2">
            <li class="element">Foo</li>
            <li class="element">Bar</li>
        </ul>
    </div>
</div>
'''

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

for ul in soup.select('ul'):
    print(ul['id'])  # list-1, then list-2
    print(ul.attrs['id'])  # the same value, via the attrs dict
```

浙公网安备 33010602011771号
