Automatically classifying scraped JD.com (京东) products by title: text classification with logistic regression

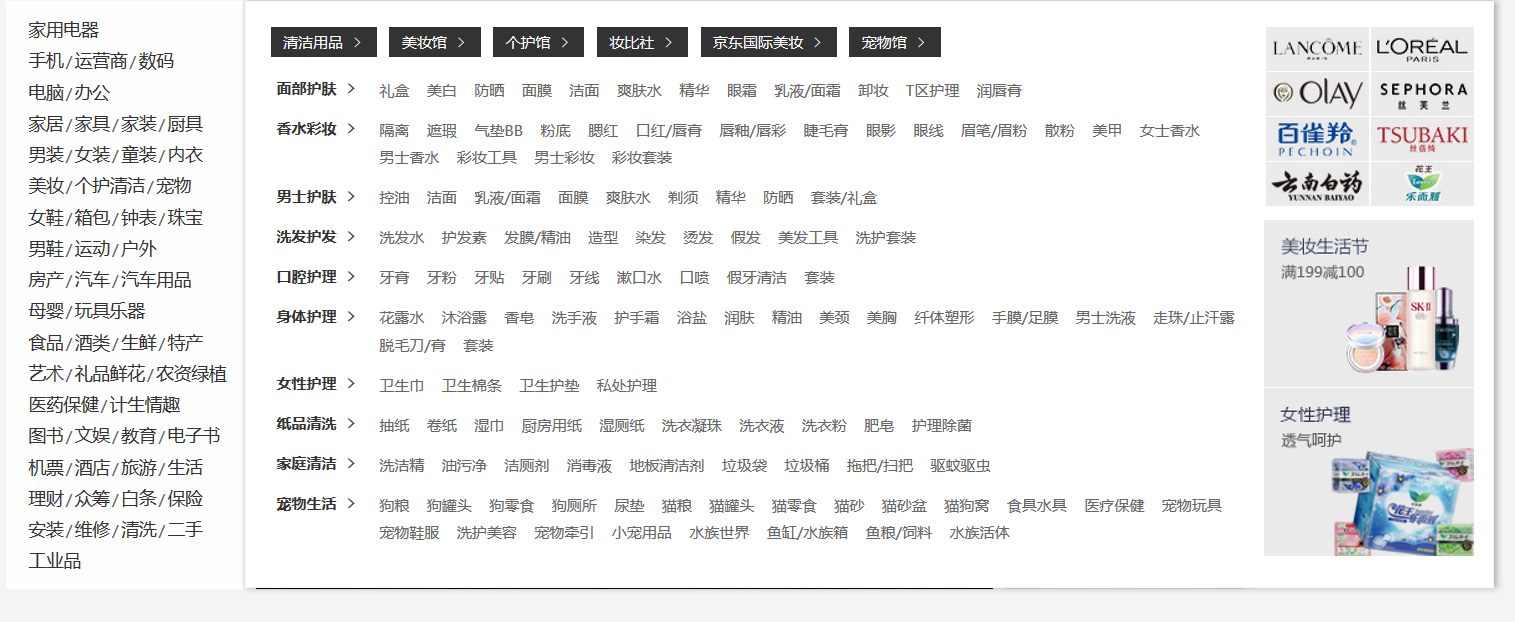

1. First, scrape products from JD.com to build the training set (JD's own product categories serve as the class labels)

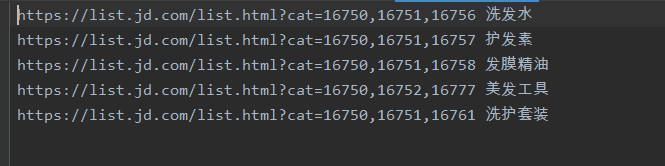

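Copy the link of each category and write it into the JD_type file, one category per line, with the link and the category name separated by a tab (the file is shown in the figure below).

The code for scraping the training set is as follows: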

    import csv

    import requests
    from lxml import etree

    from User_agent import User_Agent  # local module providing a list of user-agent strings

    # Cookie copied from a browser session; replace it with your own.
    COOKIE = 'qrsc=3; pinId=RAGa4xMoVrs; xtest=1210.cf6b6759; ipLocation=%u5E7F%u4E1C; _jrda=5; TrackID=1aUdbc9HHS2MdEzabuYEyED1iDJaLWwBAfGBfyIHJZCLWKfWaB_KHKIMX9Vj9_2wUakxuSLAO9AFtB2U0SsAD-mXIh5rIfuDiSHSNhZcsJvg; shshshfpa=17943c91-d534-104f-a035-6e1719740bb6-1525571955; shshshfpb=2f200f7c5265e4af999b95b20d90e6618559f7251020a80ea1aee61500; cn=0; 3AB9D23F7A4B3C9B=QFOFIDQSIC7TZDQ7U4RPNYNFQN7S26SFCQQGTC3YU5UZQJZUBNPEXMX7O3R7SIRBTTJ72AXC4S3IJ46ESBLTNHD37U; ipLoc-djd=19-1607-3638-3638.608841570; __jdu=930036140; user-key=31a7628c-a9b2-44b0-8147-f10a9e597d6f; areaId=19; __jdv=122270672|direct|-|none|-|1529893590075; PCSYCityID=25; mt_xid=V2_52007VwsQU1xaVVoaSClUA2YLEAdbWk5YSk9MQAA0BBZOVQ0ADwNLGlUAZwQXVQpaAlkvShhcDHsCFU5eXENaGkIZWg5nAyJQbVhiWR9BGlUNZwoWYl1dVF0%3D; __jdc=122270672; shshshfp=72ec41b59960ea9a26956307465948f6; rkv=V0700; __jda=122270672.930036140.-.1529979524.1529984840.85; __jdb=122270672.1.930036140|85.1529984840; shshshsID=f797fbad20f4e576e9c30d1c381ecbb1_1_1529984840145'

    OUTPUT_DIR = '洗发护发'  # output folder; it must already exist


    class Foo:
        cou = 0  # number of pages fetched with the current user-agent
        i = 0    # index of the next user-agent to use
        agents = User_Agent
        # user-agent currently in use
        using_agent = 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.139 Safari/537.36'


    def rotate_agent():
        """Switch to the next user-agent once the current one has fetched 14 pages."""
        if Foo.cou >= 14:
            # Once every agent has been used, start again from the first one
            # (the original hard-coded 35 as the size of the list).
            if Foo.i >= len(Foo.agents):
                Foo.i = 0
            Foo.using_agent = Foo.agents[Foo.i]
            Foo.cou = 0
            Foo.i += 1


    def fetch(url):
        """Download a listing page and return the parsed HTML tree."""
        head = {
            'authority': 'search.jd.com',
            'method': 'GET',
            'scheme': 'https',
            # The original hard-coded the user-agent for pages 2 and later,
            # so the rotation had no effect there; always use the rotating one.
            'user-agent': Foo.using_agent,
            'x-requested-with': 'XMLHttpRequest',
            'Cookie': COOKIE,
        }
        r = requests.get(url, headers=head)
        r.encoding = 'utf-8'
        return etree.HTML(r.text)


    def save_titles(html1, name):
        """Append every product title on the page to the category's CSV file."""
        datas = html1.xpath('//li[contains(@class,"gl-item")]')
        with open(OUTPUT_DIR + '/JD_' + name + '.csv', 'a', newline='', encoding='utf-8-sig') as f:
            write = csv.writer(f)
            for data in datas:
                p_name = data.xpath('div/div[@class="p-name"]/a/em/text()')
                if not p_name:  # skip items without a title
                    continue
                n_name = ''.join(p_name[0].split())  # remove all whitespace
                if n_name:
                    print(n_name)
                    write.writerow([n_name, name])


    def crow_first(url, name):
        """Scrape the first page; return the total page count and the URL of the second page."""
        print('-------' + name + '-------crow_first---------------------')
        rotate_agent()
        print(url)  # e.g. https://list.jd.com/list.html?cat=737,1276,739
        html1 = fetch(url)
        save_titles(html1, name)
        # total number of pages
        count = html1.xpath('//div[contains(@class,"f-pager")]//span[contains(@class,"fp-text")]//i//text()')
        # relative URL of the next page
        next_page = html1.xpath('//a[contains(@class,"pn-next")]//@href')
        Foo.cou += 1
        return int(count[0]), "https://list.jd.com" + next_page[0]


    def crow_then(url, name):
        """Scrape the second and later pages."""
        # e.g. https://list.jd.com/list.html?cat=737,1276,739&page=3&sort=sort_rank_asc&trans=1&JL=6_0_0#J_main
        rotate_agent()
        save_titles(fetch(url), name)
        Foo.cou += 1


    def getMessage(urll, namee):
        pages, next_url = crow_first(urll, namee)
        parts = next_url.split("&")
        # pages + 1 so that the last page is included (the original stopped one page early)
        for i in range(2, pages + 1):
            try:
                url = parts[0] + '&page=%d' % i + '&' + parts[2] + '&' + parts[3] + '&' + parts[4]
                print(namee + ' url: ' + url)
                crow_then(url, namee)
                print(' Finish')
            except Exception as e:
                print(e)


    if __name__ == '__main__':
        with open("JD_type.txt", 'r', encoding='utf-8') as f:
            lines = f.read().split("\n")  # one category per line
        for ss in lines:
            if ss != '':  # skip blank lines
                zifu = ss.split("\t")  # link <TAB> category name
                getMessage(zifu[0], zifu[1])
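The scraper builds the URL of each later page by splitting the next-page link on `&` and reassembling the pieces by position, which breaks if JD ever changes the order or number of query parameters. As a hedged alternative, here is a minimal sketch that replaces only the `page` parameter using the standard library. The helper name `page_url` is hypothetical, and the sample URL is the one quoted in the scraper's comments.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def page_url(next_url, page):
    """Return next_url with its `page` query parameter set to `page`."""
    parts = urlsplit(next_url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    # Replace the existing page parameter, keeping every other one untouched.
    new_query = [(k, str(page)) if k == 'page' else (k, v) for k, v in query]
    if not any(k == 'page' for k, _ in new_query):
        new_query.append(('page', str(page)))
    # safe=',' keeps the commas in values such as cat=737,1276,739 unescaped.
    return urlunsplit(parts._replace(query=urlencode(new_query, safe=',')))


sample = ('https://list.jd.com/list.html?cat=737,1276,739&page=2'
          '&sort=sort_rank_asc&trans=1&JL=6_0_0#J_main')
print(page_url(sample, 3))
```

With a helper like this, the loop in `getMessage` could call `crow_then(page_url(next_url, i), namee)` instead of indexing into the split list.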

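The scraper produces one CSV file per category; you need to merge all of these CSV files into a single training file yourself (not covered in detail here).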
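The merge step is not shown in the original, so the following is only a sketch of one way to do it. It assumes that each per-category file is named `JD_<category>.csv` and holds rows of `title, category` (as written by the scraper), and that classes should be numbered from 1, since the classifier later subtracts 1 from the `class` column. The function name `merge_category_csvs` and the numbering scheme are assumptions, not the author's method.

```python
import csv
from pathlib import Path


def merge_category_csvs(src_dir, out_path):
    """Merge every JD_*.csv in src_dir into out_path.

    Each output row is (title, class_id). Class ids are assigned from 1 in
    the order categories are first seen. Returns the {category: class_id} map.
    out_path should live outside src_dir so it is not picked up by the glob.
    """
    label_ids = {}
    with open(out_path, 'w', newline='', encoding='utf-8-sig') as out:
        writer = csv.writer(out)
        for path in sorted(Path(src_dir).glob('JD_*.csv')):
            with open(path, newline='', encoding='utf-8-sig') as f:
                for row in csv.reader(f):
                    if len(row) < 2 or not row[0]:
                        continue  # skip malformed or empty rows
                    title, category = row[0], row[1]
                    class_id = label_ids.setdefault(category, len(label_ids) + 1)
                    writer.writerow([title, class_id])
    return label_ids
```

For example, `merge_category_csvs('洗发护发', 'merged.csv')` would merge the files written by the scraper (the output name `merged.csv` is a placeholder). A header row and the column order still have to match whatever the later steps read.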

2. Preprocess the data (word segmentation and stop-word removal). Both the training set and the test set must be preprocessed.

    import csv
    import re

    import jieba

    # Read every row of the CSV file into a list
    title = []
    with open('good_test.csv', 'r', encoding='utf-8') as file_handler:
        # csv.reader returns an iterator over the samples
        file_reader = csv.reader(file_handler)
        for sample in file_reader:
            title.append(sample)

    # Segment each title with jieba; column 0 is kept unchanged, column 1 is the title
    title_s = []
    for line in title:
        line[1] = re.sub(r'\d+', '', line[1])  # remove digits
        title_cut = jieba.lcut(line[1])
        title_s.append([line[0], title_cut])

    # Load the stop-word list (a set makes the membership test fast)
    with open('stop_words.txt', 'r', encoding='utf-8') as f:
        stopwords = {line.strip() for line in f}

    # Remove stop words and save the preprocessed test set to good_test1.csv
    # (mode 'w' overwrites any existing file)
    title_clean = []
    with open('good_test1.csv', 'w', newline='', encoding='utf-8-sig') as file_csv:
        writer = csv.writer(file_csv)
        for line in title_s:
            print(line[0])
            line_clean = [word for word in line[1] if word not in stopwords]
            title_clean.append(line_clean)
            # Write the remaining words separated by spaces
            writer.writerow([line[0], ' '.join(line_clean)])
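Because the training set and the test set must go through exactly the same steps, it can help to wrap them in one function and call it for both files. The sketch below is an illustration under assumptions: `preprocess_title` is a hypothetical helper, and the tokenizer is passed in as the `cut` argument so that the demo runs with a plain whitespace split. In the real pipeline you would pass `jieba.lcut`. Unlike the script above, it also drops whitespace-only tokens.

```python
import re


def preprocess_title(title, stopwords, cut):
    """Remove digits, segment with `cut`, drop stop words, join with spaces."""
    title = re.sub(r'\d+', '', title)
    tokens = [w for w in cut(title) if w.strip() and w not in stopwords]
    return ' '.join(tokens)


# Demo with a made-up title and a made-up stop-word list.
demo_stopwords = {'的', '新品'}
demo = preprocess_title('新品 洗发水 500ml 的 套装', demo_stopwords, str.split)
print(demo)
```

Calling `preprocess_title(row_title, stopwords, jieba.lcut)` on every row of both CSV files keeps the two sets consistent.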

3. Automatic classification with logistic regression (the theory behind logistic regression is not covered here)

    import pandas as pd  # data-analysis library with efficient tools for working with large datasets
    from sklearn.feature_extraction.text import CountVectorizer
    from sklearn.linear_model import LogisticRegression

    df_train = pd.read_csv('good_train4.csv')
    df_test = pd.read_csv('good_test1.csv')
    # drop() removes the given columns; with inplace=True (default False) the
    # DataFrame is modified directly and does not need to be reassigned.
    # df_train.drop(columns=[' article', 'id'], inplace=True)
    # df_test.drop(columns=['article'], inplace=True)

    vectorizer = CountVectorizer(ngram_range=(1, 1), min_df=0.0, max_df=1.0, max_features=100000)
    # The next two lines are equivalent to
    # x_train = vectorizer.fit_transform(df_train['word_seg']),
    # which fits the vocabulary and returns the document-term matrix.
    vectorizer.fit(df_train['word_seg'])
    x_train = vectorizer.transform(df_train['word_seg'])
    x_test = vectorizer.transform(df_test['word_seg'])
    # Labels are shifted down by 1 here and back up by 1 after prediction
    y_train = df_train['class'] - 1

    # Note: newer scikit-learn releases deprecate the multi_class argument.
    lg = LogisticRegression(penalty='l2', dual=False, tol=0.0001, C=1.0,
                            fit_intercept=True, intercept_scaling=1,
                            class_weight=None, random_state=None,
                            solver='liblinear', max_iter=100, multi_class='ovr',
                            verbose=0, warm_start=False, n_jobs=1)
    lg.fit(x_train, y_train)

    # Predict the classes of the test samples with the trained classifier
    y_test = lg.predict(x_test)
    print(y_test)
    # test_accuracy = lg.score(x_test, y_test)
    # print(test_accuracy)

    # Save the predictions locally
    df_test['class'] = y_test.tolist()  # tolist() converts the array to a list
    df_test['class'] = df_test['class'] + 1
    df_result = df_test.loc[:, ['id', 'class']]
    df_result.to_csv('result2.csv', index=False)
    print("finish..................")
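One detail of the vectorizing step is worth checking. By default, `CountVectorizer` only keeps tokens of two or more characters, so single-character Chinese words produced by the segmenter are silently dropped from the vocabulary. The sketch below uses two made-up segmented titles to show the difference when a custom `token_pattern` is supplied. Whether keeping single-character tokens improves accuracy on this dataset is not something the original measured.

```python
from sklearn.feature_extraction.text import CountVectorizer

# Two made-up, already-segmented titles (words separated by spaces).
docs = ["洗发水 去 屑 控油", "护发素 柔顺 修护"]

# Default pattern: tokens must be at least two characters long.
default_vec = CountVectorizer().fit(docs)
# Custom pattern: keep tokens of one or more characters.
keep_single = CountVectorizer(token_pattern=r"(?u)\b\w+\b").fit(docs)

default_vocab = set(default_vec.vocabulary_)
full_vocab = set(keep_single.vocabulary_)
print(sorted(full_vocab - default_vocab))  # tokens dropped by the default pattern
```

If single-character words carry signal for your categories, the same `token_pattern` argument can be added to the `CountVectorizer` call in the script above.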

That completes the pipeline; the accuracy is 81.5%.
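The original does not show how the accuracy figure was computed. One plausible way, sketched here under assumptions, is to compare the predictions file against a file of true labels for the same ids. The true-label file and the function names are hypothetical. Both files are assumed to have `id` and `class` columns, like `result2.csv`.

```python
import csv


def read_labels(path):
    """Read a CSV with `id` and `class` columns into an {id: class} dict."""
    with open(path, newline='', encoding='utf-8-sig') as f:
        return {row['id']: int(row['class']) for row in csv.DictReader(f)}


def accuracy(pred_path, truth_path):
    """Fraction of ids present in both files whose predicted class is correct."""
    pred = read_labels(pred_path)
    truth = read_labels(truth_path)
    shared = [key for key in truth if key in pred]
    if not shared:
        raise ValueError('the two files have no ids in common')
    correct = sum(pred[key] == truth[key] for key in shared)
    return correct / len(shared)
```

For example, `accuracy('result2.csv', 'truth.csv')` would return a value between 0 and 1, where `truth.csv` stands for a hand-labelled file you would need to supply.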