Deploying kubernetes-v1.26.0 with the containerd runtime

1. Preparation

| OS | CPU (cores) | RAM (GB) | IP | Network mode | Hostname |
|---|---|---|---|---|---|
| centos7 | 2 | 2 | 192.168.84.128 | NAT | master |
| centos7 | 2 | 2 | 192.168.84.129 | NAT | node |

Environment setup (all nodes)

- Set the hostname

# On the master node

hostnamectl set-hostname master

bash

# On the worker node

hostnamectl set-hostname node

bash

- Add hostname mappings to /etc/hosts

cat >> /etc/hosts << EOF

192.168.84.128 master

192.168.84.129 node

EOF
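The `cat >>` above appends both lines every time it runs, so repeating the setup leaves duplicate entries. A minimal sketch of an idempotent alternative, assuming a POSIX shell; `add_host_entry` is a hypothetical helper name, not part of any tool:

```shell
#!/bin/sh
# Hypothetical helper: append "IP HOSTNAME" to a hosts file only if that
# hostname is not already mapped on a non-comment line.
add_host_entry() {
  ip="$1"; name="$2"; file="${3:-/etc/hosts}"
  # awk exits 0 if the hostname appears as a field (2nd onward) of an active line.
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
    echo "skip: $name already present in $file"
  else
    printf '%s %s\n' "$ip" "$name" >> "$file"
    echo "added: $ip $name"
  fi
}
```

For this guide's machines it would be called (as root) as `add_host_entry 192.168.84.128 master` and `add_host_entry 192.168.84.129 node`.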

- Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

- Disable SELinux

setenforce 0

sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

- Disable swap

swapoff -a

sed -i 's/.*swap.*/#&/' /etc/fstab
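The `sed` expression above comments out every line that merely contains the word "swap", including lines that are already commented (which then gain a second `#`). A slightly stricter sketch that only touches active entries whose filesystem type (third field) is `swap`; `comment_swap_entries` is a hypothetical helper and keeps a `.bak` copy of the file:

```shell
#!/bin/sh
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Lines already starting with "#" are left alone, so running it twice is safe.
comment_swap_entries() {
  file="${1:-/etc/fstab}"
  cp "$file" "$file.bak"   # backup before rewriting in place
  awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$file.bak" > "$file"
}
```

Run `comment_swap_entries /etc/fstab` after `swapoff -a`; `free -h` should then report 0 swap.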

- Enable IPv4 forwarding and let iptables see bridged traffic

# Load the overlay and br_netfilter kernel modules now and on every boot

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

# Verify that the br_netfilter module is loaded

lsmod | grep br_netfilter

# Set the required sysctl parameters; they persist across reboots

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

# Apply the sysctl parameters without rebooting

sudo sysctl --system
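To confirm that the modules and sysctl settings actually took effect, the live values can be read back from `/proc/sys`. A minimal sketch, assuming a POSIX shell; the function name is hypothetical, and the optional argument exists only so it can be tried against a fake directory tree. The bridge entries appear only once `br_netfilter` is loaded, so `<missing>` usually points at a failed `modprobe`:

```shell
#!/bin/sh
# Hypothetical helper: check that the three Kubernetes sysctls are set to 1.
# Returns non-zero if any value is missing or not 1.
check_k8s_sysctls() {
  root="${1:-/proc/sys}"
  rc=0
  for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward; do
    # net.ipv4.ip_forward -> <root>/net/ipv4/ip_forward
    path="$root/$(printf '%s' "$key" | tr . /)"
    val=$(cat "$path" 2>/dev/null)
    if [ "$val" = "1" ]; then
      echo "OK   $key = 1"
    else
      echo "FAIL $key = ${val:-<missing>}"
      rc=1
    fi
  done
  return "$rc"
}
```

Called with no argument, `check_k8s_sysctls` checks the running kernel.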

- Configure time synchronization

yum install ntpdate -y

ntpdate ntp1.aliyun.com

Install containerd (all nodes)

-

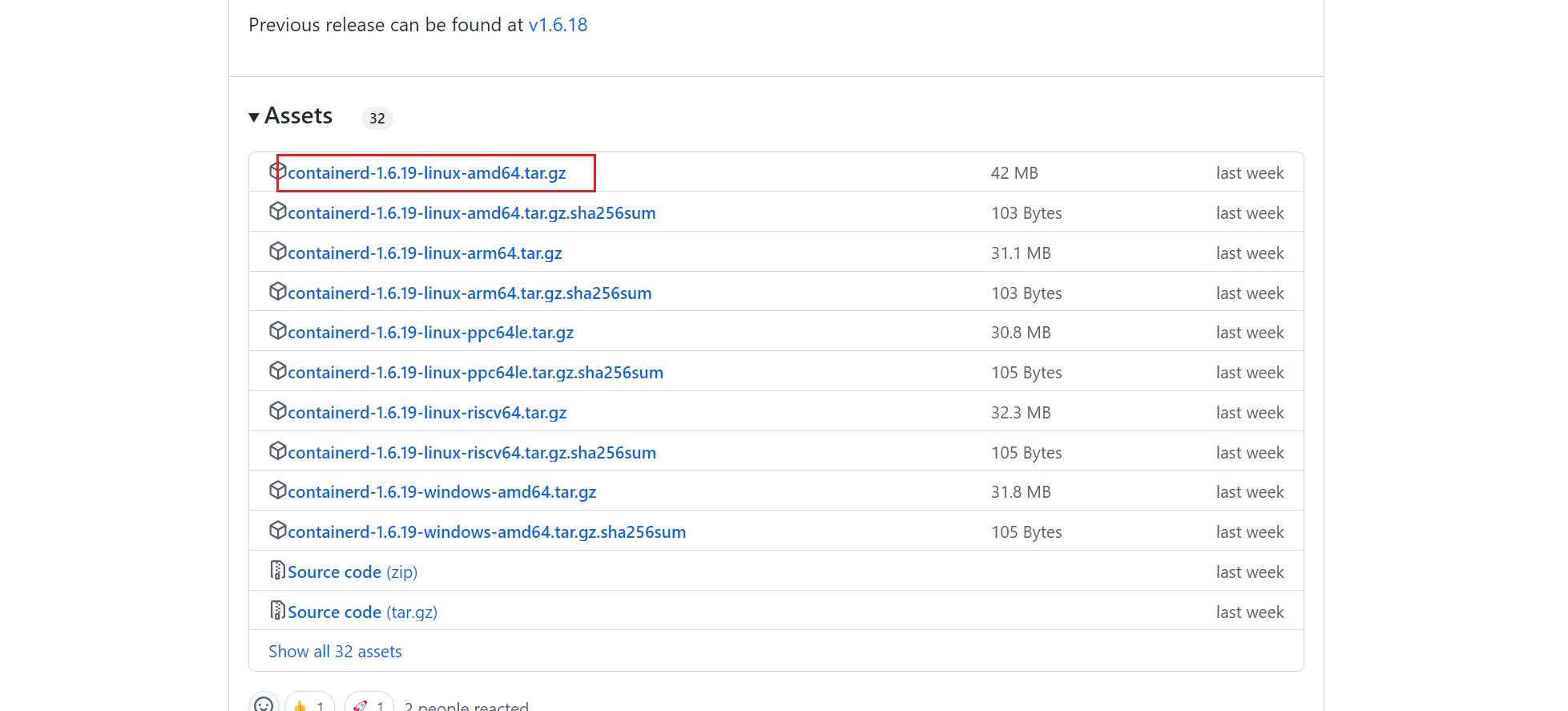

下载containerd

我这里下载1.16.9

-

上传到所有节点

-

安装

tar Cvzxf /usr/local containerd-1.6.19-linux-amd64.tar.gz

# Start containerd via systemd: create the unit file below

vi /etc/systemd/system/containerd.service

# Copyright The containerd Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

[Unit]

Description=containerd container runtime

Documentation=https://containerd.io

After=network.target local-fs.target

[Service]

#uncomment to enable the experimental sbservice (sandboxed) version of containerd/cri integration

#Environment="ENABLE_CRI_SANDBOXES=sandboxed"

ExecStartPre=-/sbin/modprobe overlay

ExecStart=/usr/local/bin/containerd

Type=notify

Delegate=yes

KillMode=process

Restart=always

RestartSec=5

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNPROC=infinity

LimitCORE=infinity

LimitNOFILE=infinity

# Comment TasksMax if your systemd version does not supports it.

# Only systemd 226 and above support this version.

TasksMax=infinity

OOMScoreAdjust=-999

[Install]

WantedBy=multi-user.target

# Reload systemd, then start containerd now and at boot

systemctl daemon-reload

systemctl enable --now containerd

# Verify

ctr version

# Generate the default config file

mkdir -p /etc/containerd

containerd config default > /etc/containerd/config.toml

systemctl restart containerd

Install runc (all nodes)

- Download runc

- Install

install -m 755 runc.amd64 /usr/local/sbin/runc

# Verify

runc -v

Install the CNI plugins

- Download the plugins and upload them to the servers

Download link

- Install

mkdir -p /opt/cni/bin

tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.2.0.tgz

Configure a registry mirror (all nodes)

# Reference: https://github.com/containerd/containerd/blob/main/docs/cri/config.md#registry-configuration

# Set config_path = "/etc/containerd/certs.d" so containerd reads per-registry hosts.toml files

sed -i 's/config_path\ =.*/config_path = \"\/etc\/containerd\/certs.d\"/g' /etc/containerd/config.toml

mkdir /etc/containerd/certs.d/docker.io -p

cat > /etc/containerd/certs.d/docker.io/hosts.toml << EOF

server = "https://docker.io"

[host."https://vh3bm52y.mirror.aliyuncs.com"]

capabilities = ["pull", "resolve"]

EOF

systemctl daemon-reload && systemctl restart containerd
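The hosts.toml file follows a fixed layout under `config_path`: one directory per registry host, each containing a `hosts.toml`. A sketch of a helper that writes that file for any registry, assuming the `config_path` set above; the function name is hypothetical, and the mirror URL in the usage note is a placeholder:

```shell
#!/bin/sh
# Hypothetical helper: write <base>/<registry>/hosts.toml with one mirror.
# Usage: write_registry_mirror <certs.d dir> <registry> <upstream server> <mirror URL>
write_registry_mirror() {
  base="$1"; registry="$2"; server="$3"; mirror="$4"
  mkdir -p "$base/$registry"
  cat > "$base/$registry/hosts.toml" <<EOF
server = "$server"

[host."$mirror"]
  capabilities = ["pull", "resolve"]
EOF
}
```

For Docker Hub this would be `write_registry_mirror /etc/containerd/certs.d docker.io https://docker.io https://<your-id>.mirror.aliyuncs.com`, where `<your-id>` stands for your own accelerator prefix, followed by `systemctl restart containerd`.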

cgroup driver (all nodes)

# Change SystemdCgroup = false to SystemdCgroup = true

sed -i 's/SystemdCgroup\ =\ false/SystemdCgroup\ =\ true/g' /etc/containerd/config.toml

# Change sandbox_image = "k8s.gcr.io/pause:3.6" to sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9", then print the line to check it

sed -i 's/sandbox_image\ =.*/sandbox_image\ =\ "registry.aliyuncs.com\/google_containers\/pause:3.9"/g' /etc/containerd/config.toml && grep sandbox_image /etc/containerd/config.toml

systemctl daemon-reload

systemctl restart containerd

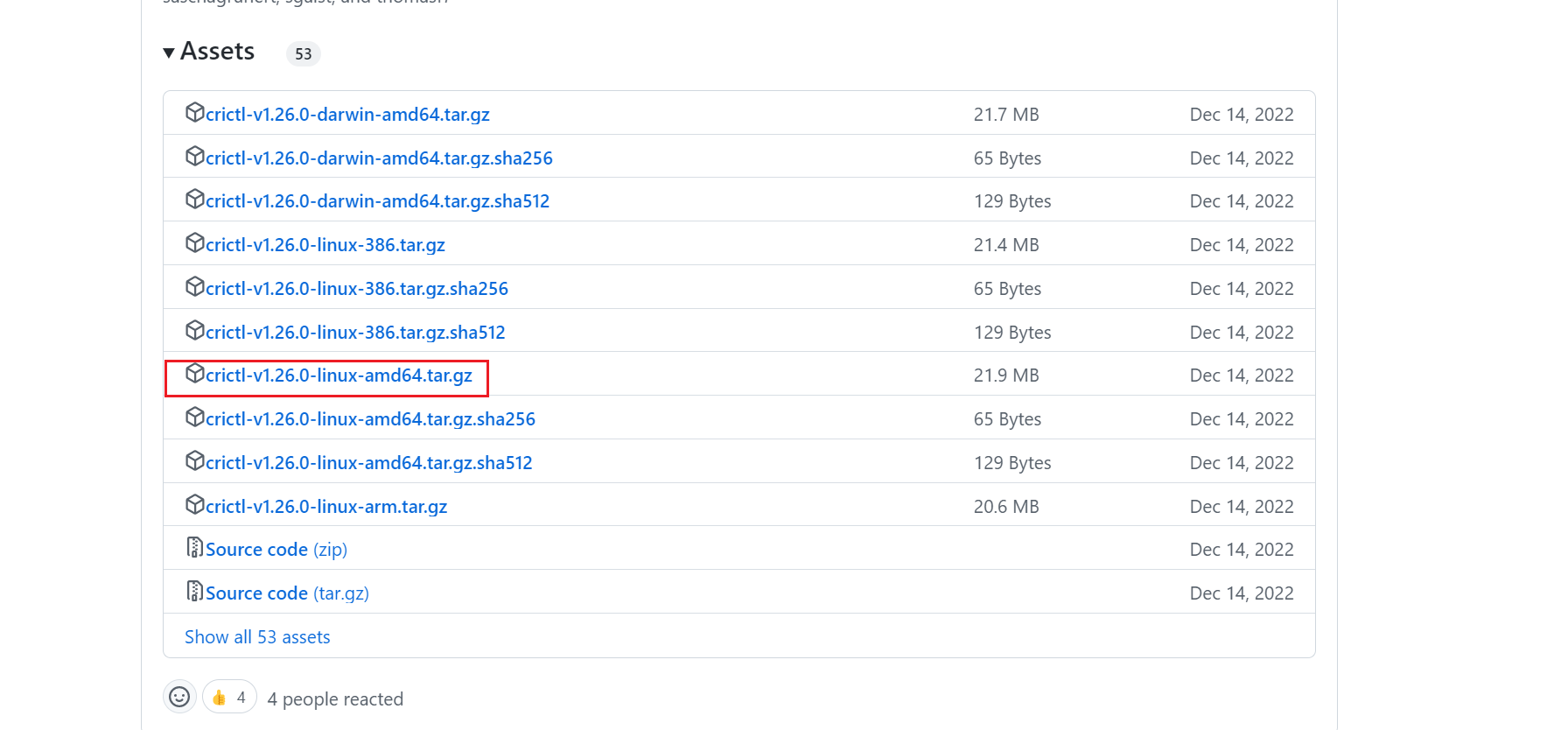
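Since `sed -i` edits silently, a typo in a pattern leaves the file unchanged without any error. A sketch that applies both edits from this step and then prints the affected lines for a visual check; `patch_containerd_config` is a hypothetical name and assumes GNU `sed`:

```shell
#!/bin/sh
# Hypothetical helper: switch containerd to the systemd cgroup driver and set
# the pause image, then show the resulting lines (grep fails if none match).
patch_containerd_config() {
  cfg="${1:-/etc/containerd/config.toml}"
  image="${2:-registry.aliyuncs.com/google_containers/pause:3.9}"
  sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' "$cfg"
  # "#" as the sed delimiter because the image name contains "/"
  sed -i "s#sandbox_image = .*#sandbox_image = \"$image\"#" "$cfg"
  grep -nE 'SystemdCgroup|sandbox_image' "$cfg"
}
```

After running it, restart containerd as above so the new settings are loaded.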

Install crictl (all nodes)

- Download

Download link

I chose version v1.26.0 here

- Upload to the servers

- Install

tar -vzxf crictl-v1.26.0-linux-amd64.tar.gz

mv crictl /usr/local/bin/

cat > /etc/crictl.yaml << EOF
runtime-endpoint: unix:///var/run/containerd/containerd.sock
image-endpoint: unix:///var/run/containerd/containerd.sock
timeout: 10
debug: true
EOF

systemctl restart containerd

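Install kubelet, kubeadm and kubectl (all nodes). Without a version, yum installs the newest packages in the repo; to get an exact release, pin it, e.g. kubeadm-1.26.0.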

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum install --nogpgcheck kubelet kubeadm kubectl -y

systemctl enable kubelet

Configure IPVS (all nodes)

# Reference: https://cloud.tencent.com/developer/article/1717552#:~:text=%E5%9C%A8%E6%89%80%E6%9C%89%E8%8A%82%E7%82%B9%E4%B8%8A%E5%AE%89%E8%A3%85ipset%E8%BD%AF%E4%BB%B6%E5%8C%85%20yum%20install%20ipset%20-y,%E4%B8%BA%E4%BA%86%E6%96%B9%E4%BE%BF%E6%9F%A5%E7%9C%8Bipvs%E8%A7%84%E5%88%99%E6%88%91%E4%BB%AC%E8%A6%81%E5%AE%89%E8%A3%85ipvsadm%20%28%E5%8F%AF%E9%80%89%29%20yum%20install%20ipvsadm%20-y

# Install ipset and ipvsadm

yum install ipset ipvsadm -y

# IPVS is part of the mainline kernel, so enabling IPVS mode for kube-proxy only requires loading the following kernel modules

cat > /etc/sysconfig/modules/ipvs.modules << EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

# Run the module-loading script

/bin/bash /etc/sysconfig/modules/ipvs.modules

# Check that the modules were loaded

lsmod | grep -e ip_vs -e nf_conntrack_ipv4

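# Configure kubelet. Note: kubeadm's kubelet drop-in only uses KUBELET_EXTRA_ARGS from this file, so KUBE_PROXY_MODE below does not switch kube-proxy to IPVS; kube-proxy's mode comes from the KubeProxyConfiguration that kubeadm deploys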

cat >> /etc/sysconfig/kubelet << EOF

# KUBELET_CGROUP_ARGS="--cgroup-driver=systemd"

KUBE_PROXY_MODE="ipvs"

EOF
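`nf_conntrack_ipv4` only exists on older kernels: upstream Linux 4.19 folded it into `nf_conntrack`, so the `modprobe -- nf_conntrack_ipv4` line in the module script fails after a kernel upgrade. A sketch, assuming `modinfo` is available, that writes the same module script but picks whichever conntrack module the running kernel provides; both function names are hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: print the conntrack module name this kernel ships.
conntrack_module() {
  if modinfo nf_conntrack_ipv4 >/dev/null 2>&1; then
    echo nf_conntrack_ipv4
  else
    echo nf_conntrack
  fi
}

# Hypothetical helper: write the IPVS module-loading script and make it executable.
write_ipvs_modules() {
  out="${1:-/etc/sysconfig/modules/ipvs.modules}"
  ct=$(conntrack_module)
  {
    echo '#!/bin/bash'
    for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh "$ct"; do
      echo "modprobe -- $m"
    done
  } > "$out"
  chmod 755 "$out"
}
```

After `write_ipvs_modules`, run the script with `/bin/bash` as above and check with `lsmod | grep -e ip_vs -e nf_conntrack`.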

Initialize the master node

- Generate the default config file

kubeadm config print init-defaults > kubeadm.yaml

- Edit kubeadm.yaml

vim kubeadm.yaml

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.84.128 # change to the master's IP
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  name: master # change to the master's hostname
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers # use the Alibaba Cloud image mirror
kind: ClusterConfiguration
kubernetesVersion: 1.26.0 # must match the installed kubeadm version
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16 # Pod network CIDR
scheduler: {}
### Added: set the kubelet cgroup driver to systemd
---
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
cgroupDriver: systemd
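kube-proxy runs as a DaemonSet that kubeadm configures from a KubeProxyConfiguration; if kubeadm.yaml does not contain one, kube-proxy uses its default mode (iptables on Linux) and the IPVS modules loaded earlier go unused. A sketch that appends such a document to kubeadm.yaml before running `kubeadm init`; the helper name is hypothetical, while `kubeproxy.config.k8s.io/v1alpha1` and `mode: ipvs` are the upstream KubeProxyConfiguration fields:

```shell
#!/bin/sh
# Hypothetical helper: append a KubeProxyConfiguration selecting IPVS mode.
append_kube_proxy_ipvs() {
  cfg="${1:-kubeadm.yaml}"
  # Ensure the previous YAML document ends with a newline before "---".
  if [ -s "$cfg" ] && [ -n "$(tail -c 1 "$cfg")" ]; then
    echo >> "$cfg"
  fi
  cat >> "$cfg" <<'EOF'
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF
}
```

After initialization, `kubectl -n kube-system get cm kube-proxy -o yaml | grep mode` and `ipvsadm -Ln` show whether IPVS is in effect.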

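- Pull the images. The version here should match kubernetesVersion in kubeadm.yaml; the command and output below were captured with v1.26.2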

kubeadm config images pull --image-repository=registry.aliyuncs.com/google_containers --kubernetes-version=v1.26.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.26.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.26.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.26.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.26.2

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.9

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.6-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:v1.9.3
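The version named by `kubernetesVersion` is what `kubeadm init --config kubeadm.yaml` deploys, and it should match the installed kubeadm; `kubeadm config images pull --config kubeadm.yaml` also takes the repository and version from the file, avoiding a second copy of the version on the command line. A sketch of a quick consistency check, assuming the flat `kubernetesVersion:` line generated above; `config_k8s_version` is a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical helper: print kubernetesVersion from a kubeadm config as "vX.Y.Z",
# the form printed by `kubeadm version -o short`. Fails if the key is absent.
config_k8s_version() {
  v=$(awk -F': *' '/^kubernetesVersion:/ { print $2; exit }' "${1:-kubeadm.yaml}")
  v="${v%%[ #]*}"   # drop a trailing "# comment"
  [ -n "$v" ] || return 1
  case "$v" in
    v*) echo "$v" ;;
    *) echo "v$v" ;;
  esac
}
```

Usage: `[ "$(config_k8s_version kubeadm.yaml)" = "$(kubeadm version -o short)" ] || echo "kubernetesVersion does not match kubeadm"`.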

- Initialize

kubeadm init --config kubeadm.yaml

After a while, output like the following indicates that the master node has been initialized:

[init] Using Kubernetes version: v1.26.2

[preflight] Running pre-flight checks

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master] and IPs [10.96.0.1 192.168.84.128]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master] and IPs [192.168.84.128 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master] and IPs [192.168.84.128 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 7.001191 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.84.128:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:13dbb19283360eed18d55d1ee758e183ad79f123dc2598afaa76eac0dc0d9890

- Set up kubectl access (copy the admin kubeconfig)

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

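Join the worker node to the cluster by running the kubeadm join command printed by kubeadm init; with this guide's addresses it looks like this:

[root@node ~]# kubeadm join 192.168.84.128:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:13dbb19283360eed18d55d1ee758e183ad79f123dc2598afaa76eac0dc0d9890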

Output like the following means the node has joined the cluster successfully:

[preflight] Running pre-flight checks

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
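The join command relies on a bootstrap token with a 24-hour TTL (`ttl: 24h0m0s` in kubeadm.yaml). After it expires, `kubeadm token create --print-join-command` on the master prints a fresh command. The `--discovery-token-ca-cert-hash` value can also be recomputed from the cluster CA; the pipeline below is the one given in the kubeadm documentation, wrapped in a hypothetical `ca_cert_hash` function:

```shell
#!/bin/sh
# Hypothetical helper: SHA-256 of the CA's DER-encoded public key, i.e. the
# hex part of --discovery-token-ca-cert-hash (prefix it with "sha256:").
ca_cert_hash() {
  crt="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -in "$crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

On the master: `echo "sha256:$(ca_cert_hash)"`.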

Verify (on the master node)

[root@master ~]# kubectl get nodes

Output:

NAME STATUS ROLES AGE VERSION

master NotReady control-plane 3m25s v1.26.0

node NotReady <none> 118s v1.26.0

[root@master ~]# kubectl get pod -A

kube-system coredns-5bbd96d687-7d6dl 0/1 Pending 0 2m47s

kube-system coredns-5bbd96d687-frz87 0/1 Pending 0 2m47s

kube-system etcd-master 1/1 Running 0 3m

kube-system kube-apiserver-master 1/1 Running 0 3m

kube-system kube-controller-manager-master 1/1 Running 0 3m

kube-system kube-proxy-7tnw2 1/1 Running 0 2m47s

kube-system kube-proxy-lmh4z 1/1 Running 0 74s

kube-system kube-scheduler-master 1/1 Running 0 3m1s

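Note: at this point both nodes (one master, one worker) are NotReady, and the coredns pods are Pending. This is expected, because no network plugin has been installed yet. I chose Calico.

Install the network plugin (master node)

- Download the manifest of the network plugin you chose; here I use Calico

curl https://raw.githubusercontent.com/projectcalico/calico/v3.25.0/manifests/calico.yaml -O

- Install

kubectl apply -f calico.yaml

Problem: Kubernetes failed to pull the Calico images, with errors like the following in the containerd log: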

Dec 02 21:58:49 node2 containerd[7285]: time="2022-12-02T21:58:49.627179374+08:00" level=error msg="Failed to handle backOff event &ImageCreate{Name:docker.io/calico/cni:v3.24.5,Labels:map[string]string{io.cri-containerd.image: managed,},XXX_unrecognized:[],} for docker.io/calico/cni:v3.24.5" error="update image store for \"docker.io/calico/cni:v3.24.5\": get image info from containerd: get image diffIDs: unexpected media type text/html for sha256:0238205317023105e6589e4001e2a3d81b84c71740b0d9563f6157ddb32c4ea4: not found"

Dec 02 21:58:50 node2 containerd[7285]: time="2022-12-02T21:58:50.627858264+08:00" level=info msg="ImageCreate event &ImageCreate{Name:docker.io/calico/kube-controllers:v3.24.5,Labels:map[string]string{io.cri-containerd.image: managed,},XXX_unrecognized:[],}"

Dec 02 21:58:50 node2 containerd[7285]: time="2022-12-02T21:58:50.627954441+08:00" level=error msg="Failed to handle backOff event &ImageCreate{Name:docker.io/calico/kube-controllers:v3.24.5,Labels:map[string]string{io.cri-containerd.image: managed,},XXX_unrecognized:[],} for docker.io/calico/kube-controllers:v3.24.5" error="update image store for \"docker.io/calico/kube-controllers:v3.24.5\": get image info from containerd: get image diffIDs: unexpected media type text/html for sha256:0238205317023105e6589e4001e2a3d81b84c71740b0d9563f6157ddb32c4ea4: not found"

Dec 02 21:59:01 node2 containerd[7285]: time="2022-12-02T21:59:01.446288924+08:00" level=info msg="Events for \"docker.io/calico/cni:v3.24.5\" is in backoff, enqueue event &ImageDelete{Name:docker.io/calico/cni:v3.24.5,XXX_unrecognized:[],}"

Dec 02 21:59:10 node2 containerd[7285]: time="2022-12-02T21:59:10.628919112+08:00" level=info msg="ImageCreate event &ImageCreate{Name:docker.io/calico/node:v3.24.5,Labels:map[string]string{io.cri-containerd.image: managed,},XXX_unrecognized:[],}"

Dec 02 21:59:10 node2 containerd[7285]: time="2022-12-02T21:59:10.629086106+08:00" level=error msg="Failed to handle backOff event &ImageCreate{Name:docker.io/calico/node:v3.24.5,Labels:map[string]string{io.cri-containerd.image: managed,},XXX_unrecognized:[],} for docker.io/calico/node:v3.24.5" error="update image store for \"docker.io/calico/node:v3.24.5\": get image info from containerd: get image diffIDs: unexpected media type text/html for sha256:0238205317023105e6589e4001e2a3d81b84c71740b0d9563f6157ddb32c4ea4: not found"

Dec 02 21:59:39 node2 containerd[7285]: time="2022-12-02T21:59:39.956573672+08:00" level=error msg="(*service).Write failed" error="rpc error: code = Canceled desc = context canceled" expected="sha256:0797c5cde8c8c54e2b4c3035fc178e8e062d3636855b72b4cfbb37e7a4fb133d" ref="layer-sha256:0797c5cde8c8c54e2b4c3035fc178e8e062d3636855b72b4cfbb37e7a4fb133d" total=86081905

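Solution reference: https://blog.csdn.net/MssGuo/article/details/128149704

First attempt (from the reference): point the containerd mirror configuration at your own Alibaba Cloud accelerator address

vim /etc/containerd/certs.d/docker.io/hosts.toml

server = "https://docker.io"

[host."https://xxxmyelo.mirror.aliyuncs.com"] # set this to your own Alibaba Cloud accelerator address

capabilities = ["pull", "resolve"]

systemctl stop containerd.service && systemctl start containerd.service

Still not working. Argh!

On other users' advice, I removed the containerd registry mirror configured earlier by setting config_path in /etc/containerd/config.toml back to an empty string:

[plugins."io.containerd.grpc.v1.cri".registry]

config_path = "" # back to an empty path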

Restart containerd

systemctl restart containerd
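The `unexpected media type text/html` errors suggest the mirror answered some blob requests with an HTML page instead of image data, which fits the fix of bypassing the mirror. A sketch of the config change above as a script; it only rewrites the exact `config_path` value set in the mirror step, so any other `config_path` keys stay untouched. The function name is hypothetical and GNU `sed` is assumed:

```shell
#!/bin/sh
# Hypothetical helper: stop containerd from using certs.d/hosts.toml mirrors
# by resetting the CRI registry config_path to "" and showing the result.
reset_registry_config_path() {
  cfg="${1:-/etc/containerd/config.toml}"
  sed -i 's#config_path = "/etc/containerd/certs.d"#config_path = ""#' "$cfg"
  grep -n 'config_path' "$cfg"
}
```

After `reset_registry_config_path` and `systemctl restart containerd`, `crictl pull docker.io/calico/cni:v3.25.0` tests a pull directly instead of waiting on the pods.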

After a while, check the pod status

watch kubectl get pods -A

All pods are now running:

kube-system calico-kube-controllers-57b57c56f-mc5bc 1/1 Running 0 3m45s

kube-system calico-node-jrggj 1/1 Running 0 3m45s

kube-system calico-node-wq6q2 1/1 Running 0 3m45s

kube-system coredns-5bbd96d687-7d6dl 1/1 Running 0 8m30s

kube-system coredns-5bbd96d687-frz87 1/1 Running 0 8m30s

kube-system etcd-master 1/1 Running 0 8m43s

kube-system kube-apiserver-master 1/1 Running 0 8m43s

kube-system kube-controller-manager-master 1/1 Running 0 8m43s

kube-system kube-proxy-7tnw2 1/1 Running 0 8m30s

kube-system kube-proxy-lmh4z 1/1 Running 0 6m57s

kube-system kube-scheduler-master 1/1 Running 0 8m44s

The coredns and Calico pods are now all Running.

Check the node status

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane 12m v1.26.2

node1 Ready <none> 10m v1.26.2

Both nodes are now Ready.
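The readiness check can also be scripted. `kubectl wait --for=condition=Ready node --all --timeout=300s` blocks until every node reports Ready; for a one-off check, a small filter over `kubectl get nodes --no-headers` works too. A sketch of the latter as a hypothetical helper; it treats anything other than exactly `Ready` (for example `Ready,SchedulingDisabled`) as not ready, and an empty node list as a failure:

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get nodes --no-headers` on stdin and
# exit non-zero unless at least one node is listed and all are "Ready".
all_nodes_ready() {
  awk '
    { total++ }
    $2 != "Ready" { print "not ready: " $1 " (" $2 ")"; bad++ }
    END { if (total == 0 || bad > 0) exit 1 }
  '
}
```

Usage: `kubectl get nodes --no-headers | all_nodes_ready && echo "all nodes Ready"`.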

At this point, Kubernetes v1.26.2 has been installed successfully.

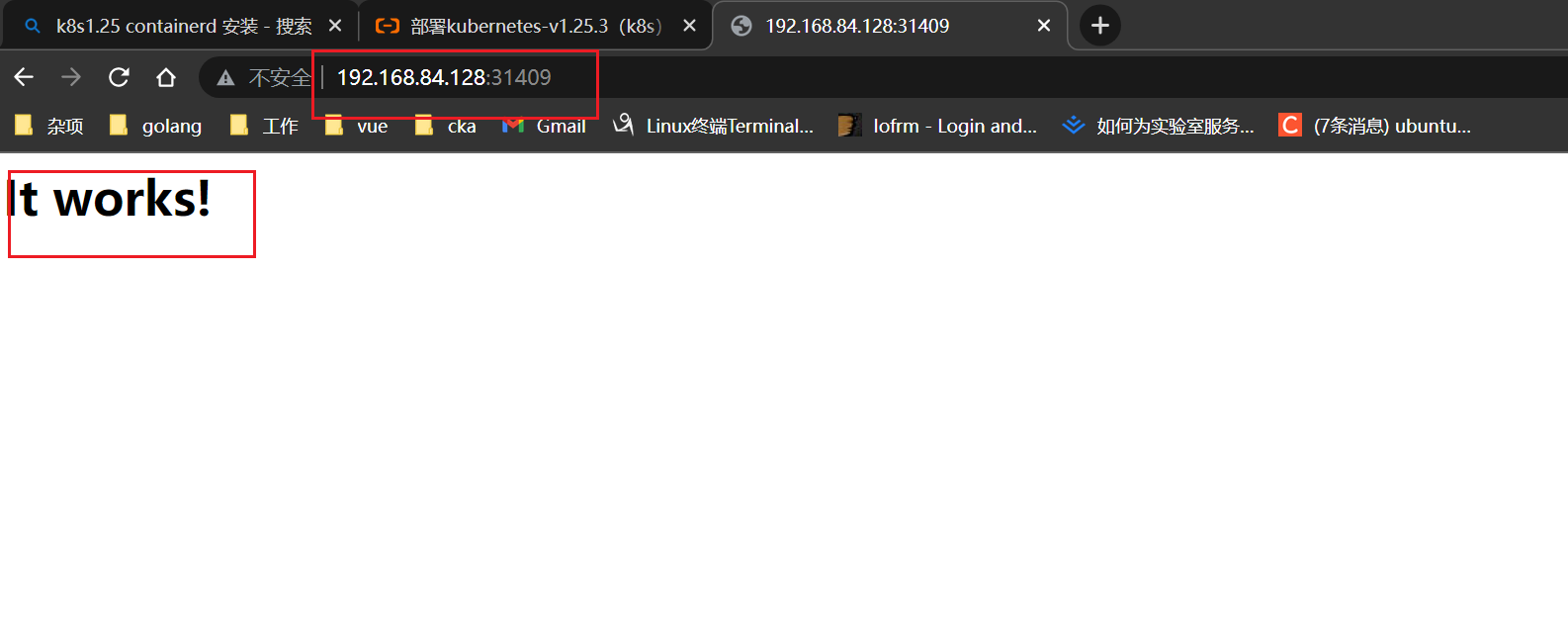

Test the Kubernetes cluster

[root@master ~]# kubectl create deployment httpd --image=httpd

[root@master ~]# kubectl expose deployment httpd --port=80 --type=NodePort

[root@master ~]# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/httpd-975f8444c-t6z2c 1/1 Running 0 75s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/httpd NodePort 10.106.224.79 <none> 80:31409/TCP 63s

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 14m

Visit http://192.168.84.128:31409 (31409 is the NodePort shown above)

The browser shows the following, so the test succeeded:

It works!
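The same test can be run from a shell. A minimal sketch, assuming `curl` is installed; both functions are hypothetical. On the master, the NodePort can also be read directly with `kubectl get svc httpd -o jsonpath='{.spec.ports[0].nodePort}'`:

```shell
#!/bin/sh
# Hypothetical helper: extract the NodePort from a PORT(S) value,
# e.g. "80:31409/TCP" -> "31409".
nodeport_from_ports() {
  p="${1#*:}"       # drop the service port, "80:"
  echo "${p%%/*}"   # drop the protocol, "/TCP"
}

# Hypothetical smoke test: fetch the page through the NodePort and look for
# Apache httpd's default "It works!" text.
check_httpd() {
  host="$1"; port="$2"
  if curl -fsS --max-time 5 "http://$host:$port/" | grep -q 'It works!'; then
    echo "httpd reachable at http://$host:$port"
  else
    echo "httpd NOT reachable at http://$host:$port" >&2
    return 1
  fi
}
```

Usage: `check_httpd 192.168.84.128 "$(nodeport_from_ports 80:31409/TCP)"`.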
