Bonus: Signal Classification

1. Raw data

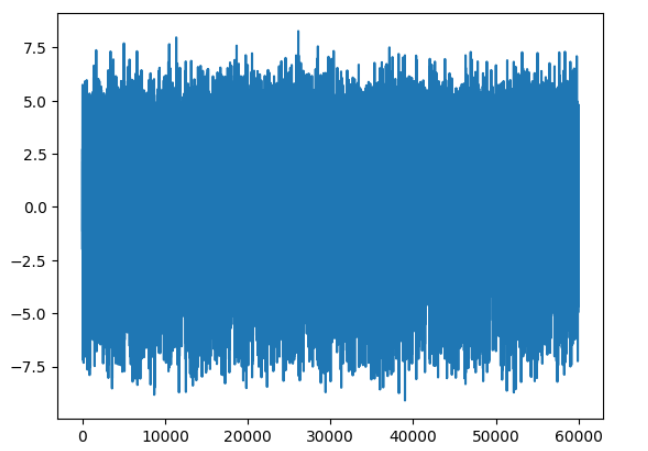

The data consists of normal signals and abnormal signals; each signal contains roughly 60,000 data points.

2. Model construction

import glob
import os

import matplotlib
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import torch
import torch.nn.functional as F
import torchvision
import torchvision.transforms as transforms
from PIL import Image
from scipy.signal import stft
from tensorboard import notebook
from torch import nn, optim
from torch.utils.data import Dataset, DataLoader
from torch.utils.tensorboard import SummaryWriter
from torchvision.datasets import ImageFolder
from tqdm import tqdm

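# STFT parameters
fs = 1000       # sampling frequency (Hz)
nperseg = 256   # length of each time window (samples)
noverlap = 128  # overlap between adjacent windows (samples)

# Convert each signal into a spectrogram image.
# data_list (paths of the raw signal files) and img_path (output directory)
# are assumed to be defined beforehand.
for item in data_list:
    with open(item, 'rb') as fp:
        tmp = fp.read()
    # One value per line; skip blank lines.
    signal_data = np.array([float(x) for x in tmp.decode().split('\n') if x.strip()])
    f, t, Zxx = stft(signal_data, fs=fs, nperseg=nperseg, noverlap=noverlap)
    # 2.24 x 2.24 inches at matplotlib's default 100 dpi gives a 224 x 224 pixel image.
    plt.figure(figsize=(2.24, 2.24))
    plt.pcolormesh(t, f, np.abs(Zxx), shading=None)
    plt.axis('off')
    # os.path.basename handles both '/' and '\\' separators on Windows.
    plt.savefig(os.path.join(img_path, os.path.basename(item) + '.jpg'))
    plt.close()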
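To see what the STFT step produces before it is rendered as an image, the following is a minimal sketch on a synthetic signal. The 50 Hz sine, the noise level, and the variable names `time` and `magnitude` are assumptions made for illustration; only `fs`, `nperseg`, and `noverlap` come from the code above.

```python
import numpy as np
from scipy.signal import stft

fs = 1000        # sampling frequency in Hz (same value as above)
nperseg = 256    # window length in samples
noverlap = 128   # overlap between consecutive windows

# Hypothetical test signal: 60000 samples of a 50 Hz sine plus a little noise.
rng = np.random.default_rng(0)
n_samples = 60000
time = np.arange(n_samples) / fs
signal_data = np.sin(2 * np.pi * 50 * time) + 0.1 * rng.standard_normal(n_samples)

f, t, Zxx = stft(signal_data, fs=fs, nperseg=nperseg, noverlap=noverlap)
magnitude = np.abs(Zxx)

# One row per frequency bin (nperseg // 2 + 1 of them), one column per time frame.
print(magnitude.shape)

# The bin with the largest average magnitude should sit close to 50 Hz.
peak_freq = f[magnitude.mean(axis=1).argmax()]
print(peak_freq)
```

With these parameters the frequency axis runs from 0 to `fs / 2` in steps of `fs / nperseg`, so the spectrogram resolves frequencies to roughly 3.9 Hz.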

# Hyperparameters
batch_size = 32
learning_rate = 0.001
num_epochs = 100

# Device configuration
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Load the raw signals
signal_datas = []
for item in data_list:
    with open(item, 'rb') as fp:
        tmp = fp.read()
    signal_data = np.array([float(x) for x in tmp.decode().split('\n') if x.strip()])
    signal_datas.append(signal_data)
# Truncate every signal to 60000 points so they stack into one array.
signal_datas = np.array([x[:60000] for x in signal_datas]).astype(np.float32)

# Load the spectrogram images.
# Note: data_list and data_list_new must list the samples in the same order,
# otherwise signals, spectrograms, and labels will not line up.
data_list_new = glob.glob(os.path.join('D:/data/20210325/stft_gen/img_stft/', '*.jpg'))
spectrograms = []
for item in data_list_new:
    spectrograms.append(np.asarray(Image.open(item)))
spectrograms = np.array(spectrograms).astype(np.float32)

# The label is the integer between the last underscore of the path and the next dot.
targets = [int(x.split('_')[-1].split('.')[0]) for x in data_list_new]
targets = np.array(targets).astype(np.float32)
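The label parsing above depends on how the image files are named. The sketch below isolates that rule in a small helper; the function name `label_from_path` and the example file names are hypothetical, chosen only to match the "integer after the last underscore" pattern the code implies.

```python
import os

def label_from_path(path):
    """Return the integer that follows the last underscore of the file name."""
    name = os.path.basename(path)  # drop the directory part first
    return int(name.split('_')[-1].split('.')[0])

# Hypothetical file names of the form "<anything>_<label>.<ext>.jpg".
paths = [
    'D:/data/20210325/stft_gen/img_stft/sample_0.txt.jpg',
    'D:/data/20210325/stft_gen/img_stft/sample_1.txt.jpg',
]
labels = [label_from_path(p) for p in paths]
print(labels)  # [0, 1]
```

Taking the base name first is a small design choice: it keeps underscores in directory names (such as `img_stft`) from affecting the result if a file name ever lacks an underscore.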

# Dataset class
class SignalDataset(Dataset):
    def __init__(self, signals, spectrograms, labels):
        self.signals = signals
        self.spectrograms = spectrograms
        self.labels = labels

    def __len__(self):
        return len(self.signals)

    def __getitem__(self, idx):
        # Return the raw signal, its spectrogram image, and the label for one sample.
        signal = self.signals[idx]
        label = self.labels[idx]
        spectrogram = self.spectrograms[idx]
        return signal, spectrogram, label

# Split into training and test sets (first 80% for training, no shuffling)
split = int(0.8 * len(signal_datas))
train_signals, test_signals = signal_datas[:split], signal_datas[split:]
train_spectrograms, test_spectrograms = spectrograms[:split], spectrograms[split:]
train_labels, test_labels = targets[:split], targets[split:]

# Build the datasets and data loaders
train_dataset = SignalDataset(train_signals, train_spectrograms, train_labels)
test_dataset = SignalDataset(test_signals, test_spectrograms, test_labels)
train_loader = DataLoader(dataset=train_dataset, batch_size=batch_size, shuffle=True)
test_loader = DataLoader(dataset=test_dataset, batch_size=batch_size, shuffle=False)
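To check what one batch from such a loader looks like, here is a minimal sketch with random stand-in data. The class name `PairedDataset`, the sample count, and the shortened signal length of 600 are assumptions for illustration; the real arrays are far larger.

```python
import numpy as np
import torch
from torch.utils.data import Dataset, DataLoader

class PairedDataset(Dataset):
    """Stand-in dataset returning (signal, spectrogram, label) triples."""
    def __init__(self, signals, spectrograms, labels):
        self.signals = signals
        self.spectrograms = spectrograms
        self.labels = labels

    def __len__(self):
        return len(self.signals)

    def __getitem__(self, idx):
        return self.signals[idx], self.spectrograms[idx], self.labels[idx]

# Random data: 10 samples, signals of length 600, 224 x 224 RGB images in HWC layout.
rng = np.random.default_rng(0)
signals = rng.standard_normal((10, 600)).astype(np.float32)
images = rng.random((10, 224, 224, 3)).astype(np.float32)
labels = rng.integers(0, 2, size=10).astype(np.float32)

loader = DataLoader(PairedDataset(signals, images, labels), batch_size=4, shuffle=False)
signals_batch, images_batch, labels_batch = next(iter(loader))

# The default collate function stacks the NumPy items into tensors.
print(signals_batch.shape, images_batch.shape, labels_batch.shape)
print(labels_batch.dtype)
```

The images arrive in height-width-channel order, which is why the ResNet branch later permutes them to channel-first before the forward pass. The labels are float tensors here, so they need a cast to `torch.long` before being passed to `nn.CrossEntropyLoss`.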

"""

# Run once: download the pretrained ResNet-18 weights and save them locally.
model_resnet18 = torchvision.models.resnet18(pretrained=True)
# Save path
save_path = "D:/data/20210325/resnet18.pth"
# Save the model parameters
torch.save(model_resnet18.state_dict(), save_path)
"""

# Load the saved weights into a fresh ResNet-18
save_path = "D:/data/20210325/resnet18.pth"
resnet = torchvision.models.resnet18()
resnet.load_state_dict(torch.load(save_path))

# Build the model
class LSTMModel(nn.Module):
    def __init__(self, input_size, hidden_size, num_layers, num_classes):
        super(LSTMModel, self).__init__()
        self.lstm = nn.LSTM(input_size, hidden_size, num_layers, batch_first=True)
        # Projects the LSTM feature; num_classes is not used in this branch.
        self.fc = nn.Linear(hidden_size, hidden_size)

    def forward(self, x):
        # LSTM feature extraction.
        # x is (batch, signal_length); unsqueeze makes it (batch, 1, signal_length),
        # i.e. a sequence of length 1 whose single step holds the whole signal.
        out, _ = self.lstm(torch.unsqueeze(x, dim=1))
        out = out[:, -1, :]  # use the output of the last time step as the feature
        out = self.fc(out)
        return out


class ResNetModel(nn.Module):
    def __init__(self, hidden_size):
        super(ResNetModel, self).__init__()
        # A pretrained ResNet or a custom ResNet can be used here.
        self.resnet = resnet
        num_ftrs = self.resnet.fc.in_features
        # Replace the classification head with a projection to hidden_size.
        self.resnet.fc = nn.Linear(num_ftrs, hidden_size)

    def forward(self, x):
        # Images arrive as (batch, H, W, C); ResNet expects (batch, C, H, W).
        out = self.resnet(torch.permute(x, (0, 3, 1, 2)))
        return out


class FusionModel(nn.Module):
    def __init__(self, lstm_input_size, resnet_input_size, hidden_size, num_classes):
        super(FusionModel, self).__init__()
        self.lstm_model = LSTMModel(lstm_input_size, hidden_size, num_layers=1, num_classes=num_classes)
        self.resnet_model = ResNetModel(hidden_size)
        # Classifier over the concatenated LSTM and ResNet features.
        self.fc = nn.Linear(hidden_size + hidden_size, num_classes)

    def forward(self, x_lstm, x_resnet):
        lstm_out = self.lstm_model(x_lstm)
        resnet_out = self.resnet_model(x_resnet)
        combined = torch.cat((lstm_out, resnet_out), dim=1)
        out = self.fc(combined)
        return out

3. Model training

# Fix the random seeds before the model is created, so that weight
# initialisation is reproducible as well as data shuffling.
seed = 1
torch.manual_seed(seed)
torch.cuda.manual_seed(seed)
# torch.cuda.manual_seed_all(seed)  # when using multiple GPUs
torch.backends.cudnn.deterministic = True
torch.backends.cudnn.benchmark = False

# Initialise the model
lstm_input_size = 60000  # length of the signal fed to the LSTM
resnet_input_size = 3    # channels of the 224 x 224 x 3 spectrogram fed to ResNet
hidden_size = 128        # size of the LSTM hidden layer
num_classes = 2          # two classes: normal and abnormal
model = FusionModel(lstm_input_size, resnet_input_size, hidden_size, num_classes).to(device)

# Loss function and optimizer
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)

writer = SummaryWriter('D:/data/20210325/tensorboard/')

# Train the model
best_val_loss = float('inf')
patience = 20
counter = 0
total_step = len(train_loader)

for epoch in tqdm(range(num_epochs)):
    model.train()
    correct = 0
    total = 0
    total_loss = 0.0
    for i, (signals, spectrograms, labels) in enumerate(train_loader):
        signals = signals.to(device)
        spectrograms = spectrograms.to(device)
        labels = labels.to(torch.long).to(device)

        # Forward pass
        outputs = model(signals, spectrograms)
        # Compute the loss
        loss = criterion(outputs, labels)

        # Backward pass and optimisation
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_loss += loss.item()
        _, predicted = torch.max(outputs, 1)
        correct += (predicted == labels).sum().item()
        total += labels.size(0)

    accuracy = correct / total
    avg_train_loss = total_loss / len(train_loader)  # mean loss per batch
    print('Epoch {}/{}, Loss: {:.4f}, Accuracy: {:.4f}'.format(epoch+1, num_epochs, avg_train_loss, accuracy))

    # Evaluate the model
    model.eval()
    with torch.no_grad():
        val_loss = 0.0
        val_correct = 0
        val_total = 0
        for signals, spectrograms, labels in test_loader:
            signals = signals.to(device)
            spectrograms = spectrograms.to(device)
            labels_val = labels.to(torch.long).to(device)
            outputs_val = model(signals, spectrograms)
            val_loss += criterion(outputs_val, labels_val).item()
            _, predicted_val = torch.max(outputs_val, 1)
            val_total += labels.size(0)
            val_correct += (predicted_val == labels_val).sum().item()
    val_accuracy = val_correct / val_total
    avg_val_loss = val_loss / len(test_loader)
    print('Val Loss: {:.4f}, Accuracy: {:.4f}'.format(avg_val_loss, val_accuracy))

    writer.add_scalar('train_loss-1', round(avg_train_loss, 4), epoch)
    writer.add_scalar('train_acc-1', round(accuracy, 4), epoch)
    writer.add_scalar('val_loss-1', round(avg_val_loss, 4), epoch)
    writer.add_scalar('val_acc-1', round(val_accuracy, 4), epoch)

    # Save the current model whenever the validation loss improves
    if avg_val_loss < best_val_loss:
        best_val_loss = avg_val_loss
        counter = 0
        torch.save(model.state_dict(), 'D:/data/20210325/best_model.pt')  # current best model
    else:
        counter += 1
        # Stop once the validation loss has not improved for `patience` epochs
        if counter >= patience:
            print("Early stopping")
            break

writer.close()  # flush any pending TensorBoard events
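The early-stopping rule in the loop above can be studied in isolation. The following minimal sketch replays the same counter logic over a list of validation losses; the function name `early_stopping_epoch` and the loss values are made up for illustration.

```python
def early_stopping_epoch(val_losses, patience):
    """Replay the patience rule and return (stop_epoch, best_epoch), both 0-based."""
    best = float('inf')
    best_epoch = None
    counter = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            # Improvement: remember it and reset the counter.
            best, best_epoch, counter = loss, epoch, 0
        else:
            counter += 1
            if counter >= patience:
                return epoch, best_epoch
    # Ran through every epoch without triggering the stop.
    return len(val_losses) - 1, best_epoch

# Made-up validation losses.
losses = [0.9, 0.7, 0.6, 0.65, 0.61, 0.62, 0.5]
print(early_stopping_epoch(losses, patience=3))  # (5, 2)
print(early_stopping_epoch(losses, patience=5))  # (6, 6)
```

With `patience=3` training stops at epoch 5 and never reaches the lower loss at epoch 6, which shows the trade-off: a small patience saves time but can stop before a later improvement.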

4. Result analysis

notebook.start('--logdir D:/data/20210325/tensorboard')

Author: lotuslaw

Source: https://www.cnblogs.com/lotuslaw/p/18164605

License: This work is licensed under the Attribution-NonCommercial-ShareAlike 4.0 International license.

Tags: PyTorch
