Bonus: Multimodal Emotion Classification

1. Raw Data

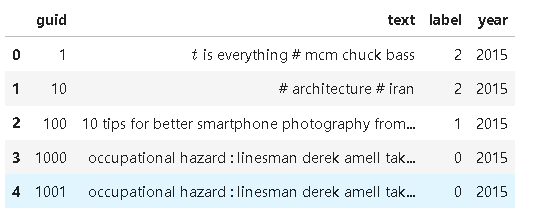

- Text

Text-image pairs, each labeled with one of three classes: positive, negative, or neutral.

- Image
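The training code later passes `df['label']` to `nn.CrossEntropyLoss` with `num_classes=3`. That means the labels must be integer class ids 0, 1 and 2. The post imports scikit-learn's `LabelEncoder` but does not show the encoding step.

The pure-Python sketch below mimics what `LabelEncoder.fit_transform` does: it sorts the distinct labels, then maps each label to its index. The label strings `positive`, `negative` and `neutral` are an assumption about how the raw data is stored.

```python
def encode_labels(labels):
    """Map string labels to integer ids the way sklearn's LabelEncoder does:
    the distinct classes are sorted, and each label becomes its index."""
    classes = sorted(set(labels))
    index = {c: i for i, c in enumerate(classes)}
    return classes, [index[c] for c in labels]


# Hypothetical raw labels; the real dataset's label strings may differ
classes, ids = encode_labels(["positive", "negative", "neutral", "positive"])
print(classes)  # ['negative', 'neutral', 'positive']
print(ids)      # [2, 0, 1, 2]
```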

2. Model Construction

```python
# Hugging Face Transformers, for the BERT text encoder
from transformers import AutoTokenizer, AutoModel

import torch
from torch import nn
from torch.utils.data import Dataset, DataLoader
from torch.utils.tensorboard import SummaryWriter
import torchvision.models as models
import torchvision.transforms as transforms
from PIL import Image
from tqdm import tqdm

# Not used below; presumably needed by the data-preparation step that builds img_data_list and df (not shown)
import glob
import os
import json
import re
import numpy as np
import pandas as pd
from sklearn.preprocessing import LabelEncoder

# Launches TensorBoard inside a Jupyter notebook
from tensorboard import notebook
```

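```python
# Download the BERT weights and tokenizer from Hugging Face in advance, then load them from a local directory
tokenizer = AutoTokenizer.from_pretrained(r"D:\data\bert\bert_base_uncased")
bert = AutoModel.from_pretrained(r"D:\data\bert\bert_base_uncased")

# Freeze BERT entirely: it serves only as a fixed text feature extractor
for param in bert.parameters():
    param.requires_grad = False
```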

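```python
# Local path of the ResNet50 weights
save_path = r"D:\data\resnet50\resnet50.pth"

# One-time step, run beforehand: load the pretrained ResNet50 (ResNet50 as an example) and save its parameters
# model = models.resnet50(pretrained=True)  # newer torchvision: models.resnet50(weights=models.ResNet50_Weights.DEFAULT)
# torch.save(model.state_dict(), save_path)

# ResNet: unfreeze layer4 only and keep every other layer frozen
resnet = models.resnet50()
resnet.load_state_dict(torch.load(save_path, map_location="cpu"))
for param in resnet.parameters():
    param.requires_grad = False
for param in resnet.layer4.parameters():
    param.requires_grad = True
```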
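The two loops above first freeze everything and then unfreeze `layer4`. The small pure-Python sketch below applies that same rule to parameter names, so you can see which weights stay trainable.

The names follow torchvision's ResNet50 naming (`conv1`, `layer1` to `layer4`, `fc`), but the list is a hand-picked, illustrative subset. On the real model you would iterate over `resnet.named_parameters()` and read each parameter's `requires_grad`.

```python
def trainable_by_name(param_names, unfrozen_prefixes=("layer4.",)):
    """Mimic 'freeze all parameters, then unfreeze layer4': a parameter is
    trainable only if its name starts with one of the unfrozen prefixes."""
    return {name: name.startswith(unfrozen_prefixes) for name in param_names}


# Illustrative subset of torchvision ResNet50 parameter names
names = [
    "conv1.weight",
    "bn1.weight",
    "layer1.0.conv1.weight",
    "layer3.5.bn3.bias",
    "layer4.0.conv1.weight",
    "layer4.0.downsample.0.weight",
    "layer4.2.bn3.bias",
    "fc.weight",
]
flags = trainable_by_name(names)
for name, trainable in flags.items():
    print(f"{name:30s} {'trainable' if trainable else 'frozen'}")
```

`fc` ends up frozen here. That does not matter, because the model later replaces it with `nn.Identity()`, which has no parameters.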

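```python
# Image preprocessing: resize to 224x224, convert to a tensor, normalize with ImageNet statistics
transform = transforms.Compose([
    transforms.Resize((224, 224)),
    transforms.CenterCrop((224, 224)),  # no-op after resizing to exactly 224x224; kept from the original
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
])
```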
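`ToTensor` scales 8-bit pixel values from the range 0 to 255 into [0, 1]. `Normalize` then computes `(x - mean) / std` for each channel, using the ImageNet statistics listed above.

The pure-Python sketch below applies the same arithmetic to a single pixel, which shows the resulting value range. The helper name `normalize_pixel` is hypothetical.

```python
# ImageNet channel statistics used by transforms.Normalize above
MEAN = (0.485, 0.456, 0.406)
STD = (0.229, 0.224, 0.225)


def normalize_pixel(rgb):
    """Apply ToTensor-style scaling (/255), then per-channel (x - mean) / std, to one RGB pixel."""
    return [(v / 255.0 - m) / s for v, m, s in zip(rgb, MEAN, STD)]


white = normalize_pixel((255, 255, 255))
black = normalize_pixel((0, 0, 0))
print([round(x, 3) for x in white])  # [2.249, 2.429, 2.64]
print([round(x, 3) for x in black])  # [-2.118, -2.036, -1.804]
```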

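```python
class ImageTextDataset(Dataset):
    """Returns (image tensor, tokenized text dict, label) triples."""

    def __init__(self, img_paths, texts, labels, img_transform, tokenizer):
        self.img_paths = img_paths
        self.texts = texts
        self.labels = labels
        self.img_transform = img_transform
        self.tokenizer = tokenizer

    def __len__(self):
        return len(self.labels)

    def __getitem__(self, idx):
        # convert("RGB") guards against grayscale/RGBA/palette images, which would break the 3-channel Normalize
        img = Image.open(self.img_paths[idx]).convert("RGB")
        img = self.img_transform(img)
        text = self.texts[idx]
        # Pad/truncate every text to 64 tokens so samples can be stacked into a batch
        inputs = self.tokenizer(text, return_tensors="pt", padding="max_length",
                                truncation=True, max_length=64)
        # squeeze(0) drops the size-1 batch dimension added by return_tensors="pt"
        return img, {k: v.squeeze(0) for k, v in inputs.items()}, self.labels[idx]
```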
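With `padding="max_length", truncation=True, max_length=64`, every text becomes exactly 64 token ids. It also gets a matching `attention_mask`: 1 for real tokens and 0 for padding. Fixed-length samples are what let the default `DataLoader` collate them into a batch.

The sketch below reproduces that behavior for an already-tokenized single sentence. It is a simplification, not the Hugging Face implementation. The ids 101, 102 and 0 for `[CLS]`, `[SEP]` and `[PAD]` are those of the `bert-base-uncased` vocabulary. The word ids in the usage example are made up.

```python
def pad_or_truncate(word_ids, max_length=64, cls_id=101, sep_id=102, pad_id=0):
    """Wrap word ids in [CLS] ... [SEP], truncate to max_length, pad with [PAD], and build the attention mask."""
    body = list(word_ids)[: max_length - 2]  # reserve two slots for [CLS] and [SEP]
    input_ids = [cls_id] + body + [sep_id]
    attention_mask = [1] * len(input_ids)
    n_pad = max_length - len(input_ids)
    return input_ids + [pad_id] * n_pad, attention_mask + [0] * n_pad


# Hypothetical word ids for a short sentence
ids, mask = pad_or_truncate([2023, 3185, 2003, 2307], max_length=8)
print(ids)   # [101, 2023, 3185, 2003, 2307, 102, 0, 0]
print(mask)  # [1, 1, 1, 1, 1, 1, 0, 0]
```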

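```python
class EmotionModel(nn.Module):
    def __init__(self, num_classes):
        super().__init__()
        # Pretrained ResNet (loaded above); replace the final fully connected layer with an
        # identity mapping so the network outputs the 2048-d pooled image features
        self.resnet = resnet
        self.resnet.fc = nn.Identity()
        # BERT tokenizer and pretrained BERT model (loaded above); BERT yields 768-d features
        self.bert_tokenizer = tokenizer
        self.bert = bert
        # Attention: score the concatenated image+text features, one weight per sample.
        # The original ended with nn.Softmax(dim=1), but dim 1 has size 1 here, so the softmax
        # always returned 1.0 and the attention had no effect; Sigmoid gives a weight in (0, 1).
        self.attention = nn.Sequential(
            nn.Linear(768 + 2048, 512),
            nn.Tanh(),
            nn.Linear(512, 1),
            nn.Sigmoid(),
        )
        # Multilayer perceptron (MLP) for emotion classification over
        # [text (768) | weighted image (2048) | raw image (2048)]
        self.mlp = nn.Sequential(
            nn.Linear(768 + 2048 + 2048, 1024),
            nn.ReLU(),
            nn.Linear(1024, 512),
            nn.ReLU(),
            nn.Linear(512, num_classes),
        )
        # Xavier-initialize the weights of the linear layers in the attention module and the MLP
        for layer in list(self.attention) + list(self.mlp):
            if isinstance(layer, nn.Linear):
                nn.init.xavier_normal_(layer.weight)

    def forward(self, imgs, texts):
        # Image features: (batch, 2048)
        img_features = self.resnet(imgs)
        # Text features: the [CLS] token of BERT's last hidden state, (batch, 768)
        text_features = self.bert(input_ids=texts['input_ids'],
                                  attention_mask=texts['attention_mask'])[0][:, 0, :]
        # Combine image and text features: (batch, 2816)
        combined_features = torch.cat((img_features, text_features), dim=1)
        # Attention weights: (batch, 1)
        attention_weights = self.attention(combined_features)
        # Weight the image features by the attention (broadcast over the 2048 dims)
        attention_features = img_features * attention_weights
        # Concatenate text features, weighted image features and raw image features: (batch, 4864)
        fused_features = torch.cat((text_features, attention_features, img_features), dim=1)
        # Emotion classification through the MLP: (batch, num_classes) logits
        return self.mlp(fused_features)
```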
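The dimension bookkeeping in `forward` can be checked by replaying it with NumPy arrays of random stand-in features. The attention receives 2048 image + 768 text = 2816 inputs, and the MLP receives 768 + 2048 + 2048 = 4864 inputs.

The sketch also shows that a softmax over `dim=1` cannot act as attention here. With only one score per sample, that softmax is identically 1, whereas a sigmoid gives a per-sample weight in (0, 1). The random arrays are placeholders for the real ResNet and BERT outputs, and the batch size of 4 is arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
batch = 4
img_features = rng.standard_normal((batch, 2048))    # stand-in for ResNet50 pooled output
last_hidden = rng.standard_normal((batch, 64, 768))  # stand-in for BERT last_hidden_state
text_features = last_hidden[:, 0, :]                 # [CLS] token, shape (batch, 768)

combined = np.concatenate([img_features, text_features], axis=1)  # input to the attention

scores = rng.standard_normal((batch, 1))  # stand-in for the attention MLP's single score per sample
# Softmax over an axis of size 1: every weight is exactly 1
softmax_w = np.exp(scores) / np.exp(scores).sum(axis=1, keepdims=True)
# Sigmoid: a per-sample weight strictly between 0 and 1
sigmoid_w = 1.0 / (1.0 + np.exp(-scores))

attention_features = img_features * sigmoid_w  # (batch, 1) broadcast over 2048 dims
fused = np.concatenate([text_features, attention_features, img_features], axis=1)
print(combined.shape, fused.shape)  # (4, 2816) (4, 4864)
print(softmax_w.ravel())            # [1. 1. 1. 1.]
```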

3. Model Training

```python
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Fix random seeds for reproducibility
seed = 1
torch.manual_seed(seed)
torch.cuda.manual_seed(seed)
# torch.cuda.manual_seed_all(seed)  # if using multiple GPUs
torch.backends.cudnn.deterministic = True
torch.backends.cudnn.benchmark = False
```

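```python
# img_data_list (image paths) and df (with 'text' and integer 'label' columns, 0..2)
# come from a data-preparation step not shown in this post
dataset = ImageTextDataset(img_data_list, df['text'].values, df['label'].values, transform, tokenizer)

# Hold out 3000 samples for validation
test_size = 3000
train_size = len(dataset) - test_size
train_dataset, test_dataset = torch.utils.data.random_split(dataset, [train_size, test_size])

train_loader = DataLoader(train_dataset, batch_size=128, shuffle=True)
# Evaluation does not need shuffling
test_loader = DataLoader(test_dataset, batch_size=128, shuffle=False)
```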
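`random_split` shuffles indices using PyTorch's global random generator. The `torch.manual_seed(seed)` call above fixes that generator, so the same 3000 samples land in the validation set on every run. Passing `generator=torch.Generator().manual_seed(seed)` to `random_split` makes this explicit.

The pure-Python sketch below illustrates the same idea with Python's `random` module. It does not reproduce PyTorch's exact permutation, and the dataset size of 10,000 is hypothetical.

```python
import random


def seeded_split(n, test_size, seed):
    """Shuffle indices 0..n-1 with a seeded RNG; the first n - test_size go to training, the rest to validation."""
    rng = random.Random(seed)
    indices = list(range(n))
    rng.shuffle(indices)
    return indices[: n - test_size], indices[n - test_size:]


train_idx, val_idx = seeded_split(10_000, 3000, seed=1)
print(len(train_idx), len(val_idx))  # 7000 3000
# Same seed, same split
print(seeded_split(10_000, 3000, seed=1) == (train_idx, val_idx))  # True
```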

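```python
model = EmotionModel(num_classes=3).to(device)
# Frozen parameters never receive gradients, so the optimizer leaves them untouched.
# Note: PyTorch's default lr for Adadelta is 1.0; 0.01 is the author's choice.
optimizer = torch.optim.Adadelta(model.parameters(), lr=0.01)
criterion = nn.CrossEntropyLoss()

num_epochs = 300
best_val_loss = float('inf')
patience = 10  # stop after this many consecutive epochs without validation-loss improvement
counter = 0
writer = SummaryWriter('./tensorboard/')
```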
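Adadelta adapts its step size from running averages of squared gradients and squared updates. PyTorch then multiplies each update by `lr`, whose default for `torch.optim.Adadelta` is 1.0.

The sketch below is a scalar re-implementation of that update rule, using PyTorch's defaults `rho=0.9` and `eps=1e-6`, applied to the toy objective f(x) = x². It is meant only to show how much `lr=0.01`, as used above, shrinks the steps relative to the default. The toy objective and the step count are arbitrary choices.

```python
import math


def adadelta_minimize(lr, steps=100, x=1.0, rho=0.9, eps=1e-6):
    """Scalar Adadelta (same update order as torch.optim.Adadelta) on f(x) = x**2."""
    square_avg = 0.0  # running average of squared gradients
    acc_delta = 0.0   # running average of squared updates
    for _ in range(steps):
        grad = 2.0 * x  # derivative of x**2
        square_avg = rho * square_avg + (1 - rho) * grad * grad
        delta = math.sqrt(acc_delta + eps) / math.sqrt(square_avg + eps) * grad
        acc_delta = rho * acc_delta + (1 - rho) * delta * delta
        x -= lr * delta  # lr scales the adaptive step
    return x


x_default = adadelta_minimize(lr=1.0)
x_small = adadelta_minimize(lr=0.01)
print(f"lr=1.0  -> x = {x_default:.4f}")
print(f"lr=0.01 -> x = {x_small:.4f}")
```

Adadelta's steps start small even at the default rate, because the accumulated update average begins at zero. Dividing `lr` by 100 slows it down further.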

```python
for epoch in range(num_epochs):
    # ---- Training ----
    model.train()
    total_loss = 0.0
    correct = 0
    total = 0
    for imgs, texts, labels in tqdm(train_loader, desc="Training"):
        imgs = imgs.to(device)
        texts = {k: v.to(device) for k, v in texts.items()}
        labels = labels.long().to(device)

        optimizer.zero_grad()
        outputs = model(imgs, texts)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()

        total_loss += loss.item()
        predicted = outputs.argmax(dim=1)
        correct += (predicted == labels).sum().item()
        total += labels.size(0)

    avg_train_loss = total_loss / len(train_loader)
    accuracy = correct / total
    writer.add_scalar('train_loss', round(avg_train_loss, 4), epoch)
    writer.add_scalar('train_acc', round(accuracy, 4), epoch)
    print(f"Epoch {epoch+1}/{num_epochs}, Loss: {avg_train_loss:.4f}, Accuracy: {accuracy:.4f}")

    # ---- Evaluation on the validation set ----
    model.eval()
    val_loss = 0.0
    val_correct = 0
    val_total = 0
    with torch.no_grad():
        for imgs_val, texts_val, labels_val in tqdm(test_loader, desc="Validation"):
            imgs_val = imgs_val.to(device)
            texts_val = {k: v.to(device) for k, v in texts_val.items()}
            labels_val = labels_val.long().to(device)
            outputs_val = model(imgs_val, texts_val)
            val_loss += criterion(outputs_val, labels_val).item()
            val_correct += (outputs_val.argmax(dim=1) == labels_val).sum().item()
            val_total += labels_val.size(0)

    avg_val_loss = val_loss / len(test_loader)
    val_accuracy = val_correct / val_total
    writer.add_scalar('val_loss', round(avg_val_loss, 4), epoch)
    writer.add_scalar('val_acc', round(val_accuracy, 4), epoch)
    print(f"Epoch {epoch+1}/{num_epochs}, Val Loss: {avg_val_loss:.4f}, Val Accuracy: {val_accuracy:.4f}")

    # If the validation loss improved, save the current model
    if avg_val_loss < best_val_loss:
        best_val_loss = avg_val_loss
        counter = 0
        torch.save(model.state_dict(), 'best_model_2.pt')  # save the current best model
    else:
        counter += 1
        # After 5 consecutive epochs without improvement, divide the learning rate by 10
        if counter == 5:
            optimizer.param_groups[0]['lr'] /= 10
            print(f'lr changed to: {optimizer.param_groups[0]["lr"]}')
        # After `patience` consecutive epochs without improvement, stop training
        if counter >= patience:
            print("Early stopping")
            break

writer.close()
```
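The end-of-epoch logic for checkpointing, learning-rate decay and early stopping can be checked without training anything: just feed it a sequence of validation losses.

The function below is a hypothetical pure-Python replica of that logic. It saves on improvement, divides `lr` by 10 after 5 epochs without improvement, and stops after `patience = 10`. The loss sequence is made up.

```python
def simulate_schedule(val_losses, lr=0.01, decay_at=5, patience=10):
    """Replay the save / lr-decay / early-stop rules on a list of per-epoch validation losses."""
    best = float("inf")
    counter = 0
    events = []
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, counter = loss, 0
            events.append((epoch, "save"))
        else:
            counter += 1
            if counter == decay_at:
                lr /= 10
                events.append((epoch, f"lr={lr:g}"))
            if counter >= patience:
                events.append((epoch, "stop"))
                break
    return lr, events


# Improves for 3 epochs, then plateaus
final_lr, events = simulate_schedule([1.0, 0.8, 0.7] + [0.75] * 12)
print(events)  # [(0, 'save'), (1, 'save'), (2, 'save'), (7, 'lr=0.001'), (12, 'stop')]
```

A later improvement resets the counter, so a run that plateaus again can have its learning rate divided by 10 more than once.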

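4. Results Analysis

```python
# Launch TensorBoard inside Jupyter to view the loss/accuracy curves logged during training
notebook.start('--logdir ./tensorboard')
```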

Author: lotuslaw

Source: https://www.cnblogs.com/lotuslaw/p/18164589

License: This work is licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International license.

Tags: Pytorch
