1-1 Example Workflow for Modeling Structured Data

0. Environment Setup

import os

# On macOS, running PyTorch and matplotlib together in Jupyter may require changing an environment variable
# os.environ["KMP_DUPLICATE_LIB_OK"]="TRUE"

!pip install -U torchkeras -i https://pypi.douban.com/simple

!pip install pandas -i https://pypi.douban.com/simple

!pip install matplotlib -i https://pypi.douban.com/simple

!pip install scikit-learn -i https://pypi.douban.com/simple

import torch

import torchkeras

print(torch.__version__)

"""

2.1.0+cpu

"""

print(torchkeras.__version__)

"""

3.9.4

"""

1. Prepare the Data

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

df_train_raw = pd.read_csv('dataset/titanic/train.csv')

df_train_raw, df_test_raw = train_test_split(df_train_raw, test_size=0.2, random_state=42)

df_train_raw.head()

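Field descriptions:

- Survived: 0 means the passenger died, 1 means the passenger survived [label y]

- Pclass: ticket class held by the passenger, with three values (1, 2, 3) [one-hot encode]

- Name: passenger name [drop]

- Sex: passenger sex [one-hot encode into 0/1 indicator columns (female, male)]

- Age: passenger age (has missing values) [numeric feature; add "Age is missing" as an auxiliary feature]

- SibSp: number of siblings/spouses aboard (integer) [numeric feature]

- Parch: number of parents/children aboard (integer) [numeric feature]

- Ticket: ticket number (string) [drop]

- Fare: ticket price (float, ranging roughly from 0 to 500) [numeric feature]

- Cabin: passenger's cabin (has missing values) [add "Cabin is missing" as an auxiliary feature]

- Embarked: port of embarkation: S, C or Q (has missing values) [one-hot encode into four dimensions: S, C, Q, nan]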
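To make the encoding rules above concrete, here is a minimal sketch on a hypothetical five-row toy frame. The values are made up and are not taken from the Titanic data. It shows how `pd.get_dummies(..., dummy_na=True)` turns `Embarked` into the four columns C, Q, S and nan. It also shows how a missing-value flag like `Age_null` is derived next to the zero-filled `Age`.

```python
import numpy as np
import pandas as pd

# Hypothetical toy rows containing only the columns needed for the demo
toy = pd.DataFrame({
    "Embarked": ["S", "C", np.nan, "Q", "S"],
    "Age": [22.0, np.nan, 35.0, np.nan, 4.0],
})

# One-hot encode Embarked; dummy_na=True adds an extra column for missing ports
emb = pd.get_dummies(toy["Embarked"], dummy_na=True, dtype="int32")
emb.columns = ["Embarked_" + str(x) for x in emb.columns]

# Numeric Age with missing values filled by 0, plus a 0/1 "was missing" flag
age = toy["Age"].fillna(0)
age_null = toy["Age"].isna().astype("int32")

print(list(emb.columns))
print(emb.values.tolist())
print(age.tolist(), age_null.tolist())
```

The NaN column's label becomes the string `'nan'` after `str(x)`. That is why the preprocessing step produces a column named `Embarked_nan`.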

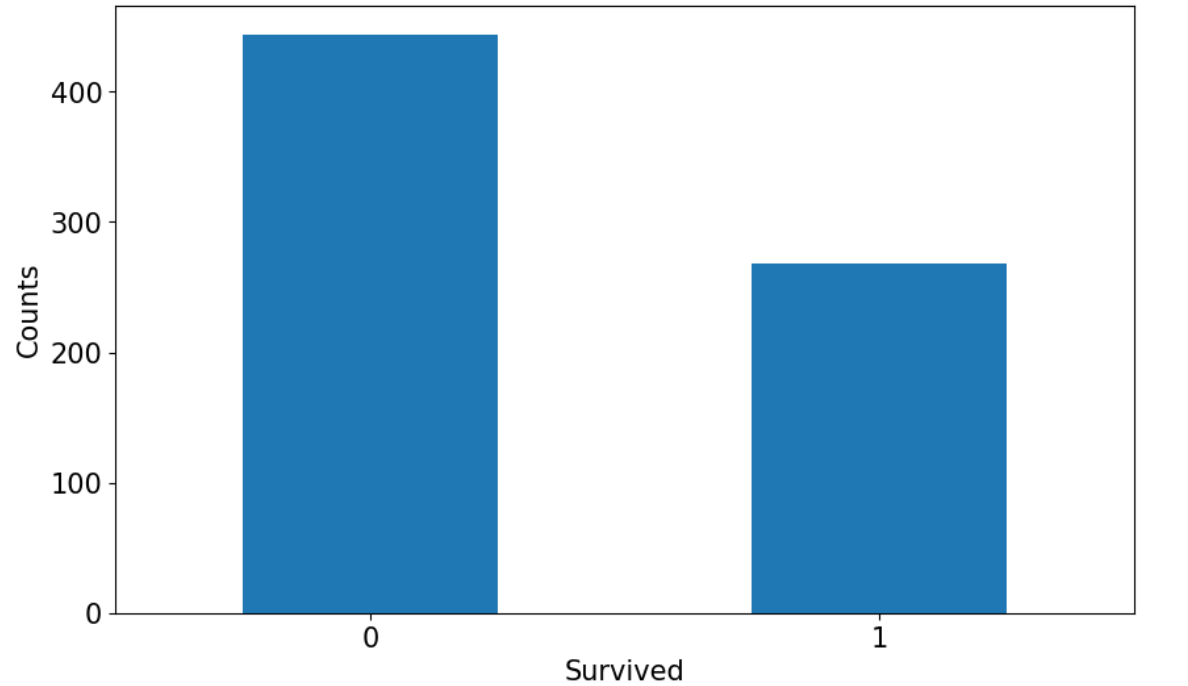

%matplotlib inline
%config InlineBackend.figure_format = 'png'

ax = df_train_raw['Survived'].value_counts().plot(kind='bar',
                                                  figsize=(12, 8), fontsize=15, rot=0)
ax.set_ylabel('Counts', fontsize=15)
ax.set_xlabel('Survived', fontsize=15)
plt.show()

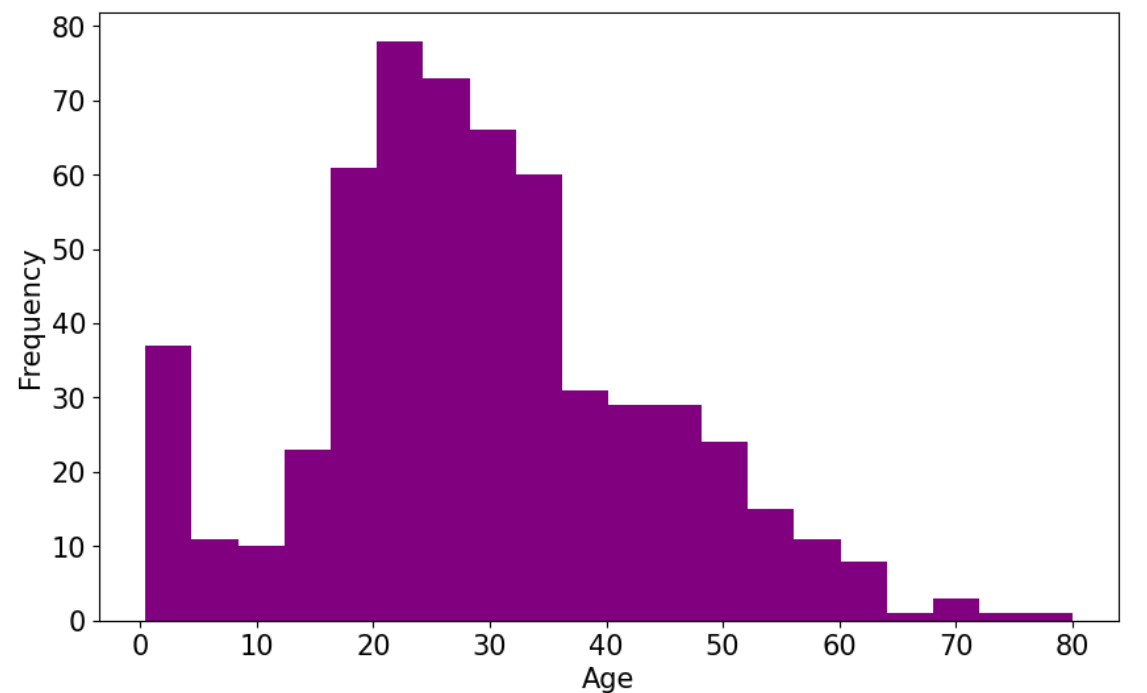

%matplotlib inline
%config InlineBackend.figure_format = 'png'

ax = df_train_raw['Age'].plot(kind='hist', bins=20, color='purple',
                              figsize=(10, 6), fontsize=15)
ax.set_ylabel('Frequency', fontsize=15)
ax.set_xlabel('Age', fontsize=15)
plt.show()

%matplotlib inline
%config InlineBackend.figure_format = 'png'

ax = df_train_raw.query('Survived == 0')['Age'].plot(kind='density',
                                                      figsize=(10, 6), fontsize=15)
df_train_raw.query('Survived == 1')['Age'].plot(kind='density',
                                                figsize=(10, 6), fontsize=15)
ax.legend(['Survived==0', 'Survived==1'], fontsize=12)
ax.set_ylabel('Density', fontsize=15)
ax.set_xlabel('Age', fontsize=15)
plt.show()

def preprocessing(dfdata):

    dfresult = pd.DataFrame()

    # Pclass
    dfPclass = pd.get_dummies(dfdata['Pclass'], dtype='int32')
    dfPclass.columns = ['Pclass_' + str(x) for x in dfPclass.columns]
    dfresult = pd.concat([dfresult, dfPclass], axis=1)

    # Sex
    dfSex = pd.get_dummies(dfdata['Sex'], dtype='int32')
    dfresult = pd.concat([dfresult, dfSex], axis=1)

    # Age
    dfresult['Age'] = dfdata['Age'].fillna(0)
    dfresult['Age_null'] = pd.isna(dfdata['Age']).astype('int32')

    # SibSp, Parch, Fare
    dfresult['SibSp'] = dfdata['SibSp']
    dfresult['Parch'] = dfdata['Parch']
    dfresult['Fare'] = dfdata['Fare']

    # Cabin
    dfresult['Cabin_null'] = pd.isna(dfdata['Cabin']).astype('int32')

    # Embarked
    dfEmbarked = pd.get_dummies(dfdata['Embarked'], dummy_na=True, dtype='int32')
    dfEmbarked.columns = ['Embarked_' + str(x) for x in dfEmbarked.columns]
    dfresult = pd.concat([dfresult, dfEmbarked], axis=1)

    return dfresult
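`pd.get_dummies` only creates columns for categories that actually occur. So the train and test matrices can end up with different widths if a split happens to miss a category, for example if no test row has `Embarked == 'Q'`. With `random_state=42` both splits here produce 15 columns. Still, one defensive option is to reindex the test features onto the training columns. This is an assumption on my part and not part of the original workflow. The helper name `align_columns` is hypothetical.

```python
import pandas as pd


def align_columns(df_train_feat: pd.DataFrame, df_test_feat: pd.DataFrame) -> pd.DataFrame:
    """Hypothetical helper: give the test features exactly the training columns.

    Columns missing from the test set are added and filled with 0;
    columns that only appear in the test set are dropped.
    """
    return df_test_feat.reindex(columns=df_train_feat.columns, fill_value=0)


# Toy example: the test split never saw Embarked == 'Q'
train_feat = pd.DataFrame({"Embarked_C": [1, 0], "Embarked_Q": [0, 1], "Embarked_S": [0, 0]})
test_feat = pd.DataFrame({"Embarked_C": [0], "Embarked_S": [1]})

aligned = align_columns(train_feat, test_feat)
print(list(aligned.columns))   # same order as the training columns
print(aligned.values.tolist())
```

In this workflow the helper would be applied as `align_columns(preprocessing(df_train_raw), preprocessing(df_test_raw))` before calling `.values`.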

x_train = preprocessing(df_train_raw).values

y_train = df_train_raw[['Survived']].values

x_test = preprocessing(df_test_raw).values

y_test = df_test_raw[['Survived']].values

print("x_train.shape =", x_train.shape )

print("x_test.shape =", x_test.shape )

print("y_train.shape =", y_train.shape )

print("y_test.shape =", y_test.shape )

"""

x_train.shape = (712, 15)

x_test.shape = (179, 15)

y_train.shape = (712, 1)

y_test.shape = (179, 1)

"""

# Wrap the arrays with TensorDataset and DataLoader to get an iterable data pipeline
dl_train = torch.utils.data.DataLoader(
    torch.utils.data.TensorDataset(torch.tensor(x_train).float(), torch.tensor(y_train).float()),
    shuffle=True, batch_size=8)

dl_val = torch.utils.data.DataLoader(
    torch.utils.data.TensorDataset(torch.tensor(x_test).float(), torch.tensor(y_test).float()),
    shuffle=False, batch_size=8)
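Here is a quick check of how `TensorDataset` and `DataLoader` slice arrays into batches. It uses random stand-in data with the same shapes as `x_train`/`y_train`, not the real Titanic features. With 712 rows and `batch_size=8` there are 712 / 8 = 89 batches. With 179 validation rows the last batch is incomplete.

```python
import numpy as np
import torch
from torch.utils.data import DataLoader, TensorDataset

# Stand-in arrays with the same shapes as x_train / y_train (random values, not Titanic data)
x_demo = np.random.rand(712, 15)
y_demo = np.random.randint(0, 2, size=(712, 1))

dl_demo = DataLoader(TensorDataset(torch.tensor(x_demo).float(),
                                   torch.tensor(y_demo).float()),
                     shuffle=True, batch_size=8)
features, labels = next(iter(dl_demo))
print(len(dl_demo), features.shape, labels.shape)   # 712 / 8 = 89 full batches

# 179 validation rows: 22 full batches of 8 plus a final batch of 179 - 176 = 3
dl_val_demo = DataLoader(TensorDataset(torch.zeros(179, 15), torch.zeros(179, 1)),
                         shuffle=False, batch_size=8)
batch_sizes = [f.shape[0] for f, _ in dl_val_demo]
print(len(dl_val_demo), batch_sizes[-1])
```

Passing `drop_last=True` to `DataLoader` would discard that incomplete final batch.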

# Test the data pipeline
for features, labels in dl_train:
    print(features, labels)
    break

"""
tensor([[ 0.0000, 0.0000, 1.0000, 1.0000, 0.0000, 0.7500, 0.0000,
          2.0000, 1.0000, 19.2583, 1.0000, 1.0000, 0.0000, 0.0000,
          0.0000],
        [ 0.0000, 0.0000, 1.0000, 0.0000, 1.0000, 32.0000, 0.0000,
          0.0000, 0.0000, 7.9250, 1.0000, 0.0000, 0.0000, 1.0000,
          0.0000],
        [ 0.0000, 1.0000, 0.0000, 1.0000, 0.0000, 2.0000, 0.0000,
          1.0000, 1.0000, 26.0000, 1.0000, 0.0000, 0.0000, 1.0000,
          0.0000],
        [ 0.0000, 0.0000, 1.0000, 1.0000, 0.0000, 18.0000, 0.0000,
          0.0000, 0.0000, 6.7500, 1.0000, 0.0000, 1.0000, 0.0000,
          0.0000],
        [ 0.0000, 0.0000, 1.0000, 0.0000, 1.0000, 25.0000, 0.0000,
          0.0000, 0.0000, 7.0500, 1.0000, 0.0000, 0.0000, 1.0000,
          0.0000],
        [ 0.0000, 0.0000, 1.0000, 0.0000, 1.0000, 22.0000, 0.0000,
          0.0000, 0.0000, 7.1250, 1.0000, 0.0000, 0.0000, 1.0000,
          0.0000],
        [ 0.0000, 0.0000, 1.0000, 0.0000, 1.0000, 22.0000, 0.0000,
          0.0000, 0.0000, 7.2292, 1.0000, 1.0000, 0.0000, 0.0000,
          0.0000],
        [ 1.0000, 0.0000, 0.0000, 1.0000, 0.0000, 0.0000, 1.0000,
          1.0000, 0.0000, 133.6500, 1.0000, 0.0000, 0.0000, 1.0000,
          0.0000]]) tensor([[1.],
        [0.],
        [1.],
        [0.],
        [0.],
        [0.],
        [0.],
        [1.]])
"""

2. Define the Model

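In PyTorch there are usually three ways to build a model. The first stacks layers in order with nn.Sequential. The second subclasses the nn.Module base class to build a custom model. The third subclasses nn.Module and packages parts of the model in model containers (such as nn.Sequential, nn.ModuleList and nn.ModuleDict). Here we use the simplest option and build the model layer by layer with nn.Sequential.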
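For comparison, here is the second approach (subclassing `nn.Module`) applied to the same 15 → 20 → 15 → 1 architecture. This is a sketch; the class name `Net` is hypothetical. The final layer returns raw logits, matching the `nn.Sequential` version.

```python
import torch
from torch import nn


class Net(nn.Module):
    """Hypothetical subclass-style equivalent of create_net(): 15 -> 20 -> 15 -> 1."""

    def __init__(self):
        super().__init__()
        self.linear1 = nn.Linear(15, 20)
        self.linear2 = nn.Linear(20, 15)
        self.linear3 = nn.Linear(15, 1)

    def forward(self, x):
        x = torch.relu(self.linear1(x))
        x = torch.relu(self.linear2(x))
        # Return raw logits; the sigmoid is left to the loss / prediction step
        return self.linear3(x)


net_demo = Net()
n_params = sum(p.numel() for p in net_demo.parameters())  # (15*20+20) + (20*15+15) + (15*1+1)
out = net_demo(torch.randn(8, 15))
print(n_params, out.shape)
```

The attribute names match the names passed to `add_module`, so both versions have the same `state_dict` keys. Saved parameters could therefore be loaded into either one.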

def create_net():
    net = torch.nn.Sequential()
    net.add_module('linear1', torch.nn.Linear(15, 20))
    net.add_module('relu1', torch.nn.ReLU())
    net.add_module('linear2', torch.nn.Linear(20, 15))
    net.add_module('relu2', torch.nn.ReLU())
    net.add_module('linear3', torch.nn.Linear(15, 1))
    return net

net = create_net()
print(net)

"""
Sequential(
  (linear1): Linear(in_features=15, out_features=20, bias=True)
  (relu1): ReLU()
  (linear2): Linear(in_features=20, out_features=15, bias=True)
  (relu2): ReLU()
  (linear3): Linear(in_features=15, out_features=1, bias=True)
)
"""

3. Train the Model

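PyTorch usually requires users to write their own training loop, and the coding style varies from person to person. There are three typical styles: script-style, function-style and class-style training loops. Here we present a fairly general, Keras-like script-style training loop. It is essentially the same as the core code of the torchkeras library. torchkeras details: https://github.com/lyhue1991/torchkeras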
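For contrast with the script-style loop that follows, here is a minimal sketch of the function-style variant, with one epoch of training and one evaluation pass each wrapped in a function. It is not the torchkeras implementation. The names `train_one_epoch` and `evaluate` are hypothetical, and the data are synthetic.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset


def train_one_epoch(model, dataloader, loss_fn, optimizer):
    """One pass over the data in training mode; returns the mean batch loss."""
    model.train()
    total, steps = 0.0, 0
    for features, labels in dataloader:
        loss = loss_fn(model(features), labels)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        total += loss.item()
        steps += 1
    return total / steps


@torch.no_grad()
def evaluate(model, dataloader, loss_fn):
    """Mean batch loss and accuracy; a logit > 0 corresponds to probability > 0.5."""
    model.eval()
    total, steps, correct, count = 0.0, 0, 0, 0
    for features, labels in dataloader:
        logits = model(features)
        total += loss_fn(logits, labels).item()
        steps += 1
        correct += ((logits > 0).float() == labels).sum().item()
        count += labels.numel()
    return total / steps, correct / count


# Synthetic toy data (not the Titanic features): label = 1 when the first feature is positive
torch.manual_seed(0)
x = torch.randn(256, 15)
y = (x[:, :1] > 0).float()
dl = DataLoader(TensorDataset(x, y), batch_size=32, shuffle=True)

model = nn.Sequential(nn.Linear(15, 20), nn.ReLU(), nn.Linear(20, 1))
loss_fn = nn.BCEWithLogitsLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)

initial_loss, _ = evaluate(model, dl, loss_fn)
history = []
for epoch in range(1, 11):
    train_loss = train_one_epoch(model, dl, loss_fn, optimizer)
    val_loss, val_acc = evaluate(model, dl, loss_fn)  # same toy data, for brevity
    history.append((epoch, train_loss, val_loss, val_acc))
    print(f"epoch {epoch}: train_loss={train_loss:.4f} val_loss={val_loss:.4f} val_acc={val_acc:.3f}")
```

Keeping each phase in a function makes it easy to reuse with a different model or data loader. The script style below instead keeps everything inline, which makes it easier to insert progress bars and logging.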

import os
import sys
import time
import datetime
from copy import deepcopy

import numpy as np
import pandas as pd
import torch
from tqdm import tqdm
from torchkeras.metrics import Accuracy


def printlog(info):
    nowtime = datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S')
    print('\n' + '=============' * 8 + "%s" % nowtime)
    print(str(info) + '\n')


loss_fn = torch.nn.BCEWithLogitsLoss()
optimizer = torch.optim.Adam(net.parameters(), lr=0.01)
metric_dict = {"acc": Accuracy()}

epochs = 20
ckpt_path = 'checkpoint.pt'

# early-stopping settings
monitor = 'val_acc'
patience = 5
mode = 'max'

history = {}

for epoch in range(1, epochs + 1):
    printlog('Epoch {} / {}'.format(epoch, epochs))

    # 1. train
    net.train()
    total_loss, step = 0, 0
    loop = tqdm(enumerate(dl_train), total=len(dl_train), file=sys.stdout)
    train_metrics_dict = deepcopy(metric_dict)

    for i, batch in loop:
        features, labels = batch

        # forward
        preds = net(features)
        loss = loss_fn(preds, labels)

        # backward
        loss.backward()
        optimizer.step()
        optimizer.zero_grad()

        # metrics
        step_metrics = {'train_' + name: metric_fn(preds, labels).item()
                        for name, metric_fn in train_metrics_dict.items()}
        step_log = dict({'train_loss': loss.item()}, **step_metrics)

        total_loss += loss.item()
        step += 1

        if i != len(dl_train) - 1:
            loop.set_postfix(**step_log)
        else:
            # last batch: summarize the whole epoch
            epoch_loss = total_loss / step
            epoch_metrics = {'train_' + name: metric_fn.compute().item()
                             for name, metric_fn in train_metrics_dict.items()}
            epoch_log = dict({'train_loss': epoch_loss}, **epoch_metrics)
            loop.set_postfix(**epoch_log)

            for name, metric_fn in train_metrics_dict.items():
                metric_fn.reset()

    for name, metric in epoch_log.items():
        history[name] = history.get(name, []) + [metric]

    # 2. validate
    net.eval()  # eval mode: disables Dropout and makes BatchNorm use running statistics
    total_loss, step = 0, 0
    loop = tqdm(enumerate(dl_val), total=len(dl_val), file=sys.stdout)
    val_metrics_dict = deepcopy(metric_dict)

    with torch.no_grad():
        for i, batch in loop:
            features, labels = batch

            # forward
            preds = net(features)
            loss = loss_fn(preds, labels)

            # metrics
            step_metrics = {'val_' + name: metric_fn(preds, labels).item()
                            for name, metric_fn in val_metrics_dict.items()}
            step_log = dict({'val_loss': loss.item()}, **step_metrics)

            total_loss += loss.item()
            step += 1

            if i != len(dl_val) - 1:
                loop.set_postfix(**step_log)
            else:
                epoch_loss = total_loss / step
                epoch_metrics = {'val_' + name: metric_fn.compute().item()
                                 for name, metric_fn in val_metrics_dict.items()}
                epoch_log = dict({'val_loss': epoch_loss}, **epoch_metrics)
                loop.set_postfix(**epoch_log)

                for name, metric_fn in val_metrics_dict.items():
                    metric_fn.reset()

    epoch_log['epoch'] = epoch
    for name, metric in epoch_log.items():
        history[name] = history.get(name, []) + [metric]

    # 3. early stopping: save the best checkpoint, stop after `patience` epochs without improvement
    arr_scores = history[monitor]
    best_score_idx = np.argmax(arr_scores) if mode == 'max' else np.argmin(arr_scores)
    if best_score_idx == len(arr_scores) - 1:
        torch.save(net.state_dict(), ckpt_path)
        print('<<<<<< reach best {0}: {1} >>>>>>'.format(monitor, arr_scores[best_score_idx]),
              file=sys.stderr)
    if len(arr_scores) - best_score_idx > patience:
        print('<<<<<< {} without improvement in {} epoch, early stopping >>>>>>'.format(monitor, patience),
              file=sys.stderr)
        break

# Restore the weights of the best epoch
net.load_state_dict(torch.load(ckpt_path))
dfhistory = pd.DataFrame(history)

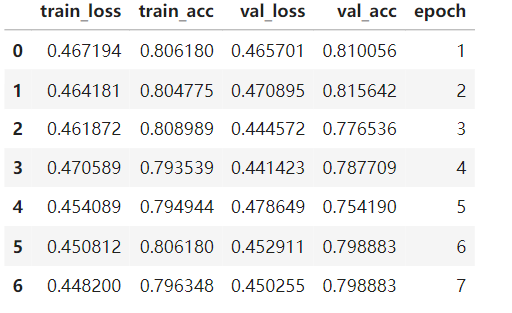
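The early-stopping block above saves a checkpoint whenever the latest epoch is the best so far. It stops once the best epoch is more than `patience` epochs old. Below, the same rule is pulled out into a pure function so it can be checked on a made-up score history. The function name `early_stop_status` is hypothetical.

```python
import numpy as np


def early_stop_status(scores, patience, mode="max"):
    """Same rule as the training loop: returns (is_new_best, should_stop)."""
    best_idx = int(np.argmax(scores)) if mode == "max" else int(np.argmin(scores))
    is_new_best = best_idx == len(scores) - 1        # the loop saves a checkpoint here
    should_stop = len(scores) - best_idx > patience  # best epoch is more than `patience` epochs old
    return is_new_best, should_stop


# Made-up val_acc values, one per epoch
val_acc = [0.70, 0.75, 0.80, 0.78, 0.79, 0.77, 0.80, 0.76, 0.74, 0.81]
saved_epochs, stopped_at = [], None
for epoch in range(1, len(val_acc) + 1):
    new_best, stop = early_stop_status(val_acc[:epoch], patience=5)
    if new_best:
        saved_epochs.append(epoch)
    if stop:
        stopped_at = epoch
        break
print(saved_epochs, stopped_at)
```

Because `np.argmax` returns the first maximum, the tie at epoch 7 does not count as an improvement. Training therefore stops at epoch 8, and the late 0.81 is never reached.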

4. Evaluate the Model

dfhistory

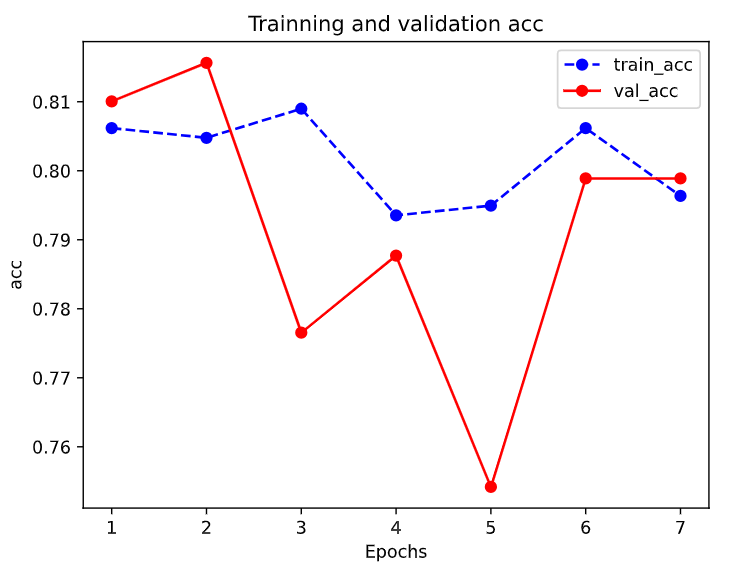

%matplotlib inline
%config InlineBackend.figure_format = 'svg'

import matplotlib.pyplot as plt

def plot_metric(dfhistory, metric):
    train_metrics = dfhistory['train_' + metric]
    val_metrics = dfhistory['val_' + metric]
    epochs = range(1, len(train_metrics) + 1)
    plt.plot(epochs, train_metrics, 'bo--')
    plt.plot(epochs, val_metrics, 'ro-')
    plt.title('Training and validation ' + metric)
    plt.xlabel('Epochs')
    plt.ylabel(metric)
    plt.legend(['train_' + metric, 'val_' + metric])
    plt.show()

plot_metric(dfhistory, 'loss')

plot_metric(dfhistory, 'acc')
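`dfhistory` has one row per epoch, with columns such as `val_acc` and `epoch`, so the row of the best epoch can be pulled out with `idxmax`. Here is a minimal sketch on a made-up history frame. The numbers are illustrative and do not come from the run shown above.

```python
import pandas as pd

# Made-up history with the same column names the training loop writes
dfhistory_demo = pd.DataFrame({
    "train_loss": [0.62, 0.50, 0.45, 0.44],
    "train_acc": [0.66, 0.77, 0.80, 0.81],
    "val_loss": [0.55, 0.47, 0.44, 0.46],
    "val_acc": [0.72, 0.79, 0.82, 0.80],
    "epoch": [1, 2, 3, 4],
})

best_row = dfhistory_demo.loc[dfhistory_demo["val_acc"].idxmax()]
print(int(best_row["epoch"]), best_row["val_acc"])
```

This is the epoch whose weights the loop left in `checkpoint.pt`. The checkpoint is only overwritten when `val_acc` reaches a new maximum.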

5. Use the Model

# Predict probabilities
y_pred_probs = torch.sigmoid(net(torch.tensor(x_test[:10]).float())).data
y_pred_probs

"""
tensor([[0.1531],
        [0.2011],
        [0.1568],
        [0.9407],
        [0.7285],
        [0.9579],
        [0.7373],
        [0.1192],
        [0.7950],
        [0.9357]])
"""

# Predict classes
y_pred = torch.where(y_pred_probs > 0.5, torch.ones_like(y_pred_probs), torch.zeros_like(y_pred_probs))
y_pred

"""
tensor([[0.],
        [0.],
        [0.],
        [1.],
        [1.],
        [1.],
        [1.],
        [0.],
        [1.],
        [1.]])
"""

6. Save the Model

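- PyTorch offers two ways to save a model, both built on pickle serialization.

- The first saves only the model parameters (the state_dict).

- The second saves the complete model object.

- The first is recommended. The second can break in various ways when the model is moved to another device or the code's directory layout changes.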
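Here is a self-contained sketch of the recommended parameter-only round trip. It writes to an in-memory buffer instead of `./data/`. It loads with `map_location='cpu'`, which remaps tensors saved on a GPU onto the CPU when switching devices. The small model built by `make_model` (a hypothetical helper) is a stand-in for `create_net()`.

```python
import io

import torch
from torch import nn


def make_model():
    # Stand-in for create_net(); the architecture must be rebuilt before loading
    return nn.Sequential(nn.Linear(15, 20), nn.ReLU(), nn.Linear(20, 1))


torch.manual_seed(0)
model = make_model()

buffer = io.BytesIO()                      # in-memory stand-in for a .pt file
torch.save(model.state_dict(), buffer)     # parameters only
buffer.seek(0)

clone = make_model()
# map_location='cpu' remaps tensors saved on a GPU; weights_only=True
# restricts unpickling to tensors and basic containers
state = torch.load(buffer, map_location="cpu", weights_only=True)
clone.load_state_dict(state)

x = torch.randn(4, 15)
with torch.no_grad():
    same_output = torch.equal(model(x), clone(x))
print(same_output)
```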

print(net.state_dict().keys())

"""

odict_keys(['linear1.weight', 'linear1.bias', 'linear2.weight', 'linear2.bias', 'linear3.weight', 'linear3.bias'])

"""

# Save model parameters
os.makedirs('./data', exist_ok=True)  # torch.save does not create missing directories
torch.save(net.state_dict(), './data/net_parameters.pt')

net_clone = create_net()
net_clone.load_state_dict(torch.load('./data/net_parameters.pt'))
torch.sigmoid(net_clone(torch.tensor(x_test[:10]).float())).data

"""
tensor([[0.1531],
        [0.2011],
        [0.1568],
        [0.9407],
        [0.7285],
        [0.9579],
        [0.7373],
        [0.1192],
        [0.7950],
        [0.9357]])
"""

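# Save the complete model
torch.save(net, './data/net_model.pt')

# Unpickling a full model needs weights_only=False on PyTorch 2.6+, where the default became True
# (only load files you trust)
net_loaded = torch.load('./data/net_model.pt', weights_only=False)
torch.sigmoid(net_loaded(torch.tensor(x_test[:10]).float())).data

"""
tensor([[0.1531],
        [0.2011],
        [0.1568],
        [0.9407],
        [0.7285],
        [0.9579],
        [0.7373],
        [0.1192],
        [0.7950],
        [0.9357]])
"""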

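Author: lotuslaw

Source: https://www.cnblogs.com/lotuslaw/p/17873287.html

License: This work is licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International license (CC BY-NC-SA 4.0).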
