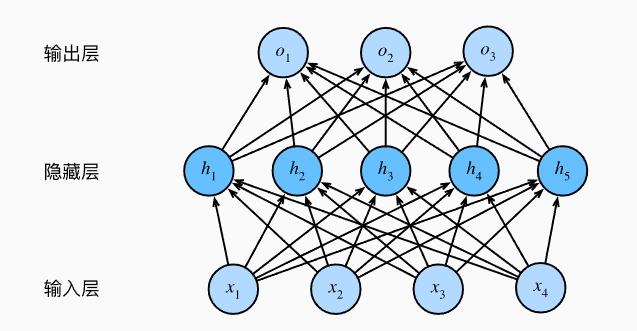

4.多层感知机

多层感知机

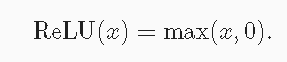

激活函数

import tensorflow as tf

import matplotlib.pyplot as plt

from matplotlib_inline import backend_inline

from IPython import display

import math

import numpy as np

import pandas as pd

def use_svg_display():

backend_inline.set_matplotlib_formats('png')

def set_figsize(figsize=(3.5, 2.5)):

use_svg_display()

plt.rcParams['figure.figsize'] = figsize

def set_axes(axes, xlabel, ylabel, xlim, ylim, xscale, yscale, legend):

"""设置matplotlib的轴"""

axes.set_xlabel(xlabel)

axes.set_ylabel(ylabel)

axes.set_xscale(xscale)

axes.set_yscale(yscale)

axes.set_xlim(xlim)

axes.set_ylim(ylim)

if legend:

axes.legend(legend)

axes.grid()

def plot(X, Y=None, xlabel=None, ylabel=None, legend=None, xlim=None,

ylim=None, xscale='linear', yscale='linear',

fmts=('-', 'm--', 'g-.', 'r:'), figsize=(3.5, 2.5), axes=None):

"""绘制数据点"""

if legend is None:

legend = []

set_figsize(figsize)

axes = axes if axes else plt.gca()

# 如果X有一个轴,输出True

def has_one_axis(X):

return (hasattr(X, "ndim") and X.ndim == 1 or isinstance(X, list)

and not hasattr(X[0], "__len__"))

if has_one_axis(X):

X = [X]

if Y is None:

X, Y = [[]] * len(X), X

elif has_one_axis(Y):

Y = [Y]

if len(X) != len(Y):

X = X * len(Y)

axes.cla()

for x, y, fmt in zip(X, Y, fmts):

if len(x):

axes.plot(x, y, fmt)

else:

axes.plot(y, fmt)

set_axes(axes, xlabel, ylabel, xlim, ylim, xscale, yscale, legend)

x = tf.Variable(tf.range(-8.0, 8.0, 0.1), dtype=tf.float32)

y = tf.nn.relu(x)

plot(x.numpy(), y.numpy(), 'x', 'relu(x)', figsize=(5, 2.5))

plt.show()

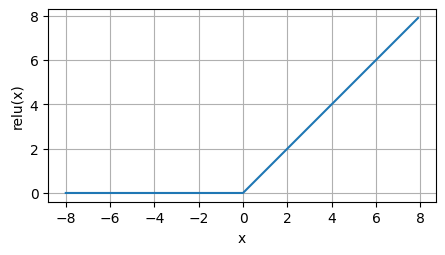

with tf.GradientTape() as t:

y = tf.nn.relu(x)

plot(x.numpy(), t.gradient(y, x).numpy(), 'x', 'grad of relu', figsize=(5, 2.5))

plt.show()

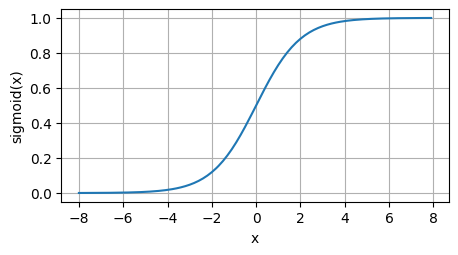

y = tf.nn.sigmoid(x)

plot(x.numpy(), y.numpy(), 'x', 'sigmoid(x)', figsize=(5, 2.5))

plt.show()

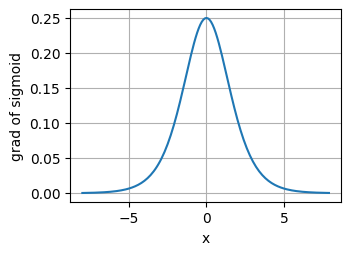

with tf.GradientTape() as t:

y = tf.nn.sigmoid(x)

plot(x.numpy(), t.gradient(y, x).numpy(), 'x', 'grad of sigmoid')

plt.show()

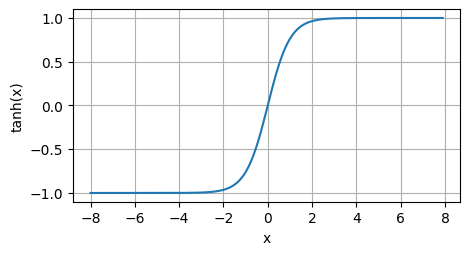

y = tf.nn.tanh(x)

plot(x.numpy(), y.numpy(), 'x', 'tanh(x)', figsize=(5, 2.5))

plt.show()

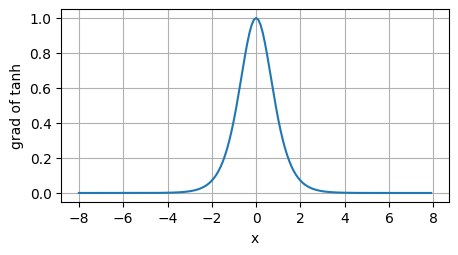

with tf.GradientTape() as t:

y = tf.nn.tanh(x)

plot(x.numpy(), t.gradient(y, x), 'x', 'grad of tanh', figsize=(5, 2.5))

plt.show()

多层感知机-从零开始实现#

# 加载数据集

def load_array(data_arrays, batch_size, is_train=True):

dataset = tf.data.Dataset.from_tensor_slices(data_arrays)

if is_train:

dataset = dataset.shuffle(buffer_size=1000)

dataset = dataset.batch(batch_size)

return dataset

def load_data_fashion_mnist(batch_size, resize=None):

mnist_train, mnist_test = tf.keras.datasets.fashion_mnist.load_data()

# 将所有数字除以255, 使所有像素值介于0-1之间,在最后添加一个批处理维度

# 并将所有标签转换为int32

process = lambda X, y: (tf.expand_dims(X, axis=3) / 255, tf.cast(y, dtype='int32'))

resize_fn = lambda X, y: (tf.image.resize_with_pad(X, resize, resize) if resize else X, y)

return (

tf.data.Dataset.from_tensor_slices(process(*mnist_train)).batch(batch_size).shuffle(len(mnist_train[0])).map(resize_fn),

tf.data.Dataset.from_tensor_slices(process(*mnist_test)).batch(batch_size).map(resize_fn)

)

batch_size = 256

train_iter, test_iter = load_data_fashion_mnist(batch_size)

# 初始化模型参数

num_inputs, num_outputs, num_hiddens = 784, 10, 256

W1 = tf.Variable(tf.random.normal(shape=(num_inputs, num_hiddens), mean=0, stddev=0.01))

b1 = tf.Variable(tf.zeros(num_hiddens))

W2 = tf.Variable(tf.random.normal(shape=(num_hiddens, num_outputs), mean=0, stddev=0.01))

b2 = tf.Variable(tf.zeros(num_outputs))

params = [W1, b1, W2, b2]

# 激活函数

def relu(x):

return tf.math.maximum(x, 0)

# 模型

def net(X):

X = tf.reshape(X, (-1, num_inputs))

H = relu(tf.matmul(X, W1) + b1)

return tf.matmul(H, W2) + b2

# 损失函数

def loss(y_hat, y):

# 如果输入的是一个 logit 值(值域范围 [-∞, +∞] ),则应该设置 from_logits=True

# 如果输入的是一个 logit 值(值域范围 [-∞, +∞] ),则应该设置 from_logits=True

return tf.losses.sparse_categorical_crossentropy(y, y_hat, from_logits=True)

# 训练

def sgd(params, grads, lr, batch_size):

for param, grad in zip(params, grads):

param.assign_sub(lr * grad / batch_size)

def accuracy(y_hat, y):

if len(y_hat.shape) > 1 and y_hat.shape[1] > 1:

y_hat = tf.argmax(y_hat, axis=1)

cmp = tf.cast(y_hat, y.dtype) == y

return float(tf.reduce_sum(tf.cast(cmp, y.dtype)))

class Updater:

def __init__(self, params, lr):

self.params = params

self.lr = lr

def __call__(self, batch_size, grads):

sgd(self.params, grads, self.lr, batch_size)

class Animator:

def __init__(self, xlabel=None, ylabel=None, legend=None, xlim=None, ylim=None, xscale='linear', yscale='linear',

fmts=('-', 'm--', 'g-.', 'r:'), nrows=1, ncols=1, figsize=(3.5, 2.5)):

# 增量地绘制多条线

if legend is None:

legend = []

use_svg_display()

self.fig, self.axes = plt.subplots(nrows, ncols, figsize=figsize)

if nrows * ncols == 1:

self.axes = [self.axes, ]

# 使用lambda函数捕获参数

self.config_axes = lambda: set_axes(self.axes[0], xlabel, ylabel, xlim, ylim, xscale, yscale, legend)

self.X, self.Y, self.fmts = None, None, fmts

def add(self, x, y):

# 向图中添加多个数据点

if not hasattr(y, "__len__"):

y = [y]

n = len(y)

if not hasattr(x, "__len__"):

x = [x] * n

if not self.X:

self.X = [[] for _ in range(n)]

if not self.Y:

self.Y = [[] for _ in range(n)]

for i, (a, b) in enumerate(zip(x, y)):

if a is not None and b is not None:

self.X[i].append(a)

self.Y[i].append(b)

self.axes[0].cla()

for x, y, fmt in zip(self.X, self.Y, self.fmts):

self.axes[0].plot(x, y, fmt)

self.config_axes()

display.display(self.fig)

display.clear_output(wait=True)

def train_epoch_ch3(net, train_iter, loss, updater):

metric = Accumulator(3)

for X, y in train_iter:

with tf.GradientTape() as tape:

y_hat = net(X)

if isinstance(loss, tf.keras.losses.Loss):

l = loss(y, y_hat)

else:

l = loss(y_hat, y)

if isinstance(updater, tf.keras.optimizers.Optimizer):

params = net.trainable_variables

grads = tape.gradient(l, params)

updater.apply_gradients(zip(grads, params))

else:

updater(X.shape[0], tape.gradient(l, updater.params))

# keras的loss默认返回一个批量的平均损失

l_sum = l * float(tf.size(y)) if isinstance(loss, tf.keras.losses.Loss) else tf.reduce_sum(l)

metric.add(l_sum, accuracy(y_hat, y), tf.size(y))

return metric[0] / metric[2], metric[1] / metric[2]

class Accumulator:

def __init__(self, n):

self.data = [0.0] * n

def add(self, *args):

self.data = [a + float(b) for a, b in zip(self.data, args)]

def reset(self):

self.data = [0.0] * len(self.data)

def __getitem__(self, idx):

return self.data[idx]

def evaluate_accuracy(net, data_iter):

metric = Accumulator(2)

for X, y in data_iter:

metric.add(accuracy(net(X), y), tf.size(y))

return metric[0] / metric[1]

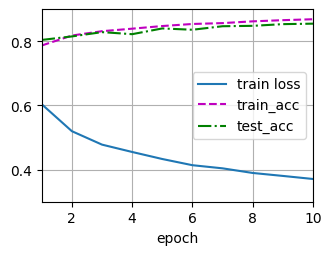

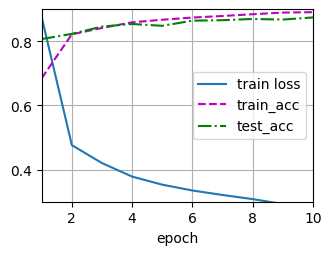

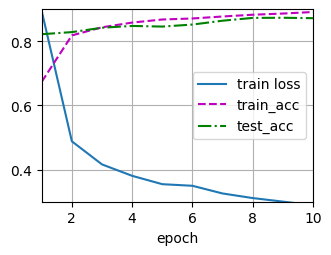

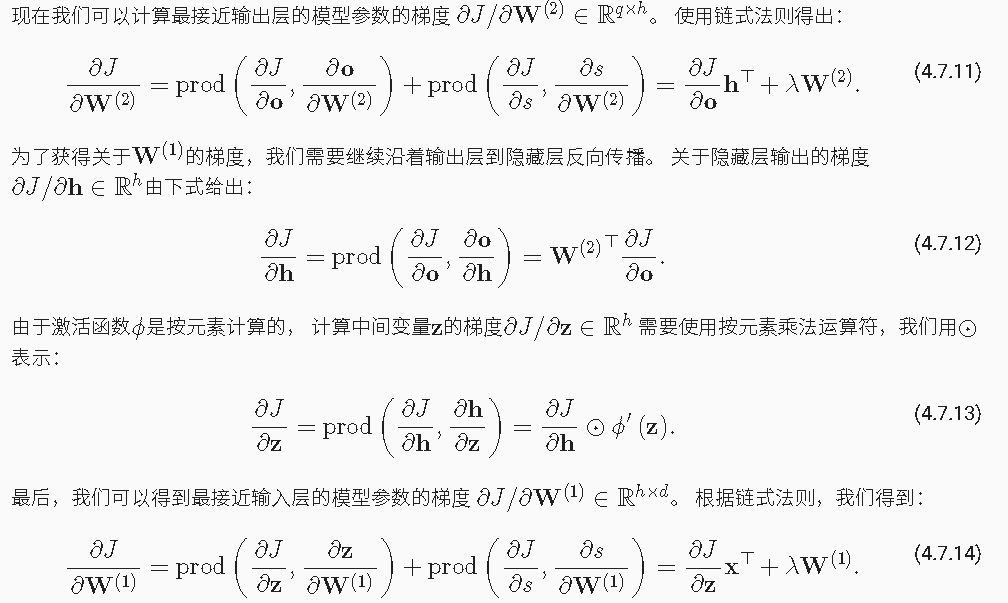

def train_ch3(net, train_iter, test_iter, loss, num_epochs, updater):

animator = Animator(xlabel='epoch', xlim=[1, num_epochs], ylim=[0.3, 0.9], legend=['train loss', 'train_acc', 'test_acc'])

for epoch in range(num_epochs):

train_metrics = train_epoch_ch3(net, train_iter, loss, updater)

test_acc = evaluate_accuracy(net, test_iter)

animator.add(epoch+1, train_metrics + (test_acc,))

train_loss, train_acc = train_metrics

assert train_loss < 0.5, train_loss

assert train_acc <= 1 and train_acc > 0.7, train_acc

assert test_acc <=1 and test_acc > 0.7, test_acc

num_epochs, lr = 10, 0.1

updater = Updater([W1, W2, b1, b2], lr)

train_ch3(net, train_iter, test_iter, loss, num_epochs, updater)

def get_fashion_mnist_labels(labels):

text_labels = ['t-shirt', 'trouser', 'pullover', 'dress', 'coat', 'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot']

return [text_labels[int(i)] for i in labels]

def show_images(imgs, num_rows, num_cols, titles=None, scale=1.5):

figsize = (num_cols * scale, num_rows * scale)

_, axes = plt.subplots(num_rows, num_cols, figsize=figsize)

axes = axes.flatten()

for i, (ax, img) in enumerate(zip(axes, imgs)):

ax.imshow(img.numpy())

ax.axes.get_xaxis().set_visible(False)

ax.axes.get_yaxis().set_visible(False)

if titles:

ax.set_title(titles[i])

plt.show()

return axes

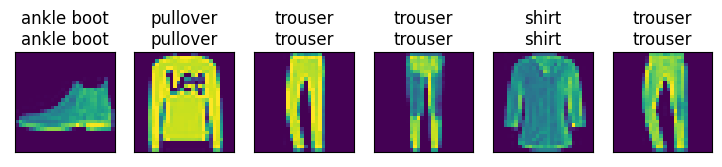

def predict_ch3(net, test_iter, n=6):

for X, y in test_iter:

break

trues = get_fashion_mnist_labels(y)

preds = get_fashion_mnist_labels(tf.argmax(net(X), axis=1))

titles = [true + '\n' + pred for true, pred in zip(trues, preds)]

show_images(tf.reshape(X[0:n], (n, 28, 28)), 1, n, titles=titles[0:n])

predict_ch3(net, test_iter)

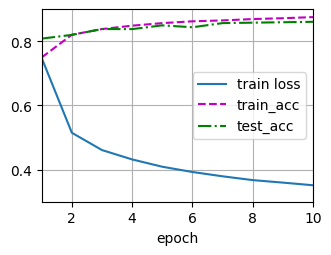

多层感知机的简洁实现

net = tf.keras.models.Sequential([

tf.keras.layers.Flatten(),

tf.keras.layers.Dense(256, activation='relu'),

tf.keras.layers.Dense(10)

])

batch_size, lr, num_epochs = 256, 0.1, 10

loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)

trainer = tf.keras.optimizers.SGD(learning_rate=lr)

train_iter, test_iter = load_data_fashion_mnist(batch_size)

train_ch3(net, train_iter, test_iter, loss, num_epochs, trainer)

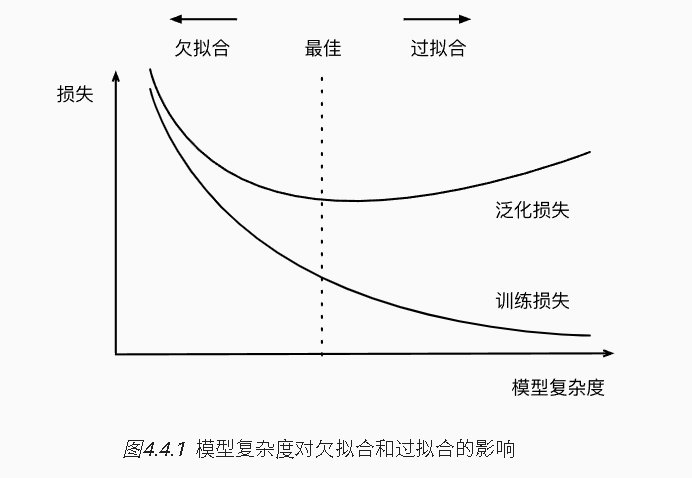

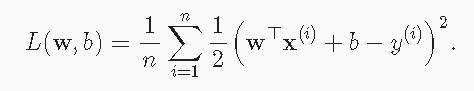

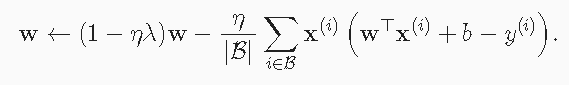

模型选择、欠拟合和过拟合

训练误差(training error)是指, 模型在训练数据集上计算得到的误差。 泛化误差(generalization error)是指, 模型应用在同样从原始样本的分布中抽取的无限多数据样本时,模型误差的期望。

影响因素:模型复杂性、数据集大小。

max_degree = 20 # 多项式的最大阶数

n_train, n_test = 100, 100 # 训练集和测试集的大小

true_w = np.zeros(max_degree) # 分配空间

true_w[:4] = np.array([5, 1.2, -3.4, 5.6])

features = np.random.normal(size=(n_train + n_test, 1))

np.random.shuffle(features)

poly_features = np.power(features, np.arange(max_degree).reshape(1, -1))

for i in range(max_degree):

poly_features[:, i] /= math.gamma(i+1) # gamma(n) = (n-1)!

# labels的维度:(n_train+n_test,)

labels = np.dot(poly_features, true_w)

labels += np.random.normal(scale=0.1, size=labels.shape)

# ndarray转换为tensor

true_w, features, poly_features, labels = [tf.constant(x, dtype=tf.float32) for x in [true_w, features, poly_features, labels]]

features[:2], poly_features[:2, :], labels[:2]

"""

(<tf.Tensor: shape=(2, 1), dtype=float32, numpy=

array([[-0.58063686],

[ 1.7074897 ]], dtype=float32)>,

<tf.Tensor: shape=(2, 20), dtype=float32, numpy=

array([[ 1.0000000e+00, -5.8063686e-01, 1.6856958e-01, -3.2625902e-02,

4.7359504e-03, -5.4997351e-04, 5.3222480e-05, -4.4147046e-06,

3.2041754e-07, -2.0671804e-08, 1.2002811e-09, -6.3357042e-11,

3.0656194e-12, -1.3692397e-13, 5.6787931e-15, -2.1982110e-16,

7.9772645e-18, -2.7246435e-19, 8.7890468e-21, -2.6859182e-22],

[ 1.0000000e+00, 1.7074897e+00, 1.4577607e+00, 8.2970381e-01,

3.5417768e-01, 1.2095095e-01, 3.4420419e-02, 8.3960732e-03,

1.7920262e-03, 3.3998516e-04, 5.8052115e-05, 9.0112180e-06,

1.2822135e-06, 1.6841281e-07, 2.0540224e-08, 2.3381481e-09,

2.4952276e-10, 2.5062209e-11, 2.3774147e-12, 2.1365323e-13]],

dtype=float32)>,

<tf.Tensor: shape=(2,), dtype=float32, numpy=array([3.6991472, 6.503802 ], dtype=float32)>)

"""

# 对模型进行训练和测试

def evaluate_loss(net, data_iter, loss):

# 评估给定数据集上模型的损失

metric = Accumulator(2) # 损失的总和,样本数量

for X, y in data_iter:

l = loss(net(X), y)

metric.add(tf.reduce_sum(l), tf.size(l))

return metric[0] / metric[1]

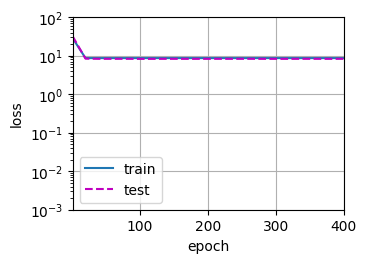

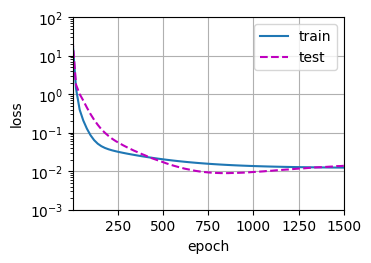

def train(train_features, test_features, train_labels, test_labels, num_epochs=400):

loss = tf.losses.MeanSquaredError()

input_shape = train_features.shape[-1]

# 不设置偏置,因为我们已经在多项式中实现了它

net = tf.keras.Sequential()

net.add(tf.keras.layers.Dense(1, use_bias=False))

batch_size = min(10, train_labels.shape[0])

train_iter = load_array((train_features, train_labels), batch_size)

test_iter = load_array((test_features, test_labels), batch_size, is_train=False)

trainer = tf.keras.optimizers.SGD(learning_rate=0.01)

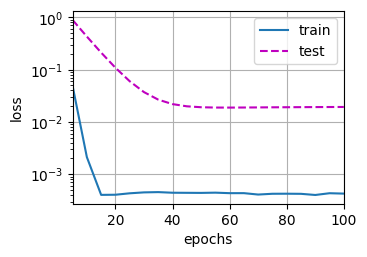

animator = Animator(xlabel='epoch', ylabel='loss', yscale='log', xlim=[1, num_epochs], ylim=[1e-3,1e2], legend=['train', 'test'])

for epoch in range(num_epochs):

train_epoch_ch3(net, train_iter, loss, trainer)

if epoch == 0 or (epoch+1) % 20 == 0:

animator.add(epoch+1, (evaluate_loss(net, train_iter, loss), evaluate_loss(net, test_iter, loss)))

print('weight:', net.get_weights()[0].T)

# 从多项式特征中选择前4个维度

train(poly_features[:n_train, :4], poly_features[n_train:, :4], labels[:n_train], labels[n_train:])

"""

weight: [[ 5.0081244 1.1937299 -3.4142315 5.6134424]]

"""

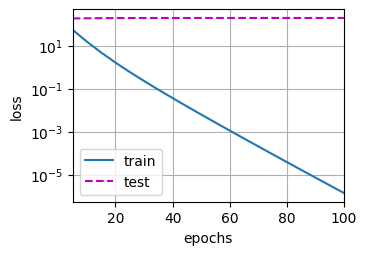

# 从多项式特征中选择前2个维度,即1和x

train(poly_features[:n_train, :2], poly_features[n_train:, :2],

labels[:n_train], labels[n_train:])

"""

weight: [[3.251812 3.4502485]]

"""

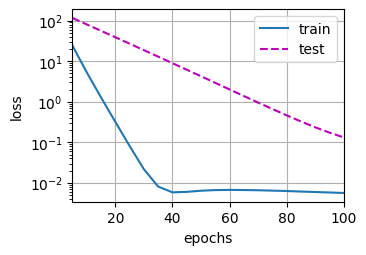

# 从多项式特征中选取所有维度

train(poly_features[:n_train, :], poly_features[n_train:, :],

labels[:n_train], labels[n_train:], num_epochs=1500)

"""

weight: [[ 5.0046334 1.242833 -3.403969 5.3268485 0.03495206 0.85826844

-0.13661306 0.34501278 0.29974663 0.02613247 0.45817637 0.1071128

0.09115538 0.43958995 0.1796739 -0.00679749 0.2960214 0.05597335

0.14703643 0.11690664]]

"""

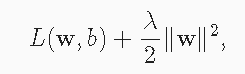

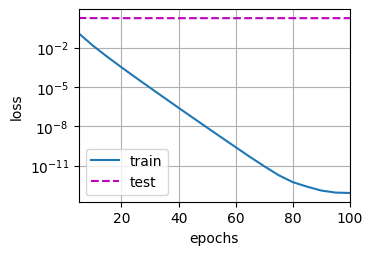

权重衰减

在训练参数化机器学习模型时, 权重衰减(weight decay)是最广泛使用的正则化的技术之一, 它通常也被称为L2正则化。

def synthetic_data(w, b, num_examples):

X = tf.zeros((num_examples, w.shape[0]))

X += tf.random.normal(shape=X.shape)

y = tf.matmul(X, tf.reshape(w, (-1, 1))) + b

y += tf.random.normal(shape=y.shape, stddev=0.01)

y = tf.reshape(y, (-1, 1))

return X, y

n_train, n_test, num_inputs, batch_size = 20, 100, 200, 5

true_w, true_b = tf.ones((num_inputs, 1)) * 0.01, 0.05

train_data = synthetic_data(true_w, true_b, n_train)

train_iter = load_array(train_data, batch_size)

test_data = synthetic_data(true_w, true_b, n_test)

test_iter = load_array(test_data, batch_size, is_train=False)

# 初始化模型参数

def init_params():

w = tf.Variable(tf.random.normal(mean=1, shape=(num_inputs, 1)))

b = tf.Variable(tf.zeros(shape=(1,)))

return [w, b]

def l2_penalty(w):

return tf.reduce_sum(tf.pow(w, 2)) / 2

def linreg(X, w, b):

return tf.matmul(X, w) + b

def squared_loss(y_hat, y):

return (y_hat - tf.reshape(y, y_hat.shape)) ** 2 / 2

def train(lambd):

w, b = init_params()

net, loss = lambda X: linreg(X, w, b), squared_loss

num_epochs, lr = 100, 0.003

animator = Animator(xlabel='epochs', ylabel='loss', yscale='log', xlim=[5, num_epochs], legend=['train', 'test'])

for epoch in range(num_epochs):

for X, y in train_iter:

with tf.GradientTape() as tape:

# 增加了L2范数惩罚项

# 广播机制是l2_penalty(w)成为一个长度为batch_size的向量

l = loss(net(X), y) + lambd * l2_penalty(w)

grads = tape.gradient(l, [w, b])

sgd([w, b], grads, lr, batch_size)

if (epoch + 1) % 5 == 0:

animator.add(epoch+1, (evaluate_loss(net, train_iter, loss), evaluate_loss(net, test_iter, loss)))

print('w的L2范数是:', tf.norm(w).numpy())

# 忽略正则化直接训练

train(lambd=0)

"""

w的L2范数是: 18.655033

"""

# 使用权重衰减

train(lambd=3)

# 简洁实现

def train_concise(wd):

net = tf.keras.models.Sequential()

net.add(tf.keras.layers.Dense(1, kernel_regularizer=tf.keras.regularizers.l2(wd)))

net.build(input_shape=(1, num_inputs))

w, b = net.trainable_variables

loss = tf.keras.losses.MeanSquaredError()

num_epochs, lr = 100, 0.003

trainer = tf.keras.optimizers.SGD(learning_rate=lr)

animator = Animator(xlabel='epochs', ylabel='loss', yscale='log', xlim=[5, num_epochs], legend=['train', 'test'])

for epoch in range(num_epochs):

for X, y in train_iter:

with tf.GradientTape() as tape:

# tf.keras需要为自定义训练代码手动添加损失

l = loss(net(X), y) + net.losses

grads = tape.gradient(l, net.trainable_variables)

trainer.apply_gradients(zip(grads, net.trainable_variables))

if (epoch+1) % 5 == 0:

animator.add(epoch+1, (evaluate_loss(net, train_iter, loss), evaluate_loss(net, test_iter, loss)))

print('w的L2范数是:', tf.norm(net.get_weights()[0]).numpy())

train_concise(0)

"""

w的L2范数是: 1.4163884

"""

train_concise(3)

"""

w的L2范数是: 0.023434483

"""

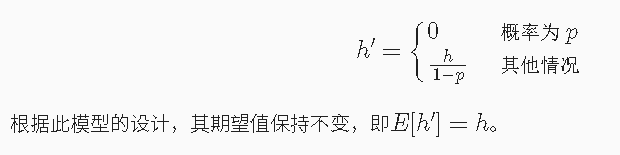

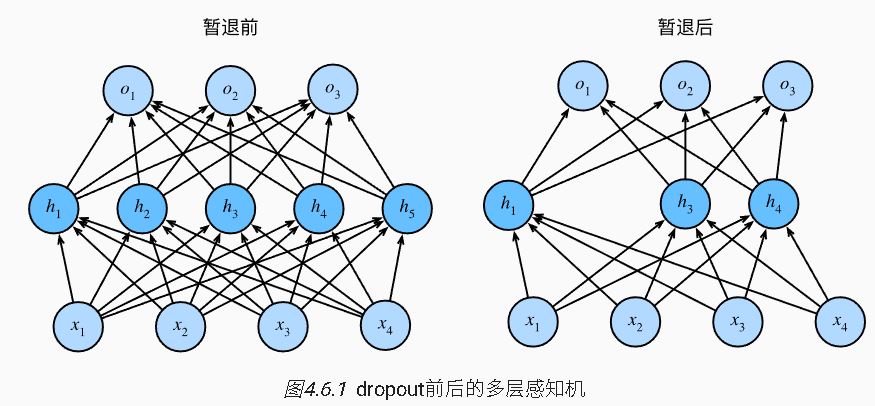

Dropout

在标准暂退法正则化中,通过按保留(未丢弃)的节点的分数进行规范化来消除每一层的偏差.

def dropout_layer(X, dropout):

assert 0 <= dropout <= 1

# 在本情况中,所有元素都被丢弃

if dropout == 1:

return tf.zeros_like(X)

# 在本情况中,所有元素都被保留

if dropout == 0:

return X

mask = tf.random.uniform(shape=tf.shape(X), minval=0, maxval=1) < 1 - dropout

return tf.cast(mask, dtype=tf.float32) * X / (1.0 - dropout)

X = tf.reshape(tf.range(16, dtype=tf.float32), (2, 8))

print(X)

print(dropout_layer(X, 0))

print(dropout_layer(X, 0.5))

print(dropout_layer(X, 1))

"""

tf.Tensor(

[[ 0. 1. 2. 3. 4. 5. 6. 7.]

[ 8. 9. 10. 11. 12. 13. 14. 15.]], shape=(2, 8), dtype=float32)

tf.Tensor(

[[ 0. 1. 2. 3. 4. 5. 6. 7.]

[ 8. 9. 10. 11. 12. 13. 14. 15.]], shape=(2, 8), dtype=float32)

tf.Tensor(

[[ 0. 0. 4. 6. 8. 0. 12. 14.]

[ 0. 0. 0. 0. 0. 0. 0. 30.]], shape=(2, 8), dtype=float32)

tf.Tensor(

[[0. 0. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 0. 0. 0. 0.]], shape=(2, 8), dtype=float32)

"""

# 定义模型参数

num_outputs, num_hiddens1, num_hiddens2 = 10, 256, 256

# 定义模型

dropout1, dropout2 = 0.2, 0.5

class Net(tf.keras.Model):

def __init__(self, num_outputs, num_hiddens1, num_hiddens2):

super().__init__()

self.input_layer = tf.keras.layers.Flatten()

self.hidden1 = tf.keras.layers.Dense(num_hiddens1, activation='relu')

self.hidden2 = tf.keras.layers.Dense(num_hiddens2, activation='relu')

self.output_layer = tf.keras.layers.Dense(num_outputs)

def call(self, inputs, training=None):

x = self.input_layer(inputs)

x = self.hidden1(x)

# 只有在训练时才是用dropout

if training:

# 在第一个全连接层之后添加一个dropout层

x = dropout_layer(x, dropout1)

x = self.hidden2(x)

if training:

# 在第二个全连接层之后添加一个dropout层

x = dropout_layer(x, dropout2)

x = self.output_layer(x)

return x

# 训练和测试

tf.keras.backend.clear_session()

net = Net(num_outputs, num_hiddens1, num_hiddens2)

num_epochs, lr, batch_size = 10, 0.5, 256

loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)

train_iter, test_iter = load_data_fashion_mnist(batch_size)

trainer = tf.keras.optimizers.SGD(learning_rate=lr)

train_ch3(net, train_iter, test_iter, loss, num_epochs, trainer)

# 简洁实现

net = tf.keras.models.Sequential([

tf.keras.layers.Flatten(),

tf.keras.layers.Dense(256, activation=tf.nn.relu),

tf.keras.layers.Dropout(dropout1),

tf.keras.layers.Dense(256, activation=tf.nn.relu),

tf.keras.layers.Dropout(dropout2),

tf.keras.layers.Dense(10)

])

trainer = tf.keras.optimizers.SGD(learning_rate=lr)

train_ch3(net, train_iter, test_iter, loss, num_epochs, trainer)

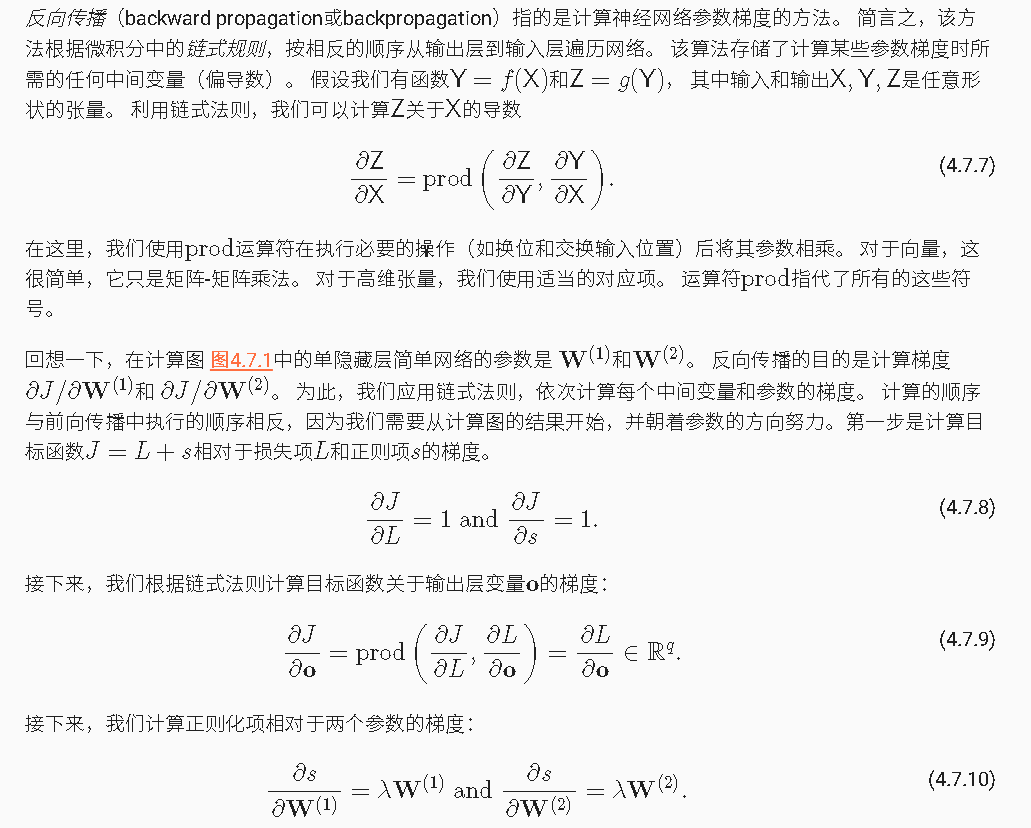

前向传播、反向传播和计算图

- 前向传播

- 反向传播

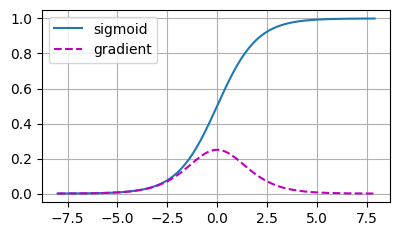

梯度消失与梯度爆炸

x = tf.Variable(tf.range(-8.0, 8.0, 0.1))

with tf.GradientTape() as t:

y = tf.nn.sigmoid(x)

plot(x.numpy(), [y.numpy(), t.gradient(y, x).numpy()], legend=['sigmoid', 'gradient'], figsize=(4.5, 2.5))

plt.show()

M = tf.random.normal((4, 4))

print('一个矩阵 \n', M)

for i in range(100):

M = tf.matmul(M, tf.random.normal((4, 4)))

print('乘以100个矩阵后 \n', M.numpy())

"""

一个矩阵

tf.Tensor(

[[-1.2109797 -0.48044246 -0.44670394 0.6837259 ]

[-0.58179045 -1.6397674 0.16387863 0.02224089]

[-2.3904455 0.92026275 1.9622763 0.32071215]

[ 0.97533286 0.49989635 1.8031533 -1.7435907 ]], shape=(4, 4), dtype=float32)

乘以100个矩阵后

[[-3.9594726e+23 -2.6864426e+24 1.0439507e+24 3.5524820e+24]

[-2.8748631e+23 -1.9505506e+24 7.5798349e+23 2.5793578e+24]

[-3.4308350e+23 -2.3277707e+24 9.0457068e+23 3.0781834e+24]

[ 5.9073450e+23 4.0080446e+24 -1.5575240e+24 -5.3001329e+24]]

"""

预测房价

import hashlib

import os

import tarfile

import zipfile

import requests

# 读取数据集

DATA_HUB = dict()

DATA_URL = 'http://d2l-data.s3-accelerate.amazonaws.com/'

def download(name, cache_dir=os.path.join('..', 'data')):

assert name in DATA_HUB, f'{name}不存在于{DATA_HUB}'

url, sha1_hash = DATA_HUB[name]

os.makedirs(cache_dir, exist_ok=True)

fname = os.path.join(cache_dir, url.split('/')[-1])

if os.path.exists(fname):

sha1 = hashlib.sha1()

with open(fname, 'rb') as f:

while True:

data = f.read(1048576)

if not data:

break

sha1.update(data)

if sha1.hexdigest() == sha1_hash:

return fname # 命中缓存

print(f'正在从{url}下载{fname}...')

r = requests.get(url, stream=True, verify=True)

with open(fname, 'wb') as f:

f.write(r.content)

return fname

def download_extract(name, folder=None):

fname = download(name)

base_dir = os.path.dirname(fname)

data_dir, ext = os.path.splitext(fname)

if ext == '.zip':

fp = zipfile.ZipFile(fname, 'r')

elif ext in ('.tar', '.gz'):

fp = tarfile.open(fname, 'r')

else:

assert False, '只有zip/tar文件可以被解压缩'

fp.extractall(base_dir)

return os.path.join(base_dir, folder) if folder else data_dir

def download_all():

for name in DATA_HUB:

download(name)

DATA_HUB['kaggle_house_train'] = (DATA_URL + 'kaggle_house_pred_train.csv', '585e9cc93e70b39160e7921475f9bcd7d31219ce')

DATA_HUB['kaggle_house_test'] = (DATA_URL + 'kaggle_house_pred_test.csv', 'fa19780a7b011d9b009e8bff8e99922a8ee2eb90')

train_data = pd.read_csv(download('kaggle_house_train'))

test_data = pd.read_csv(download('kaggle_house_test'))

"""

正在从http://d2l-data.s3-accelerate.amazonaws.com/kaggle_house_pred_train.csv下载..\data\kaggle_house_pred_train.csv...

正在从http://d2l-data.s3-accelerate.amazonaws.com/kaggle_house_pred_test.csv下载..\data\kaggle_house_pred_test.csv...

"""

print(train_data.shape)

print(test_data.shape)

"""

(1460, 81)

(1459, 80)

"""

print(train_data.iloc[:4, [0, 1, 2, 3, -3, -2, -1]])

"""

Id MSSubClass MSZoning LotFrontage SaleType SaleCondition SalePrice

0 1 60 RL 65.0 WD Normal 208500

1 2 20 RL 80.0 WD Normal 181500

2 3 60 RL 68.0 WD Normal 223500

3 4 70 RL 60.0 WD Abnorml 140000

"""

all_features = pd.concat((train_data.iloc[:, 1:-1], test_data.iloc[:, 1:]))

# 数据预处理

# 若无法获得测试数据,则可根据训练数据计算均值和标准差、

numeric_features = all_features.dtypes[all_features.dtypes != 'object'].index

all_features[numeric_features] = all_features[numeric_features].apply(lambda x: (x - x.mean()) / (x.std()))

# 在标准化数据之后,所有均值消失,因此我们可以将缺失值设置为0

all_features[numeric_features] = all_features[numeric_features].fillna(0)

# “Dummy_na=True”将“na”(缺失值)视为有效的特征值,并为其创建指示符特征

all_features = pd.get_dummies(all_features, dummy_na=True)

all_features.shape

"""

(2919, 331)

"""

n_train = train_data.shape[0]

train_features = tf.constant(all_features[:n_train].values, dtype=tf.float32)

test_features = tf.constant(all_features[n_train:].values, dtype=tf.float32)

train_labels = tf.constant(train_data.SalePrice.values.reshape(-1, 1), dtype=tf.float32)

# 训练

loss = tf.keras.losses.MeanSquaredError()

def get_net(weight_decay):

net = tf.keras.models.Sequential()

net.add(tf.keras.layers.Dense(1, kernel_regularizer=tf.keras.regularizers.l2(weight_decay)))

return net

def log_rmse(y_true, y_pred):

# 为了在取对数时进一步稳定该值,将小于1的值设置为1

clipped_preds = tf.clip_by_value(y_pred, 1, float('inf'))

return tf.sqrt(tf.reduce_mean(loss(tf.math.log(y_true), tf.math.log(clipped_preds))))

def train(net, train_features, train_labels, test_features, test_labels, num_epochs, learning_rate, batch_size):

train_ls, test_ls = [], []

train_iter = load_array((train_features, train_labels), batch_size)

# 这里使用Adam优化算法

optimizer = tf.keras.optimizers.Adam(learning_rate)

net.compile(loss=loss, optimizer=optimizer)

for epoch in range(num_epochs):

for X, y in train_iter:

with tf.GradientTape() as tape:

y_hat = net(X)

l = loss(y, y_hat)

params = net.trainable_variables

grads = tape.gradient(l, params)

optimizer.apply_gradients(zip(grads, params))

train_ls.append(log_rmse(train_labels, net(train_features)))

if test_labels is not None:

test_ls.append(log_rmse(test_labels, net(test_features)))

return train_ls, test_ls

# K折交叉验证

def get_k_fold_data(k, i, X, y):

assert k > 1

fold_size = X.shape[0] // k

X_train, y_train = None, None

for j in range(k):

idx = slice(j * fold_size, (j+1) * fold_size)

X_part, y_part = X[idx, :], y[idx]

if j == i:

X_valid, y_valid = X_part, y_part

elif X_train is None:

X_train, y_train = X_part, y_part

else:

X_train = tf.concat([X_train, X_part], 0)

y_train = tf.concat([y_train, y_part], 0)

return X_train, y_train, X_valid, y_valid

def k_fold(k, X_train, y_train, num_epochs, learning_rate, weight_decay, batch_size):

train_l_sum, valid_l_sum = 0, 0

for i in range(k):

data = get_k_fold_data(k, i, X_train, y_train)

net = get_net(weight_decay)

train_ls, valid_ls = train(net, *data, num_epochs, learning_rate, batch_size)

train_l_sum += train_ls[-1]

valid_l_sum += valid_ls[-1]

if i == 0:

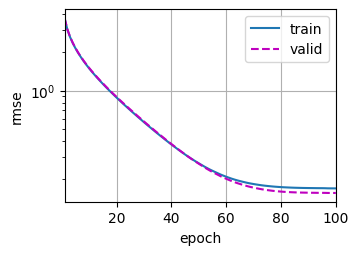

plot(list(range(1, num_epochs+1)), [train_ls, valid_ls], xlabel='epoch', ylabel='rmse', xlim=[1, num_epochs], legend=['train', 'valid'], yscale='log')

print(f'折{i+1},训练log rmse {float(train_ls[-1]):f},验证log rmse {float(valid_ls[-1]):f}')

return train_l_sum / k, valid_l_sum / k

# 模型选择

k, num_epochs, lr, weight_decay, batch_size = 5, 100, 5, 0, 64

train_l, valid_l = k_fold(k, train_features, train_labels, num_epochs, lr, weight_decay, batch_size)

print(f'{k}-折验证: 平均训练log rmse: {float(train_l):f}', f'平均验证log rmse: {float(valid_l):f}')

"""

折1,训练log rmse 0.169379,验证log rmse 0.155865

折2,训练log rmse 0.162185,验证log rmse 0.188749

折3,训练log rmse 0.163705,验证log rmse 0.168569

折4,训练log rmse 0.167645,验证log rmse 0.154788

折5,训练log rmse 0.163938,验证log rmse 0.183622

5-折验证: 平均训练log rmse: 0.165370 平均验证log rmse: 0.170319

"""

作者:lotuslaw

出处:https://www.cnblogs.com/lotuslaw/p/17323892.html

版权:本作品采用「署名-非商业性使用-相同方式共享 4.0 国际」许可协议进行许可。

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 阿里最新开源QwQ-32B,效果媲美deepseek-r1满血版,部署成本又又又降低了!

· 开源Multi-agent AI智能体框架aevatar.ai,欢迎大家贡献代码

· Manus重磅发布:全球首款通用AI代理技术深度解析与实战指南

· 被坑几百块钱后,我竟然真的恢复了删除的微信聊天记录!

· 没有Manus邀请码?试试免邀请码的MGX或者开源的OpenManus吧

2021-04-16 1-由浅入深学爬虫