6 - A Complete LSTM Example

import pandas as pd

import datetime

from sklearn.metrics import mean_squared_error

from sklearn.preprocessing import MinMaxScaler

from keras.models import Sequential

from keras.layers import Dense, LSTM

import math

import matplotlib.pyplot as plt

import numpy as np

# Parse the date strings used in the CSV index
def parser(x):
    return datetime.datetime.strptime(x, '%Y/%m/%d')

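# Convert the time series into a supervised learning dataset
def timeseries_to_supervised(data, lag=1):
    df = pd.DataFrame(data)
    # Lagged copies are the input columns; the unshifted series is the target
    tmp = [df.shift(i) for i in range(1, lag+1)]
    tmp.append(df)
    df = pd.concat(tmp, axis=1)
    # Shifting leaves NaN in the first rows; replace them with 0
    df.fillna(0, inplace=True)
    return df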
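To see what `timeseries_to_supervised` produces, the sketch below applies the same shift-and-concatenate logic to a toy series. The four sample values are made up for illustration and do not come from the shampoo dataset.

```python
import pandas as pd

def timeseries_to_supervised(data, lag=1):
    df = pd.DataFrame(data)
    columns = [df.shift(i) for i in range(1, lag + 1)]
    columns.append(df)
    df = pd.concat(columns, axis=1)
    df.fillna(0, inplace=True)
    return df

# Hypothetical toy series, used only to show the layout of the result
toy = [10, 20, 30, 40]
supervised_toy = timeseries_to_supervised(toy, lag=1)

# Each row is [value at t-1, value at t]; the first input is the 0 fill value
print(supervised_toy.values)
```

With `lag=1`, the first column is the input (the previous value) and the last column is the target (the current value).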

# Convert the series into differenced data
def difference(dataset, interval=1):
    diff = []
    for i in range(interval, len(dataset)):
        value = dataset[i] - dataset[i - interval]
        diff.append(value)
    return pd.Series(diff)

# Invert the differencing
def inverse_difference(history, yhat, interval=1):
    return yhat + history[-interval]
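The two helpers above are inverses of each other. The following minimal sketch checks this on a made-up series: differencing removes the level, and adding the last difference back to the preceding observation restores the original value.

```python
import pandas as pd

def difference(dataset, interval=1):
    diff = [dataset[i] - dataset[i - interval] for i in range(interval, len(dataset))]
    return pd.Series(diff)

def inverse_difference(history, yhat, interval=1):
    return yhat + history[-interval]

# Hypothetical observations, for illustration only
raw = [100.0, 120.0, 90.0, 150.0]
diff = difference(raw, 1)

# History holds everything before the value being restored
restored = inverse_difference(raw[:-1], diff.iloc[-1], 1)
print(diff.tolist(), restored)
```

Note that `history` must end at the observation just before the one being restored, which is why the forecast loop later has to choose `interval` carefully.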

# Scale
def scale(train, test):
    # Fit the scaler on the training data only
    scaler = MinMaxScaler(feature_range=(-1, 1))
    scaler.fit(train)
    train_scaled = scaler.transform(train)
    test_scaled = scaler.transform(test)
    return scaler, train_scaled, test_scaled

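# Invert the scaling
def invert_scale(scaler, X, value):
    # Rebuild a full row [inputs..., value] so it matches the shape the scaler was fitted on
    new_row = [x for x in X] + [value]
    array = np.array(new_row).reshape(1, len(new_row))
    inverted = scaler.inverse_transform(array)
    return inverted[0, -1]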
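`invert_scale` rebuilds a full row because the scaler was fitted on two columns (input and target) and can only invert arrays of that width. A small round-trip sketch, using a made-up training array, shows a scaled target being mapped back to its original units.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

def invert_scale(scaler, X, value):
    new_row = [x for x in X] + [value]
    array = np.array(new_row).reshape(1, len(new_row))
    inverted = scaler.inverse_transform(array)
    return inverted[0, -1]

# Hypothetical [input, target] rows, for illustration only
train = np.array([[0.0, 10.0], [10.0, 20.0], [20.0, 30.0]])
scaler = MinMaxScaler(feature_range=(-1, 1)).fit(train)
scaled = scaler.transform(train)

# Take the scaled inputs and scaled target of the middle row, then invert the target
X, y_scaled = scaled[1, 0:-1], scaled[1, -1]
restored = invert_scale(scaler, X, y_scaled)
print(restored)
```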

# Fit an LSTM network to the training data
def fit_lstm(train, batch_size, nb_epoch, neurons):
    X, y = train[:, 0:-1], train[:, -1]
    # Reshape to [samples, timesteps, features]
    X = X.reshape(X.shape[0], 1, X.shape[1])
    model = Sequential()
    model.add(LSTM(neurons, batch_input_shape=(batch_size, X.shape[1], X.shape[2]), stateful=True))
    model.add(Dense(1))
    model.summary()
    model.compile(loss='mean_squared_error', optimizer='adam')
    for i in range(nb_epoch):
        # Feed batch_size samples at a time, in order (no shuffling)
        model.fit(X, y, epochs=1, batch_size=batch_size, verbose=0, shuffle=False)
        # A stateful LSTM keeps its state across batches, so reset it after each epoch
        model.reset_states()
        print("Epochs completed:", i+1)
    return model

# One-step forecast
def forecast_lstm(model, batch_size, X):
    X = X.reshape(1, 1, len(X))
    yhat = model.predict(X, batch_size=batch_size)
    return yhat[0, 0]

# Load the data
# Note: date_parser is deprecated in pandas 2.0 and later, where date_format='%Y/%m/%d' is the replacement
ser = pd.read_csv('../LSTM系列/LSTM单变量1/data_set/shampoo-sales.csv',
                  header=0, parse_dates=[0], index_col=0, date_parser=parser).squeeze('columns')

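# Make the series stationary by differencing
raw_values = ser.values
diff_values = difference(raw_values, 1)

# Frame it as a supervised learning problem
supervised = timeseries_to_supervised(diff_values, 1)
supervised_values = supervised.values

# Split into training and test sets (the last 12 rows are the test set)
train, test = supervised_values[:-12], supervised_values[-12:]

# Scale
scaler, train_scaled, test_scaled = scale(train, test)

# Fit the model: batch_size=1, 100 epochs, 4 LSTM units
lstm_model = fit_lstm(train_scaled, 1, 100, 4)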

# Forecast
# Reshape the training inputs to [samples, timesteps, features]
train_reshaped = train_scaled[:, 0].reshape(len(train_scaled), 1, 1)
# Run the training data through the network to build up its state before forecasting
lstm_model.predict(train_reshaped, batch_size=1)

predictions = []
for i in range(len(test_scaled)):
    # One-step forecast
    X, y = test_scaled[i, 0:-1], test_scaled[i, -1]
    yhat = forecast_lstm(lstm_model, 1, X)
    # Undo the scaling, then undo the differencing
    yhat = invert_scale(scaler, X, yhat)
    yhat = inverse_difference(raw_values, yhat, len(test_scaled) + 1 - i)
    predictions.append(yhat)
    expected = raw_values[len(train) + i + 1]
    print('Month=%d, Predicted=%f, Expected=%f' % (i + 1, yhat, expected))
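The `interval` passed to `inverse_difference` and the index used for `expected` are easy to get wrong by one. The sketch below checks the arithmetic on a stand-in series of 36 made-up values (the same length the indexing above implies): for every test step, the observation added back to the forecast difference is the one immediately before the observation being forecast.

```python
# Stand-in for the 36 monthly observations; the values themselves are made up
raw_values = [float(v * v) for v in range(36)]
n_test = 12
# Differencing drops one row, so the supervised set has len(raw_values) - 1 rows
n_train = len(raw_values) - 1 - n_test

index_pairs = []
for i in range(n_test):
    interval = n_test + 1 - i
    previous_index = len(raw_values) - interval  # position read by history[-interval]
    expected_index = n_train + i + 1             # position of the value being forecast
    index_pairs.append((previous_index, expected_index))

# Every pair should be adjacent: (23, 24), (24, 25), ..., (34, 35)
print(index_pairs)
```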

# Report performance

rmse = math.sqrt(mean_squared_error(raw_values[-12:], predictions))

print('Test RMSE:%.3f' % rmse)

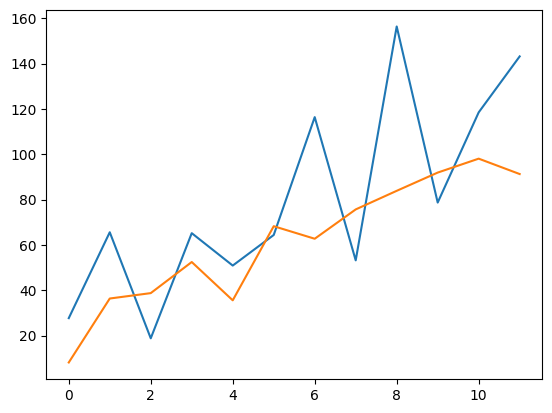

# Plot the observed values against the predictions

plt.plot(raw_values[-12:])

plt.plot(predictions)

plt.show()

Author: lotuslaw

Source: https://www.cnblogs.com/lotuslaw/p/17103784.html

License: This work is licensed under the Attribution-NonCommercial-ShareAlike 4.0 International license.
