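12 - Loading and Preprocessing Data with TensorFlow

Theory#

Overview#

- Reading large datasets and preprocessing them efficiently can be hard to implement with other deep learning libraries, but TensorFlow makes it easy with the Data API: you just create a dataset object and tell it where to get the data and how to transform it.

- Out of the box, the Data API can read text files (such as CSV files), binary files with fixed-size records, and binary files that use TensorFlow's TFRecord format, which supports records of varying sizes. TFRecord is a flexible and efficient binary format that usually contains protocol buffers (an open source binary format). The Data API also supports reading from SQL databases.

- TF Transform (tf.Transform)

- Makes it possible to write a single preprocessing function that can be run in batch mode over the full training set before training (to speed it up), then exported as a TF function and incorporated into the trained model, so that once deployed in production it can preprocess new instances on the fly.

- TensorFlow Datasets (TFDS)

- Provides convenient functions to download many kinds of common datasets.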

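Data API#

- The whole Data API revolves around the concept of a dataset.

- Chaining transformations

- Once you have a dataset, you can apply all sorts of transformations to it by calling its transformation methods. Each method returns a new dataset, so you can chain transformations.

- Dataset methods do not modify the dataset they are called on; they create a new one. Keep a reference to the new dataset, or else nothing will happen.

- Shuffling the data

- Gradient descent works best when the instances in the training set are independent and identically distributed. A simple way to ensure this is to shuffle the instances with the shuffle() method. It creates a new dataset that starts by filling a buffer with the first items of the source dataset. Then, whenever it is asked for an item, it pulls one out of the buffer at random and replaces it with a fresh one from the source dataset, until it has iterated entirely through the source dataset. At that point it keeps pulling items at random from the buffer until the buffer is empty. The buffer size must be large enough for the shuffle to be effective.

- Imagine a sorted deck of cards on your left. Suppose you take just the top three cards and shuffle them, then pick one at random and put it on your right, keeping the other two in your hands. Take another card from the left, shuffle the three cards in your hands, pick one of them at random, and put it on your right. When you have gone through all the cards like this, you will have a deck of cards on your right: do you think it will be perfectly shuffled? (It will not: with a buffer of three, a card can move at most two places toward the front of the deck.)

- To shuffle the instances further, a common approach is to split the source data into multiple files and read them in random order during training. However, instances located in the same file will still end up close to each other. To avoid this, you can pick multiple files at random, read them simultaneously, and interleave their records. Then, on top of that, you can add a shuffling buffer using the shuffle() method.

- For interleaving to work best, it is preferable to have files of identical length; otherwise the end of the longest files will not be interleaved.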
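The buffer mechanism behind shuffle() can be modeled in a few lines of plain Python. This is only a conceptual sketch, not TensorFlow's implementation: the name `buffered_shuffle` and its parameters are hypothetical, and the random choices will not match the ones TensorFlow makes for a given seed.

```python
import random

def buffered_shuffle(source, buffer_size, seed=None):
    """Yield the items of `source` the way a fixed-size shuffle buffer would."""
    if buffer_size < 1:
        raise ValueError('buffer_size must be at least 1')
    rng = random.Random(seed)
    source = iter(source)
    buffer = []
    # Step 1: fill the buffer with the first `buffer_size` items.
    for item in source:
        buffer.append(item)
        if len(buffer) == buffer_size:
            break
    # Step 2: emit a random buffered item and refill its slot from the source.
    for item in source:
        idx = rng.randrange(len(buffer))
        yield buffer[idx]
        buffer[idx] = item
    # Step 3: the source is exhausted, so drain the buffer in random order.
    rng.shuffle(buffer)
    yield from buffer

shuffled = list(buffered_shuffle(range(10), buffer_size=3, seed=42))
print(shuffled)
```

With a buffer of 3, the item at source position i can never be emitted earlier than output position i - 2, which is why a small buffer gives only a local shuffle. With `buffer_size=1` the order is unchanged.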

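- Preprocessing the data

- Putting everything together

- Prefetching

- By calling prefetch(1) at the end, we create a dataset that does its best to always be one batch ahead. In other words, while the training algorithm is working on one batch, the dataset is already working in parallel on getting the next batch ready, which can improve performance dramatically.

- If we also make sure that loading and preprocessing are multithreaded, we can exploit multiple CPU cores and, hopefully, make preparing one batch of data shorter than running one training step on the GPU. This way the GPU will be almost 100% utilized (except for the data transfer time from the CPU to the GPU), and training will run much faster.

- If the dataset is small enough to fit in memory, you can significantly speed up training by using the dataset's cache() method to cache its content in RAM. You should generally do this after loading and preprocessing the data, but before shuffling, repeating, batching, and prefetching. This way each instance is read and preprocessed only once (instead of once per epoch), but the data is still shuffled differently at each epoch, and the next batch is still prepared in advance.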
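The idea behind prefetch(1) can be illustrated with a background thread and a bounded queue. This is a minimal sketch in plain Python, not how tf.data is implemented: `prefetch` and `_DONE` are hypothetical names, and errors raised by the producer are not forwarded to the consumer.

```python
import queue
import threading

_DONE = object()  # sentinel that marks the end of the stream

def prefetch(iterable, buffer_size=1):
    """Yield items from `iterable`, preparing up to `buffer_size` of them ahead of time."""
    q = queue.Queue(maxsize=buffer_size)

    def producer():
        for item in iterable:
            q.put(item)  # blocks while the buffer is full
        q.put(_DONE)

    # The producer runs in parallel with whatever the consumer does between items.
    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = q.get()
        if item is _DONE:
            return
        yield item

for batch in prefetch(([i, i + 1] for i in range(0, 6, 2)), buffer_size=1):
    print(batch)  # while this "training step" runs, the next batch is being prepared
```

If preparing a batch takes about as long as consuming it, overlapping the two roughly halves the time per step, which is the effect the notes describe for the CPU and GPU.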

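- Using the dataset with tf.keras

TFRecord Format#

- TFRecord is useful when the bottleneck during training is loading and parsing the data.

- The TFRecord format is TensorFlow's preferred format for storing large amounts of data and reading it efficiently. It is a very simple binary format that just contains a sequence of binary records of varying sizes (each record consists of a length, a CRC checksum to check that the length was not corrupted, the actual data, and finally a CRC checksum for the data).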
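The four-part record layout (length, checksum of the length, data, checksum of the data) can be sketched in plain Python. The notes only name the four parts; the field widths (a little-endian 64-bit length and 32-bit checksums) and the masked CRC-32C variant used here are assumptions based on my understanding of the format, and all function names are hypothetical. Treat this as an illustration rather than a replacement for tf.io.TFRecordWriter.

```python
import struct

def _make_crc32c_table():
    # Table for CRC-32C (Castagnoli), reflected polynomial 0x82F63B78.
    table = []
    for i in range(256):
        crc = i
        for _ in range(8):
            crc = (crc >> 1) ^ 0x82F63B78 if crc & 1 else crc >> 1
        table.append(crc)
    return table

_CRC32C_TABLE = _make_crc32c_table()

def crc32c(data):
    crc = 0xFFFFFFFF
    for byte in data:
        crc = _CRC32C_TABLE[(crc ^ byte) & 0xFF] ^ (crc >> 8)
    return crc ^ 0xFFFFFFFF

def masked_crc32c(data):
    # Assumed masking step: rotate right by 15 bits, then add a constant (mod 2**32).
    crc = crc32c(data)
    return ((((crc >> 15) | (crc << 17)) & 0xFFFFFFFF) + 0xA282EAD8) & 0xFFFFFFFF

def frame_record(data):
    """Wrap one record: length, CRC of length, data, CRC of data."""
    length = struct.pack('<Q', len(data))
    return (length + struct.pack('<I', masked_crc32c(length))
            + data + struct.pack('<I', masked_crc32c(data)))

def read_records(blob):
    """Iterate over the records in `blob`, verifying both checksums."""
    offset = 0
    while offset < len(blob):
        length_bytes = blob[offset:offset + 8]
        (length,) = struct.unpack('<Q', length_bytes)
        (length_crc,) = struct.unpack('<I', blob[offset + 8:offset + 12])
        if length_crc != masked_crc32c(length_bytes):
            raise ValueError('corrupted length')
        data = blob[offset + 12:offset + 12 + length]
        (data_crc,) = struct.unpack('<I', blob[offset + 12 + length:offset + 16 + length])
        if data_crc != masked_crc32c(data):
            raise ValueError('corrupted data')
        yield data
        offset += 16 + length

blob = frame_record(b'This is the first record') + frame_record(b'And this is the second record')
print(list(read_records(blob)))
```

Because every record carries its own length, records of different sizes can simply be concatenated, and a reader can skip from one record to the next without parsing the payload.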

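- Compressed TFRecord files

- It can sometimes be useful to compress TFRecord files, especially if they need to be loaded over a network connection.

- A brief introduction to protocol buffers

- Even though each record can use any binary format you want, TFRecord files usually contain serialized protocol buffers (also called protobufs). This is a portable, extensible, and efficient binary format.

- TensorFlow protobufs

- TensorFlow includes special protobuf definitions for which it provides parsing operations.

- The main protobuf typically used in a TFRecord file is the Example protobuf, which represents one instance in a dataset. It contains a list of named features, where each feature can be a list of byte strings, a list of floats, or a list of integers.

- Loading and parsing Examples

- Handling lists of lists using the SequenceExample protobuf

- A SequenceExample contains a Features object for the contextual data and a FeatureLists object that contains one or more named FeatureList objects (for example, one FeatureList named "content" and another named "comments"). Each FeatureList contains a list of Feature objects, each of which may be a list of byte strings, a list of 64-bit integers, or a list of floats.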

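Preprocessing the Input Features#

- Preparing your data for a neural network requires converting all features into numerical features, generally normalizing them, and more. In particular, if your data contains categorical features or text features, they need to be converted to numbers.

- Encoding categorical features using one-hot vectors

- Why use out-of-vocabulary (oov) buckets? If the number of categories is large (e.g., zip codes, cities, words, products, or users) and the dataset is large as well, or the categories keep changing, then getting the full list of categories may not be convenient. One solution is to define the vocabulary based on a data sample (rather than the whole training set) and add some oov buckets for the categories that were not in the data sample. If we look up a category that does not exist in the vocabulary, the lookup table computes a hash of this category and uses it to assign the unknown category to one of the oov buckets.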
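The oov lookup can be sketched in plain Python as follows. This is a conceptual model only: `make_lookup` is a hypothetical helper, and the MD5-based hash is a stand-in chosen because it is stable across runs; TensorFlow's lookup tables use their own hash function, so the bucket assigned to a given category will differ.

```python
import hashlib

def make_lookup(vocab, num_oov_buckets):
    """Return a function mapping a category to an index, with hashed oov buckets."""
    index = {category: i for i, category in enumerate(vocab)}

    def lookup(category):
        if category in index:
            return index[category]  # known category: its position in the vocabulary
        # Unknown category: hash it and pick one of the oov buckets,
        # whose indices come right after the vocabulary.
        digest = hashlib.md5(category.encode('utf-8')).digest()
        bucket = int.from_bytes(digest[:8], 'little') % num_oov_buckets
        return len(vocab) + bucket

    return lookup

vocab = ['<1H OCEAN', 'INLAND', 'NEAR OCEAN', 'NEAR BAY', 'ISLAND']
lookup = make_lookup(vocab, num_oov_buckets=2)
print([lookup(c) for c in ['NEAR BAY', 'DESERT', 'INLAND', 'INLAND']])
```

The same unknown category always lands in the same bucket, but two different unknown categories may collide. Using more oov buckets lowers the chance of collisions at the cost of a wider one-hot vector.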

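- Encoding categorical features using embeddings

- As a rule of thumb, if the number of categories is lower than 10, then one-hot encoding is generally the way to go; if the number of categories is greater than 50, then embeddings are usually preferable. Between 10 and 50 categories, you may want to experiment with both options and see which one works best for you.

- An embedding is a trainable dense vector that represents a category. By default, embeddings are initialized randomly, and since they are trainable, they gradually improve during training. Embeddings of fairly similar categories tend to be pushed closer together by gradient descent, while being pushed away from the embeddings of dissimilar categories. Indeed, the better the representation, the easier it is for the neural network to make accurate predictions, so training tends to make embeddings useful representations of the categories. This is called representation learning.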
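One way to see how the two encodings relate: looking up row i of an embedding matrix gives the same vector as multiplying the one-hot vector for category i by that matrix. The sketch below uses plain Python lists; the helper names and the initialization range are illustrative assumptions, not Keras defaults.

```python
import random

def init_embeddings(num_categories, dim, seed=42):
    """Random initial embedding matrix: one row of `dim` floats per category."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.05, 0.05) for _ in range(dim)]
            for _ in range(num_categories)]

def one_hot(index, depth):
    return [1.0 if i == index else 0.0 for i in range(depth)]

def embed(index, matrix):
    """Embedding lookup: just pick the row for this category."""
    return matrix[index]

def one_hot_times_matrix(index, matrix):
    """Same result computed the long way: one-hot vector times the matrix."""
    vec = one_hot(index, len(matrix))
    dim = len(matrix[0])
    return [sum(vec[r] * matrix[r][c] for r in range(len(matrix)))
            for c in range(dim)]

matrix = init_embeddings(num_categories=5, dim=2)
print(embed(3, matrix))
print(one_hot_times_matrix(3, matrix))
```

The lookup avoids building the one-hot vector at all, which is why embeddings stay cheap when the number of categories is large. During training, the rows of the matrix are the parameters that gradient descent adjusts.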

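- Keras preprocessing layers

- The Discretization layer is not differentiable, and it should only be used at the start of your model. Indeed, a model's preprocessing layers are frozen during training, so their parameters are not affected by gradient descent, and thus they do not need to be differentiable. This also means that you should not use an Embedding layer directly in a custom preprocessing layer if you want it to be trainable: instead, add it separately to your model, as in the earlier code example.

- TF Transform

- If preprocessing is computationally expensive, then handling it before training rather than on the fly can give a significant speedup: the data is preprocessed just once per instance before training, rather than once per instance and per epoch during training. As mentioned earlier, if the dataset is small enough to fit in RAM, you can use its cache() method. But if it is too large, then tools like Apache Beam or Spark will help. They let you run efficient data processing pipelines over large amounts of data, even distributed across multiple servers, so you can use them to preprocess all the training data before training.

- Training on data preprocessed by Apache Beam or Spark code, and then adding extra preprocessing layers for on-the-fly preprocessing before deploying the model to your app or browser, leaves you with two versions of the preprocessing code. What if you could define your preprocessing operations just once? This is what TF Transform was designed for. It is part of TensorFlow Extended (TFX), an end-to-end platform for productionizing TensorFlow models. With TF Transform, you define the preprocessing function just once (in Python), and TF Transform lets you apply it to the whole training set using Apache Beam. Importantly, it also generates an equivalent TensorFlow function that you can plug into the model you deploy.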
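The "define once, use twice" idea can be illustrated without any TensorFlow code. The sketch below is not the TF Transform API: `fit_standardizer` is a hypothetical helper in plain Python that computes statistics over the full training set once (the batch "analyze" phase) and returns a function with those statistics frozen in, which can then be applied both to the training data and to new instances at serving time.

```python
import statistics

def fit_standardizer(values):
    """Compute mean and standard deviation once, return a reusable transform."""
    mean = statistics.mean(values)
    std = statistics.pstdev(values)
    if std == 0:
        raise ValueError('cannot standardize a constant feature')

    def transform(x):
        # The statistics are constants captured from the training set.
        return (x - mean) / std

    return transform

training_ages = [15.0, 5.0, 29.0, 12.0, 44.0]
standardize = fit_standardizer(training_ages)

# Batch mode, before training:
preprocessed = [standardize(age) for age in training_ages]
# On the fly, in production, with exactly the same logic and statistics:
new_instance = standardize(21.0)
print(preprocessed, new_instance)
```

Because the serving-time function is derived from the training-time one, the two can never drift apart, which is the problem with maintaining separate Beam or Spark code and in-model preprocessing layers.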

Code#

Setup#

import sys
assert sys.version_info >= (3, 5)

IS_COLAB = 'google.colab' in sys.modules
IS_KAGGLE = 'kaggle_secrets' in sys.modules

if IS_COLAB or IS_KAGGLE:
    !pip install -q -U tfx==0.21.2
    print('you can safely ignore the package incompatibility errors.')

import sklearn
assert sklearn.__version__ >= '0.20'

import tensorflow as tf
from tensorflow import keras
assert tf.__version__ >= '2.0'

import numpy as np
import os

np.random.seed(42)

%matplotlib inline
import matplotlib as mpl
import matplotlib.pyplot as plt
mpl.rc('axes', labelsize=14)
mpl.rc('xtick', labelsize=12)
mpl.rc('ytick', labelsize=12)

PROJECT_ROOT_DIR = '.'
CHAPTER_ID = 'data'
IMAGES_PATH = os.path.join(PROJECT_ROOT_DIR, 'images', CHAPTER_ID)
os.makedirs(IMAGES_PATH, exist_ok=True)

def save_fig(fig_id, tight_layout=True, fig_extension='png', resolution=300):
    path = os.path.join(IMAGES_PATH, fig_id + '.' + fig_extension)
    print('Saving figure', fig_id)
    if tight_layout:
        plt.tight_layout()
    plt.savefig(path, format=fig_extension, dpi=resolution)

Datasets#

X = tf.range(10)
dataset = tf.data.Dataset.from_tensor_slices(X)
dataset # <TensorSliceDataset shapes: (), types: tf.int32>

# Equivalent, except that the elements are int64 instead of int32
dataset = tf.data.Dataset.range(10)

for item in dataset:
    print(item)

'''

tf.Tensor(0, shape=(), dtype=int64)

tf.Tensor(1, shape=(), dtype=int64)

tf.Tensor(2, shape=(), dtype=int64)

tf.Tensor(3, shape=(), dtype=int64)

tf.Tensor(4, shape=(), dtype=int64)

tf.Tensor(5, shape=(), dtype=int64)

tf.Tensor(6, shape=(), dtype=int64)

tf.Tensor(7, shape=(), dtype=int64)

tf.Tensor(8, shape=(), dtype=int64)

tf.Tensor(9, shape=(), dtype=int64)

'''

dataset = dataset.repeat(3).batch(7)
for item in dataset:
    print(item)

'''

tf.Tensor([0 1 2 3 4 5 6], shape=(7,), dtype=int64)

tf.Tensor([7 8 9 0 1 2 3], shape=(7,), dtype=int64)

tf.Tensor([4 5 6 7 8 9 0], shape=(7,), dtype=int64)

tf.Tensor([1 2 3 4 5 6 7], shape=(7,), dtype=int64)

tf.Tensor([8 9], shape=(2,), dtype=int64)

'''

dataset = dataset.map(lambda x: x * 2)
for item in dataset:
    print(item)

'''

tf.Tensor([ 0 2 4 6 8 10 12], shape=(7,), dtype=int64)

tf.Tensor([14 16 18 0 2 4 6], shape=(7,), dtype=int64)

tf.Tensor([ 8 10 12 14 16 18 0], shape=(7,), dtype=int64)

tf.Tensor([ 2 4 6 8 10 12 14], shape=(7,), dtype=int64)

tf.Tensor([16 18], shape=(2,), dtype=int64)

'''

dataset = dataset.unbatch()
dataset = dataset.filter(lambda x: x < 10)
for item in dataset.take(3):
    print(item)

'''

tf.Tensor(0, shape=(), dtype=int64)

tf.Tensor(2, shape=(), dtype=int64)

tf.Tensor(4, shape=(), dtype=int64)

'''

tf.random.set_seed(42)
dataset = tf.data.Dataset.range(10).repeat(3)
dataset = dataset.shuffle(buffer_size=3, seed=42).batch(7)
for item in dataset:
    print(item)

'''

tf.Tensor([1 3 0 4 2 5 6], shape=(7,), dtype=int64)

tf.Tensor([8 7 1 0 3 2 5], shape=(7,), dtype=int64)

tf.Tensor([4 6 9 8 9 7 0], shape=(7,), dtype=int64)

tf.Tensor([3 1 4 5 2 8 7], shape=(7,), dtype=int64)

tf.Tensor([6 9], shape=(2,), dtype=int64)

'''

Splitting the California Housing Dataset into Multiple CSV Files#

from sklearn.datasets import fetch_california_housing
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

housing = fetch_california_housing()
X_train_full, X_test, y_train_full, y_test = train_test_split(housing.data, housing.target.reshape(-1, 1), random_state=42)
X_train, X_valid, y_train, y_valid = train_test_split(X_train_full, y_train_full, random_state=42)

scaler = StandardScaler()
scaler.fit(X_train)
X_mean = scaler.mean_
X_std = scaler.scale_

def save_to_multiple_csv_files(data, name_prefix, header=None, n_parts=10):
    # Split the rows of `data` into `n_parts` CSV files and return their paths
    housing_dir = os.path.join('datasets', 'housing')
    os.makedirs(housing_dir, exist_ok=True)
    path_format = os.path.join(housing_dir, 'mv_{}_{:02d}.csv')
    filepaths = []
    m = len(data)
    for file_idx, row_indices in enumerate(np.array_split(np.arange(m), n_parts)):
        part_csv = path_format.format(name_prefix, file_idx)
        filepaths.append(part_csv)
        with open(part_csv, 'wt', encoding='utf-8') as f:
            if header is not None:
                f.write(header)
                f.write('\n')
            for row_idx in row_indices:
                f.write(','.join([repr(col) for col in data[row_idx]]))
                f.write('\n')
    return filepaths

train_data = np.c_[X_train, y_train]

valid_data = np.c_[X_valid, y_valid]

test_data = np.c_[X_test, y_test]

header_cols = housing.feature_names + ['MedianHouseValue']

header = ','.join(header_cols)

train_filepaths = save_to_multiple_csv_files(train_data, 'train', header, n_parts=20)

valid_filepaths = save_to_multiple_csv_files(valid_data, 'valid', header, n_parts=10)

test_filepaths = save_to_multiple_csv_files(test_data, 'test', header, n_parts=10)

import pandas as pd

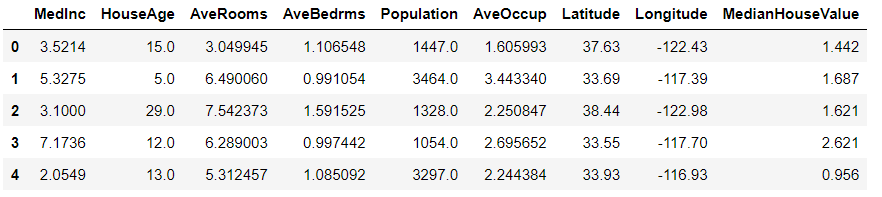

pd.read_csv(train_filepaths[0]).head()

with open(train_filepaths[0]) as f:
    for i in range(5):
        print(f.readline(), end='')

'''

MedInc,HouseAge,AveRooms,AveBedrms,Population,AveOccup,Latitude,Longitude,MedianHouseValue

3.5214,15.0,3.0499445061043287,1.106548279689234,1447.0,1.6059933407325193,37.63,-122.43,1.442

5.3275,5.0,6.490059642147117,0.9910536779324056,3464.0,3.4433399602385686,33.69,-117.39,1.687

3.1,29.0,7.5423728813559325,1.5915254237288134,1328.0,2.2508474576271187,38.44,-122.98,1.621

7.1736,12.0,6.289002557544757,0.9974424552429667,1054.0,2.6956521739130435,33.55,-117.7,2.621

'''

train_filepaths

'''

['datasets\\housing\\mv_train_00.csv',

'datasets\\housing\\mv_train_01.csv',

'datasets\\housing\\mv_train_02.csv',

'datasets\\housing\\mv_train_03.csv',

'datasets\\housing\\mv_train_04.csv',

'datasets\\housing\\mv_train_05.csv',

'datasets\\housing\\mv_train_06.csv',

'datasets\\housing\\mv_train_07.csv',

'datasets\\housing\\mv_train_08.csv',

'datasets\\housing\\mv_train_09.csv',

'datasets\\housing\\mv_train_10.csv',

'datasets\\housing\\mv_train_11.csv',

'datasets\\housing\\mv_train_12.csv',

'datasets\\housing\\mv_train_13.csv',

'datasets\\housing\\mv_train_14.csv',

'datasets\\housing\\mv_train_15.csv',

'datasets\\housing\\mv_train_16.csv',

'datasets\\housing\\mv_train_17.csv',

'datasets\\housing\\mv_train_18.csv',

'datasets\\housing\\mv_train_19.csv']

'''

Building an Input Pipeline#

filepath_dataset = tf.data.Dataset.list_files(train_filepaths, seed=42)
for filepath in filepath_dataset:
    print(filepath)

'''

tf.Tensor(b'datasets\\housing\\mv_train_15.csv', shape=(), dtype=string)

tf.Tensor(b'datasets\\housing\\mv_train_08.csv', shape=(), dtype=string)

tf.Tensor(b'datasets\\housing\\mv_train_03.csv', shape=(), dtype=string)

tf.Tensor(b'datasets\\housing\\mv_train_01.csv', shape=(), dtype=string)

tf.Tensor(b'datasets\\housing\\mv_train_10.csv', shape=(), dtype=string)

tf.Tensor(b'datasets\\housing\\mv_train_05.csv', shape=(), dtype=string)

tf.Tensor(b'datasets\\housing\\mv_train_19.csv', shape=(), dtype=string)

tf.Tensor(b'datasets\\housing\\mv_train_16.csv', shape=(), dtype=string)

tf.Tensor(b'datasets\\housing\\mv_train_02.csv', shape=(), dtype=string)

tf.Tensor(b'datasets\\housing\\mv_train_09.csv', shape=(), dtype=string)

tf.Tensor(b'datasets\\housing\\mv_train_00.csv', shape=(), dtype=string)

tf.Tensor(b'datasets\\housing\\mv_train_07.csv', shape=(), dtype=string)

tf.Tensor(b'datasets\\housing\\mv_train_12.csv', shape=(), dtype=string)

tf.Tensor(b'datasets\\housing\\mv_train_04.csv', shape=(), dtype=string)

tf.Tensor(b'datasets\\housing\\mv_train_17.csv', shape=(), dtype=string)

tf.Tensor(b'datasets\\housing\\mv_train_11.csv', shape=(), dtype=string)

tf.Tensor(b'datasets\\housing\\mv_train_14.csv', shape=(), dtype=string)

tf.Tensor(b'datasets\\housing\\mv_train_18.csv', shape=(), dtype=string)

tf.Tensor(b'datasets\\housing\\mv_train_06.csv', shape=(), dtype=string)

tf.Tensor(b'datasets\\housing\\mv_train_13.csv', shape=(), dtype=string)

'''

n_readers = 5
dataset = filepath_dataset.interleave(
    lambda filepath: tf.data.TextLineDataset(filepath).skip(1),  # skip the header row
    cycle_length=n_readers
)
for line in dataset.take(5):
    print(line.numpy())

'''

b'4.6477,38.0,5.03728813559322,0.911864406779661,745.0,2.5254237288135593,32.64,-117.07,1.504'

b'8.72,44.0,6.163179916317992,1.0460251046025104,668.0,2.794979079497908,34.2,-118.18,4.159'

b'3.8456,35.0,5.461346633416459,0.9576059850374065,1154.0,2.8778054862842892,37.96,-122.05,1.598'

b'3.3456,37.0,4.514084507042254,0.9084507042253521,458.0,3.2253521126760565,36.67,-121.7,2.526'

b'3.6875,44.0,4.524475524475524,0.993006993006993,457.0,3.195804195804196,34.04,-118.15,1.625'

'''

record_defaults = [0, np.nan, tf.constant(np.nan, dtype=tf.float64), 'Hello', tf.constant([])]

parsed_fields = tf.io.decode_csv('1,2,3,4,5', record_defaults)

parsed_fields

'''

[<tf.Tensor: shape=(), dtype=int32, numpy=1>,

<tf.Tensor: shape=(), dtype=float32, numpy=2.0>,

<tf.Tensor: shape=(), dtype=float64, numpy=3.0>,

<tf.Tensor: shape=(), dtype=string, numpy=b'4'>,

<tf.Tensor: shape=(), dtype=float32, numpy=5.0>]

'''

parsed_fields = tf.io.decode_csv(',,,,5', record_defaults)

parsed_fields

'''

[<tf.Tensor: shape=(), dtype=int32, numpy=0>,

<tf.Tensor: shape=(), dtype=float32, numpy=nan>,

<tf.Tensor: shape=(), dtype=float64, numpy=nan>,

<tf.Tensor: shape=(), dtype=string, numpy=b'Hello'>,

<tf.Tensor: shape=(), dtype=float32, numpy=5.0>]

'''

# The fifth field is required: its default is an empty tensor, so a missing value raises an error
try:
    parsed_fields = tf.io.decode_csv(',,,,', record_defaults)
except tf.errors.InvalidArgumentError as e:
    print(e) # Field 4 is required but missing in record 0! [Op:DecodeCSV]

# The number of fields must match the number of defaults exactly
try:
    parsed_fields = tf.io.decode_csv('1,2,3,4,5,6,7', record_defaults)
except tf.errors.InvalidArgumentError as e:
    print(e) # Expect 5 fields but have 7 in record 0 [Op:DecodeCSV]

n_inputs = 8

@tf.function
def preprocess(line):
    # Defaults: 0. for the 8 input features; an empty float32 tensor makes the target required
    defs = [0.] * n_inputs + [tf.constant([], dtype=tf.float32)]
    fields = tf.io.decode_csv(line, record_defaults=defs)
    x = tf.stack(fields[:-1])
    y = tf.stack(fields[-1:])
    return (x - X_mean) / X_std, y

preprocess(b'4.2083, 44.0, 5.3232, 0.9171, 846.0, 2.3370, 37.47, -122.2, 2.782')

'''

(<tf.Tensor: shape=(8,), dtype=float32, numpy=

array([ 0.16579157, 1.216324 , -0.05204565, -0.39215982, -0.5277444 ,

-0.2633488 , 0.8543046 , -1.3072058 ], dtype=float32)>,

<tf.Tensor: shape=(1,), dtype=float32, numpy=array([2.782], dtype=float32)>)

'''

def csv_reader_dataset(filepaths, repeat=1, n_readers=5, n_read_threads=None, shuffle_buffer_size=10000, n_parse_threads=5, batch_size=32):
    dataset = tf.data.Dataset.list_files(filepaths).repeat(repeat)
    dataset = dataset.interleave(
        lambda filepath: tf.data.TextLineDataset(filepath).skip(1),
        cycle_length=n_readers, num_parallel_calls=n_read_threads)
    dataset = dataset.shuffle(shuffle_buffer_size)
    dataset = dataset.map(preprocess, num_parallel_calls=n_parse_threads)
    dataset = dataset.batch(batch_size)
    return dataset.prefetch(1)

tf.random.set_seed(42)
train_set = csv_reader_dataset(train_filepaths, batch_size=3)
for X_batch, y_batch in train_set.take(2):
    print('X =', X_batch)
    print('y =', y_batch)
    print()

'''

X = tf.Tensor(

[[ 0.5804519 -0.20762321 0.05616303 -0.15191229 0.01343246 0.00604472

1.2525111 -1.3671792 ]

[ 5.818099 1.8491895 1.1784915 0.28173092 -1.2496178 -0.3571987

0.7231292 -1.0023477 ]

[-0.9253566 0.5834586 -0.7807257 -0.28213993 -0.36530012 0.27389365

-0.76194876 0.72684526]], shape=(3, 8), dtype=float32)

y = tf.Tensor(

[[1.752]

[1.313]

[1.535]], shape=(3, 1), dtype=float32)

X = tf.Tensor(

[[-0.8324941 0.6625668 -0.20741376 -0.18699841 -0.14536144 0.09635526

0.9807942 -0.67250353]

[-0.62183803 0.5834586 -0.19862501 -0.3500319 -1.1437552 -0.3363751

1.107282 -0.8674123 ]

[ 0.8683102 0.02970133 0.3427381 -0.29872298 0.7124906 0.28026953

-0.72915536 0.86178064]], shape=(3, 8), dtype=float32)

y = tf.Tensor(

[[0.919]

[1.028]

[2.182]], shape=(3, 1), dtype=float32)

'''

train_set = csv_reader_dataset(train_filepaths, repeat=None)

valid_set = csv_reader_dataset(valid_filepaths)

test_set = csv_reader_dataset(test_filepaths)

keras.backend.clear_session()

np.random.seed(42)

tf.random.set_seed(42)

model = keras.models.Sequential([
    keras.layers.Dense(30, activation='relu', input_shape=X_train.shape[1:]),
    keras.layers.Dense(1),
])
model.compile(loss='mse', optimizer=keras.optimizers.SGD(learning_rate=1e-3))

batch_size = 32
model.fit(train_set, steps_per_epoch=len(X_train) // batch_size, epochs=10, validation_data=valid_set)

# Returns the test MSE (the original run, which passed train_set here, printed 0.4764566719532013)
model.evaluate(test_set, steps=len(X_test) // batch_size)

new_set = test_set.map(lambda X, y: X)  # keep only the features
X_new = X_test
model.predict(new_set, steps=len(X_new) // batch_size)

'''

array([[1.8159392],

[2.357008 ],

[0.9800829],

...,

[2.4641705],

[3.0484562],

[2.8796172]], dtype=float32)

'''

optimizer = keras.optimizers.Nadam(learning_rate=0.01)

loss_fn = keras.losses.mean_squared_error

n_epochs = 5

batch_size = 32

n_steps_per_epoch = len(X_train) // batch_size

total_steps = n_epochs * n_steps_per_epoch

global_step = 0

for X_batch, y_batch in train_set.take(total_steps):
    global_step += 1
    print('\rGlobal step {}/{}'.format(global_step, total_steps), end='')
    with tf.GradientTape() as tape:
        y_pred = model(X_batch)
        main_loss = tf.reduce_mean(loss_fn(y_batch, y_pred))
        loss = tf.add_n([main_loss] + model.losses)
    gradients = tape.gradient(loss, model.trainable_variables)
    optimizer.apply_gradients(zip(gradients, model.trainable_variables))

keras.backend.clear_session()

np.random.seed(42)

tf.random.set_seed(42)

optimizer = keras.optimizers.Nadam(learning_rate=0.01)

loss_fn = keras.losses.mean_squared_error

@tf.function
def train(model, n_epochs, batch_size=32, n_readers=5, n_read_threads=5, shuffle_buffer_size=10000, n_parse_threads=5):
    train_set = csv_reader_dataset(train_filepaths, repeat=n_epochs, n_readers=n_readers, n_read_threads=n_read_threads, shuffle_buffer_size=shuffle_buffer_size, n_parse_threads=n_parse_threads, batch_size=batch_size)
    for X_batch, y_batch in train_set:
        with tf.GradientTape() as tape:
            y_pred = model(X_batch)
            main_loss = tf.reduce_mean(loss_fn(y_batch, y_pred))
            loss = tf.add_n([main_loss] + model.losses)
        gradients = tape.gradient(loss, model.trainable_variables)
        optimizer.apply_gradients(zip(gradients, model.trainable_variables))

train(model, 5)

keras.backend.clear_session()

np.random.seed(42)

tf.random.set_seed(42)

optimizer = keras.optimizers.Nadam(learning_rate=0.01)

loss_fn = keras.losses.mean_squared_error

@tf.function
def train(model, n_epochs, batch_size=32, n_readers=5, n_read_threads=5, shuffle_buffer_size=10000, n_parse_threads=5):
    train_set = csv_reader_dataset(train_filepaths, repeat=n_epochs, n_readers=n_readers, n_read_threads=n_read_threads, shuffle_buffer_size=shuffle_buffer_size, n_parse_threads=n_parse_threads, batch_size=batch_size)
    n_steps_per_epoch = len(X_train) // batch_size
    total_steps = n_epochs * n_steps_per_epoch
    global_step = 0
    for X_batch, y_batch in train_set.take(total_steps):
        global_step += 1
        if tf.equal(global_step % 100, 0):
            tf.print('\rGlobal step', global_step, '/', total_steps)
        with tf.GradientTape() as tape:
            y_pred = model(X_batch)
            main_loss = tf.reduce_mean(loss_fn(y_batch, y_pred))
            loss = tf.add_n([main_loss] + model.losses)
        gradients = tape.gradient(loss, model.trainable_variables)
        optimizer.apply_gradients(zip(gradients, model.trainable_variables))

train(model, 5)

# List the public methods of tf.data.Dataset with the first line of their docstrings
for m in dir(tf.data.Dataset):
    if not (m.startswith('_') or m.endswith('_')):
        func = getattr(tf.data.Dataset, m)
        if hasattr(func, '__doc__'):
            print('● {:21s}{}'.format(m + '()', func.__doc__.split('\n')[0]))

'''

● apply() Applies a transformation function to this dataset.

● as_numpy_iterator() Returns an iterator which converts all elements of the dataset to numpy.

● batch() Combines consecutive elements of this dataset into batches.

● bucket_by_sequence_length()A transformation that buckets elements in a `Dataset` by length.

● cache() Caches the elements in this dataset.

● cardinality() Returns the cardinality of the dataset, if known.

● concatenate() Creates a `Dataset` by concatenating the given dataset with this dataset.

● element_spec() The type specification of an element of this dataset.

● enumerate() Enumerates the elements of this dataset.

● filter() Filters this dataset according to `predicate`.

● flat_map() Maps `map_func` across this dataset and flattens the result.

● from_generator() Creates a `Dataset` whose elements are generated by `generator`. (deprecated arguments)

● from_tensor_slices() Creates a `Dataset` whose elements are slices of the given tensors.

● from_tensors() Creates a `Dataset` with a single element, comprising the given tensors.

● get_single_element() Returns the single element of the `dataset` as a nested structure of tensors.

● group_by_window() Groups windows of elements by key and reduces them.

● interleave() Maps `map_func` across this dataset, and interleaves the results.

● list_files() A dataset of all files matching one or more glob patterns.

● map() Maps `map_func` across the elements of this dataset.

● options() Returns the options for this dataset and its inputs.

● padded_batch() Combines consecutive elements of this dataset into padded batches.

● prefetch() Creates a `Dataset` that prefetches elements from this dataset.

● random() Creates a `Dataset` of pseudorandom values.

● range() Creates a `Dataset` of a step-separated range of values.

● reduce() Reduces the input dataset to a single element.

● repeat() Repeats this dataset so each original value is seen `count` times.

● scan() A transformation that scans a function across an input dataset.

● shard() Creates a `Dataset` that includes only 1/`num_shards` of this dataset.

● shuffle() Randomly shuffles the elements of this dataset.

● skip() Creates a `Dataset` that skips `count` elements from this dataset.

● snapshot() API to persist the output of the input dataset.

● take() Creates a `Dataset` with at most `count` elements from this dataset.

● take_while() A transformation that stops dataset iteration based on a `predicate`.

● unbatch() Splits elements of a dataset into multiple elements.

● unique() A transformation that discards duplicate elements of a `Dataset`.

● window() Returns a dataset of "windows".

● with_options() Returns a new `tf.data.Dataset` with the given options set.

● zip() Creates a `Dataset` by zipping together the given datasets.

'''

The TFRecord Binary Format#

with tf.io.TFRecordWriter('my_data.tfrecord') as f:
    f.write(b'This is the first record')
    f.write(b'And this is the second record')

filepaths = ['my_data.tfrecord']
dataset = tf.data.TFRecordDataset(filepaths)
for item in dataset:
    print(item)

'''

tf.Tensor(b'This is the first record', shape=(), dtype=string)

tf.Tensor(b'And this is the second record', shape=(), dtype=string)

'''

filepaths = ['my_test_{}.tfrecord'.format(i) for i in range(5)]
for i, filepath in enumerate(filepaths):
    with tf.io.TFRecordWriter(filepath) as f:
        for j in range(3):
            f.write('File {} record {}'.format(i, j).encode('utf-8'))

dataset = tf.data.TFRecordDataset(filepaths, num_parallel_reads=3)
for item in dataset:
    print(item)

'''

tf.Tensor(b'File 0 record 0', shape=(), dtype=string)

tf.Tensor(b'File 1 record 0', shape=(), dtype=string)

tf.Tensor(b'File 2 record 0', shape=(), dtype=string)

tf.Tensor(b'File 0 record 1', shape=(), dtype=string)

tf.Tensor(b'File 1 record 1', shape=(), dtype=string)

tf.Tensor(b'File 2 record 1', shape=(), dtype=string)

tf.Tensor(b'File 0 record 2', shape=(), dtype=string)

tf.Tensor(b'File 1 record 2', shape=(), dtype=string)

tf.Tensor(b'File 2 record 2', shape=(), dtype=string)

tf.Tensor(b'File 3 record 0', shape=(), dtype=string)

tf.Tensor(b'File 4 record 0', shape=(), dtype=string)

tf.Tensor(b'File 3 record 1', shape=(), dtype=string)

tf.Tensor(b'File 4 record 1', shape=(), dtype=string)

tf.Tensor(b'File 3 record 2', shape=(), dtype=string)

tf.Tensor(b'File 4 record 2', shape=(), dtype=string)

'''
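The order of the records above (files 0 to 2 in round-robin, then files 3 and 4) is what a round-robin interleave over three sources at a time would produce. The following plain-Python model is a simplified sketch of that behavior, with a hypothetical `interleave` function; it takes one record per source per turn and ignores the parallelism of the real implementation.

```python
def interleave(sources, cycle_length):
    """Round-robin over up to `cycle_length` sources at a time, one item per turn."""
    pending = iter(sources)
    slots = [None] * cycle_length  # iterators currently being read
    open_count = 0
    input_exhausted = False
    idx = 0
    while not input_exhausted or open_count > 0:
        if slots[idx] is not None:
            try:
                item = next(slots[idx])
            except StopIteration:
                # This source is finished: free its slot and move on.
                slots[idx] = None
                open_count -= 1
                idx = (idx + 1) % cycle_length
                continue
            yield item
            idx = (idx + 1) % cycle_length
        elif not input_exhausted:
            # Empty slot: open the next source, if any is left.
            try:
                slots[idx] = iter(next(pending))
                open_count += 1
            except StopIteration:
                input_exhausted = True
        else:
            idx = (idx + 1) % cycle_length

files = [['File {} record {}'.format(i, j) for j in range(3)] for i in range(5)]
for record in interleave(files, cycle_length=3):
    print(record)
```

Calling `interleave([[1, 2, 3, 4], [10]], cycle_length=2)` yields 1, 10, 2, 3, 4: once the short source runs out, the tail of the long one is no longer mixed with anything, which is why files of equal length interleave best.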

options = tf.io.TFRecordOptions(compression_type='GZIP')
with tf.io.TFRecordWriter('my_compressed.tfrecord', options) as f:
    f.write(b'This is the first record')
    f.write(b'And this is the second record')

dataset = tf.data.TFRecordDataset(['my_compressed.tfrecord'], compression_type='GZIP')
for item in dataset:
    print(item)

'''

tf.Tensor(b'This is the first record', shape=(), dtype=string)

tf.Tensor(b'And this is the second record', shape=(), dtype=string)

'''

Protocol Buffers#

%%writefile person.proto
syntax = 'proto3';
message Person {
  string name = 1;
  int32 id = 2;
  repeated string email = 3;
}

!python -m grpc_tools.protoc person.proto --python_out=. -I. --descriptor_set_out=person.desc --include_imports

!dir person*

'''
 Volume in drive C is Windows
 Volume Serial Number is 36E0-6044

 Directory of C:\Users\86188\Desktop\Data_Analysis\机器学习实战\机器学习实战项目\第十三章-使用TensorFlow加载和预处理数据

2021/11/18  19:17                92 person.desc
2021/11/18  19:14               112 person.proto
2021/11/18  19:17             2,732 person_pb2.py
               3 File(s)          2,936 bytes
               0 Dir(s)  113,898,012,672 bytes free
'''

from person_pb2 import Person

person = Person(name='A1', id=123, email=['a@b.com'])

print(person)

'''

name: "A1"

id: 123

email: "a@b.com"

'''

person.name # 'A1'

person.name = 'Alice'

person.email[0] # 'a@b.com'

person.email.append('c@d.com')

s = person.SerializeToString()

s # b'\n\x05Alice\x10{\x1a\x07a@b.com\x1a\x07c@d.com'

person2 = Person()

person2.ParseFromString(s)

person == person2 # True
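The serialized bytes shown above can be decoded by hand to see why the format is compact. The sketch below is a partial reader for the protobuf wire format, written in plain Python under the assumption that only varint and length-delimited fields appear (which is the case for `Person`); `read_varint` and `decode_fields` are hypothetical helper names, not part of the protobuf library.

```python
def read_varint(buf, pos):
    """Decode a base-128 varint starting at `pos`; return (value, new_pos)."""
    result = 0
    shift = 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:  # high bit clear: last byte of the varint
            return result, pos
        shift += 7

def decode_fields(buf):
    """Return a list of (field_number, raw_value) pairs found in `buf`."""
    pos = 0
    fields = []
    while pos < len(buf):
        key, pos = read_varint(buf, pos)
        field_number, wire_type = key >> 3, key & 0x07
        if wire_type == 0:    # varint (e.g. int32)
            value, pos = read_varint(buf, pos)
        elif wire_type == 2:  # length-delimited (e.g. string)
            length, pos = read_varint(buf, pos)
            value = buf[pos:pos + length]
            pos += length
        else:
            raise ValueError('wire type {} not handled in this sketch'.format(wire_type))
        fields.append((field_number, value))
    return fields

s = b'\n\x05Alice\x10{\x1a\x07a@b.com\x1a\x07c@d.com'
print(decode_fields(s))
# [(1, b'Alice'), (2, 123), (3, b'a@b.com'), (3, b'c@d.com')]
```

Field names never appear in the bytes, only the field numbers from person.proto, and a repeated field such as `email` is simply written once per value.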

Custom protobuf#

person_tf = tf.io.decode_proto(
    bytes=s,
    message_type='Person',
    field_names=['name', 'id', 'email'],
    output_types=[tf.string, tf.int32, tf.string],
    descriptor_source='person.desc'
)

person_tf.values

TensorFlow Protobufs#

BytesList = tf.train.BytesList

FloatList = tf.train.FloatList

Int64List = tf.train.Int64List

Feature = tf.train.Feature

Features = tf.train.Features

Example = tf.train.Example

person_example = Example(
    features=Features(
        feature={
            'name': Feature(bytes_list=BytesList(value=[b'Alice'])),
            'id': Feature(int64_list=Int64List(value=[123])),
            'emails': Feature(bytes_list=BytesList(value=[b'a@b.com', b'c@d.com']))
        }
    )
)

with tf.io.TFRecordWriter('my_contacts.tfrecord') as f:
    f.write(person_example.SerializeToString())

feature_description = {
    'name': tf.io.FixedLenFeature([], tf.string, default_value=''),
    'id': tf.io.FixedLenFeature([], tf.int64, default_value=0),
    'emails': tf.io.VarLenFeature(tf.string),
}

for serialized_example in tf.data.TFRecordDataset(['my_contacts.tfrecord']):
    parsed_example = tf.io.parse_single_example(serialized_example, feature_description)

parsed_example

'''

{'emails': <tensorflow.python.framework.sparse_tensor.SparseTensor at 0x20d7d358e48>,

'id': <tf.Tensor: shape=(), dtype=int64, numpy=123>,

'name': <tf.Tensor: shape=(), dtype=string, numpy=b'Alice'>}

'''

parsed_example['emails'].values[0] # <tf.Tensor: shape=(), dtype=string, numpy=b'a@b.com'>

tf.sparse.to_dense(parsed_example['emails'], default_value=b'') # <tf.Tensor: shape=(2,), dtype=string, numpy=array([b'a@b.com', b'c@d.com'], dtype=object)>

parsed_example['emails'].values # <tf.Tensor: shape=(2,), dtype=string, numpy=array([b'a@b.com', b'c@d.com'], dtype=object)>

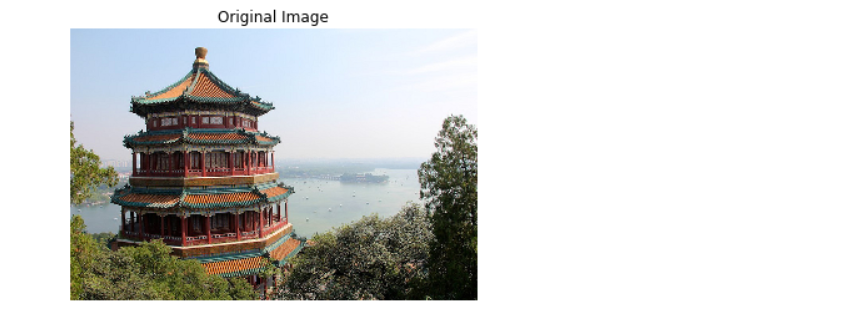

Storing Images in a TFRecord#

from sklearn.datasets import load_sample_images

img = load_sample_images()['images'][0]
plt.imshow(img)
plt.axis('off')
plt.title('Original Image')
plt.show()

data = tf.io.encode_jpeg(img)
example_with_image = Example(features=Features(feature={
    'image': Feature(bytes_list=BytesList(value=[data.numpy()]))
}))
serialized_example = example_with_image.SerializeToString()

feature_description = {'image': tf.io.VarLenFeature(tf.string)}
example_with_image = tf.io.parse_single_example(serialized_example, feature_description)
decoded_img = tf.io.decode_jpeg(example_with_image['image'].values[0])
# Alternatively, decode_image() handles any supported image format (BMP, GIF, JPEG, PNG)
decoded_img = tf.io.decode_image(example_with_image['image'].values[0])

plt.imshow(decoded_img)

plt.title('Decoded Image')

plt.axis('off')

plt.show()

Storing Tensors and Sparse Tensors in a TFRecord#

t = tf.constant([[0., 1.], [2., 3.], [4., 5.]])

s = tf.io.serialize_tensor(t)

s

'''

<tf.Tensor: shape=(), dtype=string, numpy=b'\x08\x01\x12\x08\x12\x02\x08\x03\x12\x02\x08\x02"\x18\x00\x00\x00\x00\x00\x00\x80?\x00\x00\x00@\x00\x00@@\x00\x00\x80@\x00\x00\xa0@'>

'''

tf.io.parse_tensor(s, out_type=tf.float32)

'''

<tf.Tensor: shape=(3, 2), dtype=float32, numpy=

array([[0., 1.],

[2., 3.],

[4., 5.]], dtype=float32)>

'''

serialized_sparse = tf.io.serialize_sparse(parsed_example['emails'])

serialized_sparse

'''

<tf.Tensor: shape=(3,), dtype=string, numpy=

array([b'\x08\t\x12\x08\x12\x02\x08\x02\x12\x02\x08\x01"\x10\x00\x00\x00\x00\x00\x00\x00\x00\x01\x00\x00\x00\x00\x00\x00\x00',

b'\x08\x07\x12\x04\x12\x02\x08\x02"\x10\x07\x07a@b.comc@d.com',

b'\x08\t\x12\x04\x12\x02\x08\x01"\x08\x02\x00\x00\x00\x00\x00\x00\x00'],

dtype=object)>

'''

BytesList(value=serialized_sparse.numpy())

'''

value: "\010\t\022\010\022\002\010\002\022\002\010\001\"\020\000\000\000\000\000\000\000\000\001\000\000\000\000\000\000\000"

value: "\010\007\022\004\022\002\010\002\"\020\007\007a@b.comc@d.com"

value: "\010\t\022\004\022\002\010\001\"\010\002\000\000\000\000\000\000\000"

'''

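# Use the description that matches my_contacts.tfrecord, not the image one defined later
contacts_feature_description = {
    'name': tf.io.FixedLenFeature([], tf.string, default_value=''),
    'id': tf.io.FixedLenFeature([], tf.int64, default_value=0),
    'emails': tf.io.VarLenFeature(tf.string),
}
dataset = tf.data.TFRecordDataset(['my_contacts.tfrecord']).batch(10)
for serialized_examples in dataset:
    parsed_examples = tf.io.parse_example(serialized_examples, contacts_feature_description)

parsed_examples # a dict with the keys 'name', 'id', and 'emails' (the last one a SparseTensor)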

Handling Sequential Data Using SequenceExample#

FeatureList = tf.train.FeatureList
FeatureLists = tf.train.FeatureLists
SequenceExample = tf.train.SequenceExample

context = Features(feature={
    'author_id': Feature(int64_list=Int64List(value=[123])),
    'title': Feature(bytes_list=BytesList(value=[b'A', b'desert', b'place', b'.'])),
    'pub_date': Feature(int64_list=Int64List(value=[1623, 12, 25]))
})

content = [['When', 'shall', 'we', 'three', 'meet', 'again', '?'],
           ['In', 'thunder', ',', 'lightning', ',', 'or', 'in', 'rain', '?']]
comments = [['When', 'the', 'hurlyburly', 's', 'done', '.'],
            ['When', 'the', 'battle', "'s'", 'lost', 'and', 'won', '.']]

def words_to_feature(words):
    # One Feature per sentence: a BytesList holding the UTF-8 encoded words
    return Feature(bytes_list=BytesList(value=[word.encode('utf-8') for word in words]))

content_features = [words_to_feature(sentence) for sentence in content]
comments_features = [words_to_feature(comment) for comment in comments]

sequence_example = SequenceExample(
    context=context,
    feature_lists=FeatureLists(feature_list={
        'content': FeatureList(feature=content_features),
        'comments': FeatureList(feature=comments_features)
    })
)

sequence_example

'''

context {

feature {

key: "author_id"

value {

int64_list {

value: 123

}

}

}

feature {

key: "pub_date"

value {

int64_list {

value: 1623

value: 12

value: 25

}

}

}

feature {

key: "title"

value {

bytes_list {

value: "A"

value: "desert"

value: "place"

value: "."

}

}

}

}

feature_lists {

feature_list {

key: "comments"

value {

feature {

bytes_list {

value: "When"

value: "the"

value: "hurlyburly"

value: "s"

value: "done"

value: "."

}

}

feature {

bytes_list {

value: "When"

value: "the"

value: "battle"

value: "\'s\'"

value: "lost"

value: "and"

value: "won"

value: "."

}

}

}

}

feature_list {

key: "content"

value {

feature {

bytes_list {

value: "When"

value: "shall"

value: "we"

value: "three"

value: "meet"

value: "again"

value: "?"

}

}

feature {

bytes_list {

value: "In"

value: "thunder"

value: ","

value: "lightning"

value: ","

value: "or"

value: "in"

value: "rain"

value: "?"

}

}

}

}

}

'''

serialized_sequence_example = sequence_example.SerializeToString()

context_feature_descriptions = {
    'author_id': tf.io.FixedLenFeature([], tf.int64, default_value=0),
    'title': tf.io.VarLenFeature(tf.string),
    'pub_date': tf.io.FixedLenFeature([3], tf.int64, default_value=[0, 0, 0])
}
sequence_feature_descriptions = {
    'content': tf.io.VarLenFeature(tf.string),
    'comments': tf.io.VarLenFeature(tf.string),
}
parsed_context, parsed_feature_lists = tf.io.parse_single_sequence_example(
    serialized_sequence_example, context_feature_descriptions,
    sequence_feature_descriptions)

parsed_context

'''

{'title': <tensorflow.python.framework.sparse_tensor.SparseTensor at 0x20d7d6c9278>,

'author_id': <tf.Tensor: shape=(), dtype=int64, numpy=123>,

'pub_date': <tf.Tensor: shape=(3,), dtype=int64, numpy=array([1623, 12, 25], dtype=int64)>}

'''

parsed_context['title'].values # <tf.Tensor: shape=(4,), dtype=string, numpy=array([b'A', b'desert', b'place', b'.'], dtype=object)>

parsed_feature_lists

'''

{'comments': <tensorflow.python.framework.sparse_tensor.SparseTensor at 0x20d7d6c94a8>,

'content': <tensorflow.python.framework.sparse_tensor.SparseTensor at 0x20d7d6c95c0>}

'''

print(tf.RaggedTensor.from_sparse(parsed_feature_lists['content']))

'''

<tf.RaggedTensor [[b'When', b'shall', b'we', b'three', b'meet', b'again', b'?'], [b'In', b'thunder', b',', b'lightning', b',', b'or', b'in', b'rain', b'?']]>

'''
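The parsed feature lists come back as sparse tensors, and `tf.RaggedTensor.from_sparse` regroups their values row by row. The plain-Python sketch below illustrates that regrouping on hand-written `(row, column)` indices. `sparse_to_ragged` is a hypothetical helper introduced for illustration, not a TensorFlow function, and the sample indices are shortened versions of the `content` sentences.

```python
def sparse_to_ragged(indices, values, n_rows):
    """Group sparse values by row index, keeping column order within each row."""
    rows = [[] for _ in range(n_rows)]
    # Sorting by (row, column) guarantees values are appended in column order.
    for (row, _col), value in sorted(zip(indices, values)):
        rows[row].append(value)
    return rows

# Hypothetical sparse representation of two sentences of different lengths.
indices = [(0, 0), (0, 1), (0, 2), (1, 0), (1, 1)]
values = [b"When", b"shall", b"we", b"In", b"thunder"]

ragged = sparse_to_ragged(indices, values, n_rows=2)
print(ragged)
```

Each row keeps its own length, which is why a ragged structure suits variable-length sentences better than a padded dense tensor.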

Features API#

import os

import tarfile

import urllib.request

DOWNLOAD_ROOT = "https://raw.githubusercontent.com/ageron/handson-ml2/master/"

HOUSING_PATH = os.path.join("datasets", "housing")

HOUSING_URL = DOWNLOAD_ROOT + "datasets/housing/housing.tgz"

def fetch_housing_data(housing_url=HOUSING_URL, housing_path=HOUSING_PATH):

    os.makedirs(housing_path, exist_ok=True)

    tgz_path = os.path.join(housing_path, "housing.tgz")

    # Uncomment the next line to download the archive if it is not already on disk

    # urllib.request.urlretrieve(housing_url, tgz_path)

    housing_tgz = tarfile.open(tgz_path)

    housing_tgz.extractall(path=housing_path)

    housing_tgz.close()

fetch_housing_data()

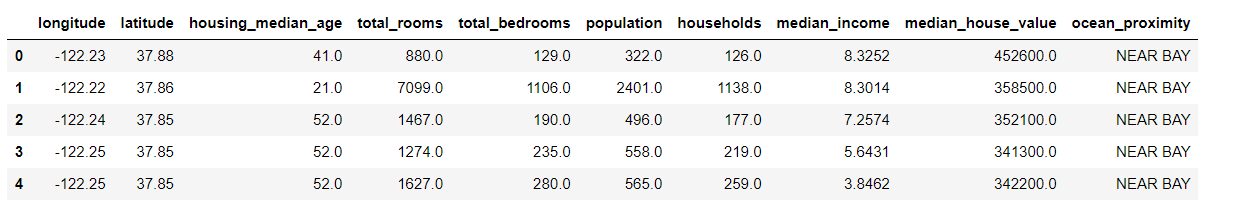

import pandas as pd

def load_housing_data(housing_path=HOUSING_PATH):

    csv_path = os.path.join(housing_path, 'housing.csv')

    return pd.read_csv(csv_path)

housing = load_housing_data()

housing.head()

housing_median_age = tf.feature_column.numeric_column('housing_median_age')

age_mean, age_std = X_mean[1], X_std[1]

housing_median_age = tf.feature_column.numeric_column(

'housing_median_age', normalizer_fn=lambda x: (x - age_mean) / age_std

)
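The `normalizer_fn` above standardizes a feature by subtracting its mean and dividing by its standard deviation. As a minimal plain-Python sketch of that arithmetic (the ages below are made-up sample values, and using the population standard deviation is an assumption about how `X_std` was computed):

```python
from statistics import fmean, pstdev

# Made-up sample values standing in for the housing_median_age column.
ages = [41.0, 21.0, 52.0, 52.0, 30.0]

age_mean = fmean(ages)
age_std = pstdev(ages)  # population standard deviation (assumption)

def normalize(x):
    """Standardize one value: zero mean and unit variance over the sample."""
    return (x - age_mean) / age_std

normalized = [normalize(a) for a in ages]
print(normalized)
```

After this transformation the sample has mean 0 and standard deviation 1, which is what the lambda passed to `numeric_column` is meant to achieve.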

median_income = tf.feature_column.numeric_column('median_income')

bucketized_income = tf.feature_column.bucketized_column(

    median_income, boundaries=[1.5, 3., 4.5, 6.]

)

bucketized_income

'''

BucketizedColumn(source_column=NumericColumn(key='median_income', shape=(1,), default_value=None, dtype=tf.float32, normalizer_fn=None), boundaries=(1.5, 3.0, 4.5, 6.0))

'''
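Bucketizing turns a numeric feature into a category index: four boundaries define five buckets, and a value equal to a boundary falls into the bucket on its right. The sketch below reproduces that rule in plain Python with `bisect`; `bucketize` and `one_hot` are hypothetical helpers written for illustration, not part of TensorFlow.

```python
from bisect import bisect_right

boundaries = [1.5, 3.0, 4.5, 6.0]

def bucketize(value, boundaries):
    """Return the index of the bucket that `value` falls into."""
    # n boundaries define n + 1 buckets; a value equal to a boundary
    # belongs to the bucket on its right, which is what bisect_right gives.
    return bisect_right(boundaries, value)

def one_hot(index, depth):
    """Encode a bucket index as a one-hot list of length `depth`."""
    return [1.0 if i == index else 0.0 for i in range(depth)]

incomes = [3.0, 7.3, 1.0]
bucket_ids = [bucketize(v, boundaries) for v in incomes]
encoded = [one_hot(i, len(boundaries) + 1) for i in bucket_ids]
print(bucket_ids)  # [2, 4, 0]
```

These are the same three incomes that are later fed to `DenseFeatures`, so the one-hot rows can be compared against the first five columns of that output.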

ocean_prox_vocab = ['<1H OCEAN', 'INLAND', 'ISLAND', 'NEAR BAY', 'NEAR OCEAN']

ocean_proximity = tf.feature_column.categorical_column_with_vocabulary_list(

'ocean_proximity', ocean_prox_vocab

)

ocean_proximity

'''

VocabularyListCategoricalColumn(key='ocean_proximity', vocabulary_list=('<1H OCEAN', 'INLAND', 'ISLAND', 'NEAR BAY', 'NEAR OCEAN'), dtype=tf.string, default_value=-1, num_oov_buckets=0)

'''

city_hash = tf.feature_column.categorical_column_with_hash_bucket(

'city', hash_bucket_size=1000)

city_hash # HashedCategoricalColumn(key='city', hash_bucket_size=1000, dtype=tf.string)

bucketized_age = tf.feature_column.bucketized_column(

housing_median_age, boundaries=[-1., -0.5, 0., 1.]

)

age_and_ocean_proximity = tf.feature_column.crossed_column(

[bucketized_age, ocean_proximity], hash_bucket_size=100

)

latitude = tf.feature_column.numeric_column('latitude')

longitude = tf.feature_column.numeric_column('longitude')

bucketized_latitude = tf.feature_column.bucketized_column(

latitude, boundaries=list(np.linspace(32., 42., 20-1)))

bucketized_longitude = tf.feature_column.bucketized_column(

longitude, boundaries=list(np.linspace(-125., -114., 20-1)))

location = tf.feature_column.crossed_column(

[bucketized_latitude, bucketized_longitude], hash_bucket_size=1000)
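A crossed column combines several categorical features into one and hashes each combination into a fixed number of buckets. The sketch below mimics the `location` cross in plain Python. It is only an illustration: SHA-256 stands in for TensorFlow's internal hash function, so the bucket indices will not match what `crossed_column` produces, and `linspace` and `crossed_bucket` are hypothetical helpers.

```python
import hashlib
from bisect import bisect_right

def linspace(start, stop, num):
    """Evenly spaced values from start to stop inclusive (like np.linspace)."""
    step = (stop - start) / (num - 1)
    return [start + i * step for i in range(num)]

# 19 boundaries define 20 buckets along each axis.
lat_boundaries = linspace(32.0, 42.0, 19)
lon_boundaries = linspace(-125.0, -114.0, 19)

def crossed_bucket(lat, lon, hash_bucket_size=1000):
    """Bucketize both coordinates, then hash the pair into one bucket index."""
    lat_id = bisect_right(lat_boundaries, lat)
    lon_id = bisect_right(lon_boundaries, lon)
    key = f"{lat_id}_X_{lon_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()  # stand-in hash (assumption)
    return lat_id, lon_id, int.from_bytes(digest[:8], "big") % hash_bucket_size

lat_id, lon_id, location_id = crossed_bucket(37.88, -122.23)
print(lat_id, lon_id, location_id)
```

With 20 x 20 = 400 possible cells and 1000 hash buckets, collisions between distinct cells are possible but relatively rare.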

ocean_proximity_one_hot = tf.feature_column.indicator_column(ocean_proximity)

ocean_proximity_embed = tf.feature_column.embedding_column(ocean_proximity, dimension=2)
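The two encodings above differ in what they hand to the network: an indicator column yields a one-hot vector as long as the vocabulary, while an embedding column looks up a short dense vector per category. A rough plain-Python sketch of both lookups follows. The random matrix is only a stand-in for the weights that TensorFlow would learn during training, and the helper names are hypothetical.

```python
import random

vocab = ['<1H OCEAN', 'INLAND', 'ISLAND', 'NEAR BAY', 'NEAR OCEAN']
index_of = {category: i for i, category in enumerate(vocab)}

def one_hot(category):
    """Indicator encoding: a vector with a single 1 at the category's index."""
    return [1.0 if i == index_of[category] else 0.0 for i in range(len(vocab))]

# Embedding encoding: one row of 2 values per category.
# Random initial values stand in for trainable weights (assumption).
rng = random.Random(42)
embedding_matrix = [[rng.uniform(-1.0, 1.0) for _ in range(2)] for _ in vocab]

def embed(category):
    """Embedding lookup: return the row of the matrix for this category."""
    return embedding_matrix[index_of[category]]

print(one_hot('INLAND'))
print(embed('INLAND'))
```

The one-hot vector grows with the vocabulary, whereas the embedding size (`dimension=2` here) is chosen independently of it.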

Parsing with Feature Columns#

median_house_value = tf.feature_column.numeric_column('median_house_value')

columns = [housing_median_age, median_house_value]

feature_descriptions = tf.feature_column.make_parse_example_spec(columns)

feature_descriptions

'''

{'housing_median_age': FixedLenFeature(shape=(1,), dtype=tf.float32, default_value=None),

'median_house_value': FixedLenFeature(shape=(1,), dtype=tf.float32, default_value=None)}

'''

with tf.io.TFRecordWriter('my_data_with_features.tfrecords') as f:

    for x, y in zip(X_train[:, 1:2], y_train):

        example = Example(features=Features(feature={

            'housing_median_age': Feature(float_list=FloatList(value=[x])),

            'median_house_value': Feature(float_list=FloatList(value=[y]))

        }))

        f.write(example.SerializeToString())

keras.backend.clear_session()

np.random.seed(42)

tf.random.set_seed(42)

def parse_examples(serialized_examples):

    examples = tf.io.parse_example(serialized_examples, feature_descriptions)

    targets = examples.pop('median_house_value')

    return examples, targets

batch_size = 32

dataset = tf.data.TFRecordDataset(['my_data_with_features.tfrecords'])

dataset = dataset.repeat().shuffle(10000).batch(batch_size).map(parse_examples)

columns_without_target = columns[:-1]

model = keras.models.Sequential([

keras.layers.DenseFeatures(feature_columns=columns_without_target),

keras.layers.Dense(1)

])

model.compile(loss='mse', optimizer=keras.optimizers.SGD(learning_rate=1e-3),

              metrics=['mae'])  # MAE instead of accuracy: accuracy is not meaningful for a regression target

model.fit(dataset, steps_per_epoch=len(X_train) // batch_size, epochs=5)

some_columns = [ocean_proximity_embed, bucketized_income]

dense_features = keras.layers.DenseFeatures(some_columns)

dense_features({

'ocean_proximity': [['NEAR OCEAN'], ['INLAND'], ['INLAND']],

'median_income': [[3.], [7.3], [1.]]

})

'''

<tf.Tensor: shape=(3, 7), dtype=float32, numpy=

array([[ 0. , 0. , 1. , 0. , 0. ,

0.42921174, -0.04258468],

[ 0. , 0. , 0. , 0. , 1. ,

0.90720206, 0.5746765 ],

[ 1. , 0. , 0. , 0. , 0. ,

0.90720206, 0.5746765 ]], dtype=float32)>

'''

TF Transform#

try:

    import tensorflow_transform as tft

    def preprocess(inputs):

        median_age = inputs['housing_median_age']

        ocean_proximity = inputs['ocean_proximity']

        # scale_to_z_score already subtracts the mean before dividing by the standard deviation

        standardized_age = tft.scale_to_z_score(median_age)

        ocean_proximity_id = tft.compute_and_apply_vocabulary(ocean_proximity)

        return {

            'standardized_median_age': standardized_age,

            'ocean_proximity_id': ocean_proximity_id

        }

except ImportError as e:

    pass  # tensorflow_transform is optional; skip this example if it is not installed

TensorFlow Datasets#

import tensorflow_datasets as tfds

datasets = tfds.load(name='mnist')

mnist_train, mnist_test = datasets['train'], datasets['test']

print(tfds.list_builders())

'''

['abstract_reasoning', 'accentdb', 'aeslc', 'aflw2k3d', 'ag_news_subset', 'ai2_arc', 'ai2_arc_with_ir', 'amazon_us_reviews', 'anli', 'arc', 'bair_robot_pushing_small', 'bccd', 'beans', 'big_patent', 'bigearthnet', 'billsum', 'binarized_mnist', 'binary_alpha_digits', 'blimp', 'bool_q', 'c4', 'caltech101', 'caltech_birds2010', 'caltech_birds2011', 'cars196', 'cassava', 'cats_vs_dogs', 'celeb_a', 'celeb_a_hq', 'cfq', 'cherry_blossoms', 'chexpert', 'cifar10', 'cifar100', 'cifar10_1', 'cifar10_corrupted', 'citrus_leaves', 'cityscapes', 'civil_comments', 'clevr', 'clic', 'clinc_oos', 'cmaterdb', 'cnn_dailymail', 'coco', 'coco_captions', 'coil100', 'colorectal_histology', 'colorectal_histology_large', 'common_voice', 'coqa', 'cos_e', 'cosmos_qa', 'covid19', 'covid19sum', 'crema_d', 'curated_breast_imaging_ddsm', 'cycle_gan', 'd4rl_adroit_door', 'd4rl_adroit_hammer', 'd4rl_adroit_pen', 'd4rl_adroit_relocate', 'd4rl_mujoco_ant', 'd4rl_mujoco_halfcheetah', 'd4rl_mujoco_hopper', 'd4rl_mujoco_walker2d', 'dart', 'davis', 'deep_weeds', 'definite_pronoun_resolution', 'dementiabank', 'diabetic_retinopathy_detection', 'div2k', 'dmlab', 'doc_nli', 'dolphin_number_word', 'downsampled_imagenet', 'drop', 'dsprites', 'dtd', 'duke_ultrasound', 'e2e_cleaned', 'efron_morris75', 'emnist', 'eraser_multi_rc', 'esnli', 'eurosat', 'fashion_mnist', 'flic', 'flores', 'food101', 'forest_fires', 'fuss', 'gap', 'geirhos_conflict_stimuli', 'gem', 'genomics_ood', 'german_credit_numeric', 'gigaword', 'glue', 'goemotions', 'gpt3', 'gref', 'groove', 'gtzan', 'gtzan_music_speech', 'hellaswag', 'higgs', 'horses_or_humans', 'howell', 'i_naturalist2017', 'imagenet2012', 'imagenet2012_corrupted', 'imagenet2012_multilabel', 'imagenet2012_real', 'imagenet2012_subset', 'imagenet_a', 'imagenet_r', 'imagenet_resized', 'imagenet_v2', 'imagenette', 'imagewang', 'imdb_reviews', 'irc_disentanglement', 'iris', 'kddcup99', 'kitti', 'kmnist', 'lambada', 'lfw', 'librispeech', 'librispeech_lm', 'libritts', 'ljspeech', 
'lm1b', 'lost_and_found', 'lsun', 'lvis', 'malaria', 'math_dataset', 'mctaco', 'mlqa', 'mnist', 'mnist_corrupted', 'movie_lens', 'movie_rationales', 'movielens', 'moving_mnist', 'multi_news', 'multi_nli', 'multi_nli_mismatch', 'natural_questions', 'natural_questions_open', 'newsroom', 'nsynth', 'nyu_depth_v2', 'ogbg_molpcba', 'omniglot', 'open_images_challenge2019_detection', 'open_images_v4', 'openbookqa', 'opinion_abstracts', 'opinosis', 'opus', 'oxford_flowers102', 'oxford_iiit_pet', 'para_crawl', 'patch_camelyon', 'paws_wiki', 'paws_x_wiki', 'pet_finder', 'pg19', 'piqa', 'places365_small', 'plant_leaves', 'plant_village', 'plantae_k', 'protein_net', 'qa4mre', 'qasc', 'quac', 'quickdraw_bitmap', 'race', 'radon', 'reddit', 'reddit_disentanglement', 'reddit_tifu', 'ref_coco', 'resisc45', 'rlu_atari', 'rlu_dmlab_explore_object_rewards_few', 'rlu_dmlab_explore_object_rewards_many', 'rlu_dmlab_rooms_select_nonmatching_object', 'rlu_dmlab_rooms_watermaze', 'rlu_dmlab_seekavoid_arena01', 'robonet', 'rock_paper_scissors', 'rock_you', 's3o4d', 'salient_span_wikipedia', 'samsum', 'savee', 'scan', 'scene_parse150', 'schema_guided_dialogue', 'scicite', 'scientific_papers', 'sentiment140', 'shapes3d', 'siscore', 'smallnorb', 'snli', 'so2sat', 'speech_commands', 'spoken_digit', 'squad', 'stanford_dogs', 'stanford_online_products', 'star_cfq', 'starcraft_video', 'stl10', 'story_cloze', 'summscreen', 'sun397', 'super_glue', 'svhn_cropped', 'symmetric_solids', 'tao', 'ted_hrlr_translate', 'ted_multi_translate', 'tedlium', 'tf_flowers', 'the300w_lp', 'tiny_shakespeare', 'titanic', 'trec', 'trivia_qa', 'tydi_qa', 'uc_merced', 'ucf101', 'vctk', 'visual_domain_decathlon', 'voc', 'voxceleb', 'voxforge', 'waymo_open_dataset', 'web_nlg', 'web_questions', 'wider_face', 'wiki40b', 'wiki_bio', 'wiki_table_questions', 'wiki_table_text', 'wikiann', 'wikihow', 'wikipedia', 'wikipedia_toxicity_subtypes', 'wine_quality', 'winogrande', 'wmt13_translate', 'wmt14_translate', 'wmt15_translate', 
'wmt16_translate', 'wmt17_translate', 'wmt18_translate', 'wmt19_translate', 'wmt_t2t_translate', 'wmt_translate', 'wordnet', 'wsc273', 'xnli', 'xquad', 'xsum', 'xtreme_pawsx', 'xtreme_xnli', 'yelp_polarity_reviews', 'yes_no', 'youtube_vis']

'''

plt.figure(figsize=(6, 3))

mnist_train = mnist_train.repeat(5).batch(32).prefetch(1)

for item in mnist_train:

    images = item['image']

    labels = item['label']

    for index in range(5):

        plt.subplot(1, 5, index + 1)

        image = images[index, ..., 0]

        label = labels[index].numpy()

        plt.imshow(image, cmap='binary')

        plt.title(label)

        plt.axis('off')

    break  # only show part of the first batch

datasets = tfds.load(name='mnist')

mnist_train, mnist_test = datasets['train'], datasets['test']

mnist_train = mnist_train.repeat(5).batch(32)

mnist_train = mnist_train.map(lambda items: (items['image'], items['label']))

mnist_train = mnist_train.prefetch(1)

for images, labels in mnist_train.take(1):

    print(images.shape)

    print(labels.numpy())

'''

(32, 28, 28, 1)

[4 1 0 7 8 1 2 7 1 6 6 4 7 7 3 3 7 9 9 1 0 6 6 9 9 4 8 9 4 7 3 3]

'''
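The pipeline above calls `repeat(5)` before `batch(32)`, so the stream of examples is concatenated across epochs first and only then cut into batches. The generator-based sketch below imitates that ordering on a toy list of 10 items with a batch size of 4. `repeat_dataset` and `batch_dataset` are hypothetical stand-ins for the corresponding `tf.data` methods.

```python
from itertools import chain, islice, repeat

def repeat_dataset(items, count):
    """Yield every item of `items`, `count` times in a row."""
    return chain.from_iterable(repeat(items, count))

def batch_dataset(iterable, batch_size):
    """Group consecutive items into lists of at most `batch_size` elements."""
    iterator = iter(iterable)
    while True:
        chunk = list(islice(iterator, batch_size))
        if not chunk:
            return
        yield chunk

toy_items = list(range(10))
batches = list(batch_dataset(repeat_dataset(toy_items, 5), 4))
print(len(batches))  # 13
print(batches[2])    # [8, 9, 0, 1]
```

The third batch straddles the boundary between the first and second pass over the data, and only the very last batch is short. Batching before repeating would instead produce a short batch at the end of every pass.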

keras.backend.clear_session()

np.random.seed(42)

tf.random.set_seed(42)

datasets = tfds.load(name='mnist', batch_size=32, as_supervised=True)

mnist_train = datasets['train'].repeat().prefetch(1)

model = keras.models.Sequential([

keras.layers.Flatten(input_shape=[28, 28, 1]),

keras.layers.Lambda(lambda images: tf.cast(images, tf.float32)),

keras.layers.Dense(10, activation='softmax')

])

model.compile(loss='sparse_categorical_crossentropy', optimizer=keras.optimizers.SGD(learning_rate=1e-3), metrics=['accuracy'])

model.fit(mnist_train, steps_per_epoch=60000 // 32, epochs=5)

TensorFlow Hub#

keras.backend.clear_session()

np.random.seed(42)

tf.random.set_seed(42)

# import tensorflow_hub as hub

# hub_layer = hub.KerasLayer("https://tfhub.dev/google/nnlm-en-dim50/2",

# output_shape=[50], input_shape=[], dtype=tf.string)

# model = keras.Sequential()

# model.add(hub_layer)

# model.add(keras.layers.Dense(16, activation='relu'))

# model.add(keras.layers.Dense(1, activation='sigmoid'))

# model.summary()

# sentences = tf.constant(["It was a great movie", "The actors were amazing"])

# embeddings = hub_layer(sentences)

# embeddings

TextVectorization layer#

def preprocess(X_batch, n_words=50):

    shape = tf.shape(X_batch) * tf.constant([1, 0]) + tf.constant([0, n_words])

    Z = tf.strings.substr(X_batch, 0, 300)

    Z = tf.strings.lower(Z)

    Z = tf.strings.regex_replace(Z, b'<br\\s*/?>', b' ')

    Z = tf.strings.regex_replace(Z, b'[^a-z]', b' ')

    Z = tf.strings.split(Z)

    return Z.to_tensor(shape=shape, default_value=b'<pad>')

X_example = tf.constant(["It's a great, great movie! I loved it.", "It was terrible, run away!!!"])

preprocess(X_example)

'''

<tf.Tensor: shape=(2, 50), dtype=string, numpy=

array([[b'it', b's', b'a', b'great', b'great', b'movie', b'i', b'loved',

b'it', b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>',

b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>',

b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>',

b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>',

b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>',

b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>',

b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>'],

[b'it', b'was', b'terrible', b'run', b'away', b'<pad>', b'<pad>',

b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>',

b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>',

b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>',

b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>',

b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>',

b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>',

b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>', b'<pad>',

b'<pad>']], dtype=object)>

'''
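The `preprocess` function truncates each review, lowercases it, replaces `<br />` tags and non-letters with spaces, splits on whitespace, and pads or crops every review to `n_words` tokens. The same steps can be sketched with the standard library alone. `preprocess_py` is a hypothetical name, and it slices characters rather than bytes, which gives the same result for ASCII text.

```python
import re

def preprocess_py(reviews, n_words=50, pad="<pad>"):
    """Plain-Python version of the cleaning, splitting and padding steps."""
    batch = []
    for review in reviews:
        text = review[:300].lower()             # keep the first 300 characters
        text = re.sub(r"<br\s*/?>", " ", text)  # drop HTML line breaks
        text = re.sub(r"[^a-z]", " ", text)     # keep letters only
        words = text.split()[:n_words]          # crop long reviews
        batch.append(words + [pad] * (n_words - len(words)))  # pad short ones
    return batch

X_example_py = ["It's a great, great movie! I loved it.",
                "It was terrible, run away!!!"]
tokens = preprocess_py(X_example_py)
print(tokens[0][:10])
```

Every row has exactly `n_words` entries, which is what allows the batch to be stored as a regular dense tensor.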

from collections import Counter  # counts how many times each element occurs

def get_vocabulary(data_sample, max_size=1000):

    preprocessed_reviews = preprocess(data_sample).numpy()

    counter = Counter()

    for words in preprocessed_reviews:

        for word in words:

            if word != b'<pad>':

                counter[word] += 1

    return [b'<pad>'] + [word for word, count in counter.most_common(max_size)]

get_vocabulary(X_example)

'''

[b'<pad>',

b'it',

b'great',

b's',

b'a',

b'movie',

b'i',

b'loved',

b'was',

b'terrible',

b'run',

b'away']

'''

class TextVectorization(keras.layers.Layer):

    def __init__(self, max_vocabulary_size=1000, n_oov_buckets=100, dtype=tf.string, **kwargs):

        super().__init__(dtype=dtype, **kwargs)

        self.max_vocabulary_size = max_vocabulary_size

        self.n_oov_buckets = n_oov_buckets

    def adapt(self, data_sample):

        self.vocab = get_vocabulary(data_sample, self.max_vocabulary_size)

        words = tf.constant(self.vocab)

        word_ids = tf.range(len(self.vocab), dtype=tf.int64)

        vocab_init = tf.lookup.KeyValueTensorInitializer(words, word_ids)

        self.table = tf.lookup.StaticVocabularyTable(vocab_init, self.n_oov_buckets)

    def call(self, inputs):

        preprocessed_inputs = preprocess(inputs)

        return self.table.lookup(preprocessed_inputs)

text_vectorization = TextVectorization()

text_vectorization.adapt(X_example)

text_vectorization(X_example)

'''

<tf.Tensor: shape=(2, 50), dtype=int64, numpy=

array([[ 1, 3, 4, 2, 2, 5, 6, 7, 1, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 1, 8, 9, 10, 11, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0]], dtype=int64)>

'''
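The lookup table maps every known word to its position in the vocabulary and sends unknown words to one of the out-of-vocabulary (OOV) buckets that come right after the vocabulary. A plain-Python sketch of that idea follows. SHA-256 is only a stand-in for the hash that `StaticVocabularyTable` uses internally, so OOV ids will differ from TensorFlow's, and `lookup` is a hypothetical helper.

```python
import hashlib

vocab = ["<pad>", "it", "great", "s", "a", "movie", "i", "loved",
         "was", "terrible", "run", "away"]
word_to_id = {word: i for i, word in enumerate(vocab)}
n_oov_buckets = 100

def lookup(word):
    """Return the vocabulary id of `word`, or an OOV bucket id if unknown."""
    if word in word_to_id:
        return word_to_id[word]
    digest = hashlib.sha256(word.encode("utf-8")).digest()  # stand-in hash
    # OOV ids start right after the last vocabulary id.
    return len(vocab) + int.from_bytes(digest[:8], "big") % n_oov_buckets

ids = [lookup(w) for w in ["it", "was", "terrible", "run", "away"]]
oov_id = lookup("awesome")
print(ids)     # [1, 8, 9, 10, 11]
print(oov_id)  # some id between 12 and 111
```

Two different unknown words may share a bucket, which is the price paid for keeping the id range fixed at `len(vocab) + n_oov_buckets`.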

from pathlib import Path

DOWNLOAD_ROOT = "http://ai.stanford.edu/~amaas/data/sentiment/"

FILENAME = "aclImdb_v1.tar.gz"

filepath = keras.utils.get_file(FILENAME, DOWNLOAD_ROOT + FILENAME, extract=True)

path = Path(filepath).parent / "aclImdb"

path # WindowsPath('C:/Users/86188/.keras/datasets/aclImdb')

for name, subdirs, files in os.walk(path):

    indent = len(Path(name).parts) - len(path.parts)

    print(" " * indent + Path(name).parts[-1] + os.sep)

    for index, filename in enumerate(sorted(files)):

        if index == 3:

            print(' ' * (indent + 1) + '...')

            break

        print(' ' * (indent + 1) + filename)

'''

aclImdb\

README

imdb.vocab

imdbEr.txt

test\

labeledBow.feat

urls_neg.txt

urls_pos.txt

neg\

0_2.txt

10000_4.txt

10001_1.txt

...

pos\

0_10.txt

10000_7.txt

10001_9.txt

...

train\

labeledBow.feat

unsupBow.feat

urls_neg.txt

...

neg\

0_3.txt

10000_4.txt

10001_4.txt

...

pos\

0_9.txt

10000_8.txt

10001_10.txt

...

unsup\

0_0.txt

10000_0.txt

10001_0.txt

...

'''

def review_paths(dirpath):

    return [str(path) for path in dirpath.glob('*.txt')]

train_pos = review_paths(path / 'train' / 'pos')

train_neg = review_paths(path / 'train' / 'neg')

test_valid_pos = review_paths(path / 'test' / 'pos')

test_valid_neg = review_paths(path / 'test' / 'neg')

len(train_pos), len(train_neg), len(test_valid_pos), len(test_valid_neg) # (12500, 12500, 12500, 12500)

np.random.shuffle(test_valid_pos)

test_pos = test_valid_pos[:5000]

test_neg = test_valid_neg[:5000]

valid_pos = test_valid_pos[5000:]

valid_neg = test_valid_neg[5000:]

def imdb_dataset(filepaths_positive, filepaths_negative):

    reviews = []

    labels = []

    for filepaths, label in ((filepaths_negative, 0), (filepaths_positive, 1)):

        for filepath in filepaths:

            with open(filepath, encoding='utf-8') as review_file:

                reviews.append(review_file.read())

            labels.append(label)

    return tf.data.Dataset.from_tensor_slices((tf.constant(reviews), tf.constant(labels)))

for X, y in imdb_dataset(train_pos, train_neg).take(3):

    print(X)

    print(y)

    print()

'''

tf.Tensor(b"Story of a man who has unnatural feelings for a pig. Starts out with a opening scene that is a terrific example of absurd comedy. A formal orchestra audience is turned into an insane, violent mob by the crazy chantings of it's singers. Unfortunately it stays absurd the WHOLE time with no general narrative eventually making it just too off putting. Even those from the era should be turned off. The cryptic dialogue would make Shakespeare seem easy to a third grader. On a technical level it's better than you might think with some good cinematography by future great Vilmos Zsigmond. Future stars Sally Kirkland and Frederic Forrest can be seen briefly.", shape=(), dtype=string)

tf.Tensor(0, shape=(), dtype=int32)

tf.Tensor(b"Airport '77 starts as a brand new luxury 747 plane is loaded up with valuable paintings & such belonging to rich businessman Philip Stevens (James Stewart) who is flying them & a bunch of VIP's to his estate in preparation of it being opened to the public as a museum, also on board is Stevens daughter Julie (Kathleen Quinlan) & her son. The luxury jetliner takes off as planned but mid-air the plane is hi-jacked by the co-pilot Chambers (Robert Foxworth) & his two accomplice's Banker (Monte Markham) & Wilson (Michael Pataki) who knock the passengers & crew out with sleeping gas, they plan to steal the valuable cargo & land on a disused plane strip on an isolated island but while making his descent Chambers almost hits an oil rig in the Ocean & loses control of the plane sending it crashing into the sea where it sinks to the bottom right bang in the middle of the Bermuda Triangle. With air in short supply, water leaking in & having flown over 200 miles off course the problems mount for the survivor's as they await help with time fast running out...<br /><br />Also known under the slightly different tile Airport 1977 this second sequel to the smash-hit disaster thriller Airport (1970) was directed by Jerry Jameson & while once again like it's predecessors I can't say Airport '77 is any sort of forgotten classic it is entertaining although not necessarily for the right reasons. Out of the three Airport films I have seen so far I actually liked this one the best, just. It has my favourite plot of the three with a nice mid-air hi-jacking & then the crashing (didn't he see the oil rig?) 
& sinking of the 747 (maybe the makers were trying to cross the original Airport with another popular disaster flick of the period The Poseidon Adventure (1972)) & submerged is where it stays until the end with a stark dilemma facing those trapped inside, either suffocate when the air runs out or drown as the 747 floods or if any of the doors are opened & it's a decent idea that could have made for a great little disaster flick but bad unsympathetic character's, dull dialogue, lethargic set-pieces & a real lack of danger or suspense or tension means this is a missed opportunity. While the rather sluggish plot keeps one entertained for 108 odd minutes not that much happens after the plane sinks & there's not as much urgency as I thought there should have been. Even when the Navy become involved things don't pick up that much with a few shots of huge ships & helicopters flying about but there's just something lacking here. George Kennedy as the jinxed airline worker Joe Patroni is back but only gets a couple of scenes & barely even says anything preferring to just look worried in the background.<br /><br />The home video & theatrical version of Airport '77 run 108 minutes while the US TV versions add an extra hour of footage including a new opening credits sequence, many more scenes with George Kennedy as Patroni, flashbacks to flesh out character's, longer rescue scenes & the discovery or another couple of dead bodies including the navigator. While I would like to see this extra footage I am not sure I could sit through a near three hour cut of Airport '77. As expected the film has dated badly with horrible fashions & interior design choices, I will say no more other than the toy plane model effects aren't great either. Along with the other two Airport sequels this takes pride of place in the Razzie Award's Hall of Shame although I can think of lots of worse films than this so I reckon that's a little harsh. 
The action scenes are a little dull unfortunately, the pace is slow & not much excitement or tension is generated which is a shame as I reckon this could have been a pretty good film if made properly.<br /><br />The production values are alright if nothing spectacular. The acting isn't great, two time Oscar winner Jack Lemmon has said since it was a mistake to star in this, one time Oscar winner James Stewart looks old & frail, also one time Oscar winner Lee Grant looks drunk while Sir Christopher Lee is given little to do & there are plenty of other familiar faces to look out for too.<br /><br />Airport '77 is the most disaster orientated of the three Airport films so far & I liked the ideas behind it even if they were a bit silly, the production & bland direction doesn't help though & a film about a sunken plane just shouldn't be this boring or lethargic. Followed by The Concorde ... Airport '79 (1979).", shape=(), dtype=string)

tf.Tensor(0, shape=(), dtype=int32)

tf.Tensor(b"This film lacked something I couldn't put my finger on at first: charisma on the part of the leading actress. This inevitably translated to lack of chemistry when she shared the screen with her leading man. Even the romantic scenes came across as being merely the actors at play. It could very well have been the director who miscalculated what he needed from the actors. I just don't know.<br /><br />But could it have been the screenplay? Just exactly who was the chef in love with? He seemed more enamored of his culinary skills and restaurant, and ultimately of himself and his youthful exploits, than of anybody or anything else. He never convinced me he was in love with the princess.<br /><br />I was disappointed in this movie. But, don't forget it was nominated for an Oscar, so judge for yourself.", shape=(), dtype=string)

tf.Tensor(0, shape=(), dtype=int32)

'''

def imdb_dataset(filepaths_positive, filepaths_negative, n_read_threads=5):

    dataset_neg = tf.data.TextLineDataset(filepaths_negative, num_parallel_reads=n_read_threads)

    dataset_neg = dataset_neg.map(lambda review: (review, 0))

    dataset_pos = tf.data.TextLineDataset(filepaths_positive, num_parallel_reads=n_read_threads)

    dataset_pos = dataset_pos.map(lambda review: (review, 1))

    return tf.data.Dataset.concatenate(dataset_pos, dataset_neg)

batch_size = 32

train_set = imdb_dataset(train_pos, train_neg).shuffle(25000).batch(batch_size).prefetch(1)

valid_set = imdb_dataset(valid_pos, valid_neg).batch(batch_size).prefetch(1)

test_set = imdb_dataset(test_pos, test_neg).batch(batch_size).prefetch(1)

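# Assumed step (not shown in the original notes): re-create the layer and adapt it on the training reviews,

# which is what the IMDB-based ids and vocabulary below imply

max_vocabulary_size = 1000

n_oov_buckets = 100

sample_review_batches = train_set.map(lambda review, label: review)

sample_reviews = np.concatenate(list(sample_review_batches.as_numpy_iterator()), axis=0)

text_vectorization = TextVectorization(max_vocabulary_size, n_oov_buckets, input_shape=[])

text_vectorization.adapt(sample_reviews)

text_vectorization(X_example)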

'''

<tf.Tensor: shape=(2, 50), dtype=int64, numpy=

array([[ 9, 14, 2, 64, 64, 12, 5, 256, 9, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],

[ 9, 13, 269, 532, 334, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]],

dtype=int64)>

'''

text_vectorization.vocab[:10] # [b'<pad>', b'the', b'a', b'of', b'and', b'i', b'to', b'is', b'this', b'it']

simple_example = tf.constant([[1, 3, 1, 0, 0], [2, 2, 0, 0, 0]])

tf.reduce_sum(tf.one_hot(simple_example, 4), axis=1)

'''

<tf.Tensor: shape=(2, 4), dtype=float32, numpy=

array([[2., 2., 0., 1.],

[3., 0., 2., 0.]], dtype=float32)>

'''

Bag-of-words model#

class BagofWords(keras.layers.Layer):

    def __init__(self, n_tokens, dtype=tf.float32, **kwargs):

        super().__init__(dtype=dtype, **kwargs)

        self.n_tokens = n_tokens

    def call(self, inputs):

        one_hot = tf.one_hot(inputs, self.n_tokens)

        return tf.reduce_sum(one_hot, axis=1)[:, 1:]

bag_of_words = BagofWords(n_tokens=4)

bag_of_words(simple_example)

'''

<tf.Tensor: shape=(2, 3), dtype=float32, numpy=

array([[2., 0., 1.],

[0., 2., 0.]], dtype=float32)>

'''
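The layer above counts how often each token id occurs in a review and then drops column 0, which holds the count of `<pad>` tokens. The same counting can be sketched with `collections.Counter`. `bag_of_words_py` is a hypothetical helper that works on plain lists of ids.

```python
from collections import Counter

def bag_of_words_py(token_ids, n_tokens):
    """Count occurrences of ids 1..n_tokens-1, ignoring id 0 (<pad>)."""
    counts = Counter(token_ids)
    return [float(counts[token]) for token in range(1, n_tokens)]

simple_example_py = [[1, 3, 1, 0, 0], [2, 2, 0, 0, 0]]
bags = [bag_of_words_py(row, n_tokens=4) for row in simple_example_py]
print(bags)  # [[2.0, 0.0, 1.0], [0.0, 2.0, 0.0]]
```

Word order is discarded entirely: two reviews containing the same words in a different order give the same vector.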

n_tokens = max_vocabulary_size + n_oov_buckets + 1 # add 1 for <pad>

bag_of_words = BagofWords(n_tokens)

model = keras.models.Sequential([

text_vectorization,

bag_of_words,

keras.layers.Dense(100, activation='relu'),

keras.layers.Dense(1, activation='sigmoid')

])

model.compile(loss='binary_crossentropy', optimizer='nadam', metrics=['accuracy'])

model.fit(train_set, epochs=5, validation_data=valid_set)

Replacing the bag of words with embeddings#

To compute the mean embedding for each review and multiply it by the square root of the number of words in that review, we need a small function. For each sentence, this function must compute $M \times \sqrt{N}$, where $M$ is the mean of all the word embeddings in the sentence (excluding padding tokens) and $N$ is the number of words in the sentence (also excluding padding tokens). We can rewrite $M$ as $\dfrac{S}{N}$, where $S$ is the sum of all word embeddings (it does not matter whether we include the padding tokens in this sum, since their representation is a zero vector). So the function must return $M \times \sqrt{N} = \dfrac{S}{N} \times \sqrt{N} = \dfrac{S}{\sqrt{N}}$.

def compute_mean_embedding(inputs):

    not_pad = tf.math.count_nonzero(inputs, axis=-1)

    n_words = tf.math.count_nonzero(not_pad, axis=-1, keepdims=True)

    sqrt_n_words = tf.math.sqrt(tf.cast(n_words, tf.float32))

    return tf.reduce_sum(inputs, axis=1) / sqrt_n_words

another_example = tf.constant([[[1., 2., 3.], [4., 5., 0.], [0., 0., 0.]],

[[6., 0., 0.], [0., 0., 0.], [0., 0., 0.]]])

compute_mean_embedding(another_example)

'''

<tf.Tensor: shape=(2, 3), dtype=float32, numpy=

array([[3.535534 , 4.9497476, 2.1213205],

[6. , 0. , 0. ]], dtype=float32)>

'''

tf.reduce_mean(another_example[0:1, :2], axis=1) * tf.sqrt(2.)

'''

<tf.Tensor: shape=(1, 3), dtype=float32, numpy=array([[3.535534 , 4.9497476, 2.1213202]], dtype=float32)>

'''

tf.reduce_mean(another_example[1:2, :1], axis=1) * tf.sqrt(1.) # <tf.Tensor: shape=(1, 3), dtype=float32, numpy=array([[6., 0., 0.]], dtype=float32)>
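The checks above confirm that dividing the sum of the word embeddings by the square root of the word count equals the mean embedding multiplied by that square root. The same arithmetic can be written out in plain Python. `mean_embedding_times_sqrt_n` is a hypothetical helper, and it assumes each sentence contains at least one non-padding word.

```python
import math

def mean_embedding_times_sqrt_n(sentence):
    """Sum the word vectors and divide by sqrt(number of non-zero vectors)."""
    # A word counts as padding when its whole embedding vector is zero.
    n_words = sum(1 for vector in sentence if any(x != 0 for x in vector))
    dims = len(sentence[0])
    total = [sum(vector[d] for vector in sentence) for d in range(dims)]
    return [s / math.sqrt(n_words) for s in total]

another_example_py = [
    [[1., 2., 3.], [4., 5., 0.], [0., 0., 0.]],
    [[6., 0., 0.], [0., 0., 0.], [0., 0., 0.]],
]
results = [mean_embedding_times_sqrt_n(s) for s in another_example_py]
print(results)
```

For the first sentence the sum is `[5, 7, 3]` over two words, giving roughly `[3.54, 4.95, 2.12]`. The second sentence has a single word, so its vector is returned unchanged.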

embedding_size = 20

model = keras.models.Sequential([

text_vectorization,

keras.layers.Embedding(input_dim=n_tokens, output_dim=embedding_size, mask_zero=True), # <pad> tokens => zero vectors

keras.layers.Lambda(compute_mean_embedding),

keras.layers.Dense(100, activation='relu'),

keras.layers.Dense(1, activation='sigmoid')

])

model.compile(loss='binary_crossentropy', optimizer='nadam', metrics=['accuracy'])

model.fit(train_set, epochs=5, validation_data=valid_set)

Author: lotuslaw

Source: https://www.cnblogs.com/lotuslaw/p/15572406.html

License: This work is licensed under the Attribution-NonCommercial-ShareAlike 4.0 International license.
