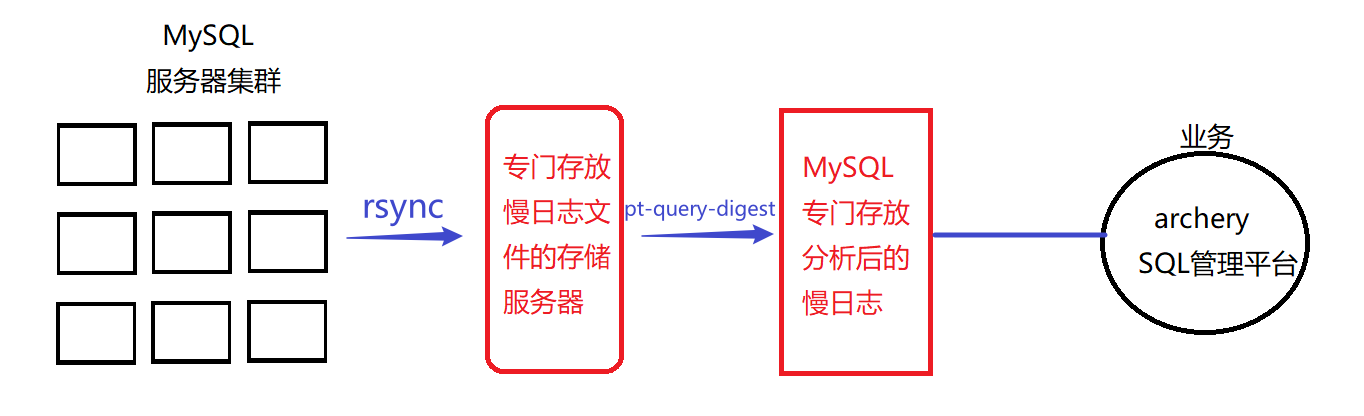

MySQL集群慢日志收集与分析

一、【agent端】各主机推送slow.log到远端慢日志存储服务器;

1. 确保集群中的各节点开启了慢日志;

show variables like 'slow_query%'; set global slow_query_log='ON'; set global slow_query_log_file='/data/mysql/logs/slow.log';

2. 编写rsync推送脚本

CentOS6: crontab -l > /tmp/crontab$$.tmp echo "55 * * * * /data/mysql/upload_logs.sh >> upload_logs.log 2>&1" >>/tmp/crontab$$.tmp crontab /tmp/crontab$$.tmp crontab -l export MYSQL_PWD='xxxxx' export BINLOG=`mysql -uroot -Bse "select @@log_bin_basename"` export SLOWLOG=`mysql -uroot -Bse "select @@slow_query_log_file"` cat > /data/mysql/upload_logs.sh <<EOF #!/bin/bash RSYNC_PASSWORD=xxxx /usr/bin/rsync -avz ${SLOWLOG} deploy@172.20.9.146::mysql-slowlog/`ifconfig |grep -w 'inet' |grep -v '127' |awk '{print $2}'|awk -F':' '{print $2}'|sed 's/\./\_/g'`/ EOF chmod +x /data/mysql/upload_logs.sh sh -x /data/mysql/upload_logs.sh

CentOS7 crontab -l > /tmp/crontab$$.tmp echo "31 * * * * /data/mysql/upload_logs.sh >> upload_logs.log 2>&1" >>/tmp/crontab$$.tmp crontab /tmp/crontab$$.tmp crontab -l export MYSQL_PWD='xxxxxx' export BINLOG=`mysql -uroot -Bse "select @@log_bin_basename"` export SLOWLOG=`mysql -uroot -Bse "select @@slow_query_log_file"` cat > /data/mysql/upload_logs.sh <<EOF #!/bin/bash RSYNC_PASSWORD=xxxxxx /usr/bin/rsync -avz ${SLOWLOG} deploy@172.20.9.146::mysql-slowlog/`ifconfig |grep -w 'inet' |grep -v '127' |awk '{print $2}'|sed 's/\./\_/g'`/ EOF chmod +x /data/mysql/upload_logs.sh sh -x /data/mysql/upload_logs.sh

执行如上命令,可自动生成脚本,如下

RSYNC_PASSWORD=xxxxxx /usr/bin/rsync -avz /data/mysql/logs/slow.log deploy@172.20.9.146::mysql-slowlog/172_20_0_111/

二、【server端】慢日志存储服务器批量分析slow.log,然后写入指定的数据库.

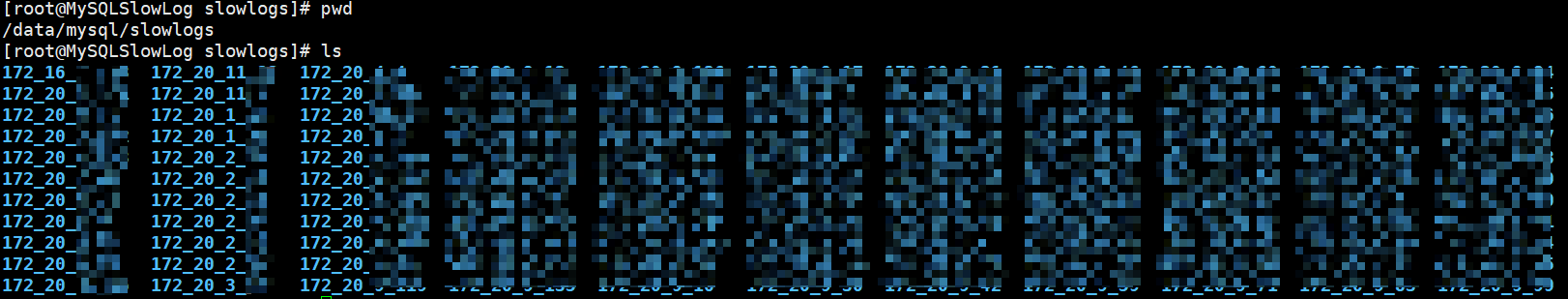

如下收集到这么多主机的慢日志,每个目录中都有一个slow.log,用脚本批量分析。

[root@MySQLSlowLog mysql]# cat analyze_slowlog.sh #!/bin/bash #获取各目录名 ls -l /data/mysql/slowlogs/ | awk 'NR == 1 {next} {print $NF}' > DirectoryList #配置Archery数据库的连接地址 archery_db_host="172.xx.xx.90" archery_db_port=3306 archery_db_user="query_slow" archery_db_password="Querxxxx4" archery_db_database="sqlaud" #pt工具路径 pt_query_digest="/opt/percona-toolkit-3.3.1/bin/pt-query-digest" for i in `cat DirectoryList` do slowlog_DIR="/data/mysql/slowlogs/$i" #获取慢日志的目录 slowlog_FILE="slow.log" #获取慢日志的文件名 hostname="${i//_/.}:3306" #获取被分析实例的主机信息 #获取上次分析时间,初始化时请删除last_analysis_time_$hostname文件,可分析全部日志数据 if [[ -s ${slowlog_DIR}/last_analysis_time_${hostname} ]]; then last_analysis_time=`cat ${slowlog_DIR}/last_analysis_time_${hostname}` else last_analysis_time='1000-01-01 00:00:00' fi #收集日志 ${pt_query_digest} \ --user=${archery_db_user} --password=${archery_db_password} --port=${archery_db_port} \ --review h=${archery_db_host},D=${archery_db_database},t=mysql_slow_query_review \ --history h=${archery_db_host},D=${archery_db_database},t=mysql_slow_query_review_history \ --no-report --limit=100% --charset=utf8 \ --since "$last_analysis_time" \ --filter="\$event->{Bytes} = length(\$event->{arg}) and \$event->{hostname}=\"$hostname\" and \$event->{client}=\$event->{ip} " \ ${slowlog_DIR}/${slowlog_FILE} >> /tmp/analysis_slow_query.log if [[ $? -ne 0 ]]; then echo "===${slowlog_DIR}/${slowlog_FILE} 推送失败 `date +"%Y-%m-%d %H:%M:%S"` ===" >> /tmp/analysis_slow_query.log else echo `date +"%Y-%m-%d %H:%M:%S"`> ${slowlog_DIR}/last_analysis_time_${hostname} echo "===${slowlog_DIR}/${slowlog_FILE} 推送成功 `date +"%Y-%m-%d %H:%M:%S"`===" >> /tmp/analysis_slow_query.log fi done

写入计划任务

* 3 * * * root /data/mysql/analyze_slowlog.sh