Using vSphere Storage with Kubernetes - vSphere-CSI

Official reference: Introduction · vSphere CSI Driver (k8s.io)

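Prerequisites

1. The virtual machines are created on vSphere 6.7 U3 or later

2. The Kubernetes node network can reach the vCenter domain name or IP

3. OS image used in the official examples: http://partnerweb.vmware.com/GOSIG/Ubuntu_18_04_LTS.html

4. This test uses a CentOS 7.6 OS image

5. The VM hardware version is 15 or later

6. VMware Tools is installed in each VM; on CentOS 7: yum install -y open-vm-tools && systemctl enable vmtoolsd --now

7. The VM parameter disk.EnableUUID=1 is set (see the govc procedure)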
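Prerequisites 5 and 7 can be applied from the command line with govc. The following is a minimal sketch, assuming govc is installed and the GOVC_URL, GOVC_USERNAME, and GOVC_PASSWORD environment variables (plus GOVC_INSECURE=1 for a self-signed certificate) point at your vCenter. The function names are hypothetical helpers, not part of govc.

```shell
# Hypothetical helper: upgrade the VM hardware version to 15.
# $1 is the VM inventory path, e.g. /<datacenter>/vm/<vm-name>.
# The VM should be powered off when this runs.
upgrade_vm_hardware() {
    govc vm.upgrade -version=15 -vm "$1"
}

# Hypothetical helper: set disk.enableUUID so the guest OS sees a
# consistent UUID for each attached disk.
enable_disk_uuid() {
    govc vm.change -vm "$1" -e "disk.enableUUID=1"
}
```

Call each helper once per node VM, for example `enable_disk_uuid "/Datacenter/vm/k8s-node1"`; the inventory path here is a placeholder and depends on where the VM lives in your vCenter.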

Deploying the cluster with kubeadm

1. Environment

| Node | IP | Role |
|---|---|---|
| k8s-master | 192.168.190.30 | etcd, apiserver, controller-manager, scheduler |
| k8s-node1 | 192.168.190.31 | kubelet, kube-proxy |
| k8s-node2 | 192.168.190.32 | kubelet, kube-proxy |

2. Install docker-ce-19.03, kubeadm-1.19.11, kubelet-1.19.11, and kubectl-1.19.11, and configure the system parameters (omitted)

3. Generate the kubeadm configuration file. vSphere-CSI depends on vSphere-CPI as the cloud provider, so the cluster is configured here to use an external (out-of-tree) cloud provider.

```shell
# Generate the default configuration
kubeadm config print init-defaults > kubeadm-config.yaml
```

```yaml
# Modified configuration
apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.190.30
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
  kubeletExtraArgs:            # added: run the cloud provider in external mode
    cloud-provider: external   # external mode
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers   # changed to the Alibaba Cloud mirror in China
kind: ClusterConfiguration
kubernetesVersion: v1.19.11    # changed to v1.19.11
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16     # added: pod network matching the flannel setup below
scheduler: {}
```

4. Run the installation on the k8s-master node

```shell
[root@k8s-master ~]# kubeadm init --config /etc/kubernetes/kubeadminit.yaml
[init] Using Kubernetes version: v1.19.11
... ...
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
... ...
You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.190.30:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:[sha sum from above output]

# Configure client authentication
[root@k8s-master ~]# mkdir -p $HOME/.kube
[root@k8s-master ~]# cp /etc/kubernetes/admin.conf $HOME/.kube/config
```

5. Deploy the flannel network plugin

```shell
[root@k8s-master ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
```

6. Prepare the join configuration for the worker nodes. Unlike the usual kubeadm join command, this setup needs a generated join configuration file with the cloud-provider setting added.

```shell
# Export the cluster discovery information on the master and copy it to /etc/kubernetes on every worker node
[root@k8s-master ~]# kubectl -n kube-public get configmap cluster-info -o jsonpath='{.data.kubeconfig}' > discovery.yaml
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM1ekNDQWMrZ0F3SUJBZ0l --- omitted --- RVJUSUZJQ0FURS0tLS0tCg==
    server: https://192.168.190.30:6443
  name: ""
contexts: null
current-context: ""
kind: Config
preferences: {}
users: null

# Generate the worker join configuration file and copy it to /etc/kubernetes on every worker node
[root@k8s-master ~]# tee /etc/kubernetes/kubeadminitworker.yaml >/dev/null <<EOF
apiVersion: kubeadm.k8s.io/v1beta1
caCertPath: /etc/kubernetes/pki/ca.crt
discovery:
  file:
    kubeConfigPath: /etc/kubernetes/discovery.yaml
  timeout: 5m0s
  tlsBootstrapToken: abcdef.0123456789abcdef
kind: JoinConfiguration
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  kubeletExtraArgs:            # cloud-provider set to external
    cloud-provider: external
EOF
```
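Copying the two files to the workers can be scripted. This is a minimal sketch, assuming root SSH access from the master to each worker and that discovery.yaml sits in the current directory, where the kubectl command above wrote it. The function name is a hypothetical helper; the worker IPs come from the environment table.

```shell
# Worker node IPs from the environment table.
WORKERS="192.168.190.31 192.168.190.32"

# Hypothetical helper: copy the discovery file and the join
# configuration into /etc/kubernetes on every worker.
copy_join_files() {
    for node in $WORKERS; do
        scp discovery.yaml /etc/kubernetes/kubeadminitworker.yaml \
            "root@${node}:/etc/kubernetes/"
    done
}
```

Run `copy_join_files` on the master before joining the workers.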

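7. Add the worker nodes. The join configuration files must already be on each worker node.

```shell
# Make sure discovery.yaml and kubeadminitworker.yaml have been copied from the master to /etc/kubernetes on the worker node
# Join the node
[root@k8s-node1 ~]# kubeadm join --config /etc/kubernetes/kubeadminitworker.yaml
```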

8. Cluster status

```shell
[root@k8s-master ~]# kubectl get node -o wide
NAME         STATUS   ROLES    AGE     VERSION    INTERNAL-IP      EXTERNAL-IP      OS-IMAGE                KERNEL-VERSION           CONTAINER-RUNTIME
k8s-master   Ready    master   7h28m   v1.19.11   192.168.190.30   192.168.190.30   CentOS Linux 7 (Core)   3.10.0-1160.el7.x86_64   docker://19.3.15
k8s-node1    Ready    <none>   7h12m   v1.19.11   192.168.190.31   192.168.190.31   CentOS Linux 7 (Core)   3.10.0-1160.el7.x86_64   docker://19.3.15
k8s-node2    Ready    <none>   7h11m   v1.19.11   192.168.190.32   192.168.190.32   CentOS Linux 7 (Core)   3.10.0-1160.el7.x86_64   docker://19.3.15

# Taints on the worker nodes. Once vSphere-CPI is installed successfully, node.cloudprovider.kubernetes.io/uninitialized=true:NoSchedule is removed automatically
[root@k8s-master ~]# kubectl describe nodes | egrep "Taints:|Name:"
Name:               k8s-master
Taints:             node-role.kubernetes.io/master:NoSchedule
Name:               k8s-node1
Taints:             node.cloudprovider.kubernetes.io/uninitialized=true:NoSchedule
Name:               k8s-node2
Taints:             node.cloudprovider.kubernetes.io/uninitialized=true:NoSchedule
```

Deploying the vSphere Cloud Provider Interface (CPI)

1. Create the configuration files, which hold the vCenter credentials and the datacenter to use

```shell
[root@k8s-master kubernetes]# cat vsphere.conf
[Global]
port = "443"
insecure-flag = "true"
secret-name = "cpi-engineering-secret"
secret-namespace = "kube-system"

[VirtualCenter "192.168.1.100"]
datacenters = "Datacenter"

# insecure-flag      whether to accept the vCenter SSL certificate without verification
# secret-name        Secret holding the vCenter credentials
# secret-namespace   namespace of that Secret
# VirtualCenter      vCenter address
# datacenters        datacenter name

[root@k8s-master kubernetes]# cat cpi-engineering-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: cpi-engineering-secret
  namespace: kube-system
stringData:
  192.168.1.100.username: "administrator@vsphere.local"
  192.168.1.100.password: "xxxxx"

# "<vCenter address>".username   vCenter user
# "<vCenter address>".password   vCenter user's password

# Create the ConfigMap from vsphere.conf
[root@k8s-master kubernetes]# kubectl create configmap cloud-config --from-file=vsphere.conf --namespace=kube-system

# Create the Secret from cpi-engineering-secret.yaml
[root@k8s-master kubernetes]# kubectl create -f cpi-engineering-secret.yaml
```

2. Install the CPI

```shell
# Check the state before installing the CPI: the worker nodes must carry the taint shown below
[root@k8s-master ~]# kubectl describe nodes | egrep "Taints:|Name:"
Name:               k8s-master
Taints:             node-role.kubernetes.io/master:NoSchedule
Name:               k8s-node1
Taints:             node.cloudprovider.kubernetes.io/uninitialized=true:NoSchedule
Name:               k8s-node2
Taints:             node.cloudprovider.kubernetes.io/uninitialized=true:NoSchedule

# Install the CPI; the manifests can be applied directly
[root@k8s-master ~]# kubectl apply -f https://raw.githubusercontent.com/kubernetes/cloud-provider-vsphere/master/manifests/controller-manager/cloud-controller-manager-roles.yaml
clusterrole.rbac.authorization.k8s.io/system:cloud-controller-manager created

[root@k8s-master ~]# kubectl apply -f https://raw.githubusercontent.com/kubernetes/cloud-provider-vsphere/master/manifests/controller-manager/cloud-controller-manager-role-bindings.yaml
clusterrolebinding.rbac.authorization.k8s.io/system:cloud-controller-manager created

[root@k8s-master ~]# kubectl apply -f https://raw.githubusercontent.com/kubernetes/cloud-provider-vsphere/master/manifests/controller-manager/vsphere-cloud-controller-manager-ds.yaml
serviceaccount/cloud-controller-manager created
daemonset.extensions/vsphere-cloud-controller-manager created
service/vsphere-cloud-controller-manager created

# By default the CPI image is pulled from gcr.io, and pulls from mainland China almost always fail.
# Consider mirroring the image through the Alibaba Cloud Container Registry build feature, for example:
    image: registry.cn-hangzhou.aliyuncs.com/gisuni/manager:v1.0.1

# State after installing the CPI: the worker node taints are cleared automatically
[root@k8s-master ~]# kubectl describe nodes | egrep "Taints:|Name:"
Name:               k8s-master
Taints:             node-role.kubernetes.io/master:NoSchedule
Name:               k8s-node1
Taints:             <none>
Name:               k8s-node2
Taints:             <none>
```
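Besides clearing the taint, the CPI is expected to populate spec.providerID on every node, typically with a vsphere:// prefix, and the CSI driver uses that value to match nodes to VMs. The check below is a sketch rather than output captured from this cluster; the function name is a hypothetical helper.

```shell
# Hypothetical helper: print each node name with its providerID.
# An empty second column suggests the CPI has not initialized that node yet.
list_provider_ids() {
    kubectl get nodes \
        -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.providerID}{"\n"}{end}'
}
```

Run `list_provider_ids` on the master after the vsphere-cloud-controller-manager pod is Running.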

Installing the vSphere Container Storage Interface (CSI)

1. Create the CSI configuration file (why can't it share the CPI configuration?)

```shell
# The CSI configuration is stored as a Secret object
[root@k8s-master kubernetes]# cat csi-vsphere.conf
[Global]
insecure-flag = "true"
cluster-id = "k8s-20210603"

[VirtualCenter "192.168.1.100"]
datacenters = "Datacenter"
user = "administrator@vsphere.local"
password = "xxxxx"
port = "443"

# cluster-id      user-defined ID for the Kubernetes cluster, at most 64 characters
# VirtualCenter   vCenter address
# user            vCenter user
# password        vCenter user's password

# Create the Secret from csi-vsphere.conf
[root@k8s-master kubernetes]# kubectl create secret generic vsphere-config-secret --from-file=csi-vsphere.conf --namespace=kube-system
```

2. Install the CSI. For the gcr.io image problem, use the same workaround as for the CPI.

```shell
# Install the RBAC objects
[root@k8s-master kubernetes]# kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/vsphere-csi-driver/v2.2.0/manifests/v2.2.0/rbac/vsphere-csi-controller-rbac.yaml
[root@k8s-master kubernetes]# kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/vsphere-csi-driver/v2.2.0/manifests/v2.2.0/rbac/vsphere-csi-node-rbac.yaml

# Install the controller and the node driver
[root@k8s-master kubernetes]# kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/vsphere-csi-driver/v2.2.0/manifests/v2.2.0/deploy/vsphere-csi-controller-deployment.yaml
[root@k8s-master kubernetes]# kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/vsphere-csi-driver/v2.2.0/manifests/v2.2.0/deploy/vsphere-csi-node-ds.yaml

# The gcr.io images used by these manifests can be replaced with the Alibaba Cloud mirrors below
    image: registry.cn-hangzhou.aliyuncs.com/gisuni/driver:v2.2.0
    image: registry.cn-hangzhou.aliyuncs.com/gisuni/syncer:v2.2.0
    image: registry.cn-hangzhou.aliyuncs.com/gisuni/driver:v2.2.0
```

3. Pod status after the CSI installation

```shell
[root@k8s-master ~]# kubectl get po -n kube-system
NAME                                      READY   STATUS    RESTARTS   AGE
coredns-6d56c8448f-bj2mw                  1/1     Running   0          7h24m
etcd-k8s-master                           1/1     Running   0          8h
kube-apiserver-k8s-master                 1/1     Running   0          8h
kube-controller-manager-k8s-master        1/1     Running   0          8h
kube-flannel-ds-7rmg8                     1/1     Running   0          7h19m
kube-flannel-ds-8pm67                     1/1     Running   0          7h19m
kube-flannel-ds-dxlwg                     1/1     Running   0          7h19m
kube-proxy-7dzp5                          1/1     Running   0          8h
kube-proxy-8hzmk                          1/1     Running   0          7h46m
kube-proxy-crf9p                          1/1     Running   0          7h47m
kube-scheduler-k8s-master                 1/1     Running   0          8h
vsphere-cloud-controller-manager-fstg2    1/1     Running   0          7h4m
vsphere-csi-controller-5b68fbc4b6-xpxb5   6/6     Running   0          5h17m
vsphere-csi-node-5nml4                    3/3     Running   0          5h17m
vsphere-csi-node-jv8kh                    3/3     Running   0          5h17m
vsphere-csi-node-z6xj5                    3/3     Running   0          5h17m
```
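Running pods do not by themselves confirm that the driver registered with the cluster. As a hedged sketch, the CSIDriver and CSINode objects can be inspected as well; the driver name csi.vsphere.vmware.com is the provisioner used later in the StorageClass, and the function name is a hypothetical helper.

```shell
# Hypothetical helper: show CSI driver registration state.
check_csi_registration() {
    # The cluster-wide driver object should list csi.vsphere.vmware.com.
    kubectl get csidriver
    # Each node should report the driver once its vsphere-csi-node pod is up.
    kubectl get csinode \
        -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.drivers[*].name}{"\n"}{end}'
}
```

A node that prints no driver name in the second column has most likely not finished registering.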

Creating a container volume

1. Create a StorageClass that selects a vSAN-based VM storage policy

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: vsphere-vsan-sc
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: csi.vsphere.vmware.com
parameters:
  storagepolicyname: "storage_for_k8s"   # name of the VM storage policy in vCenter
  fstype: ext4
```

2. Create a PVC

```shell
[root@k8s-master ~]# cat pvc.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 4Gi

[root@k8s-master ~]# kubectl create -f pvc.yaml
persistentvolumeclaim/test created

[root@k8s-master ~]# kubectl get pvc
NAME   STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      AGE
test   Bound    pvc-9408ac89-93ee-41d1-ba71-67957265b8df   4Gi        RWO            vsphere-vsan-sc   4s
```

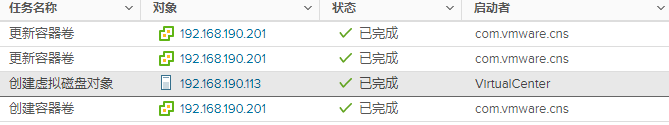

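3. Tasks shown in vCenter after the PVC is created

4. View the container volume in vCenter under Cluster > Monitor > Cloud Native Storage > Container Volumes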

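Mounting a container volume

The flow for mounting a container volume is:

1. The pod is scheduled to a worker node, for example k8s-node1

2. The vSAN container volume is attached to the k8s-node1 virtual machine

3. On k8s-node1, the volume is formatted and mounted into the pod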
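Any pod that references the claim triggers this flow. Below is a minimal sketch that writes such a pod manifest, assuming the PVC named test created earlier; the file name, pod name, image, and mount path are illustrative choices, not taken from the original setup.

```shell
# Write a hypothetical pod manifest that mounts the PVC "test" at /data.
cat > pod-test.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: pvc-test-pod          # hypothetical name
spec:
  containers:
  - name: app
    image: busybox            # any image with a shell works
    command: ["sh", "-c", "echo hello > /data/hello.txt && sleep 3600"]
    volumeMounts:
    - name: data
      mountPath: /data        # illustrative mount path
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: test         # the PVC created earlier
EOF
```

Apply it with `kubectl apply -f pod-test.yaml`. Once the pod is scheduled, `kubectl get volumeattachment` should list an attachment for the node it landed on, which corresponds to step 2 of the flow.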

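Summary

1. vSphere-CSI lets self-managed Kubernetes clusters running on vCenter use vSphere-native container volumes

2. vSphere-native container volumes perform roughly on par with virtual machine disks

3. It avoids the redundant extra data replicas that come with running Longhorn, Rook, or OpenEBS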
