Advanced Hadoop Algorithms: Matrix Multiplication

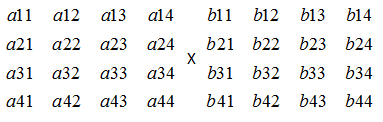

1. The result matrix r = left matrix a × right matrix b (i denotes the row index, j the column index).

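By the rule of matrix multiplication, r11 = (a11,a12,a13,a14) × (b11,b21,b31,b41). In general, r_ij = Σ_k a_ik · b_kj: each entry of the result is one row of the left matrix multiplied by one column of the right matrix. The definition of matrices and their operations is not repeated here, since most readers will already know it.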
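It helps to have a single-machine reference result to compare the MapReduce output against. Below is a minimal sketch of the rule above. `MatrixRef` is a hypothetical helper and is not part of the original code.

```java
import java.util.Arrays;

// Hypothetical reference implementation: r[i][j] = sum over k of a[i][k] * b[k][j].
class MatrixRef {
    static int[][] multiply(int[][] a, int[][] b) {
        int n = a.length, m = b.length, p = b[0].length;
        if (a[0].length != m) {
            throw new IllegalArgumentException("columns of a must equal rows of b");
        }
        int[][] r = new int[n][p];
        for (int i = 0; i < n; i++) {          // each row of the left matrix
            for (int j = 0; j < p; j++) {      // each column of the right matrix
                int sum = 0;
                for (int k = 0; k < m; k++) {
                    sum += a[i][k] * b[k][j];
                }
                r[i][j] = sum;
            }
        }
        return r;
    }

    public static void main(String[] args) {
        int[][] a = {{1, 2}, {3, 4}};
        int[][] b = {{5, 6}, {7, 8}};
        System.out.println(Arrays.deepToString(multiply(a, b))); // [[19, 22], [43, 50]]
    }
}
```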

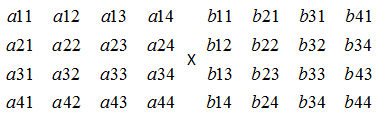

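Hadoop reads its input one line at a time, and each line holds one row of a matrix. A column of the right matrix is therefore never available as a single record. For this reason, the right matrix is transposed first so that its columns become rows, and only then is the product computed.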

2. The form after transposing the right matrix:

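Let b' = bᵀ, so b'_jk = b_kj. Then r11 = (a11,a12,a13,a14) × (b'11,b'12,b'13,b'14), and the second vector is still (b11,b21,b31,b41). The computation becomes: each row of the left matrix × each row of the transposed right matrix = one entry of the result.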
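The equivalence can be checked on a small in-memory example. Multiplying row i of a by row j of bᵀ gives the same r_ij as multiplying row i of a by column j of b. This is a sketch only, and `TransposeDemo` with its methods are hypothetical names.

```java
import java.util.Arrays;

// Hypothetical demo: r[i][j] = a[i] . bT[j], where both operands are read as rows.
class TransposeDemo {
    static int[][] transpose(int[][] b) {
        int[][] t = new int[b[0].length][b.length];
        for (int i = 0; i < b.length; i++) {
            for (int j = 0; j < b[0].length; j++) {
                t[j][i] = b[i][j];
            }
        }
        return t;
    }

    // Row-by-row product: matches line-by-line input, where each line is one row.
    static int[][] multiplyByRows(int[][] a, int[][] bT) {
        int[][] r = new int[a.length][bT.length];
        for (int i = 0; i < a.length; i++) {
            for (int j = 0; j < bT.length; j++) {
                int sum = 0;
                for (int k = 0; k < a[i].length; k++) {
                    sum += a[i][k] * bT[j][k];
                }
                r[i][j] = sum;
            }
        }
        return r;
    }

    public static void main(String[] args) {
        int[][] a = {{1, 2, 3}, {4, 5, 6}};
        int[][] b = {{7, 8}, {9, 10}, {11, 12}};
        System.out.println(Arrays.deepToString(multiplyByRows(a, transpose(b)))); // [[58, 64], [139, 154]]
    }
}
```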

3. Transpose code:

3.1 Map phase

```java
import java.io.IOException;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

public class MapMatrixTranspose extends Mapper<LongWritable, Text, Text, Text> {

    private final Text outKey = new Text();
    private final Text outValue = new Text();

    /**
     * Input: one matrix row per line, "rowIndex<TAB>col_value,col_value,...", e.g.
     *   1	1_1,2_2,3_-1,4_0
     * The key is the byte offset of the line and is not used.
     * Output: key = column index, value = "rowIndex_value".
     *
     * Matrix multiplication is (left row) x (right column). Because Hadoop reads
     * input line by line, the right matrix is transposed first, which turns the
     * product into (left row) x (right row).
     */
    @Override
    protected void map(LongWritable key, Text value, Context context)
            throws IOException, InterruptedException {
        // Split the line into the row index and the row contents (tab-separated)
        String[] rowAndLine = value.toString().split("\t");
        String row = rowAndLine[0];
        String[] cells = rowAndLine[1].split(",");
        // Emit key: column index, value: rowIndex_value
        for (String cell : cells) {
            String[] columnAndValue = cell.split("_");
            outKey.set(columnAndValue[0]);               // column index
            outValue.set(row + "_" + columnAndValue[1]); // rowIndex_value
            context.write(outKey, outValue);
        }
    }
}
```
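To see what this mapper emits without starting a cluster, here is a small local sketch of the same parsing logic applied to one input line. `TransposeMapSim` is a hypothetical name. Each pair is returned as `{key, value}`.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical local simulation of MapMatrixTranspose.map() for a single line (no Hadoop).
class TransposeMapSim {
    static List<String[]> map(String line) {
        List<String[]> out = new ArrayList<>();
        String[] rowAndLine = line.split("\t");
        String row = rowAndLine[0];
        for (String cell : rowAndLine[1].split(",")) {
            String[] cv = cell.split("_");
            out.add(new String[] {cv[0], row + "_" + cv[1]}); // key: column, value: row_value
        }
        return out;
    }

    public static void main(String[] args) {
        for (String[] kv : map("1\t1_1,2_2,3_-1,4_0")) {
            System.out.println(kv[0] + "\t" + kv[1]);
        }
    }
}
```

For the example line this prints four pairs: `1 1_1`, `2 1_2`, `3 1_-1` and `4 1_0`. Each entry moves to the key of its column and carries its original row index along.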

3.2 Reduce phase

```java
import java.io.IOException;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class ReduceMatrixTranspose extends Reducer<Text, Text, Text, Text> {

    private final Text outValue = new Text();

    // key: column index of the original matrix (= row index of the transpose)
    // values: "rowIndex_value" entries of that column, in no guaranteed order
    @Override
    protected void reduce(Text key, Iterable<Text> values, Context context)
            throws IOException, InterruptedException {
        StringBuilder sb = new StringBuilder();
        for (Text text : values) {
            if (sb.length() > 0) {
                sb.append(',');
            }
            sb.append(text.toString()); // rowIndex_value
        }
        // Output line: "columnIndex<TAB>rowIndex_value,rowIndex_value,..."
        outValue.set(sb.toString());
        context.write(key, outValue);
    }
}
```
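The whole transpose job (map, shuffle, reduce) can be simulated in memory to check the output format. This is a sketch under simplifying assumptions. `TransposeJobSim` is hypothetical, and a `TreeMap` stands in for Hadoop's grouping and sorting by key. `Text` keys sort byte-wise as strings, so "10" sorts before "2" on a real cluster as well.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory simulation of the transpose job.
class TransposeJobSim {
    static List<String> run(List<String> lines) {
        Map<String, List<String>> groups = new TreeMap<>();   // stands in for the shuffle
        for (String line : lines) {                            // map phase
            String[] rowAndLine = line.split("\t");
            for (String cell : rowAndLine[1].split(",")) {
                String[] cv = cell.split("_");
                groups.computeIfAbsent(cv[0], k -> new ArrayList<>())
                      .add(rowAndLine[0] + "_" + cv[1]);
            }
        }
        List<String> out = new ArrayList<>();
        for (Map.Entry<String, List<String>> e : groups.entrySet()) { // reduce phase
            out.add(e.getKey() + "\t" + String.join(",", e.getValue()));
        }
        return out;
    }

    public static void main(String[] args) {
        // [[1,2],[3,4]] -> prints "1<TAB>1_1,2_3" and "2<TAB>1_2,2_4", i.e. [[1,3],[2,4]]
        run(Arrays.asList("1\t1_1,2_2", "2\t1_3,2_4")).forEach(System.out::println);
    }
}
```

On a real cluster, the order of values inside a group is not guaranteed. That is harmless here because every value keeps its own row index as a `row_` prefix.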

3.3 Driver: run the map and reduce phases as a job and write the result to HDFS:

```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

/**
 * Driver for step 1: transpose the right matrix.
 * @author lowi
 */
public class Transpose {
    private static final String inPath = "/user/step1_matrixinput/rightmatrix.txt";
    private static final String outPath = "/user/step1_matrixout";
    // HDFS address
    private static final String hdfs = "hdfs://localhost:9000";

    public static int run() {
        try {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", hdfs);
            Job job = Job.getInstance(conf, "step1");
            job.setJarByClass(Transpose.class);
            job.setMapperClass(MapMatrixTranspose.class);
            job.setReducerClass(ReduceMatrixTranspose.class);
            // Mapper output types
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(Text.class);
            // Reducer (final) output types
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(Text.class);

            FileSystem fs = FileSystem.get(conf);
            Path inputPath = new Path(inPath);
            if (!fs.exists(inputPath)) {
                System.err.println("Input not found: " + inputPath);
                return -1;
            }
            FileInputFormat.addInputPath(job, inputPath);
            // Remove old output first: the job fails if the output directory exists
            Path outputPath = new Path(outPath);
            fs.delete(outputPath, true);
            FileOutputFormat.setOutputPath(job, outputPath);
            return job.waitForCompletion(true) ? 1 : -1;
        } catch (IOException | ClassNotFoundException | InterruptedException e) {
            e.printStackTrace();
        }
        return -1;
    }

    public static void main(String[] args) {
        if (Transpose.run() == 1) {
            System.out.println("Matrix transpose succeeded========");
        } else {
            System.out.println("Matrix transpose failed========");
        }
    }
}
```
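The contents of `rightmatrix.txt` and `leftmatrix.txt` are not shown. Their format is only described in the code comments: `row<TAB>col_value,...`. The sketch below is a hypothetical helper, `MatrixText`, that writes an `int[][]` in that format. It assumes 1-based row and column indices, inferred from the example line in the mapper comment.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: format a matrix as "row<TAB>col_value,..." lines (1-based indices assumed).
class MatrixText {
    static List<String> toLines(int[][] m) {
        List<String> lines = new ArrayList<>();
        for (int i = 0; i < m.length; i++) {
            StringBuilder sb = new StringBuilder().append(i + 1).append('\t');
            for (int j = 0; j < m[i].length; j++) {
                if (j > 0) {
                    sb.append(',');
                }
                sb.append(j + 1).append('_').append(m[i][j]);
            }
            lines.add(sb.toString());
        }
        return lines;
    }

    public static void main(String[] args) {
        // Prints "1<TAB>1_1,2_2,3_-1,4_0"
        toLines(new int[][] {{1, 2, -1, 0}}).forEach(System.out::println);
    }
}
```

The lines could then be saved locally, for example with `java.nio.file.Files.write`, and uploaded to the input path with `hdfs dfs -put`.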

4. Matrix multiplication

4.1 Map phase

```java
import java.io.BufferedReader;
import java.io.FileReader;
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

public class MapMatrixMultiply extends Mapper<LongWritable, Text, Text, Text> {

    private final Text outKey = new Text();
    private final Text outValue = new Text();
    // Rows of the transposed right matrix, loaded from the distributed cache
    private final List<String> cacheList = new ArrayList<>();

    /**
     * Runs once per map task, before the first call to map():
     * loads the transposed right matrix into cacheList.
     * "matrix2" is the symlink name given to the cache file in the driver ("#matrix2").
     */
    @Override
    protected void setup(Context context) throws IOException, InterruptedException {
        super.setup(context);
        // Each line: "row<TAB>col_value,col_value,..."; try-with-resources closes the reader
        try (BufferedReader br = new BufferedReader(new FileReader("matrix2"))) {
            String line;
            while ((line = br.readLine()) != null) {
                if (!line.trim().isEmpty()) {
                    cacheList.add(line);
                }
            }
        }
    }

    /**
     * key: byte offset of the line (unused)
     * value: one row of the left matrix, "row<TAB>col_value,...", e.g. 1	1_-1,2_1,3_4,4_3,5_2
     */
    @Override
    protected void map(LongWritable key, Text value, Context context)
            throws IOException, InterruptedException {
        String[] leftParts = value.toString().split("\t");
        String row_matrix1 = leftParts[0];
        String[] column_value_array_matrix1 = leftParts[1].split(",");

        for (String line : cacheList) {
            // A row of the transposed right matrix = a column of the original right matrix
            String[] rightParts = line.split("\t");
            String row_matrix2 = rightParts[0];
            String[] column_value_array_matrix2 = rightParts[1].split(",");

            // Dot product of the two rows
            int result = 0;
            for (String column_value_matrix1 : column_value_array_matrix1) {
                String[] cv1 = column_value_matrix1.split("_");
                // Multiply with the right-row entry that has the same index prefix
                for (String column_value_matrix2 : column_value_array_matrix2) {
                    if (column_value_matrix2.startsWith(cv1[0] + "_")) {
                        String column_value2 = column_value_matrix2.split("_")[1];
                        result += Integer.parseInt(cv1[1]) * Integer.parseInt(column_value2);
                    }
                }
            }
            // Emit key: row of the result, value: "column_value" (the entry r[row][column])
            outKey.set(row_matrix1);
            outValue.set(row_matrix2 + "_" + result);
            context.write(outKey, outValue);
        }
    }
}
```
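The inner loops above search the whole right row for a matching `index_` prefix for every left entry. That costs time quadratic in the row length for each pair of rows. The sketch below swaps in a different technique: it indexes the right row in a `HashMap` first, so each lookup is constant time on average. It is not the original author's code. `RowDot` and `dot()` are hypothetical names, and a missing index is treated as 0.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical alternative to the nested prefix scan: dot product via a HashMap index.
class RowDot {
    // leftLine: "row<TAB>col_value,..." (left matrix); rightLine: same format (transposed right)
    // Returns "rightRow_dotProduct", the value format the original mapper emits.
    static String dot(String leftLine, String rightLine) {
        String[] right = rightLine.split("\t");
        Map<String, Integer> rightByIndex = new HashMap<>();
        for (String cell : right[1].split(",")) {
            String[] cv = cell.split("_");
            rightByIndex.put(cv[0], Integer.parseInt(cv[1]));
        }
        int result = 0;
        for (String cell : leftLine.split("\t")[1].split(",")) {
            String[] cv = cell.split("_");
            Integer other = rightByIndex.get(cv[0]);
            if (other != null) {                  // absent index contributes 0
                result += Integer.parseInt(cv[1]) * other;
            }
        }
        return right[0] + "_" + result;
    }

    public static void main(String[] args) {
        System.out.println(dot("1\t1_1,2_2,3_3", "2\t1_4,2_5,3_6")); // 2_32
    }
}
```

In the mapper, the index could be built once per cached row in `setup()` rather than once per call. That trades a little memory for less repeated parsing.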

4.2 Reduce phase

```java
import java.io.IOException;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class ReduceMatrixMultiply extends Reducer<Text, Text, Text, Text> {

    private final Text outValue = new Text();

    // key: row index of the result; values: "column_value" entries of that row
    @Override
    protected void reduce(Text key, Iterable<Text> values, Context context)
            throws IOException, InterruptedException {
        StringBuilder sb = new StringBuilder();
        for (Text text : values) {
            if (sb.length() > 0) {
                sb.append(',');
            }
            sb.append(text.toString()); // column_value
        }
        // Output line: "row<TAB>column_value,column_value,..."
        outValue.set(sb.toString());
        context.write(key, outValue);
    }
}
```
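Both jobs can be traced end to end in memory, which is a quick way to confirm the data formats line up before running on a cluster. This is a sketch under stated assumptions. Every name in it is hypothetical, and a `TreeMap` stands in for Hadoop's shuffle, so it does not reproduce Hadoop's unordered values within a group.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory simulation of step 1 (transpose) followed by step 2 (multiply).
class MatrixPipelineSim {
    // Shared reduce logic: group {key, value} pairs by key and join each group with commas.
    static List<String> shuffleAndReduce(List<String[]> pairs) {
        Map<String, List<String>> groups = new TreeMap<>();
        for (String[] kv : pairs) {
            groups.computeIfAbsent(kv[0], k -> new ArrayList<>()).add(kv[1]);
        }
        List<String> out = new ArrayList<>();
        groups.forEach((k, vs) -> out.add(k + "\t" + String.join(",", vs)));
        return out;
    }

    // Step 1 map: "row<TAB>col_val,..." -> (col, row_val)
    static List<String> transpose(List<String> lines) {
        List<String[]> pairs = new ArrayList<>();
        for (String line : lines) {
            String[] p = line.split("\t");
            for (String cell : p[1].split(",")) {
                String[] cv = cell.split("_");
                pairs.add(new String[] {cv[0], p[0] + "_" + cv[1]});
            }
        }
        return shuffleAndReduce(pairs);
    }

    // Step 2 map: each left row against each cached transposed-right row -> (leftRow, rightRow_dot)
    static List<String> multiply(List<String> left, List<String> rightT) {
        List<String[]> pairs = new ArrayList<>();
        for (String l : left) {
            String[] lp = l.split("\t");
            for (String r : rightT) {
                String[] rp = r.split("\t");
                Map<String, Integer> rv = new HashMap<>();
                for (String cell : rp[1].split(",")) {
                    String[] cv = cell.split("_");
                    rv.put(cv[0], Integer.parseInt(cv[1]));
                }
                int sum = 0;
                for (String cell : lp[1].split(",")) {
                    String[] cv = cell.split("_");
                    sum += Integer.parseInt(cv[1]) * rv.getOrDefault(cv[0], 0);
                }
                pairs.add(new String[] {lp[0], rp[0] + "_" + sum});
            }
        }
        return shuffleAndReduce(pairs);
    }

    public static void main(String[] args) {
        List<String> left = Arrays.asList("1\t1_1,2_2", "2\t1_3,2_4");   // [[1,2],[3,4]]
        List<String> right = Arrays.asList("1\t1_5,2_6", "2\t1_7,2_8");  // [[5,6],[7,8]]
        // Prints "1<TAB>1_19,2_22" and "2<TAB>1_43,2_50", i.e. [[19,22],[43,50]]
        multiply(left, transpose(right)).forEach(System.out::println);
    }
}
```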

4.3 Driver: run the multiplication job

```java
import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

/**
 * Driver for step 2: multiply the left matrix by the transposed right matrix from step 1.
 */
public class Multiply {
    private static final String inPath = "/user/step2_matrixinput/leftmatrix.txt";
    private static final String outPath = "/user/matrixout";
    // Output of step 1 (the transposed right matrix), shipped to every mapper as a cache file
    private static final String cache = "/user/step1_matrixout/part-r-00000";
    // HDFS address
    private static final String hdfs = "hdfs://localhost:9000";

    public int run() {
        try {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", hdfs);
            Job job = Job.getInstance(conf, "step2");
            // The cache entry is a plain text file, so addCacheFile (not addCacheArchive);
            // the "#matrix2" fragment names the local symlink opened in MapMatrixMultiply.setup()
            job.addCacheFile(new URI(cache + "#matrix2"));
            job.setJarByClass(Multiply.class);
            job.setMapperClass(MapMatrixMultiply.class);
            job.setReducerClass(ReduceMatrixMultiply.class);
            // Mapper output types
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(Text.class);
            // Reducer (final) output types
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(Text.class);

            FileSystem fs = FileSystem.get(conf);
            Path inputPath = new Path(inPath);
            if (!fs.exists(inputPath)) {
                System.err.println("Input not found: " + inputPath);
                return -1;
            }
            FileInputFormat.addInputPath(job, inputPath);
            Path outputPath = new Path(outPath);
            fs.delete(outputPath, true);
            FileOutputFormat.setOutputPath(job, outputPath);
            return job.waitForCompletion(true) ? 1 : -1;
        } catch (IOException | ClassNotFoundException | InterruptedException
                | URISyntaxException e) {
            e.printStackTrace();
        }
        return -1;
    }

    public static void main(String[] args) {
        if (new Multiply().run() == 1) {
            System.out.println("Matrix multiplication succeeded=======");
        } else {
            System.out.println("Matrix multiplication failed=======");
        }
    }
}
```
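To check the final result, the lines of `/user/matrixout/part-r-00000` (for example from `hdfs dfs -cat`) can be parsed back into a matrix and compared with a single-machine product. Hadoop does not guarantee the order of the values within a reduce group, so the sketch below places each entry by its column index rather than by its position in the line. `ResultParser` is a hypothetical helper, and 1-based indices are assumed.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: parse "row<TAB>col_value,..." lines into an int matrix (1-based indices).
class ResultParser {
    static int[][] parse(List<String> lines, int rows, int cols) {
        int[][] r = new int[rows][cols];
        for (String line : lines) {
            if (line.trim().isEmpty()) {
                continue;
            }
            String[] p = line.split("\t");
            int i = Integer.parseInt(p[0]) - 1;
            for (String cell : p[1].split(",")) {
                String[] cv = cell.split("_");
                r[i][Integer.parseInt(cv[0]) - 1] = Integer.parseInt(cv[1]); // place by column index
            }
        }
        return r;
    }

    public static void main(String[] args) {
        List<String> out = Arrays.asList("1\t2_22,1_19", "2\t1_43,2_50"); // entries may arrive unordered
        System.out.println(Arrays.deepToString(parse(out, 2, 2))); // [[19, 22], [43, 50]]
    }
}
```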

That is the complete MapReduce code for multiplying two matrices.

浙公网安备 33010602011771号