Crawler Example: Blog Post List

Blog example:

Scrape the post list from cnblogs (博客园), assuming the page URL is https://www.cnblogs.com/loaderman

Requirements:

-

使用requests获取页面信息,用XPath / re 做数据提取

-

获取每个博客里的

标题,描述,链接地址,日期等 -

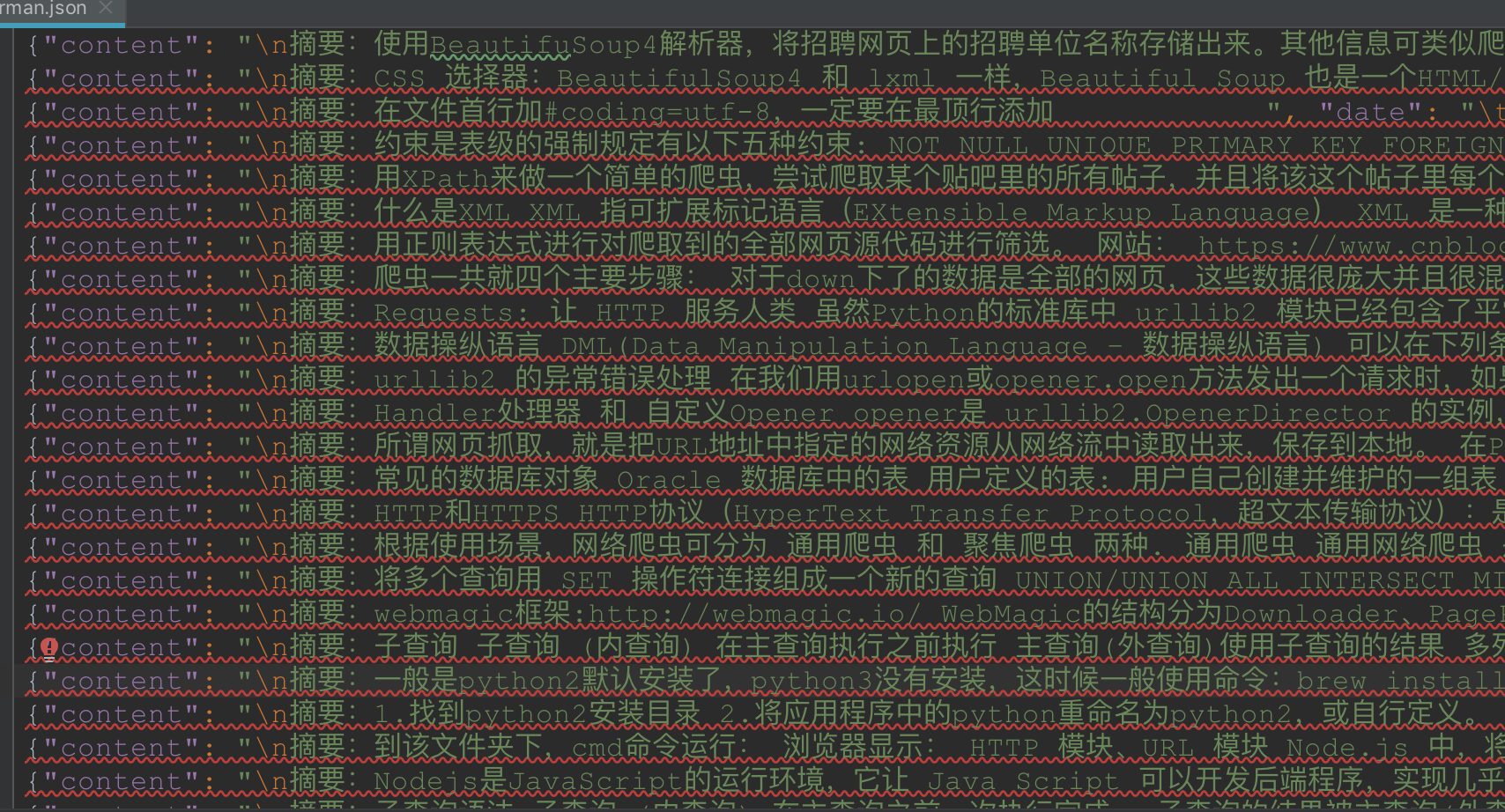

保存到 json 文件内
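The requirements mention `re` as an alternative to XPath. As a rough sketch of what regex-based extraction could look like, the snippet below runs against a small inline HTML sample. The sample markup, the `example.com` URLs, and the names `SAMPLE_HTML`, `POST_PATTERN` and `extract_posts` are all assumptions made for illustration; the real page structure may differ, and a regex tied to exact attribute order breaks more easily than XPath.

```python
import re

# Hypothetical markup, loosely modelled on the class names used in this post.
# The real cnblogs page may be structured differently.
SAMPLE_HTML = """
<div class="post">
  <h2><a class="postTitle2" href="https://example.com/p/1.html">First post</a></h2>
  <div class="c_b_p_desc">Summary of the first post</div>
  <p class="postfoot">posted @ 2019-01-01 10:00</p>
</div>
<div class="post">
  <h2><a class="postTitle2" href="https://example.com/p/2.html">Second post</a></h2>
  <div class="c_b_p_desc">Summary of the second post</div>
  <p class="postfoot">posted @ 2019-01-02 11:30</p>
</div>
"""

# One match per post: link and title first, then description, then date.
# re.S lets ".*?" span the line breaks between the elements.
POST_PATTERN = re.compile(
    r'<a class="postTitle2" href="(?P<link>[^"]+)">(?P<title>.*?)</a>'
    r'.*?<div class="c_b_p_desc">(?P<content>.*?)</div>'
    r'.*?<p class="postfoot">(?P<date>.*?)</p>',
    re.S,
)


def extract_posts(html):
    """Return one dict per post found in the given HTML string."""
    return [
        {key: value.strip() for key, value in match.groupdict().items()}
        for match in POST_PATTERN.finditer(html)
    ]


posts = extract_posts(SAMPLE_HTML)
for post in posts:
    print(post["title"], post["link"])
```

For anything beyond a quick one-off, XPath is usually the safer choice, since it does not depend on attribute order or whitespace in the raw HTML.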

Code

    # -*- coding:utf-8 -*-
    import json

    import requests
    from lxml import etree

    url = "https://www.cnblogs.com/loaderman/"
    headers = {"User-Agent": "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)"}

    # Fetch the page with requests and fail early on an HTTP error
    response = requests.get(url, headers=headers, timeout=10)
    response.raise_for_status()

    # The response body is a byte string; parse it into an HTML DOM
    text = etree.HTML(response.content)

    # Select all matching nodes. contains() is a substring match: the first
    # argument is the attribute to test, the second is the text it must contain
    node_list = text.xpath('//div[contains(@class, "post")]')


    def first(node, path):
        """Return the first XPath result as stripped text, or "" if nothing matched."""
        result = node.xpath(path)
        return result[0].strip() if result else ""


    # Open the output file once and write one JSON object per line
    with open("loaderman.json", "a", encoding="utf-8") as f:
        for each in node_list:
            title = first(each, ".//h2/a[@class='postTitle2']/text()")
            if not title:
                # contains() can also match divs that hold no post title; skip them
                continue
            item = {
                "title": title,
                "link": first(each, ".//a[@class='postTitle2']/@href"),
                "content": first(each, ".//div[@class='c_b_p_desc']/text()"),
                "date": first(each, ".//p[@class='postfoot']/text()"),
            }
            f.write(json.dumps(item, ensure_ascii=False) + "\n")
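Because the script appends one JSON object per line, the output file is not a single JSON document, so `json.load` on the whole file would fail once it holds more than one record. The sketch below shows one way to write and read such a line-delimited file. The helper names `append_items` and `read_items`, the sample records, and the temporary file path are assumptions for illustration, not part of the original script.

```python
import json
import os
import tempfile


def append_items(path, items):
    """Append each item to the file as one JSON object per line."""
    with open(path, "a", encoding="utf-8") as f:
        for item in items:
            # ensure_ascii=False keeps Chinese text readable in the file
            f.write(json.dumps(item, ensure_ascii=False) + "\n")


def read_items(path):
    """Parse the file line by line, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


# Hypothetical records standing in for scraped posts
sample = [
    {"title": "示例标题", "content": "First summary", "date": "2019-01-01"},
    {"title": "Second post", "content": "Second summary", "date": "2019-01-02"},
]

# Write to a temporary directory so the demo does not touch real output
path = os.path.join(tempfile.mkdtemp(), "loaderman.json")
append_items(path, sample)
loaded = read_items(path)
print(len(loaded), loaded[0]["title"])
```

Note that append mode means running the scraper twice leaves duplicate records in the file; opening with `"w"` instead would overwrite the previous run.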

Result:

