Unity 5.6.1 VideoPlayer

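Unity 5.6 introduced the VideoPlayer component, which makes video playback relatively simple. I studied and used it for a project and ran into quite a few pitfalls; searching Google and Baidu confirmed that others hit the same problems. Some of the simpler ones are: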

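1) No sound during playback

2) Controlling playback progress with a slider

3) Capturing a video frame (Texture -> Texture2D)

4) Raising an event when the video ends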

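None of these is a serious problem. Solutions to all four are given below: I first introduce how to use the VideoPlayer, then work through the four problems (the code was updated later; see the end of the post).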

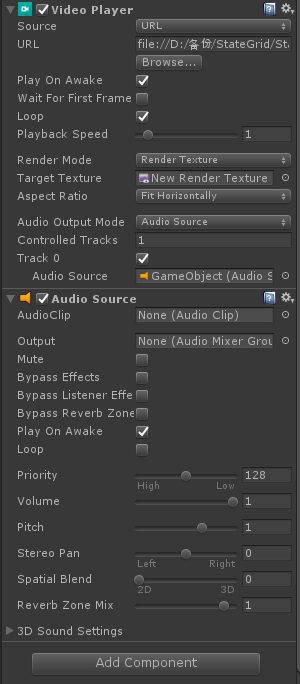

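(I) Creating a VideoPlayer. You can add a VideoPlayer component to a UI object, or right-click and choose Video > Video Player. Once added, you will see the component shown in the figure.

The parameters that matter most here are the following. source has two modes, clip and URL: in clip mode the video is played directly from a VideoClip, and in URL mode it is played from a URL. renderMode selects where the video is rendered, for example a camera or a material; for playback in the UI choose Render Texture, which is the mode used in this post. audioOutputMode has three options: None, Direct (which I have not tried) and Audio Source. This post uses Audio Source. In this mode you only need to drag an AudioSource component into the Audio Source slot of the VideoPlayer shown in the figure above, and nothing else is required. Sometimes, however, the Audio Source slot on the VideoPlayer disappears after the drag and no sound is played, so I usually add the components in code, as follows: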

    // Add the components in code
    videoPlayer = gameObject.AddComponent<VideoPlayer>();
    //videoPlayer = gameObject.GetComponent<VideoPlayer>();
    audioSource = gameObject.AddComponent<AudioSource>();
    //audioSource = gameObject.GetComponent<AudioSource>();
    videoPlayer.playOnAwake = false;
    audioSource.playOnAwake = false;
    audioSource.Pause();
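The snippet above only creates the components. The following is a minimal sketch of the remaining audio wiring, following the complete listing later in this post, which sets the output mode and the target AudioSource before calling Prepare(). The class name `VideoAudioSetup` and the `videoUrl` field are hypothetical, and the `EnableAudioTrack` call is an addition that does not appear in the original code.

```csharp
using UnityEngine;
using UnityEngine.Video;

// Hypothetical helper: adds a VideoPlayer and an AudioSource at runtime
// and routes the first audio track of the video to the AudioSource.
public class VideoAudioSetup : MonoBehaviour
{
    // Assumption: path or URL of a video file, set in the Inspector.
    public string videoUrl;

    private VideoPlayer videoPlayer;
    private AudioSource audioSource;

    void Start()
    {
        videoPlayer = gameObject.AddComponent<VideoPlayer>();
        audioSource = gameObject.AddComponent<AudioSource>();
        videoPlayer.playOnAwake = false;
        audioSource.playOnAwake = false;

        // Route audio through the AudioSource before preparing the video.
        videoPlayer.audioOutputMode = VideoAudioOutputMode.AudioSource;
        videoPlayer.EnableAudioTrack(0, true); // addition, not in the original code
        videoPlayer.SetTargetAudioSource(0, audioSource);

        videoPlayer.source = VideoSource.Url;
        videoPlayer.url = videoUrl;
        videoPlayer.prepareCompleted += OnPrepared;
        videoPlayer.Prepare();
    }

    // Start playback once the video has been prepared.
    private void OnPrepared(VideoPlayer player)
    {
        player.Play();
    }
}
```

Attach the script to an empty GameObject and fill in `videoUrl`; the volume can then be adjusted through the AudioSource component.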

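(II) Controlling video playback is similar to controlling audio or animation playback: VideoPlayer has Play, Pause and similar methods. See the complete code later in the post.

The loopPointReached event is often called the "video finished" event (I borrow the name from others; strictly speaking it is not an end-of-playback event). As the name suggests, it is raised when playback reaches the loop point: when the VideoPlayer's isLooping property is true (the video loops), the handler is called when the video reaches its end. In my tests with this version, the event was therefore not raised at the end of a video that was not looping. To get the callback, set the video to loop and stop playback inside the handler registered on loopPointReached.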
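The workaround described above could be sketched as follows. The class name `VideoEndNotifier` and the Inspector-assigned `videoPlayer` field are assumptions made for illustration.

```csharp
using UnityEngine;
using UnityEngine.Video;

// Hypothetical component: uses loopPointReached as an "end of video" callback.
public class VideoEndNotifier : MonoBehaviour
{
    // Assumption: assigned in the Inspector.
    public VideoPlayer videoPlayer;

    void OnEnable()
    {
        // Enable looping so the event is raised at the end of the video,
        // then stop playback in the handler.
        videoPlayer.isLooping = true;
        videoPlayer.loopPointReached += OnLoopPointReached;
    }

    void OnDisable()
    {
        videoPlayer.loopPointReached -= OnLoopPointReached;
    }

    private void OnLoopPointReached(VideoPlayer source)
    {
        source.Stop(); // keep the next loop from playing
        Debug.Log("Video finished");
    }
}
```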

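(III) Choosing the UI for playback. When the Render Texture mode is selected, a Target Texture must be specified.

1) In the Project panel choose Create > Render Texture, and drag the new RenderTexture onto the corresponding slot of the VideoPlayer.

2) In the Hierarchy panel create UI > Raw Image, and drag the RenderTexture from the previous step onto the Texture slot of the RawImage.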

This setup is actually optional: VideoPlayer has a texture property, so you can simply assign its value to the RawImage's texture in Update, as follows:

    rawImage.texture = videoPlayer.texture;
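If you prefer an explicit Target Texture, steps 1) and 2) can also be done from a script instead of the editor. This is a minimal sketch; the class name `RenderTextureBinder`, the Inspector-assigned fields and the 1280x720 size are assumptions, not values from the original project.

```csharp
using UnityEngine;
using UnityEngine.UI;
using UnityEngine.Video;

// Hypothetical component: creates a RenderTexture at runtime and uses it
// both as the VideoPlayer target and as the RawImage texture.
public class RenderTextureBinder : MonoBehaviour
{
    // Assumptions: both references are assigned in the Inspector.
    public VideoPlayer videoPlayer;
    public RawImage rawImage;

    // Assumed size; ideally this matches the resolution of the video.
    public int width = 1280;
    public int height = 720;

    private RenderTexture renderTexture;

    void Awake()
    {
        renderTexture = new RenderTexture(width, height, 0);
        videoPlayer.renderMode = VideoRenderMode.RenderTexture;
        videoPlayer.targetTexture = renderTexture;
        rawImage.texture = renderTexture;
    }

    void OnDestroy()
    {
        // Release the GPU resources that were created in Awake.
        if (renderTexture != null)
        {
            renderTexture.Release();
            Destroy(renderTexture);
        }
    }
}
```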

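To capture a frame, you can save the image from videoPlayer.texture, but the Texture first has to be converted to a Texture2D. Although Texture2D derives from Texture, the texture cannot simply be cast down to it. The conversion and saving code is as follows:

    private void SaveRenderTextureToPNG(Texture inputTex, string file)
    {
        // Copy the source texture into a temporary RenderTexture.
        RenderTexture temp = RenderTexture.GetTemporary(inputTex.width, inputTex.height, 0, RenderTextureFormat.ARGB32);
        Graphics.Blit(inputTex, temp);
        Texture2D tex2D = GetRTPixels(temp);
        RenderTexture.ReleaseTemporary(temp);

        byte[] png = tex2D.EncodeToPNG();
        Destroy(tex2D); // free the temporary texture (requires a MonoBehaviour context)
        File.WriteAllBytes(file, png);
    }

    private Texture2D GetRTPixels(RenderTexture rt)
    {
        // ReadPixels reads from the active RenderTexture, so switch to rt temporarily.
        RenderTexture currentActiveRT = RenderTexture.active;
        RenderTexture.active = rt;
        Texture2D tex = new Texture2D(rt.width, rt.height);
        tex.ReadPixels(new Rect(0, 0, tex.width, tex.height), 0, 0);
        RenderTexture.active = currentActiveRT;
        return tex;
    }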

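Finally, controlling playback progress with a slider.

Slider control involves two opposing data flows: Update continuously writes videoPlayer.time to the slider, while the slider's value also has to be written back to time. If you rely on the slider's OnValueChanged(float value) callback, the two conflict, because the callback also fires when the script updates the slider. Instead, use the UI BeginDrag and EndDrag events: on BeginDrag stop writing to the slider, and on EndDrag start writing to it again.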
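The idea can be sketched as a small component placed on the Slider object. It uses the pointer-down and pointer-up callbacks, like the `OnPointerDown`/`OnPointerUp` methods in the complete listing, so that a plain click on the track also seeks. The class name `VideoSeekSlider` is hypothetical, and the sketch assumes the slider's maxValue has already been set to the video length in seconds.

```csharp
using UnityEngine;
using UnityEngine.EventSystems;
using UnityEngine.UI;
using UnityEngine.Video;

// Hypothetical component, attached to the same GameObject as the Slider.
[RequireComponent(typeof(Slider))]
public class VideoSeekSlider : MonoBehaviour, IPointerDownHandler, IPointerUpHandler
{
    // Assumption: assigned in the Inspector.
    public VideoPlayer videoPlayer;

    private Slider slider;
    private bool isDragging;

    void Awake()
    {
        slider = GetComponent<Slider>();
    }

    void Update()
    {
        // Only mirror the playback time while the user is not touching the slider.
        if (!isDragging && videoPlayer.isPlaying)
        {
            slider.value = (float)videoPlayer.time;
        }
    }

    public void OnPointerDown(PointerEventData eventData)
    {
        isDragging = true; // stop writing to the slider
    }

    public void OnPointerUp(PointerEventData eventData)
    {
        videoPlayer.time = slider.value; // seek to the chosen position
        isDragging = false;              // resume writing to the slider
    }
}
```

The complete listing additionally pauses playback while the slider is held and waits 0.8 seconds after release before syncing the slider again; this sketch leaves that out.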

Complete code

    using System;
    using System.Collections;
    using System.Collections.Generic;
    using System.IO;
    using UnityEngine;
    using UnityEngine.UI;
    using UnityEngine.Video;

    public class VideoController : MonoBehaviour
    {
        public GameObject screen;
        public Text videoLength;
        public Text currentLength;
        public Slider volumeSlider;
        public Slider videoSlider;

        private string video1Url;
        private string video2Url;
        private VideoPlayer videoPlayer;
        private AudioSource audioSource;
        private RawImage videoScreen;
        private float lastCountTime = 0;
        private float totalPlayTime = 0;
        private float totalVideoLength = 0;

        private bool b_firstVideo = true;
        private bool b_adjustVideo = false;
        private bool b_skip = false;
        private bool b_capture = false;

        private string imageDir = @"D:\test\Test\bwadmRe";

        // Use this for initialization
        void Start()
        {
            videoScreen = screen.GetComponent<RawImage>();
            string dir = Path.Combine(Application.streamingAssetsPath, "Test");
            video1Url = Path.Combine(dir, "01.mp4");
            video2Url = Path.Combine(dir, "02.mp4");

            // Add the components in code
            videoPlayer = gameObject.AddComponent<VideoPlayer>();
            //videoPlayer = gameObject.GetComponent<VideoPlayer>();
            audioSource = gameObject.AddComponent<AudioSource>();
            //audioSource = gameObject.GetComponent<AudioSource>();
            videoPlayer.playOnAwake = false;
            audioSource.playOnAwake = false;
            audioSource.Pause();

            videoPlayer.audioOutputMode = VideoAudioOutputMode.AudioSource;
            videoPlayer.SetTargetAudioSource(0, audioSource);

            VideoInfoInit(video1Url);
            videoPlayer.loopPointReached += OnFinish;
        }

        #region private method
        private void VideoInfoInit(string url)
        {
            videoPlayer.source = VideoSource.Url;
            videoPlayer.url = url;

            // Unsubscribe first so the handler is not registered twice
            // when this method is called again for the next video.
            videoPlayer.prepareCompleted -= OnPrepared;
            videoPlayer.prepareCompleted += OnPrepared;
            videoPlayer.isLooping = true;

            videoPlayer.Prepare();
        }

        private void OnPrepared(VideoPlayer player)
        {
            player.Play();
            // Total duration in seconds = frame count / frame rate
            totalVideoLength = videoPlayer.frameCount / videoPlayer.frameRate;
            videoSlider.maxValue = totalVideoLength;
            videoLength.text = FloatToTime(totalVideoLength);

            lastCountTime = 0;
            totalPlayTime = 0;
        }

        // Formats a time in seconds as HH:MM:SS
        private string FloatToTime(float time)
        {
            int hour = (int)time / 3600;
            int min = (int)(time - hour * 3600) / 60;
            int sec = (int)(time - hour * 3600) % 60;
            string text = string.Format("{0:D2}:{1:D2}:{2:D2}", hour, min, sec);
            return text;
        }

        private IEnumerator PlayTime(int count)
        {
            for (int i = 0; i < count; i++)
            {
                yield return null;
            }
            videoSlider.value = (float)videoPlayer.time;
            //videoSlider.value = videoSlider.maxValue * (time / totalVideoLength);
        }

        private void OnFinish(VideoPlayer player)
        {
            Debug.Log("finished");
        }

        private void SaveRenderTextureToPNG(Texture inputTex, string file)
        {
            RenderTexture temp = RenderTexture.GetTemporary(inputTex.width, inputTex.height, 0, RenderTextureFormat.ARGB32);
            Graphics.Blit(inputTex, temp);
            Texture2D tex2D = GetRTPixels(temp);
            RenderTexture.ReleaseTemporary(temp);

            byte[] png = tex2D.EncodeToPNG();
            Destroy(tex2D); // free the temporary texture
            File.WriteAllBytes(file, png);
        }

        private Texture2D GetRTPixels(RenderTexture rt)
        {
            RenderTexture currentActiveRT = RenderTexture.active;
            RenderTexture.active = rt;
            Texture2D tex = new Texture2D(rt.width, rt.height);
            tex.ReadPixels(new Rect(0, 0, tex.width, tex.height), 0, 0);
            RenderTexture.active = currentActiveRT;
            return tex;
        }
        #endregion

        #region public method
        // Start
        public void OnStart()
        {
            videoPlayer.Play();
        }

        // Pause
        public void OnPause()
        {
            videoPlayer.Pause();
        }

        // Next video
        public void OnNext()
        {
            string nextUrl = b_firstVideo ? video2Url : video1Url;
            b_firstVideo = !b_firstVideo;

            videoSlider.value = 0;
            VideoInfoInit(nextUrl);
        }

        // Volume control
        public void OnVolumeChanged(float value)
        {
            audioSource.volume = value;
        }

        // Video progress control
        public void OnVideoChanged(float value)
        {
            //videoPlayer.time = value;
            //print(value);
        }

        public void OnPointerDown()
        {
            b_adjustVideo = true;
            b_skip = true;
            videoPlayer.Pause();
            //OnVideoChanged();
            //print("down");
        }

        public void OnPointerUp()
        {
            videoPlayer.time = videoSlider.value;
            videoPlayer.Play();
            b_adjustVideo = false;
            //print("up");
        }

        public void OnCapture()
        {
            b_capture = true;
        }
        #endregion

        // Update is called once per frame
        void Update()
        {
            if (videoPlayer.isPlaying)
            {
                videoScreen.texture = videoPlayer.texture;
                float time = (float)videoPlayer.time;
                currentLength.text = FloatToTime(time);

                if (b_capture)
                {
                    string name = DateTime.Now.Minute.ToString() + "_" + DateTime.Now.Second.ToString() + ".png";
                    SaveRenderTextureToPNG(videoPlayer.texture, Path.Combine(imageDir, name));
                    b_capture = false;
                }

                if (!b_adjustVideo)
                {
                    totalPlayTime += Time.deltaTime;
                    if (!b_skip)
                    {
                        videoSlider.value = (float)videoPlayer.time;
                        lastCountTime = totalPlayTime;
                    }
                    // After a seek, wait 0.8 s before syncing the slider again
                    if (totalPlayTime - lastCountTime >= 0.8f)
                    {
                        b_skip = false;
                    }
                }
                //StartCoroutine(PlayTime(15));
            }
        }
    }
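As an aside, the `FloatToTime` helper in the listing above can also be written with `System.TimeSpan`, which avoids the manual hour and minute arithmetic. This is only an alternative sketch; the `TimeFormat` class and the `FromSeconds` method are hypothetical names, and clamping negative input to zero is my own assumption.

```csharp
using System;

// Hypothetical helper, independent of Unity.
public static class TimeFormat
{
    // Formats a duration in seconds as HH:MM:SS, dropping fractional seconds.
    public static string FromSeconds(float seconds)
    {
        int total = Math.Max(0, (int)seconds); // assumption: clamp negatives to 0
        TimeSpan span = TimeSpan.FromSeconds(total);
        return string.Format("{0:D2}:{1:D2}:{2:D2}",
            (int)span.TotalHours, span.Minutes, span.Seconds);
    }
}
```

For example, `TimeFormat.FromSeconds(3725f)` returns `"01:02:05"`.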

If you use the AVPro Video plugin, none of these problems arise.

//-----------------------------------------2018_11_29-----------------------------------------------//

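The version used here is 2018.2.14. In newer versions the VideoPlayer no longer has the problems described above.

The two events below let you hook time events and frame events. For the frame event, sendFrameReadyEvents must be set to true.

    public event TimeEventHandler clockResyncOccurred;
    public event FrameReadyEventHandler frameReady;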
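A minimal sketch of subscribing to these two events is shown here. The class name `VideoEventLogger` and the Inspector-assigned `videoPlayer` field are assumptions. Note that frameReady is raised for every decoded frame, so the handler should stay cheap.

```csharp
using UnityEngine;
using UnityEngine.Video;

// Hypothetical component: logs frame and clock-resync events of a VideoPlayer.
public class VideoEventLogger : MonoBehaviour
{
    // Assumption: assigned in the Inspector.
    public VideoPlayer videoPlayer;

    void OnEnable()
    {
        // frameReady is only raised while sendFrameReadyEvents is true.
        videoPlayer.sendFrameReadyEvents = true;
        videoPlayer.frameReady += OnFrameReady;
        videoPlayer.clockResyncOccurred += OnClockResync;
    }

    void OnDisable()
    {
        videoPlayer.frameReady -= OnFrameReady;
        videoPlayer.clockResyncOccurred -= OnClockResync;
        videoPlayer.sendFrameReadyEvents = false;
    }

    // Matches FrameReadyEventHandler(VideoPlayer source, long frameIdx).
    private void OnFrameReady(VideoPlayer source, long frameIdx)
    {
        Debug.Log("Frame ready: " + frameIdx);
    }

    // Matches TimeEventHandler(VideoPlayer source, double seconds).
    private void OnClockResync(VideoPlayer source, double seconds)
    {
        Debug.Log("Clock resync: " + seconds);
    }
}
```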

Updated code

    using System.Collections;
    using System.Collections.Generic;
    using UnityEngine.UI;
    using UnityEngine.Video;
    using UnityEngine;
    using UnityEngine.EventSystems;

    /// <summary>
    /// Video playback control script
    /// </summary>
    public class CustomerVideoPlayer : MonoBehaviour
    {
        public RawImage rawImage;
        public Button btnPlay;
        public Button btnPause;
        public Button btnFornt;
        public Button btnBack;
        public Button btnNext;
        public Slider sliderVolume;
        public Slider sliderVideo;
        public Text txtVolume;
        public Text txtProgress;
        public Text txtCurrentProgress;

        // Object the player components are added to
        public GameObject obj;
        // Flag used to read the total video duration once
        public bool isShow;
        // Step size for forward/back
        public float numBer = 20f;

        // Time conversion (as used below, hour holds minutes and min holds seconds)
        private int hour, min;
        private float time;
        private float time_Count;
        private float time_Current;
        private bool isVideo;
        private VideoPlayer vPlayer;
        private AudioSource source;
        private string urlNetWork = @"D:\001.mp4";

        void Start()
        {
            // These two components must be added at runtime, otherwise there is no sound
            vPlayer = obj.AddComponent<VideoPlayer>();
            source = obj.AddComponent<AudioSource>();

            vPlayer.playOnAwake = false;
            source.playOnAwake = false;
            source.Pause();

            btnPlay.onClick.AddListener(delegate { OnClick(0); });
            btnPause.onClick.AddListener(delegate { OnClick(1); });
            btnFornt.onClick.AddListener(delegate { OnClick(2); });
            btnBack.onClick.AddListener(delegate { OnClick(3); });
            btnNext.onClick.AddListener(delegate { OnClick(4); });

            sliderVolume.value = source.volume;
            txtVolume.text = string.Format("{0:0}%", source.volume * 100);

            Init(urlNetWork);
        }

        /// <summary>
        /// Initialization
        /// </summary>
        /// <param name="url"></param>
        private void Init(string url)
        {
            isVideo = true;
            isShow = true;
            time_Count = 0;
            time_Current = 0;
            sliderVideo.value = 0;

            // Use URL mode
            vPlayer.source = VideoSource.Url;
            vPlayer.url = url;
            vPlayer.audioOutputMode = VideoAudioOutputMode.AudioSource;
            vPlayer.SetTargetAudioSource(0, source);

            // Unsubscribe first so repeated Init calls do not register the handler twice
            vPlayer.prepareCompleted -= OnPrepared;
            vPlayer.prepareCompleted += OnPrepared;
            vPlayer.Prepare();
        }

        /// <summary>
        /// Volume setting
        /// </summary>
        /// <param name="value"></param>
        public void AdjustVolume(float value)
        {
            source.volume = value;
            txtVolume.text = string.Format("{0:0}%", value * 100);
        }

        /// <summary>
        /// Adjust playback progress
        /// </summary>
        /// <param name="value"></param>
        public void AdjustVideoProgress(float value)
        {
            if (vPlayer.isPrepared)
            {
                vPlayer.time = (long)value;
                time = (float)vPlayer.time;
                hour = (int)time / 60;
                min = (int)time % 60;
                // Pass ints (not strings) so the D2 padding is applied
                txtProgress.text = string.Format("{0:D2}:{1:D2}", hour, min);
            }
        }

        /// <summary>
        /// Video control
        /// </summary>
        /// <param name="num"></param>
        private void OnClick(int num)
        {
            switch (num)
            {
                case 0:
                    vPlayer.Play();
                    Time.timeScale = 1;
                    break;
                case 1:
                    vPlayer.Pause();
                    Time.timeScale = 0;
                    break;
                case 2:
                    sliderVideo.value = sliderVideo.value + numBer;
                    break;
                case 3:
                    sliderVideo.value = sliderVideo.value - numBer;
                    break;
                case 4:
                    vPlayer.Stop();
                    Init(urlNetWork);
                    break;
                default:
                    break;
            }
        }

        void OnPrepared(VideoPlayer player)
        {
            player.Play();
            // Remove before adding so the listener is registered only once
            sliderVideo.onValueChanged.RemoveListener(AdjustVideoProgress);
            sliderVideo.onValueChanged.AddListener(AdjustVideoProgress);
        }

        void Update()
        {
            if (vPlayer.isPlaying && isShow)
            {
                rawImage.texture = vPlayer.texture;

                // Frame count / frame rate = total duration.
                // For a directly assigned local clip, VideoClip.length gives the total duration.
                sliderVideo.maxValue = vPlayer.frameCount / vPlayer.frameRate;

                time = sliderVideo.maxValue;
                hour = (int)time / 60;
                min = (int)time % 60;
                txtCurrentProgress.text = string.Format("/ {0:D2}:{1:D2}", hour, min);

                //sliderVideo.onValueChanged.AddListener(delegate { ChangeVideo(sliderVideo.value); });
                isShow = !isShow;
            }

            if (Mathf.Abs((int)vPlayer.time - (int)sliderVideo.maxValue) == 0)
            {
                vPlayer.frame = (long)vPlayer.frameCount;
                vPlayer.Stop();
                isVideo = false;
                return;
            }
            else if (isVideo && vPlayer.isPlaying)
            {
                // Advance the slider by one second for every second of playback
                time_Count += Time.deltaTime;
                if ((time_Count - time_Current) >= 1)
                {
                    sliderVideo.value += 1;
                    time_Current = time_Count;
                }
            }
        }
    }