Deploying KubeSphere v3.3.0 on an existing Kubernetes cluster under CentOS 8

I. Environment preparation

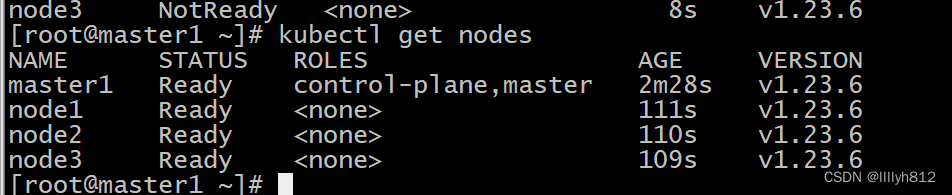

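1. Host inventory and environment

2. For how to set up the Kubernetes cluster, see the author's earlier article:

Kubernetes cluster setup

3. A Harbor image registry already exists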

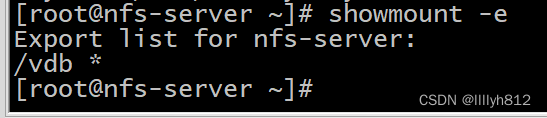

4. An NFS network share is available

II. Deploying KubeSphere

1. Operations on the master node

[root@master1 ~]# wget https://get.helm.sh/helm-v3.8.2-linux-amd64.tar.gz

--2022-10-18 21:23:03-- https://get.helm.sh/helm-v3.8.2-linux-amd64.tar.gz

Resolving get.helm.sh (get.helm.sh)... 152.199.39.108, 2606:2800:247:1cb7:261b:1f9c:2074:3c

Connecting to get.helm.sh (get.helm.sh)|152.199.39.108|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 13633605 (13M) [application/x-tar]

Saving to: ‘helm-v3.8.2-linux-amd64.tar.gz.1’

helm-v3.8.2-linux-amd64.tar.gz 100%[====================================================>] 13.00M 11.9MB/s in 1.1s

2022-10-18 21:23:05 (11.9 MB/s) - ‘helm-v3.8.2-linux-amd64.tar.gz.1’ saved [13633605/13633605]

[root@master1 ~]# tar -xf helm-v3.8.2-linux-amd64.tar.gz

[root@master1 ~]# mv linux-amd64/helm /usr/local/bin/

[root@master1 ~]# mkdir /root/helm

# Create the Helm application directory

[root@master1 ~]# echo 'export HELM_HOME=/root/helm' >> .bash_profile

[root@master1 ~]# source .bash_profile

[root@master1 ~]# echo $HELM_HOME

/root/helm

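# Set the HELM_HOME environment variable to that directory (Helm 3 no longer reads HELM_HOME, so this step is optional)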

[root@master1 ~]# helm version

version.BuildInfo{Version:"v3.8.2", GitCommit:"6e3701edea09e5d55a8ca2aae03a68917630e91b", GitTreeState:"clean", GoVersion:"go1.17.5"}

# Check the Helm version

[root@master1 ~]# helm repo add stable https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

"stable" has been added to your repositories

# Add the Helm repository

[root@master1 ~]# vim kubesphere-sc.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: fuseim.pri/ifs # or choose another name; must match the deployment's env PROVISIONER_NAME
parameters:
  archiveOnDelete: "false"

[root@master1 ~]# vim kubesphere-rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

[root@master1 ~]# vim kubesphere-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest # pull this image in advance
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              value: 172.20.251.155 # NFS server IP or hostname
            - name: NFS_PATH
              value: /vdb # directory exported by the NFS server
      volumes:
        - name: nfs-client-root
          nfs:
            server: 172.20.251.155 # NFS server IP or hostname
            path: /vdb # directory exported by the NFS server
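
The comment in the StorageClass says its provisioner must match the Deployment's PROVISIONER_NAME, and a mismatch leaves PVCs pending. A minimal sketch of a check that can be run before applying the files is shown here. The function names are hypothetical, and it assumes the two file layouts shown in this article (a top-level `provisioner:` key, and a `value:` line following `- name: PROVISIONER_NAME`).

```shell
#!/usr/bin/env bash
# Sketch only: compare the StorageClass provisioner with the Deployment's
# PROVISIONER_NAME. Function names are illustrative, not part of any tool.

# Print the value of the top-level "provisioner:" key (trailing comment dropped).
sc_provisioner() {
  awk '/^provisioner:/ { print $2; exit }' "$1"
}

# Print the "value:" that follows "- name: PROVISIONER_NAME" in the Deployment.
deploy_provisioner() {
  awk '/name:[[:space:]]*PROVISIONER_NAME/ { found = 1; next }
       found && /value:/ { print $2; exit }' "$1"
}

# Report whether both names are non-empty and identical.
check_provisioner_match() {
  local sc dep
  sc=$(sc_provisioner "$1")
  dep=$(deploy_provisioner "$2")
  if [ -n "$sc" ] && [ "$sc" = "$dep" ]; then
    echo "OK: provisioner '$sc' matches"
  else
    echo "MISMATCH: StorageClass='$sc' Deployment='$dep'"
    return 1
  fi
}

# Usage: check_provisioner_match kubesphere-sc.yaml kubesphere-deployment.yaml
```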

[root@master1 ~]# helm install nfs-subdir-external-provisioner nfs-subdir-external-provisioner/nfs-subdir-external-provisioner --set nfs.server=172.20.251.155 --set nfs.path=/vdb

NAME: nfs-subdir-external-provisioner

LAST DEPLOYED: Wed Oct 19 06:59:26 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None
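
The helm install command above refers to a chart repository named nfs-subdir-external-provisioner, while the steps so far only add the stable repository. A sketch of the presumably missing step follows, assuming the chart repository published by the kubernetes-sigs project. `RUN` and the function name are hypothetical helpers for this example: set `RUN=echo` to print the commands instead of executing them.

```shell
#!/usr/bin/env bash
# Sketch only: add the chart repository, then install the chart.
# RUN is a hypothetical switch; RUN=echo prints the commands (dry run).
RUN=${RUN:-}

install_nfs_provisioner() {
  local server=$1 path=$2
  $RUN helm repo add nfs-subdir-external-provisioner \
    https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner/
  $RUN helm repo update
  $RUN helm install nfs-subdir-external-provisioner \
    nfs-subdir-external-provisioner/nfs-subdir-external-provisioner \
    --set nfs.server="$server" --set nfs.path="$path"
}

# Usage: install_nfs_provisioner 172.20.251.155 /vdb
```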

[root@master1 ~]# docker pull quay.io/external_storage/nfs-client-provisioner:latest

Note: every node in the Kubernetes cluster must pull this image.

[root@master1 ~]# kubectl apply -f kubesphere-sc.yaml

storageclass.storage.k8s.io/kubesphere-data unchanged

[root@master1 ~]# kubectl apply -f kubesphere-rbac.yaml

serviceaccount/nfs-client-provisioner unchanged

clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner unchanged

clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner unchanged

role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner unchanged

rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner unchanged

[root@master1 ~]# kubectl apply -f kubesphere-deployment.yaml

Warning: resource deployments/nfs-client-provisioner is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

deployment.apps/nfs-client-provisioner configured

# Apply the YAML files

[root@master1 ~]# vim /etc/kubernetes/manifests/kube-apiserver.yaml

- kube-apiserver

# Add the following line after it

- --feature-gates=RemoveSelfLink=false

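# On a cluster deployed with kubeadm, the pod is reloaded automatically once the line is added.

# The apiserver installed by kubeadm is a Static Pod, so changes to its manifest take effect immediately.

# kubelet watches this file: after you modify /etc/kubernetes/manifests/kube-apiserver.yaml, kubelet automatically terminates the existing kube-apiserver-{nodename} Pod and creates a replacement Pod that uses the new parameters.

# If you have several Kubernetes master nodes, modify this file on every master and keep the parameters consistent across nodes.

# Note: if the api-server fails to start, apply the change again.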
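
Because the same edit has to be repeated on every master, it can help to script it. The following is a minimal sketch, assuming GNU sed (as shipped with CentOS 8) and a manifest that contains a `- kube-apiserver` list item and no other `--feature-gates` flag. The function name is hypothetical. It deliberately writes no backup file into the manifests directory, since kubelet would treat a copy there as another static pod manifest.

```shell
#!/usr/bin/env bash
# Sketch only: insert the RemoveSelfLink feature gate right after the
# "- kube-apiserver" line, keeping the same indentation. Safe to run twice.
add_selflink_gate() {
  local manifest=$1
  # Skip if the flag is already present so repeated runs do not duplicate it.
  if grep -q -- '--feature-gates=RemoveSelfLink=false' "$manifest"; then
    echo "already present"
    return 0
  fi
  # & is the matched line, \1 its leading spaces, \n a newline (GNU sed).
  sed -i 's/^\( *\)- kube-apiserver$/&\n\1- --feature-gates=RemoveSelfLink=false/' "$manifest"
  echo "added"
}

# Usage (on each master): add_selflink_gate /etc/kubernetes/manifests/kube-apiserver.yaml
```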

[root@master1 ~]# curl -L -O https://github.com/kubesphere/ks-installer/releases/download/v3.3.0/images-list.txt

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

100 5401 100 5401 0 0 3877 0 0:00:01 0:00:01 --:--:-- 3877

# Download the text file listing the Docker images that KubeSphere needs
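
Since the installation later points at the local Harbor registry, the images in images-list.txt have to be available there. The sketch below prints the pull, tag, and push commands for each listed image. It is an illustration rather than the official offline tooling, and it assumes that lines starting with `#` are section headers, that image names carry no registry host prefix, and that the matching Harbor projects already exist. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: print docker commands that mirror every image in a list file
# into a private registry. Empty lines and lines starting with '#' are skipped.
mirror_commands() {
  local list=$1 registry=$2 image
  while IFS= read -r image || [ -n "$image" ]; do
    case $image in
      ''|'#'*) continue ;;
    esac
    echo "docker pull $image"
    echo "docker tag $image $registry/$image"
    echo "docker push $registry/$image"
  done < "$list"
}

# Usage: review the output first, then pipe it to a shell:
#   mirror_commands images-list.txt 172.20.251.150 | sh
```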

[root@master1 ~]# dnf -y install git

# Install git

[root@master1 ~]# git clone https://github.com/kubesphere/ks-installer.git

Cloning into 'ks-installer'...

remote: Enumerating objects: 26267, done.

remote: Counting objects: 100% (611/611), done.

remote: Compressing objects: 100% (304/304), done.

remote: Total 26267 (delta 304), reused 515 (delta 259), pack-reused 25656

Receiving objects: 100% (26267/26267), 92.98 MiB | 7.12 MiB/s, done.

Resolving deltas: 100% (14840/14840), done.

# Clone the ks-installer project

[root@master1 ~]# curl -L -O https://github.com/kubesphere/ks-installer/releases/download/v3.3.0/kubesphere-installer.yaml

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

100 4553 100 4553 0 0 3122 0 0:00:01 0:00:01 --:--:-- 0

[root@master1 ~]# curl -L -O https://github.com/kubesphere/ks-installer/releases/download/v3.3.0/cluster-configuration.yaml

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

100 10021 100 10021 0 0 7183 0 0:00:01 0:00:01 --:--:-- 754k

# Download the two manifests used for the installation

[root@master1 ~]# vim cluster-configuration.yaml

ctl -n kubesphere-system get cm kubesphere-config -o yaml | grep -v "apiVersion" | grep jwtSecret" on the Host Cluster.

local_registry: "172.20.251.150" # Add your private registry address if it is needed.

# Set local_registry to the address of the local Harbor registry

[root@master1 ~]# sed -i -E "s#^(\s*)image: kubesphere.*/ks-installer:.*#\1image: 172.20.251.150/kubesphere/ks-installer:v3.3.0#" kubesphere-installer.yaml

# Use sed to point the ks-installer image at the local Harbor registry
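
The substitution is easy to get wrong because the `image:` line must keep its YAML indentation. As a sketch, the same rewrite can be wrapped in a function that preserves the leading whitespace and then prints the image lines for review. The function name and its parameters are hypothetical, and GNU sed is assumed.

```shell
#!/usr/bin/env bash
# Sketch only: point the ks-installer image at a private registry while
# keeping the original indentation, then show the resulting image lines.
rewrite_installer_image() {
  local manifest=$1 registry=$2 tag=$3
  sed -i -E \
    "s#^([[:space:]]*)image: kubesphere.*/ks-installer:.*#\1image: ${registry}/kubesphere/ks-installer:${tag}#" \
    "$manifest"
  grep -n 'image:' "$manifest"
}

# Usage: rewrite_installer_image kubesphere-installer.yaml 172.20.251.150 v3.3.0
```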

[root@master1 ~]# kubectl apply -f kubesphere-installer.yaml

customresourcedefinition.apiextensions.k8s.io/clusterconfigurations.installer.kubesphere.io created

namespace/kubesphere-system created

serviceaccount/ks-installer created

clusterrole.rbac.authorization.k8s.io/ks-installer created

clusterrolebinding.rbac.authorization.k8s.io/ks-installer created

deployment.apps/ks-installer created

[root@master1 ~]# kubectl apply -f cluster-configuration.yaml

clusterconfiguration.installer.kubesphere.io/ks-installer created

# Apply the two YAML files with kubectl

[root@master1 ~]# kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f

# Follow the installation log

2. Operations on the worker nodes

[root@node1 ~]# docker pull quay.io/external_storage/nfs-client-provisioner:latest

[root@node2 ~]# docker pull quay.io/external_storage/nfs-client-provisioner:latest

[root@node3 ~]# docker pull quay.io/external_storage/nfs-client-provisioner:latest
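
Running the same pull on each node by hand is repetitive. A small loop from the master can do it instead, sketched here under the assumption that root SSH access to the nodes is already set up. `RUN` and the function name are hypothetical: set `RUN=echo` for a dry run that only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch only: pull one image on several nodes over SSH.
# RUN is a hypothetical switch; RUN=echo prints the commands (dry run).
RUN=${RUN:-}

pull_on_nodes() {
  local image=$1 node
  shift
  for node in "$@"; do
    $RUN ssh "root@$node" docker pull "$image"
  done
}

# Usage:
#   pull_on_nodes quay.io/external_storage/nfs-client-provisioner:latest node1 node2 node3
```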

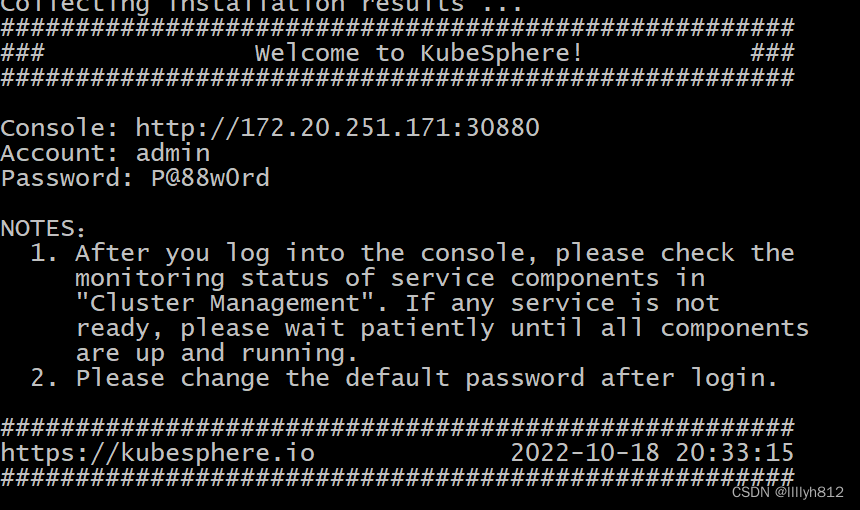

III. Verifying the result

[root@master1 ~]# kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f

# Follow the installation log

The installation has succeeded when the output looks like the figure below.

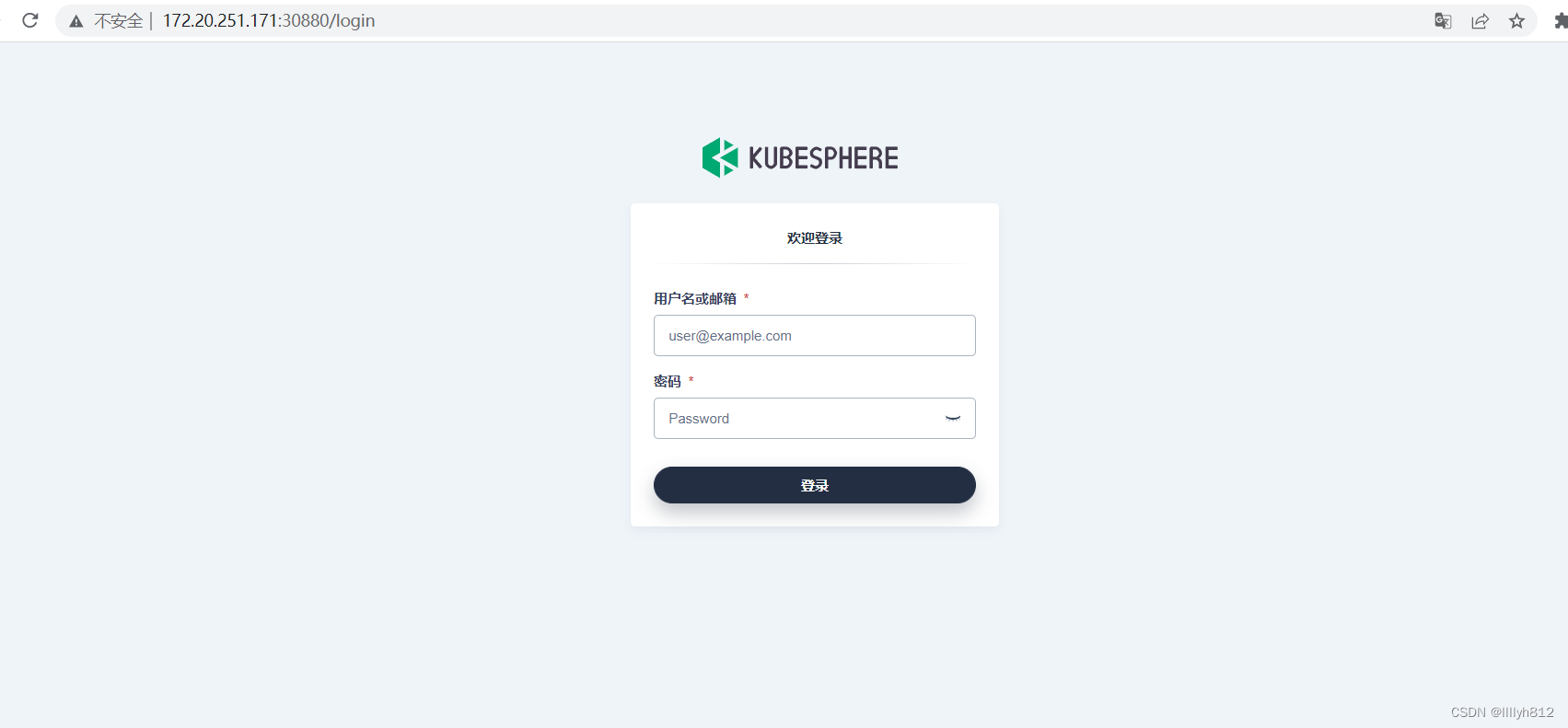
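
Instead of watching the log by eye, the success check can be scripted. The sketch below polls the installer log until a success banner appears. It assumes the banner contains the text "Welcome to KubeSphere", as the installer normally prints at the end, and that the installer runs as the ks-installer deployment created earlier. The function names and the retry parameters are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: wait until the ks-installer log shows the success banner.

# Succeeds if the log text on stdin contains the (assumed) success banner.
install_finished() {
  grep -q 'Welcome to KubeSphere'
}

# Poll the installer log: $1 = number of attempts, $2 = seconds between attempts.
wait_for_install() {
  local tries=${1:-60} delay=${2:-30} i=0
  while [ "$i" -lt "$tries" ]; do
    if kubectl logs -n kubesphere-system deploy/ks-installer 2>/dev/null | install_finished; then
      echo "KubeSphere installation finished"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "timed out waiting for the installer"
  return 1
}

# Usage: wait_for_install 60 30
```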

Access the console from a web browser.

The deployment is complete.
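
For the web access step, a quick way to find the console address is sketched below. It assumes the default setup in which the ks-console service is exposed as NodePort 30880. The actual port can be confirmed with `kubectl get svc -n kubesphere-system ks-console`. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: build the console URL and probe it.

# Print the console URL for a node IP; the port defaults to the assumed NodePort 30880.
console_url() {
  echo "http://$1:${2:-30880}"
}

# Print the HTTP status code returned by the console (requires curl).
probe_console() {
  curl -s -o /dev/null -w '%{http_code}\n' "$(console_url "$@")"
}

# Usage: replace <node-ip> with the address of any cluster node
#   probe_console <node-ip>
```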