设置Distribution clean up 每次删除Command的数量

Replication Job “Distribution clean up: distribution” 默认设置是,每10minutes运行一次,每次删除2000个Command。这对于有1.9亿条Commands的distribution来说,显得力不从心。需要修改 sp [distribution].[dbo].[sp_MSdelete_publisherdb_trans],重新设置每次删除的Commands 数量,我的设置是每次删除20000 command。

设置的过程比较简单,在PROCEDURE [dbo].[sp_MSdelete_publisherdb_trans]中,查找2000,替换为 20000,需要修改三个地方

1, DELETE TOP(20000) MSrepl_commands WITH (PAGLOCK)

WHILE 1 = 1 BEGIN DELETE TOP(20000) MSrepl_commands WITH (PAGLOCK) from MSrepl_commands with (INDEX(ucMSrepl_commands)) WHERE publisher_database_id = @publisher_database_id AND xact_seqno IN (SELECT DISTINCT snap_xact_seqno FROM @snapshot_xact_seqno) OPTION (MAXDOP 1) SELECT @row_count = @@rowcount -- Update output parameter SELECT @num_commands = @num_commands + @row_count IF @row_count < 20000 -- passed the result set. We're done BREAK END

2,DELETE TOP(20000) MSrepl_commands WITH (PAGLOCK)

WHILE 1 = 1 BEGIN if @has_immediate_sync = 0 DELETE TOP(20000) MSrepl_commands WITH (PAGLOCK) from MSrepl_commands with (INDEX(ucMSrepl_commands)) where publisher_database_id = @publisher_database_id and xact_seqno <= @max_xact_seqno and (type & ~@snapshot_bit) not in (@directory_type, @alt_directory_type) and (type & ~@replpost_bit) <> @scriptexec_type OPTION (MAXDOP 1) else -- Use nolock hint on subscription table to avoid deadlock -- with snapshot agent. DELETE TOP(20000) MSrepl_commands WITH (PAGLOCK) from MSrepl_commands with (INDEX(ucMSrepl_commands)) where publisher_database_id = @publisher_database_id and xact_seqno <= @max_xact_seqno and -- do not delete directory, alt directory or script exec commands. they are deleted -- above. We have to do this because we use a (nolock) hint and we have to make sure we -- don't delete dir commands when the file has not been cleaned up in the code above. It's -- ok to delete snap commands that are out of retention and perform lazy delete of dir (type & ~@snapshot_bit) not in (@directory_type, @alt_directory_type) and (type & ~@replpost_bit) <> @scriptexec_type and ( -- Select the row if it is older than max retention. xact_seqno <= @max_immediate_sync_seqno or -- Select the snap cmd if it is not for immediate_sync article -- We know the command is for immediate_sync publication if -- the snapshot tran include articles that has virtual -- subscritptions. (use subscritpion table to avoid join with -- article and publication table). We skip sync tokens because -- they are never pointed to by subscriptions... ( (type & @snapshot_bit) <> 0 and (type & ~@snapshot_bit) not in (@syncinit, @syncdone) and not exists (select * from MSsubscriptions s with (nolock) where s.publisher_database_id = @publisher_database_id and s.article_id = MSrepl_commands.article_id and s.subscriber_id < 0) ) ) OPTION (MAXDOP 1) select @row_count = @@rowcount -- Update output parameter select @num_commands = @num_commands + @row_count IF @row_count < 20000 -- passed the result set. We're done BREAK END

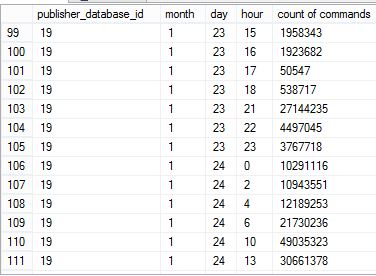

3,使用Script 查看Command的分布图

USE distribution GO SELECT T.[publisher_database_id], DATEPART(mm, [entry_time]) 'month', DATEPART(dd, [entry_time]) 'day', DATEPART(hh, [entry_time]) 'hour', COUNT(C.[xact_seqno]) 'count of commands' FROM [dbo].[MSrepl_transactions](nolock) T INNER JOIN [dbo].[MSrepl_commands](nolock) C ON T.[xact_seqno] = C.[xact_seqno] and T.publisher_database_id=c.publisher_database_id GROUP BY T.[publisher_database_id], DATEPART(mm, [entry_time]), DATEPART(dd, [entry_time]), DATEPART(hh, [entry_time]) ORDER BY 1, 2, 3, 4

附上本机的查询结果

引用文档《 How to resolve when Distribution Database is growing huge (+25gig)》

Yes, I know, huge database is kind of relative, but generally if you see Distribution database growing more the 25gig it means the Cleanup processes is having a hard time deleting replicated transactions. I’ll cover the how and why on Cleanup processes later, but for now I wanted to post a technique we’ve used to purge rows from the Distribution database. This solution involves modifying the SQL Replication stored procedures to increase the number or rows being deleted per transaction. If you’re uncomfortable making the code change, skip down to STEP 7).

This first posting coverage a “conservative” approach. Later I’m post steps for a more “aggressive” solution.

1) script msrepl_commands cleanup proc and save original sp code

sp_helptext sp_MSdelete_publisherdb_trans

2) change from CREATE to ALTER

ALTER PROCEDURE sp_MSdelete_publisherdb_trans

3) change all 3 locations from 2000 to 100000 rows

DELETE TOP(2000) MSrepl_commands . . .

4) script msrepl_transaction cleanup proc and save original sp code

sp_helptext sp_MSdelete_dodelete

5) change from CREATE to ALTER

ALTER PROCEDURE sp_MSdelete_dodelete

6) change both locations from 5000 to 100000 rows

delete TOP(5000) MSrepl_transactions . . .

7) Determine oldest day containing transactions

USE distribution GO SELECT T.[publisher_database_id], DATEPART(mm, [entry_time]) 'month', DATEPART(dd, [entry_time]) 'day', DATEPART(hh, [entry_time]) 'hour', COUNT(C.[xact_seqno]) 'count of commands' FROM [dbo].[MSrepl_transactions](nolock) T INNER JOIN [dbo].[MSrepl_commands](nolock) C ON T.[xact_seqno] = C.[xact_seqno] GROUP BY T.[publisher_database_id], DATEPART(mm, [entry_time]), DATEPART(dd, [entry_time]), DATEPART(hh, [entry_time]) ORDER BY 1, 2, 3, 4

8) Execute cleanup via SSMS or a TSQL job to delete JUST oldest day. (24 hours @ 5 days = 120), then continue to reduce the @max_distretention valued by a few hours for each run.

EXEC dbo.sp_MSdistribution_cleanup @min_distretention = 0, @max_distretention = 120

Example output: (4 hours to removed 340million rows)

Removed 3493 replicated transactions consisting of 343877158 statements in 15043 seconds (22859 rows/sec).