承接上篇来探讨如何提高C++运行速度

有三种方向可提高C++运行速度:语言、算法和硬件

1、语言方向:函数调用使用指针传递代替向量拷贝(节约数据拷贝时间)

皮尔逊相关系数函数调用指针传递版如下

#include <iostream> #include <iomanip> #include <vector> #include <cmath> #include <opencv2/opencv.hpp> using namespace std; using namespace cv; // 计算平均值 double mean(vector<double> *v) { double sum = 0; for (int i = 0; i < (*v).size(); i++) { sum += (*v)[i]; } return sum / (*v).size(); } // 计算协方差 double covariance(vector<double> *x, vector<double> *y) { double x_mean = mean(x); double y_mean = mean(y); double sum = 0; for (int i = 0; i < (*x).size(); i++) { sum += ((*x)[i] - x_mean) * ((*y)[i] - y_mean); } return sum / (*x).size(); } // 计算相关系数 double correlation(vector<double> *x, vector<double> *y) { double cov = covariance(x, y); double x_std = sqrt(covariance(x, x)); double y_std = sqrt(covariance(y, y)); double res = 0; if (x_std != 0 && y_std != 0) res = cov / (x_std * y_std); return res; } //二维向量转成一维向量 vector<double> vector2to1(vector<vector<double>> *a) { int n = (*a).size(); // a的行数 int m = (*a)[0].size(); // a的列数 vector<double> b; for (int i = 0; i < n; i++) for (int j = 0; j < m; j++) b.push_back((*a)[i][j]); return b; } vector<vector<double>> sub_x; //截取二维向量指定的区域 vector<vector<double>>* vector_sub(vector<vector<double>> *a, int start_row, int start_col, int end_row, int end_col) { sub_x.clear(); for (int i = start_row; i < end_row; i++) { vector<double> row; for (int j = start_col; j < end_col; j++) { row.push_back((*a)[i][j]); } sub_x.push_back(row); } return &sub_x; } // 计算两个矩阵的归一化互相关系数 vector<vector<double>> correlation2(vector<vector<double>> x, vector<vector<double>> y) { int N1 = x.size(); // x的行数 int N2 = x[0].size(); // x的列数 int M1 = y.size(); // y的行数 int M2 = y[0].size(); // y的列数 int N = N1 + M1 - 1; // z的行数 int M = N2 + M2 - 1; // z的列数 vector<vector<double>> z(N, vector<double>(M, 0)); // 初始化z //补0的行 for (int i = 0; i < N1 - 1; i++) { y.insert(y.begin(), vector<double>(M2, 0));//在y开始插入1行10元素 y.push_back(vector<double>(M2, 0));//在y尾部追加1行0元素 } //补0的列 for (int i = 0; i < N2 - 1; i++) { for (int i = 0; i < y.size(); i++) { y[i].insert(y[i].begin(), 0);//在头部补0 y[i].push_back(0);//在尾部补0 } } vector<double> x1 = vector2to1(&x); vector<double> y1; for (int n = 0; n < N; n++) for (int m = 0; m < M; m++) { y1 = vector2to1(vector_sub(&y, n, m, n + N1, m + N2)); z[n][m] = correlation(&x1, &y1); } return z; } int main() { vector<vector<double>> img1; Mat image1 = imread("C:/Users/MingYi-LZQ/Desktop/3.tif", IMREAD_UNCHANGED); if (image1.empty()) { cout << "Could not open or find the image" << endl; return -1; } img1.resize(image1.rows, vector<double>(image1.cols)); for (int i = 0; i < image1.rows; i++) { for (int j = 0; j < image1.cols; j++) { img1[i][j] = (double)image1.at<uchar>(i, j); } } vector<vector<double>> img2; Mat image2 = imread("C:/Users/MingYi-LZQ/Desktop/4.tif", IMREAD_UNCHANGED); if (image2.empty()) { cout << "Could not open or find the image" << endl; return -1; } img2.resize(image2.rows, vector<double>(image2.cols)); for (int i = 0; i < image2.rows; i++) { for (int j = 0; j < image2.cols; j++) { img2[i][j] = (double)image2.at<uchar>(i, j); } } vector<vector<double>> z = correlation2(img1, img2); double max_corr = 0; int x_peak = 0; int y_peak = 0; vector<vector<double>> offset; for (int i = 0; i < z.size(); i++) { for (int j = 0; j < z[0].size(); j++) { if (z[i][j] > max_corr) { max_corr = z[i][j];//找到最大的相关系数 x_peak = j + 1;//最大相关系数对应的x坐标 y_peak = i + 1;//最大相关系数对应的y坐标 } } } //计算出实际x y的偏移量 vector<double> temp; temp.push_back(x_peak - img1.size()); temp.push_back(y_peak - img1[0].size()); offset.push_back(temp); return 0; }

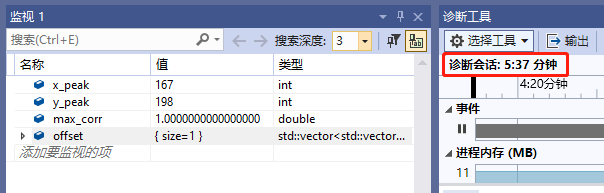

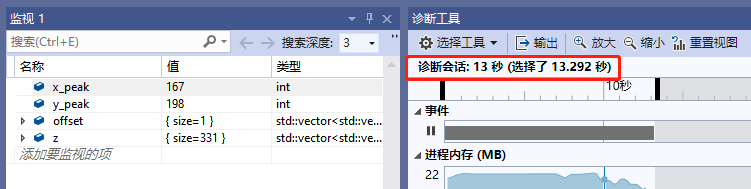

指针传递运行结果-耗时5分37秒

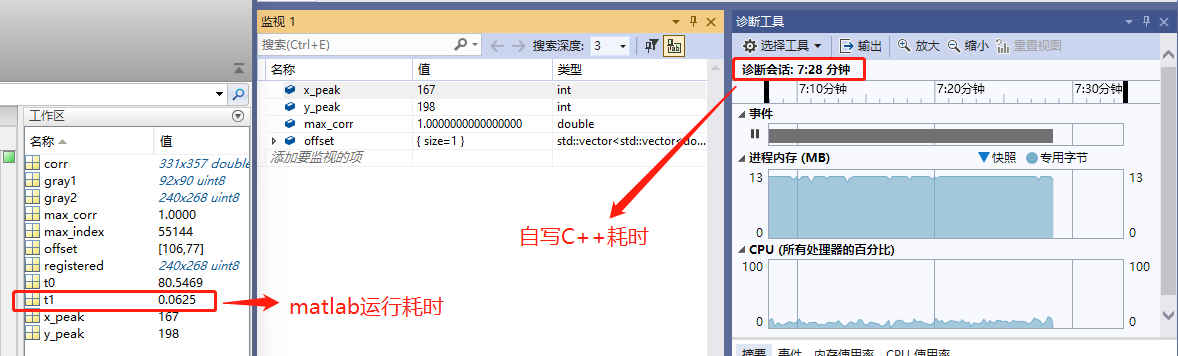

向量传递运行结果-耗时7分28秒

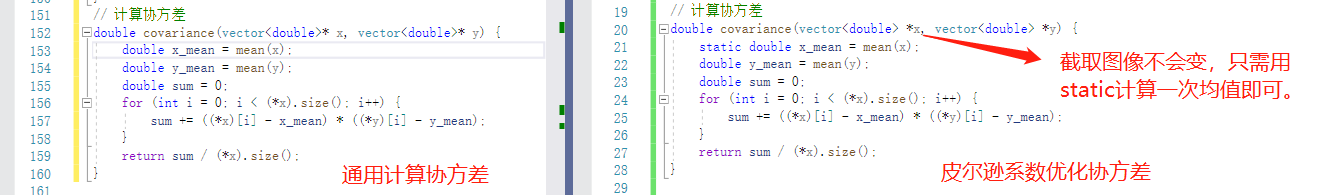

优化多余步骤

优化后的代码

#include <iostream> #include <iomanip> #include <vector> #include <cmath> #include <opencv2/opencv.hpp> using namespace std; using namespace cv; // 计算平均值 double mean(vector<double>* v) { double sum = 0; for (int i = 0; i < (*v).size(); i++) { sum += (*v)[i]; } return sum / (*v).size(); } // 计算协方差 double covariance(vector<double>* x, vector<double>* y) { double x_mean = mean(x); double y_mean = mean(y); double sum = 0; for (int i = 0; i < (*x).size(); i++) { sum += ((*x)[i] - x_mean) * ((*y)[i] - y_mean); } return sum / (*x).size(); } // 计算相关系数 double correlation(vector<double>* x, vector<double>* y) { double cov = covariance(x, y); double x_std = sqrt(covariance(x, x)); double y_std = sqrt(covariance(y, y)); double res = 0; if (x_std != 0 && y_std != 0) res = cov / (x_std * y_std); return res; } // 计算两个矩阵的归一化互相关系数 vector<vector<double>> correlation2(vector<vector<double>> x, vector<vector<double>> y) { int N1 = x.size(); // x的行数 int N2 = x[0].size(); // x的列数 int M1 = y.size(); // y的行数 int M2 = y[0].size(); // y的列数 int N = N1 + M1 - 1; // z的行数 int M = N2 + M2 - 1; // z的列数 vector<vector<double>> z(N, vector<double>(M, 0)); // 初始化z //补0的行 for (int i = 0; i < N1 - 1; i++) { y.insert(y.begin(), vector<double>(M2, 0));//在y开始插入1行10元素 y.push_back(vector<double>(M2, 0));//在y尾部追加1行0元素 } //补0的列 for (int i = 0; i < N2 - 1; i++) { for (int i = 0; i < y.size(); i++) { y[i].insert(y[i].begin(), 0);//在头部补0 y[i].push_back(0);//在尾部补0 } } vector<double> x1; //二维向量x转成一维向量x1 for (int i = 0; i < N1; i++) for (int j = 0; j < N2; j++) x1.push_back(x[i][j]); vector<double> y1(N1*N2); for (int n = 0; n < N; n++) for (int m = 0; m < M; m++) { //从原始图像上直接提取N1*N2数据存放到y1中 for (int i = 0; i < N1; i++) { for (int j = 0; j < N2; j++) { y1[i * N2 + j] = y[n + i][m + j]; } } z[n][m] = correlation(&x1, &y1);//计算x与y1的相关系数并存到对应z相关系数向量中 } return z; } int main() { vector<vector<double>> img1; Mat image1 = imread("C:/Users/MingYi-LZQ/Desktop/3.tif", IMREAD_UNCHANGED); if (image1.empty()) { cout << "Could not open or find the image" << endl; return -1; } img1.resize(image1.rows, vector<double>(image1.cols)); for (int i = 0; i < image1.rows; i++) { for (int j = 0; j < image1.cols; j++) { img1[i][j] = (double)image1.at<uchar>(i, j); } } vector<vector<double>> img2; Mat image2 = imread("C:/Users/MingYi-LZQ/Desktop/4.tif", IMREAD_UNCHANGED); if (image2.empty()) { cout << "Could not open or find the image" << endl; return -1; } img2.resize(image2.rows, vector<double>(image2.cols)); for (int i = 0; i < image2.rows; i++) { for (int j = 0; j < image2.cols; j++) { img2[i][j] = (double)image2.at<uchar>(i, j); } } vector<vector<double>> z = correlation2(img1, img2); double max_corr = 0; int x_peak = 0; int y_peak = 0; vector<vector<double>> offset; for (int i = 0; i < z.size(); i++) { for (int j = 0; j < z[0].size(); j++) { if (z[i][j] > max_corr) { max_corr = z[i][j];//找到最大的相关系数 x_peak = j + 1;//最大相关系数对应的x坐标 y_peak = i + 1;//最大相关系数对应的y坐标 } } } //计算出实际x y的偏移量 vector<double> temp; temp.push_back(x_peak - img1.size()); temp.push_back(y_peak - img1[0].size()); offset.push_back(temp); return 0; }

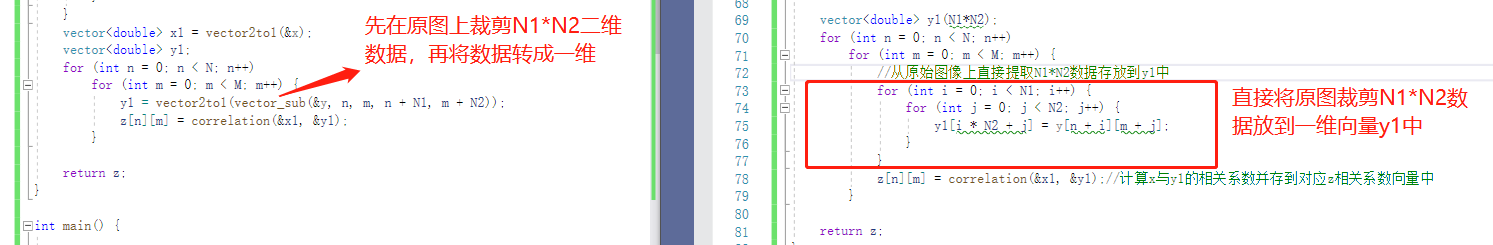

运行结果

2、算法方向:优化计算截取图像的均值

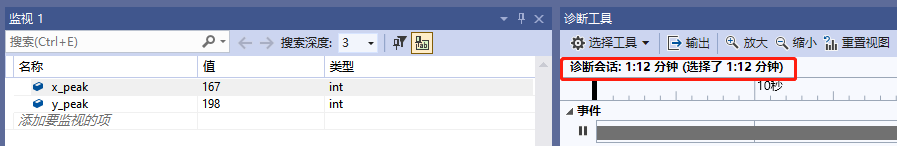

运行结果

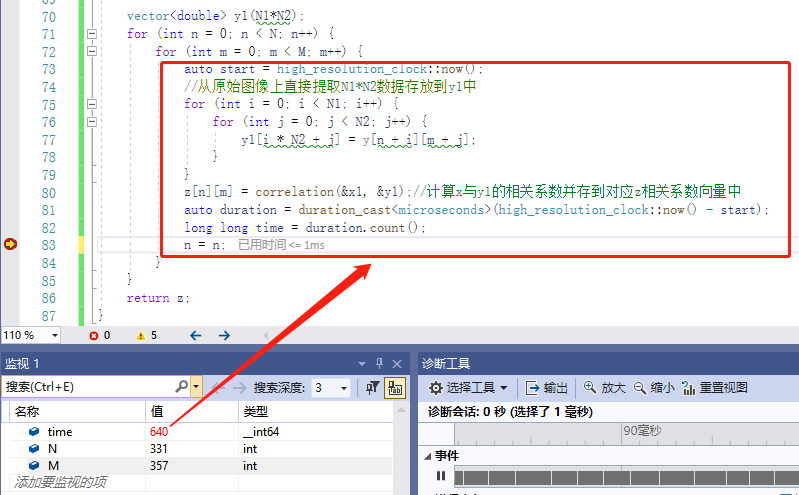

我们可以发现不管是优化语言还是算法,代码的运行速度都没有较大的提升,优化后的1分12秒比matlab的0.0625s还是相差甚远。导致这种悬殊的根本原因是这两个for代码

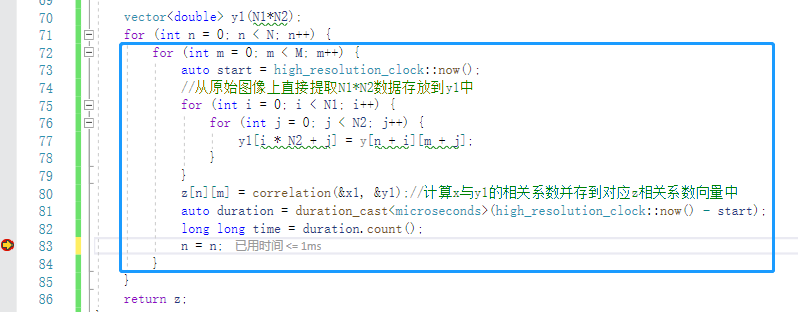

执行一次红框内的代码大约640us,一共要执行N*M遍。这两个for循环运行时间大约 = N * M * time / 1000 / 1000秒 = 331*357*640/1000/1000= 75秒。如果把下图蓝框内的代码作为一个线程,开辟N(331)个线程来同时运行,那岂不是75/331 = 0.22秒就能执行完毕?

3、硬件方向:使用多线程

使用CPU多线程的代码如下

#include <iostream> #include <iomanip> #include <vector> #include <cmath> #include <thread> #include <chrono> #include <opencv2/opencv.hpp> using namespace std; using namespace std::chrono; using namespace cv; // 计算平均值 double mean(vector<double> *v) { double sum = 0; for (int i = 0; i < (*v).size(); i++) { sum += (*v)[i]; } return sum / (*v).size(); } // 计算协方差 double covariance(vector<double> *x, vector<double> *y) { static double x_mean = mean(x); double y_mean = mean(y); double sum = 0; for (int i = 0; i < (*x).size(); i++) { sum += ((*x)[i] - x_mean) * ((*y)[i] - y_mean); } return sum / (*x).size(); } // 计算相关系数 double correlation(vector<double> *x, vector<double> *y) { double cov = covariance(x, y); double x_std = sqrt(covariance(x, x)); double y_std = sqrt(covariance(y, y)); double res = 0; if (x_std != 0 && y_std != 0) res = cov / (x_std * y_std); return res; } /// <summary> /// 定义并行函数,计算相关系数 /// </summary> /// <param name="x">截取图像转换成一维向量</param> /// <param name="y">原始图像二维向量</param> /// <param name="z">输出二维皮尔逊相关系数</param> /// <param name="n">补0后图像的行数。每个线程处理一行M列的相关系数</param> /// <param name="M">补0后图像的列数</param> /// <param name="N1">截取图像的行数</param> /// <param name="N2">截取图像的列数</param> void parallel_function(vector<double>& x, vector<vector<double>>& y, vector<vector<double>>& z, int n, int M,int N1, int N2) { vector<double> y1(N1*N2);//定义原始图像上一行从左到右N1*N2的二维向量转一维 for (int m = 0; m < M; m++) {//从左到右处理M列截取图像与y1的相关系数 //从原始图像上提取N1*N2数据存放到y1中 for (int i = 0; i < N1; i++) { for (int j = 0; j < N2; j++) { y1[i*N2 + j] = y[n + i][m + j]; } } z[n][m] = correlation(&x, &y1);//计算x与y1的相关系数并存到对应z相关系数向量中 } } // 计算两个矩阵的归一化互相关系数 vector<vector<double>> correlation2(vector<vector<double>> x, vector<vector<double>> y) { int N1 = x.size(); // x的行数 int N2 = x[0].size(); // x的列数 int M1 = y.size(); // y的行数 int M2 = y[0].size(); // y的列数 int N = N1 + M1 - 1; // z的行数 int M = N2 + M2 - 1; // z的列数 vector<vector<double>> z(N, vector<double>(M, 0)); // 初始化z //补0的行 for (int i = 0; i < N1 - 1; i++) { y.insert(y.begin(), vector<double>(M2, 0));//在y开始插入1行10元素 y.push_back(vector<double>(M2, 0));//在y尾部追加1行0元素 } //补0的列 for (int i = 0; i < N2 - 1; i++) { for (int i = 0; i < y.size(); i++) { y[i].insert(y[i].begin(), 0);//在头部补0 y[i].push_back(0);//在尾部补0 } } vector<double> x1; //二维向量x转成一维向量x1 for (int i = 0; i < N1; i++) for (int j = 0; j < N2; j++) x1.push_back(x[i][j]); int num_threads = N * M; vector<thread> threads; // 创建N个线程,将补0后的图像分成N行M列,每个线程处理一行数据 for (int n = 0; n < N; n++) { threads.push_back(thread(parallel_function, ref(x1), ref(y), ref(z), n, M, N1, N2)); } // 等待所有线程执行完毕 for (auto& t : threads) { t.join(); } return z; } int main() { vector<vector<double>> img1; Mat image1 = imread("C:/Users/MingYi-LZQ/Desktop/3.tif", IMREAD_UNCHANGED); if (image1.empty()) { cout << "Could not open or find the image" << endl; return -1; } img1.resize(image1.rows, vector<double>(image1.cols)); for (int i = 0; i < image1.rows; i++) { for (int j = 0; j < image1.cols; j++) { img1[i][j] = (double)image1.at<uchar>(i, j); } } vector<vector<double>> img2; Mat image2 = imread("C:/Users/MingYi-LZQ/Desktop/4.tif", IMREAD_UNCHANGED); if (image2.empty()) { cout << "Could not open or find the image" << endl; return -1; } img2.resize(image2.rows, vector<double>(image2.cols)); for (int i = 0; i < image2.rows; i++) { for (int j = 0; j < image2.cols; j++) { img2[i][j] = (double)image2.at<uchar>(i, j); } } vector<vector<double>> z = correlation2(img1, img2); double max_corr = 0; int x_peak = 0; int y_peak = 0; vector<vector<double>> offset; for (int i = 0; i < z.size(); i++) { for (int j = 0; j < z[0].size(); j++) { if (z[i][j] > max_corr) { max_corr = z[i][j];//找到最大的相关系数 x_peak = j + 1;//最大相关系数对应的x坐标 y_peak = i + 1;//最大相关系数对应的y坐标 } } } //计算出实际x y的偏移量 vector<double> temp; temp.push_back(x_peak - img1[0].size()); temp.push_back(y_peak - img1.size()); offset.push_back(temp); return 0; }

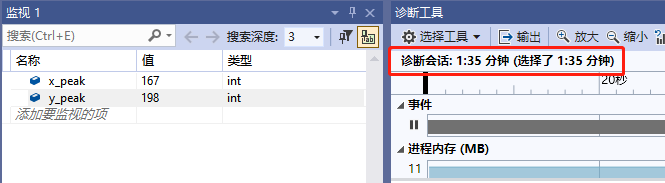

运行结果13秒,与75秒相比大约只提升了6倍,并不是想象的提高了331倍。那这是为什么呢?

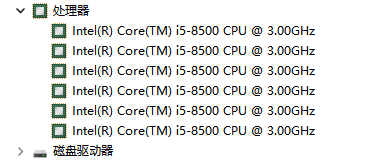

原因是我的电脑真正的硬件线程只有6个

虽然代码里开辟了331个线程,但这331个线程只能一次同时运行6个,所以使用cpu多线程最大能提升6倍的时间。那matlab是怎么在我的电脑上只用了0.0625秒完成计算的呢?因为我的电脑里还装了一块显卡

虽然这块显卡的时钟只有1.5GHz比CPU的3.0GHz慢了一倍,但是CUDA核心数是1280,是CPU线程数的200倍,即使频率慢一倍但整体还是会快100倍,优化后的代码如果放在GPU上运行大概是13/100 = 0.13秒运行完。

使用GPU多线程的代码如下

#include "cuda_runtime.h" #include "device_launch_parameters.h" #include <stdio.h> #include <stdlib.h> #include <iostream> #include <iomanip> #include <vector> #include <cmath> #include <opencv2/opencv.hpp> using namespace std; using namespace cv; using namespace chrono; /// <summary> /// 计算截取图像的均值 /// </summary> /// <param name="v">原始图像</param> /// <param name="row">截取图像在原始图像上的行</param> /// <param name="col">截取图像在原始图像上的列</param> /// <param name="high">截取图像的高</param> /// <param name="width">截取图像的宽</param> /// <param name="width_ori">原始图像的宽</param> /// <returns>截取图像的均值</returns> __device__ double mean(double* v, int row, int col, int high, int width, int width_ori) { double sum = 0; double t[16]; for (int m = 0; m < 16; m++) t[m] = v[m]; for (int i = 0; i < high; i++) { for (int j = 0; j < width; j++) sum += v[(row + i) * width_ori + col + j]; } return sum / (high * width); } /// <summary> /// 截取图像在原始图像上从左到右从上到下,计算每次移动两张图像的皮尔逊互相关系数。(此函数在GPU上运行) /// </summary> /// <param name="mat1">截取图像</param> /// <param name="mat2">原始图像</param> /// <param name="result">计算结果</param> /// <param name="high">截取图像的高</param> /// <param name="width">截取图像的宽</param> /// <param name="width_ori">原始图像的宽</param> /// <returns></returns> __global__ void correlation2(double* mat1, double* mat2, double* result, int high, int width, int width_ori) { const int bid = blockIdx.x; const int tid = threadIdx.x; int row = bid; int col = tid; //计算 mat1与mat2的协方差 double x_mean = mean(mat1, 0, 0, high, width, width); double y_mean = mean(mat2, row, col, high, width, width_ori); double sum = 0; for (int i = 0; i < high; i++) { for (int j = 0; j < width; j++) sum += (mat1[i * width + j] - x_mean) * (mat2[(row + i) * width_ori + col + j] - y_mean); } double cov = sum / (high * width); //计算截取图像mat1的标准差 sum = 0; for (int i = 0; i < high; i++) { for (int j = 0; j < width; j++) sum += (mat1[i * width + j] - x_mean) * (mat1[i * width + j] - x_mean); } double x_std = sqrt(sum / (high * width)); //计算原始图像mat2的标准差(图像大小等于截取图像的大小) sum = 0; for (int i = 0; i < high; i++) { for (int j = 0; j < width; j++) sum += (mat2[(row + i) * width_ori + col + j] - y_mean) * (mat2[(row + i) * width_ori + col + j] - y_mean); } double y_std = sqrt(sum / (high * width)); //计算每张图像的互相关系数 double corr = 0; if (x_std != 0 && y_std != 0) corr = cov / (x_std * y_std); result[bid * blockDim.x + tid] = corr; } int main(int argc, char* argv[]) { double* mat1, * mat2, * result;//在CPU定义指针 double* g_mat1, * g_mat2, * g_mat_result;//在GPU上定义指针 int len_mat1 = 0, len_mat2; auto start = high_resolution_clock::now(); //读取截取图像 Mat image1 = imread("C:/Users/MingYi-LZQ/Desktop/3.tif", IMREAD_UNCHANGED); if (image1.empty()) { cout << "Could not open or find the image" << endl; return -1; } //读取原始图像 Mat image2 = imread("C:/Users/MingYi-LZQ/Desktop/4.tif", IMREAD_UNCHANGED); if (image2.empty()) { cout << "Could not open or find the image" << endl; return -1; } //计算截取图像和原始图像的长度 len_mat1 = image1.rows * image1.cols; len_mat2 = image2.rows * image2.cols; //在CPU上开辟内存 mat1 = (double*)malloc(len_mat1 * sizeof(double)); mat2 = (double*)malloc(len_mat2 * sizeof(double)); //把截取图像灰度值放到内存中 for (int i = 0; i < image1.rows; i++) { for (int j = 0; j < image1.cols; j++) { mat1[i * image1.cols + j] = (double)image1.at<uchar>(i, j); } } //把原始图像灰度值放到内存中 for (int i = 0; i < image2.rows; i++) { for (int j = 0; j < image2.cols; j++) { mat2[i * image2.cols + j] = (double)image2.at<uchar>(i, j); } } int num_block = (image2.rows - image1.rows + 1); int num_thread = (image2.cols - image1.cols + 1); const int len_result = num_block * num_thread; //在CPU上开辟存储结果的内存 result = (double*)malloc(len_result * sizeof(double)); //在GPU上开辟截取图像、原始图像和运行结果内存 cudaMalloc((void**)&g_mat1, len_mat1 * sizeof(double)); cudaMalloc((void**)&g_mat2, len_mat2 * sizeof(double)); cudaMalloc((void**)&g_mat_result, len_result * sizeof(double)); //把截取图像和原始图像拷贝到GPU显存中 cudaMemcpy(g_mat1, mat1, sizeof(double) * len_mat1, cudaMemcpyHostToDevice); cudaMemcpy(g_mat2, mat2, sizeof(double) * len_mat2, cudaMemcpyHostToDevice); correlation2 << <num_block, num_thread >> > (g_mat1, g_mat2, g_mat_result, image1.rows, image1.cols, image2.cols); //把GPU上的运行结果拷贝到CPU上 cudaMemcpy(result, g_mat_result, sizeof(double) * len_result, cudaMemcpyDeviceToHost); vector<double> arr_res; double max_corr = 0; int x_peak = 0; int y_peak = 0; for (long i = 0; i < len_result; i++) { arr_res.push_back(result[i]); if (result[i] > max_corr) { max_corr = result[i];//找到最大的相关系数 y_peak = i / num_thread + image1.rows;//最大相关系数对应的y坐标 x_peak = i % num_thread + image1.cols;//最大相关系数对应的x坐标 } } auto duration = duration_cast<microseconds>(high_resolution_clock::now() - start); long long time = duration.count();//统计运行时间 return 0; }

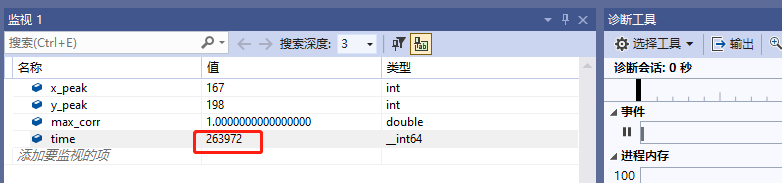

运行结果

耗时0.263秒,现实比理论还是有点区别的。因为本例程没有使用eigen库,eigen库进行了指令级别优化,如果使用eigen库的话估计就可以和matlab的0.0625秒媲美了。计算同样两张图像的相关系数由最开始的7分28秒优化CPU多线程的13秒到最终的GPU多线程的0.263秒,可见显卡并行计算突出的优势。