Introduction to the pandas Library

1. Introduction to pandas

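Among Python's scientific computing libraries, pandas is arguably the one best suited to data science work. (It is a third-party package, not part of the Python standard library.) Together with scikit-learn, it provides almost all the tools a data scientist needs. pandas is an open-source, easy-to-use library of data structures and data analysis tools for the Python programming language. It can import, clean, process, summarize, and export data. pandas is built on NumPy, and it is fair to say that it was made for data analysis.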
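To make the import, process, summarize, and export steps concrete, here is a minimal sketch. The inline CSV text, the column names, and the 80-point threshold are all made up for illustration; a real project would read from an actual file.

```python
import io

import pandas as pd

# Hypothetical inline data standing in for a real CSV file.
raw = io.StringIO(
    "name,city,score\n"
    "Ann,Beijing,90\n"
    "Bob,Shanghai,75\n"
    "Cid,Beijing,82\n"
)

df = pd.read_csv(raw)                              # import
df["passed"] = df["score"] >= 80                   # process: derive a new column
mean_by_city = df.groupby("city")["score"].mean()  # statistics per group
csv_text = df.to_csv(index=False)                  # export back to CSV text

print(mean_by_city)
```

Passing `index=False` to `to_csv` keeps the row index out of the exported text, which is usually what you want when the index carries no meaning.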

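In the experience of most practitioners who build machine learning applications, if you ask which stage of the work takes the most time, most will answer, somewhat helplessly, "data preprocessing." In practice, most industry teams do not invest much effort in researching brand-new machine learning models; instead they apply existing, well-established models to a specific project and its data. As a result, most of the time goes into processing data, or even just cleaning it, especially when the data is still fairly raw. pandas arose to meet this need: it is a Python toolkit for data processing and analysis that implements a large number of functions for reading, writing, cleaning, filling, and analyzing data. This saves developers a great deal of preprocessing code and leaves them more energy for the machine learning task itself.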
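As a rough illustration of the cleaning and filling mentioned above, the sketch below removes a duplicated row and fills missing values. The data and the fill strategy (column mean for `age`, the placeholder string "unknown" for `city`) are assumptions chosen for the example, not a recommended recipe.

```python
import numpy as np
import pandas as pd

# Hypothetical raw data: one missing age, missing cities, and a repeated last row.
raw = pd.DataFrame(
    {
        "age": [25, np.nan, 31, 31],
        "city": ["Beijing", "Shanghai", None, None],
    }
)

# Drop exact duplicate rows (missing values are treated as equal here).
cleaned = raw.drop_duplicates()

# Fill each column with its own replacement value.
cleaned = cleaned.fillna({"age": cleaned["age"].mean(), "city": "unknown"})

print(cleaned)
```

Whether to fill, drop, or flag missing values depends on the data and the downstream model, so treat the choices here as placeholders.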

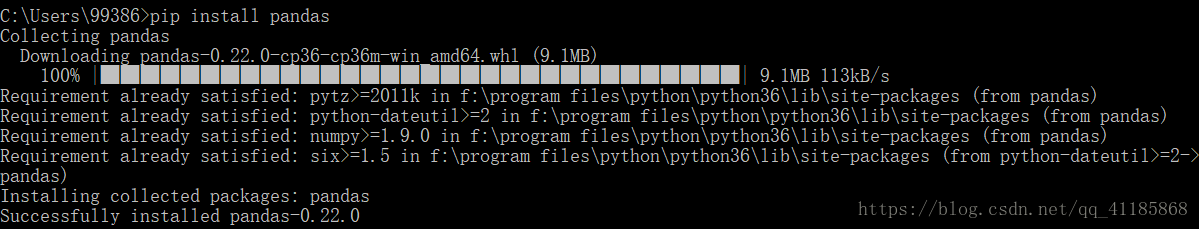

2. Installing pandas

pip install pandas
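After installing, one quick way to confirm that the package can be imported is to print its version from Python. The variable name `version` is only for illustration.

```python
import pandas as pd

# If the import succeeds, pandas is installed in the current environment.
version = pd.__version__
print(version)
```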

3. Using pandas

1. Function usage

Pickling

read_pickle(path[, compression]) Load pickled pandas object (or any object) from file.
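A minimal round-trip sketch for `read_pickle`, paired with `DataFrame.to_pickle` to create the file first. The temporary directory and the file name `frame.pkl` are placeholders for illustration.

```python
import os
import tempfile

import pandas as pd

df = pd.DataFrame({"a": [1, 2, 3], "b": ["x", "y", "z"]})

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "frame.pkl")  # hypothetical file name
    df.to_pickle(path)                     # write the DataFrame to disk
    restored = pd.read_pickle(path)        # load it back

print(restored.equals(df))
```

Pickle files can execute arbitrary code when loaded, so only unpickle data that comes from a source you trust.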

Flat File

read_table(filepath_or_buffer[, sep, …]) (DEPRECATED) Read general delimited file into DataFrame.

read_csv(filepath_or_buffer[, sep, …]) Read a comma-separated values (csv) file into DataFrame.

read_fwf(filepath_or_buffer[, colspecs, …]) Read a table of fixed-width formatted lines into DataFrame.

read_msgpack(path_or_buf[, encoding, iterator]) Load msgpack pandas object from the specified file path
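The flat-file readers can be sketched as follows: `read_csv` with a custom `sep` for delimited text, and `read_fwf` with explicit `colspecs` for fixed-width text. The inline strings stand in for real files, and the column positions are assumptions that match this made-up layout only.

```python
import io

import pandas as pd

# Tab-delimited text: read_csv handles any single-character delimiter via sep.
tab_text = "id\tname\n1\tAnn\n2\tBob\n"
tab_df = pd.read_csv(io.StringIO(tab_text), sep="\t")

# Fixed-width text: columns occupy character positions [0, 4) and [4, 9).
fwf_text = (
    "id  name \n"
    "1   Ann  \n"
    "2   Bob  \n"
)
fwf_df = pd.read_fwf(io.StringIO(fwf_text), colspecs=[(0, 4), (4, 9)])

print(tab_df)
print(fwf_df)
```

Both calls should produce the same two-column table; `read_fwf` strips the padding spaces from each field.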

Clipboard

read_clipboard([sep]) Read text from clipboard and pass to read_csv.

Excel

read_excel(io[, sheet_name, header, names, …]) Read an Excel file into a pandas DataFrame.

ExcelFile.parse([sheet_name, header, names, …]) Parse specified sheet(s) into a DataFrame

ExcelWriter(path[, engine, date_format, …]) Class for writing DataFrame objects into excel sheets, default is to use xlwt for xls, openpyxl for xlsx.

JSON

read_json([path_or_buf, orient, typ, dtype, …]) Convert a JSON string to pandas object.

json_normalize(data[, record_path, meta, …]) Normalize semi-structured JSON data into a flat table.

build_table_schema(data[, index, …]) Create a Table schema from data.
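A short sketch of the two most commonly used JSON helpers. It assumes pandas 1.0 or later, where `json_normalize` is available at the top level as `pd.json_normalize`; the records themselves are invented for the example.

```python
import io

import pandas as pd

# A flat JSON array of records maps directly onto rows and columns.
json_text = '[{"id": 1, "name": "Ann"}, {"id": 2, "name": "Bob"}]'
flat = pd.read_json(io.StringIO(json_text))

# Nested records: json_normalize flattens inner dicts into dotted column names.
nested = [
    {"id": 1, "user": {"name": "Ann", "city": "Beijing"}},
    {"id": 2, "user": {"name": "Bob", "city": "Shanghai"}},
]
normalized = pd.json_normalize(nested)

print(flat)
print(normalized.columns.tolist())
```

Wrapping the JSON string in `io.StringIO` avoids the warning that newer pandas versions raise when a literal JSON string is passed to `read_json`.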

HTML

read_html(io[, match, flavor, header, …]) Read HTML tables into a list of DataFrame objects.

HDFStore: PyTables (HDF5)

read_hdf(path_or_buf[, key, mode]) Read from the store, close it if we opened it.

HDFStore.put(key, value[, format, append]) Store object in HDFStore

HDFStore.append(key, value[, format, …]) Append to Table in file.

HDFStore.get(key) Retrieve pandas object stored in file

HDFStore.select(key[, where, start, stop, …]) Retrieve pandas object stored in file, optionally based on where criteria

HDFStore.info() Print detailed information on the store.

HDFStore.keys() Return a (potentially unordered) list of the keys corresponding to the objects stored in the HDFStore.

HDFStore.groups() Return a list of all the top-level nodes (that are not themselves a pandas storage object).

HDFStore.walk([where]) Walk the pytables group hierarchy for pandas objects

Feather

read_feather(path[, columns, use_threads]) Load a feather-format object from the file path

Parquet

read_parquet(path[, engine, columns]) Load a parquet object from the file path, returning a DataFrame.

SAS

read_sas(filepath_or_buffer[, format, …]) Read SAS files stored as either XPORT or SAS7BDAT format files.

SQL

read_sql_table(table_name, con[, schema, …]) Read SQL database table into a DataFrame.

read_sql_query(sql, con[, index_col, …]) Read SQL query into a DataFrame.

read_sql(sql, con[, index_col, …]) Read SQL query or database table into a DataFrame.
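To illustrate `read_sql_query`, the sketch below uses an in-memory SQLite database from the standard library. The table name `scores`, its columns, and the threshold are assumptions for the example. Note that `read_sql_table` additionally requires SQLAlchemy, so it is not shown here.

```python
import sqlite3

import pandas as pd

# Build a throwaway in-memory database with a hypothetical table.
con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE scores (name TEXT, score INTEGER)")
con.executemany(
    "INSERT INTO scores VALUES (?, ?)",
    [("Ann", 90), ("Bob", 75), ("Cid", 82)],
)
con.commit()

# Run a parameterized query and load the result set into a DataFrame.
high = pd.read_sql_query(
    "SELECT name, score FROM scores WHERE score >= ? ORDER BY score DESC",
    con,
    params=(80,),
)
con.close()

print(high)
```

Passing values through `params` instead of formatting them into the SQL string lets the database driver handle quoting.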

Google BigQuery

read_gbq(query[, project_id, index_col, …]) Load data from Google BigQuery.

STATA

read_stata(filepath_or_buffer[, …]) Read Stata file into DataFrame.

StataReader.data(**kwargs) (DEPRECATED) Reads observations from Stata file, converting them into a dataframe

StataReader.data_label() Returns data label of Stata file

StataReader.value_labels() Returns a dict that maps each variable name to a dict, which in turn maps each value to its corresponding label

StataReader.variable_labels() Returns variable labels as a dict, associating each variable name with corresponding label

StataWriter.write_file() Export DataFrame object to Stata dta format
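A hedged round-trip sketch for the Stata functions: it writes a small frame with `DataFrame.to_stata` (which uses a Stata writer internally), reads it back with `read_stata`, and then opens a `StataReader` through `read_stata(..., iterator=True)` to fetch the variable labels. The file name, column names, and label texts are invented for illustration.

```python
import os
import tempfile

import pandas as pd

df = pd.DataFrame({"height": [1.5, 1.7], "weight": [50.0, 65.0]})

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "people.dta")  # hypothetical file name

    # Write the frame, attaching a descriptive label to each variable.
    df.to_stata(
        path,
        write_index=False,
        variable_labels={"height": "Height in m", "weight": "Weight in kg"},
    )

    # Read the whole file back into a DataFrame.
    restored = pd.read_stata(path)

    # iterator=True returns a StataReader, which exposes the metadata methods.
    with pd.read_stata(path, iterator=True) as reader:
        labels = reader.variable_labels()

print(restored)
print(labels)
```

Using the reader as a context manager makes sure the file handle is closed before the temporary directory is removed.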

————————————————

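Copyright notice: This article is an original work by CSDN blogger 「一个处女座的程序猿」 and is released under the CC 4.0 BY-SA license. When reposting, please include a link to the original source and this notice.

Original link: https://blog.csdn.net/qq_41185868/article/details/79781561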