北京市政百姓信件可视化展示

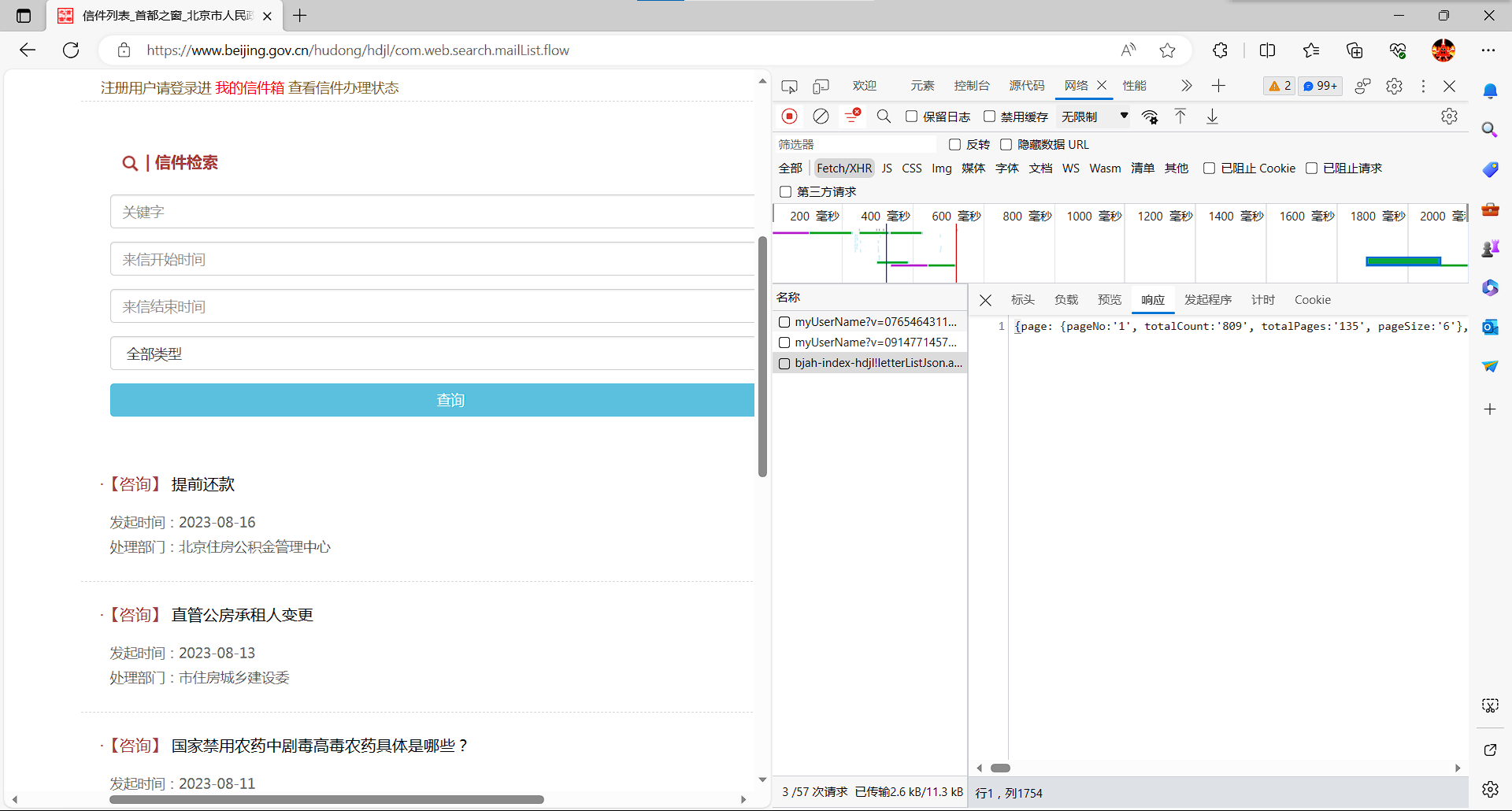

这是原网站:https://www.beijing.gov.cn/hudong/hdjl/com.web.search.mailList.flow

点击查询后我们可以看到其中的相应信息,{page: {pageNo:'1', totalCount:'809', totalPages:'135', pageSize:'6'}, result: .....}

这里可以看到一共有809封信件,并且根据不同的分类,信件的url地址的不一样的

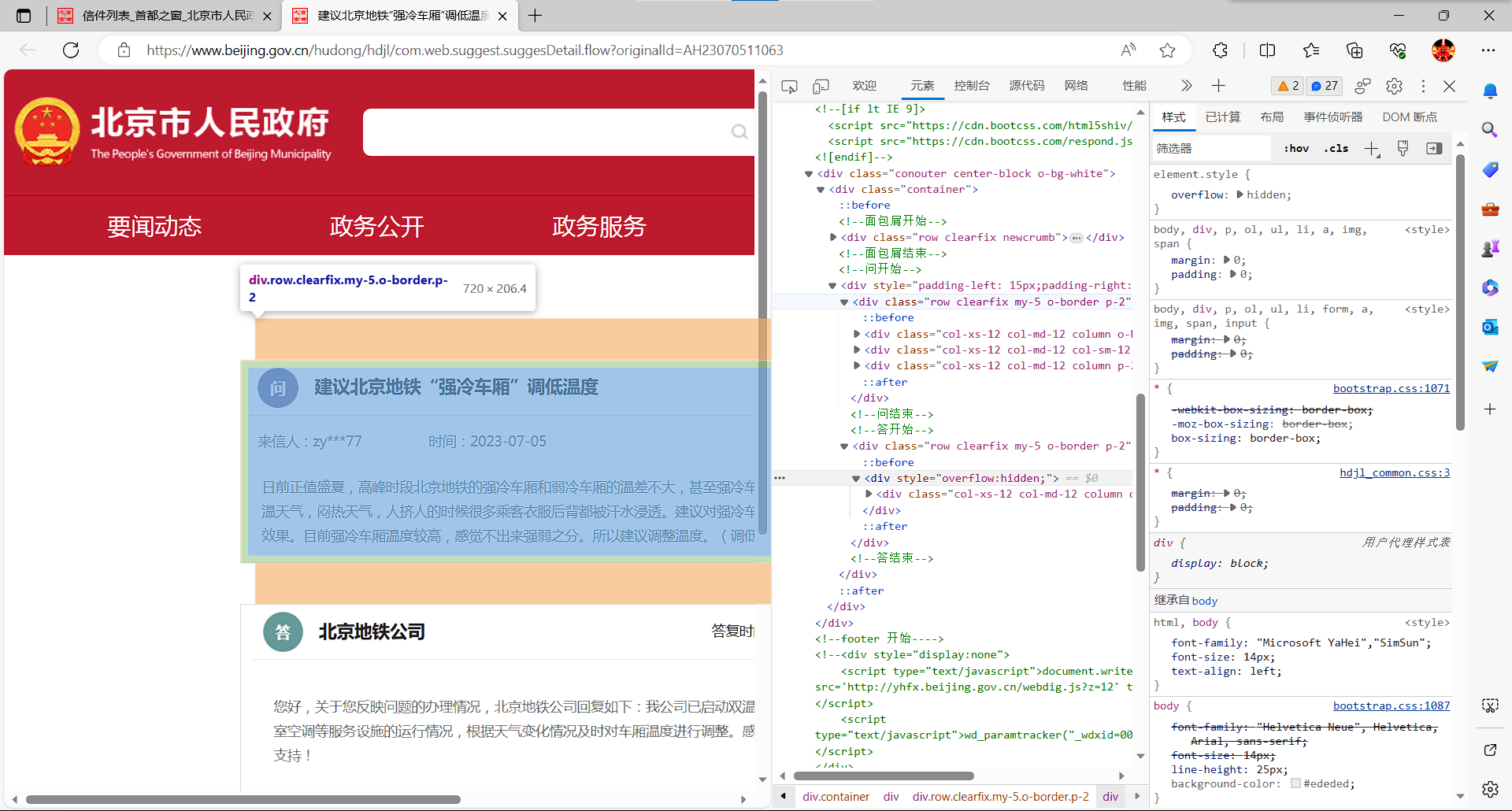

我们可以根据id遍历得到所有信件的详细内容

https://www.beijing.gov.cn/hudong/hdjl/com.web.consult.consultDetail.flow?originalId=AH23081610397

https://www.beijing.gov.cn/hudong/hdjl/com.web.suggest.suggesDetail.flow?originalId=AH23070511063

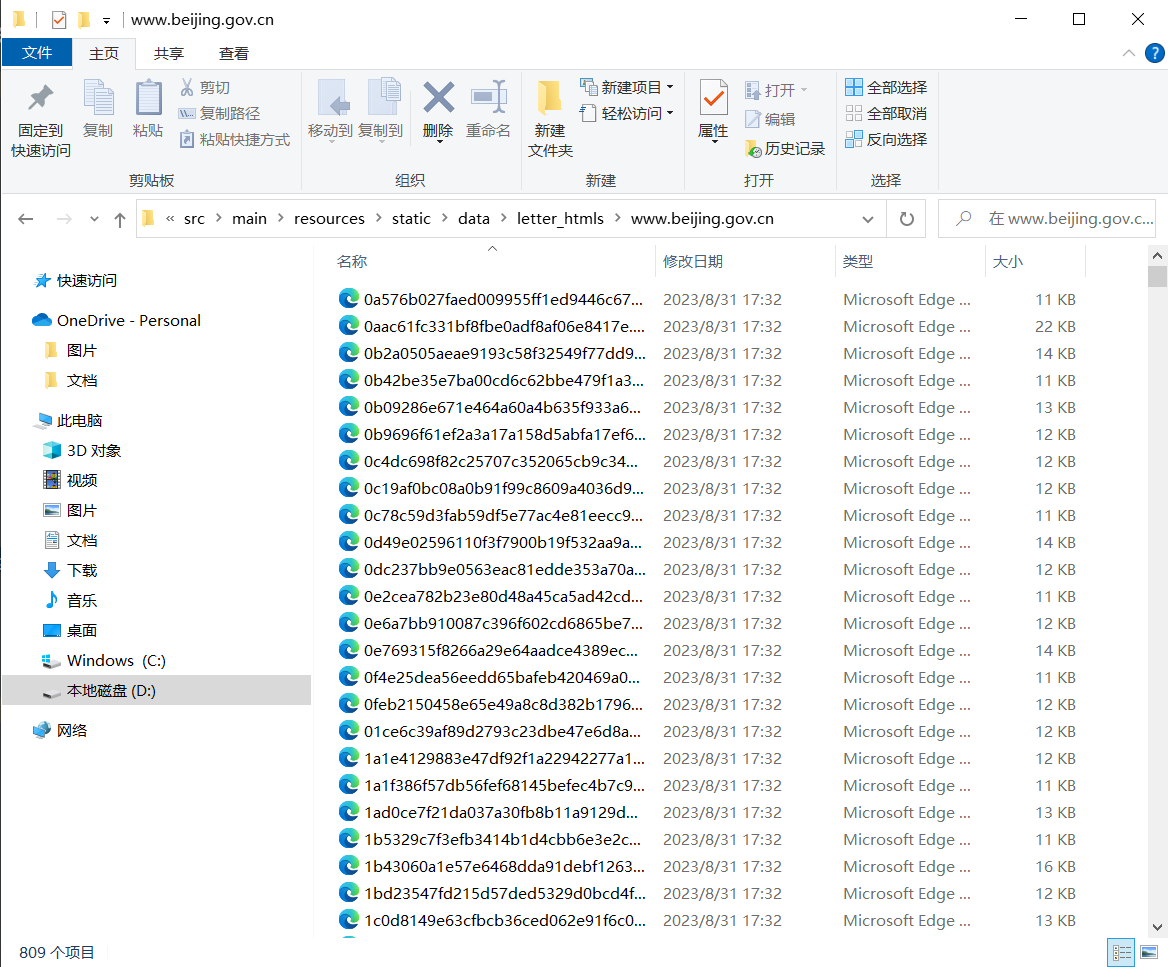

在爬取完网页数据之后保存每个页面

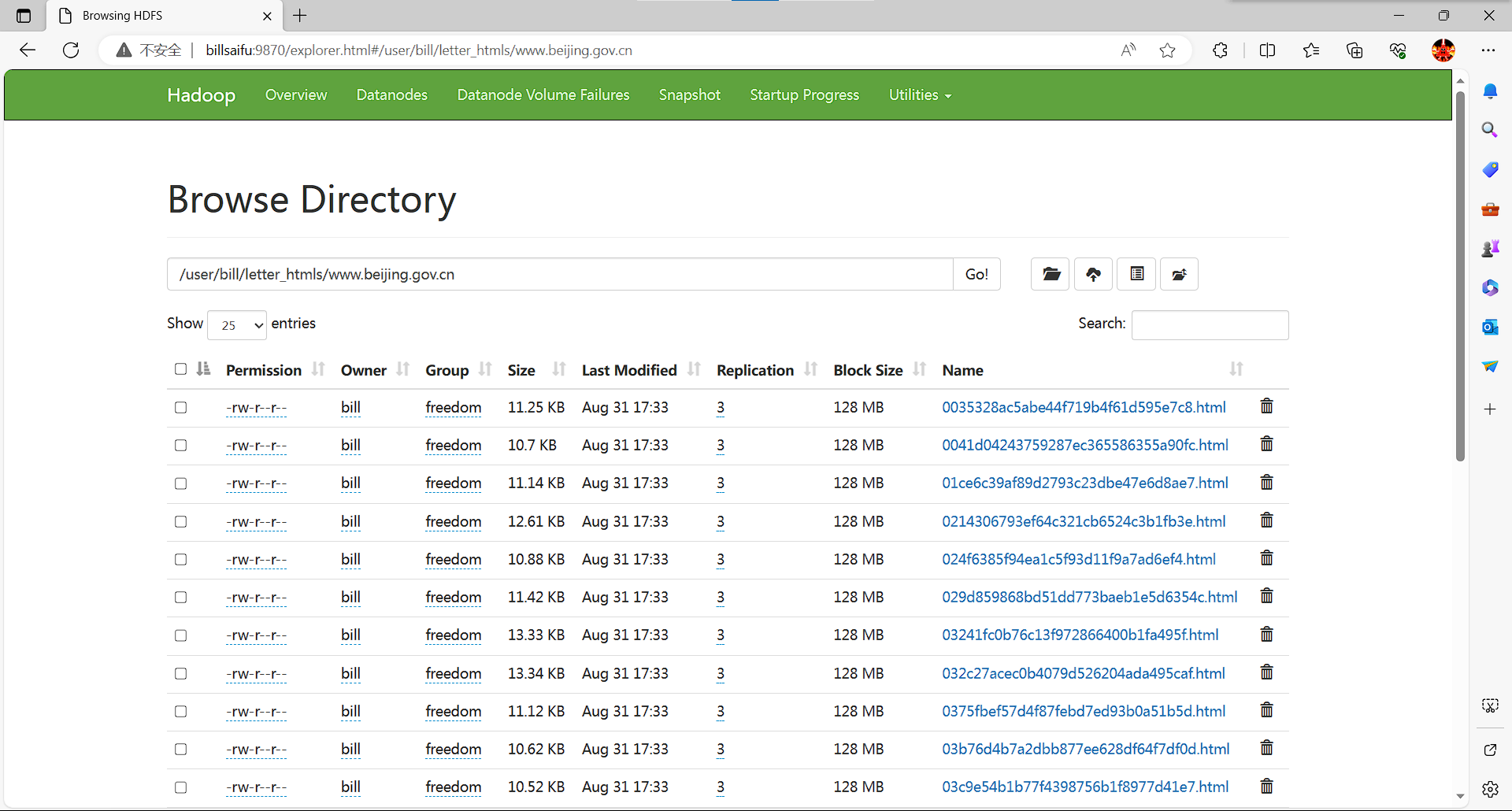

打开虚拟机和hadoop集群将网页数据上传到hdfs当中

编写mapreduce程序对信件进行数据清洗

map

package org.example;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import org.jsoup.Jsoup;

import org.jsoup.nodes.Document;

import org.jsoup.select.Elements;

import java.io.IOException;

import java.util.ArrayList;

import java.util.List;

public class LetterMapper extends Mapper<Text,Text,Text,Text> {

private Text outK=new Text();

private List<Text> outV=new ArrayList<>();

private String page;

private Document doc;

@Override

protected void map(Text key, Text value, Mapper<Text, Text, Text, Text>.Context context) throws IOException, InterruptedException {

page=value.toString();

doc= Jsoup.parse(page);

String url=doc.getElementsByTag("url").first().text();

String letterType=null;

String letterTitle=null;

String replyOrganization=null;

if(url.contains("consult")) letterType="咨询";

else letterType="建议";

String id=url.substring(url.lastIndexOf("=") + 1);

Elements strong = doc.getElementsByTag("strong");

letterTitle=strong.get(0).text();

replyOrganization=strong.get(1).text();

String letterRecipient=doc.getElementsByClass("col-xs-10 col-lg-3 col-sm-3 col-md-4 text-muted").first().text().substring(4);

String letterDate=doc.getElementsByClass("col-xs-5 col-lg-3 col-sm-3 col-md-3 text-muted").first().text().substring(3);

String letterContent=doc.getElementsByClass("col-xs-12 col-md-12 column p-2 text-muted mx-2").first().text();

String replyDate=doc.getElementsByClass("col-xs-12 col-sm-3 col-md-3 my-2").first().text().substring(5);

String replyContent=doc.getElementsByClass("col-xs-12 col-md-12 column p-4 text-muted my-3").first().text();

outK.set(id);

outV.add(new Text(replyContent));

outV.add(new Text(replyDate));

outV.add(new Text(replyOrganization));

outV.add(new Text(letterContent));

outV.add(new Text(letterDate));

outV.add(new Text(letterRecipient));

outV.add(new Text(letterTitle));

outV.add(new Text(letterType));

outV.add(new Text(id));

for(Text i:outV){

context.write(outK,i);

}

}

}reduce

package org.example;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

public class LetterReducer extends Reducer<Text,Text, NullWritable,Text> {

private Text outV=new Text();

@Override

protected void reduce(Text key, Iterable<Text> values, Reducer<Text, Text, NullWritable, Text>.Context context) throws IOException, InterruptedException {

StringBuilder s=new StringBuilder();

for(Text i:values){

s.append(i.toString()+"\t");

}

outV.set(s.toString());

context.write(NullWritable.get(),outV);

}

}自定义文件切片,按一个文件的形式进行切片

package org.example;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.InputSplit;

import org.apache.hadoop.mapreduce.JobContext;

import org.apache.hadoop.mapreduce.RecordReader;

import org.apache.hadoop.mapreduce.TaskAttemptContext;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.FileSplit;

import java.io.IOException;

import java.util.List;

public class LetterFileInputFormat extends FileInputFormat<Text, Text> {

@Override

public List<InputSplit> getSplits(JobContext job) throws IOException {

// 返回一个自定义的InputSplit列表,这里将整个文件作为一个切片

List<InputSplit> splits = super.getSplits(job);

return splits;

}

@Override

public RecordReader<Text, Text> createRecordReader(InputSplit split, TaskAttemptContext context) throws IOException, InterruptedException {

// 返回一个自定义的RecordReader,这里将整个文件的内容作为一个Value

LetterFileRecordReader reader = new LetterFileRecordReader();

reader.initialize(split, context);

return reader;

}

// 自定义RecordReader,将整个文件的内容作为一个Value

public static class LetterFileRecordReader extends RecordReader<Text, Text> {

private Path file;

private Configuration conf;

private boolean processed = false;

private Text key;

private Text value;

@Override

public void initialize(InputSplit split, TaskAttemptContext context) throws IOException, InterruptedException {

file = ((FileSplit) split).getPath();

conf = context.getConfiguration();

}

@Override

public boolean nextKeyValue() throws IOException, InterruptedException {

if (!processed) {

byte[] contents = new byte[(int) file.getFileSystem(conf).getFileStatus(file).getLen()];

file.getFileSystem(conf).open(file).readFully(0, contents);

String contentString = new String(contents);

key = new Text(file.getName());

value = new Text(contentString);

processed = true;

return true;

}

return false;

}

@Override

public Text getCurrentKey() throws IOException, InterruptedException {

return key;

}

@Override

public Text getCurrentValue() throws IOException, InterruptedException {

return value;

}

@Override

public float getProgress() throws IOException, InterruptedException {

return processed ? 1.0f : 0.0f;

}

@Override

public void close() throws IOException {

//不进行操作

}

}

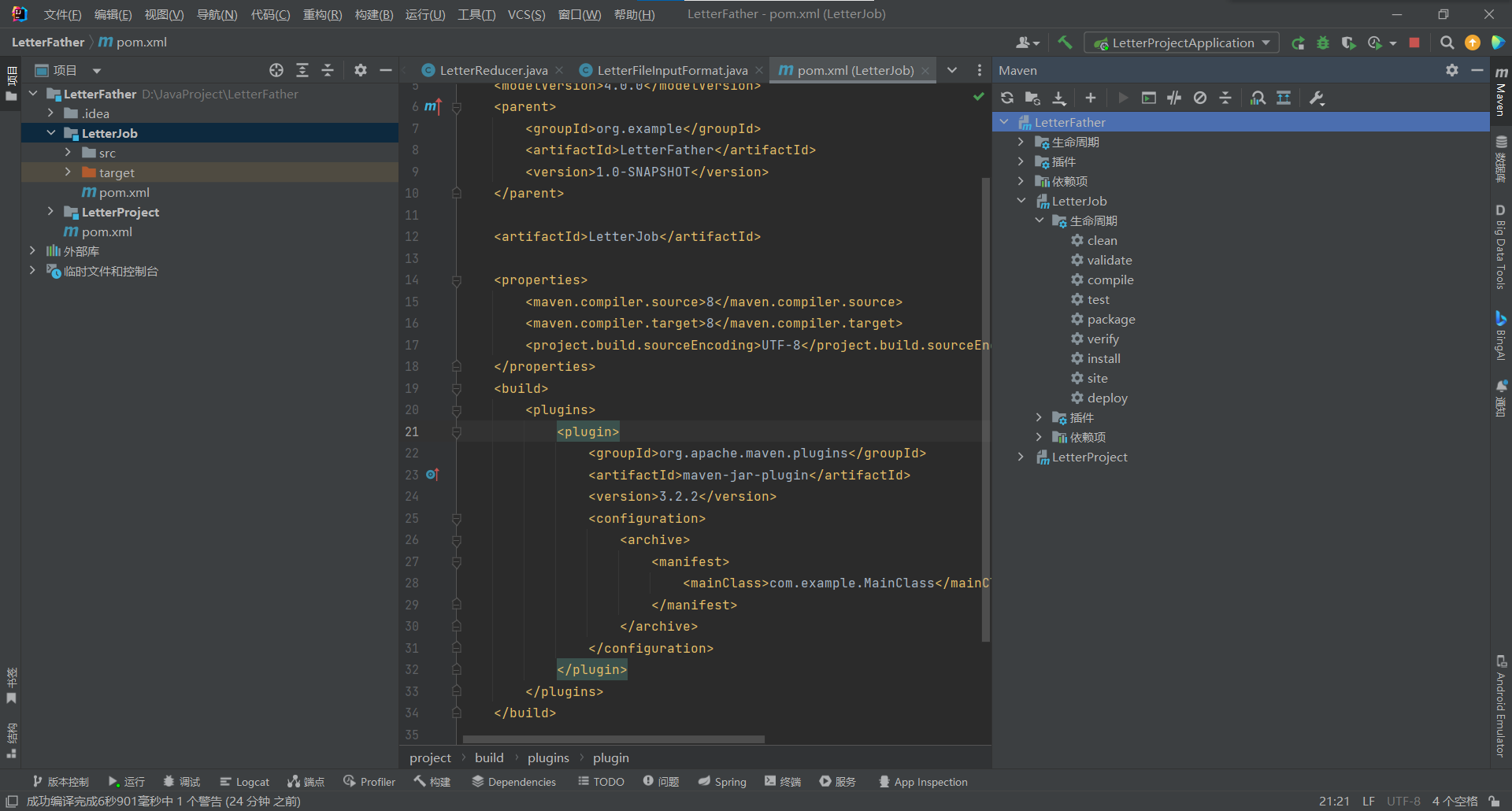

}导入打包工具

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.8.1</version>

<configuration>

<source>1.8</source>

<target>1.8</target>

</configuration>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-assembly-plugin</artifactId>

<version>3.3.0</version>

<configuration>

<descriptorRefs>

<descriptorRef>

jar-with-dependencies

</descriptorRef>

</descriptorRefs>

</configuration>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>single</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>然后进行打包,点击clean->package

然后将包上传到虚拟机当中,我改名为LP.jar

启动命令hadoop jar LP.jar org.example.Main /user/bill/letter_htmls/www.beijing.gov.cn/ /user/bill/letter_res

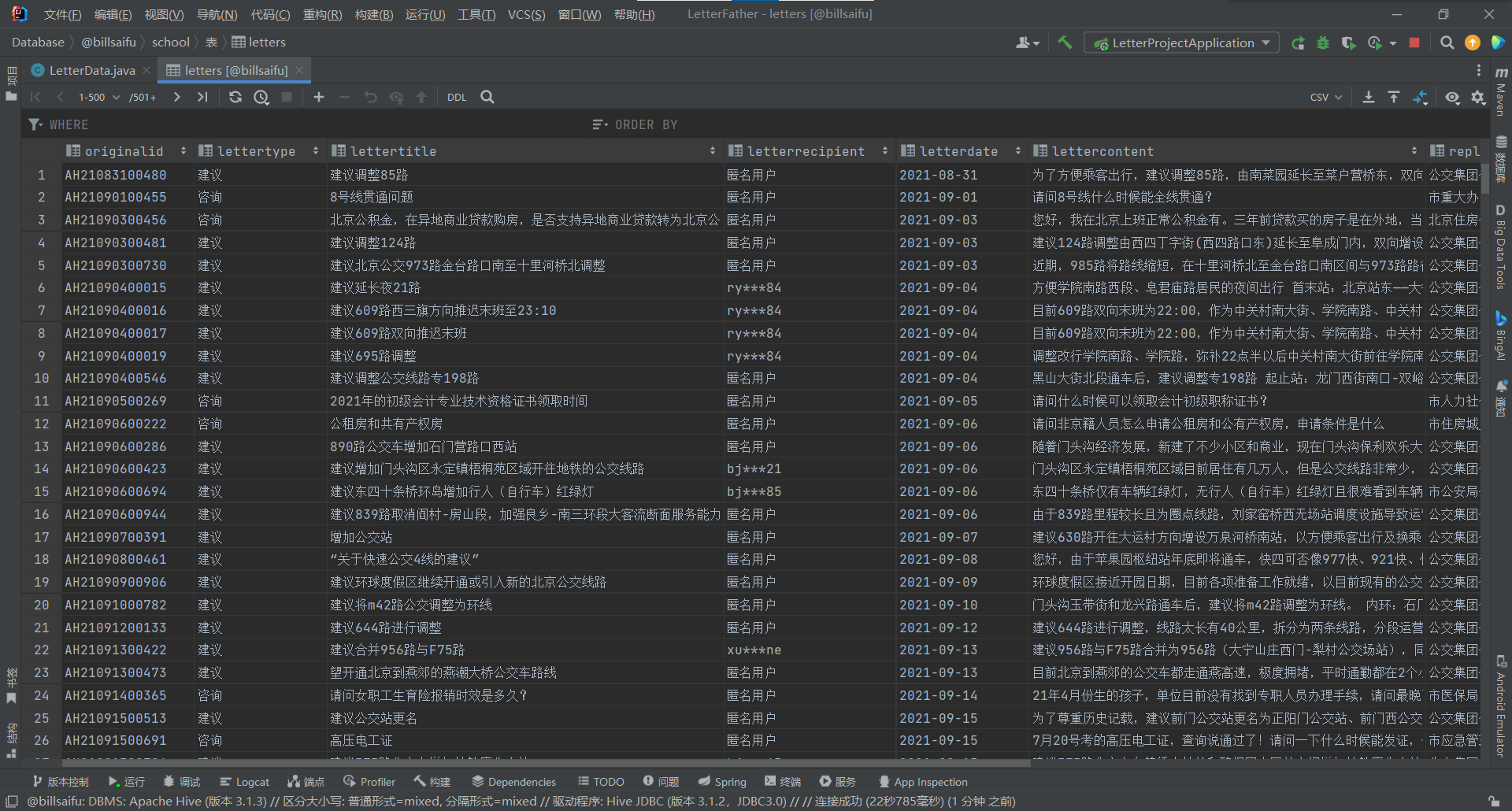

得到清洗后的结果将结果导入到hive表中,并查询得到想要的信息

利用sqoop将得到的数据导出到mysql中

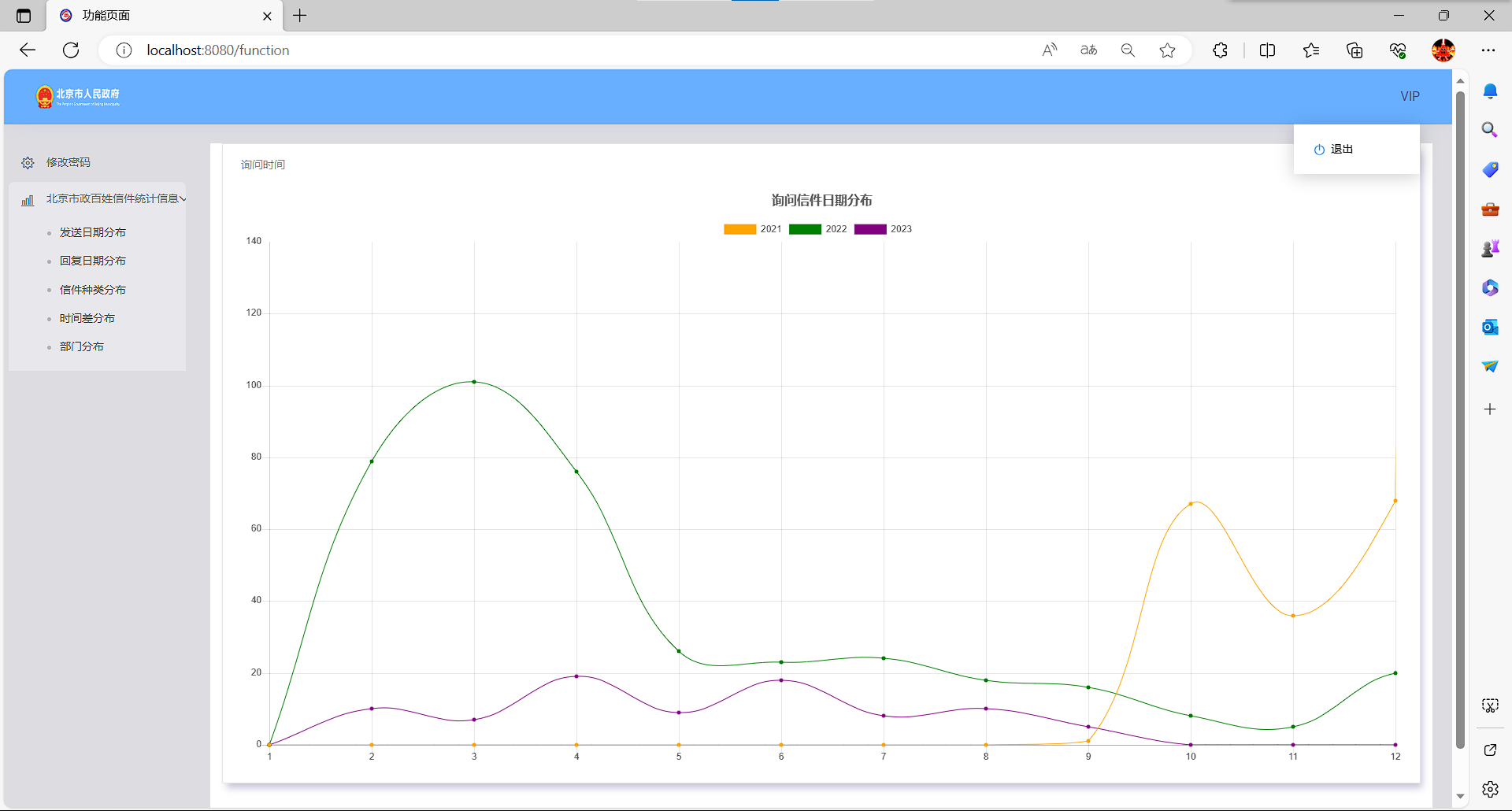

编写页面进行数据展示

几个注意事项

1.jdbc:mysql://billsaifu:3306/school?useSSL=false&characterEncoding=UTF-8&allowMultiQueries=true

allowMultiQueries=true这个设置,在mybatis中就可以同时执行多条sql语句

2.导入hive-jdbc包的时候需要额外添加一个配置

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-jdbc</artifactId>

<version>3.1.3</version>

<exclusions>

<exclusion>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-runner</artifactId>

</exclusion>

</exclusions>

</dependency>下面给出源码

爬取

package com.example.letterproject.utils;

import us.codecraft.webmagic.Page;

import us.codecraft.webmagic.Site;

import us.codecraft.webmagic.processor.PageProcessor;

public class LetterCrawler implements PageProcessor {

private Site site=new Site();

private static int num=0;

@Override

public void process(Page page) {

System.out.println(++num);

page.putField("html",page.getRawText());

}

@Override

public Site getSite() {

site.setCharset("UTF-8");

site.setUserAgent("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36 Edg/115.0.1901.188");

return site;

}

}package com.example.letterproject.utils;

import com.fasterxml.jackson.databind.JsonNode;

import com.fasterxml.jackson.databind.ObjectMapper;

import com.fasterxml.jackson.databind.node.ArrayNode;

import lombok.SneakyThrows;

import org.jsoup.Jsoup;

import org.jsoup.nodes.Document;

import us.codecraft.webmagic.Spider;

import java.net.URL;

import java.util.ArrayList;

import java.util.List;

public class LetterUtils {

private static String get_json(String s){

s=s.replace("page:","\"page\":");

s=s.replace("pageNo:","\"pageNo\":");

s=s.replace("totalCount:","\"totalCount\":");

s=s.replace("totalPages:","\"totalPages\":");

s=s.replace("pageSize:","\"pageSize\":");

s=s.replace("result:","\"result\":");

s=s.replace("originalId:","\"originalId\":");

s=s.replace("letterType:","\"letterType\":");

s=s.replace("letterTypeName:","\"letterTypeName\":");

s=s.replace("letterTitle:","\"letterTitle\":");

s=s.replace("showLetterTitle:","\"showLetterTitle\":");

s=s.replace("writeDate:","\"writeDate\":");

s=s.replace("orgNames:","\"orgNames\":");

s=s.replace("showOrgNames:","\"showOrgNames\":");

s=s.replace("'","\"");

return s;

}

@SneakyThrows

public void loadLetters(){

Document start_page = Jsoup.parse(new URL("https://www.beijing.gov.cn/hudong/hdjl/sindex/bjah-index-hdjl!letterListJson.action?keyword=&startDate=&endDate=&letterType=0&page.pageNo=1&page.pageSize=0&orgtitleLength=26"), 30000);

String json_start=start_page.text();

json_start=get_json(json_start);

ObjectMapper objectMapper=new ObjectMapper();

JsonNode jsonNode=objectMapper.readTree( json_start);

String num= String.valueOf(jsonNode.get("page").get("totalCount")).replace("\"","");

Document end_page=Jsoup.parse(new URL("https://www.beijing.gov.cn/hudong/hdjl/sindex/bjah-index-hdjl!letterListJson.action?keyword=&startDate=&endDate=&letterType=0&page.pageNo=1&page.pageSize="+num+"&orgtitleLength=26"),3000);

String json_end=end_page.text();

json_end=get_json(json_end);

jsonNode=objectMapper.readTree(json_end);

ArrayNode arrayNode= (ArrayNode) jsonNode.get("result");

List<String> url_list=new ArrayList<>();

for(JsonNode i:arrayNode){

String id=i.get("originalId").toString().replace("\"","");

String kind=i.get("letterTypeName").toString().replace("\"","");

if(kind.equals("咨询")) {

url_list.add("https://www.beijing.gov.cn/hudong/hdjl/com.web.consult.consultDetail.flow?originalId="+id);

}

else {

url_list.add("https://www.beijing.gov.cn/hudong/hdjl/com.web.suggest.suggesDetail.flow?originalId="+id);

}

}

String[] urls=url_list.toArray(new String[0]);

Spider.create(new LetterCrawler())

.thread(100)

.addUrl(urls)

.addPipeline(new LetterFilePipeline("LetterProject/src/main/resources/static/data/letter_htmls/"))

.run();

}

}package com.example.letterproject.utils;

import lombok.SneakyThrows;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.LocatedFileStatus;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.fs.RemoteIterator;

import java.net.URI;

public class LetterHDFS {

private FileSystem fs = null;

@SneakyThrows

public void init(){

Configuration conf = new Configuration();

conf.set("dfs.client.use.datanode.hostname", "true");

conf.set("fs.defaultFS", "hdfs://billsaifu:9000");

URI uri=new URI("hdfs://billsaifu:9000");

String username="bill";

fs=FileSystem.get(uri,conf,username);

}

@SneakyThrows

public void Mkdir(String filepath){

fs.mkdirs(new Path(filepath));

}

@SneakyThrows

public void CpFile(String localPath,String filePath){

fs.copyFromLocalFile(false,true,new Path(localPath),new Path(filePath));

}

@SneakyThrows

public void GetFile(String filePath,String localPath){

fs.copyToLocalFile(false,new Path(filePath),new Path(localPath),true);

}

@SneakyThrows

public void DelFile(String filePath){

fs.delete(new Path(filePath));

}

@SneakyThrows

public void MvFile(String OldFilePath,String NewFilePath){

fs.rename(new Path(OldFilePath),new Path(NewFilePath));

}

@SneakyThrows

public void FileDetail(String filePath){

RemoteIterator<LocatedFileStatus> listFiles=fs.listFiles(new Path(filePath),true);

while (listFiles.hasNext()){

LocatedFileStatus one=listFiles.next();

System.out.println("文件路径: "+one.getPath());

System.out.println("文件名: "+one.getPath().getName());

System.out.println("文件所有者: "+one.getOwner());

System.out.println("文件所有组: "+one.getGroup());

System.out.println("文件大小: "+one.getLen());

}

}

@SneakyThrows

public boolean isFile(String filePath){

return fs.exists(new Path(filePath));

}

@SneakyThrows

public void closeFS(){

if(fs!=null) fs.close();

}

}hive操作

package com.example.letterproject.utils;

import com.example.letterproject.pojo.Letter;

import lombok.SneakyThrows;

import java.sql.*;

import java.util.ArrayList;

import java.util.List;

public class LetterHive {

private String hiveUrl="jdbc:hive2://billsaifu:10000/school";

private String username="bill";

private Connection connection;

private PreparedStatement preparedStatement;

@SneakyThrows

public void getConnection(){

Class.forName("org.apache.hive.jdbc.HiveDriver");

connection= DriverManager.getConnection(hiveUrl,username,null);

}

@SneakyThrows

public void createTable(){

getConnection();

String sql="create table if not exists letters ( " +

"originalId STRING," +

"letterType STRING," +

"letterTitle STRING," +

"letterRecipient STRING," +

"letterDate STRING," +

"letterContent STRING," +

"replyOrganization STRING," +

"replyDate STRING," +

"replyContent STRING)" +

"ROW FORMAT DELIMITED " +

"FIELDS TERMINATED BY '\t'" +

"STORED AS TEXTFILE";

preparedStatement=connection.prepareStatement(sql);

preparedStatement.executeUpdate();

}

@SneakyThrows

public Boolean updateTable(String sql,String... p){

getConnection();

preparedStatement=connection.prepareStatement(sql,ResultSet.TYPE_SCROLL_INSENSITIVE,ResultSet.CONCUR_UPDATABLE);

if(p!=null)

for(int i=0;i<p.length;i++){

preparedStatement.setString(i+1,p[i]);

}

boolean row=preparedStatement.execute();

return row;

}

@SneakyThrows

public ResultSet queryTable(String sql,String... p){

getConnection();

List<Letter> letterList=new ArrayList<>();

preparedStatement=connection.prepareStatement(sql,ResultSet.TYPE_SCROLL_INSENSITIVE,ResultSet.CONCUR_UPDATABLE);

if(p!=null)

for(int i=0;i<p.length;i++){

preparedStatement.setString(i+1,p[i]);

}

ResultSet resultSet=preparedStatement.executeQuery();

return resultSet;

}

@SneakyThrows

public void closeConnection(){

if(preparedStatement!=null) preparedStatement.close();

if(connection!=null) connection.close();

}

}package com.example.letterproject.dao;

import com.example.letterproject.utils.LetterHive;

import java.sql.ResultSet;

public class LetterHiveDao{

private LetterHive letterHive;

public LetterHiveDao() {

this.letterHive = new LetterHive();

}

public void createTable() {

letterHive.createTable();

letterHive.closeConnection();

}

public ResultSet selectLetter(String sql, String... p) {

return letterHive.queryTable(sql,p);

}

public Boolean updateLetter(String sql,String... p) {

return letterHive.updateTable(sql,p);

}

public void close(){

letterHive.closeConnection();

}

}package com.example.letterproject.service;

import com.example.letterproject.dao.LetterHiveDao;

public class LetterHiveService {

private LetterHiveDao letterHiveDao;

public LetterHiveService() {

this.letterHiveDao = new LetterHiveDao();

}

public void createLetterTable(){

letterHiveDao.createTable();

}

public void loadLetter(String inpath) {

String sql="load data inpath ? overwrite into table letters";

letterHiveDao.updateLetter(sql,inpath);

System.out.println("导入成功");

}

public void selectLetterByStartTime(){

letterHiveDao.updateLetter("drop table letter_start_num");

String sql="create table if not exists letter_start_num row format delimited fields terminated by '\t' stored as textfile as " +

"select year(letterDate) as year,month(letterDate) as month,count(*) as letter_num from letters group by year(letterDate),month(letterDate) order by year(letterDate),month(letterDate)";

letterHiveDao.updateLetter(sql);

System.out.println("查询成功");

}

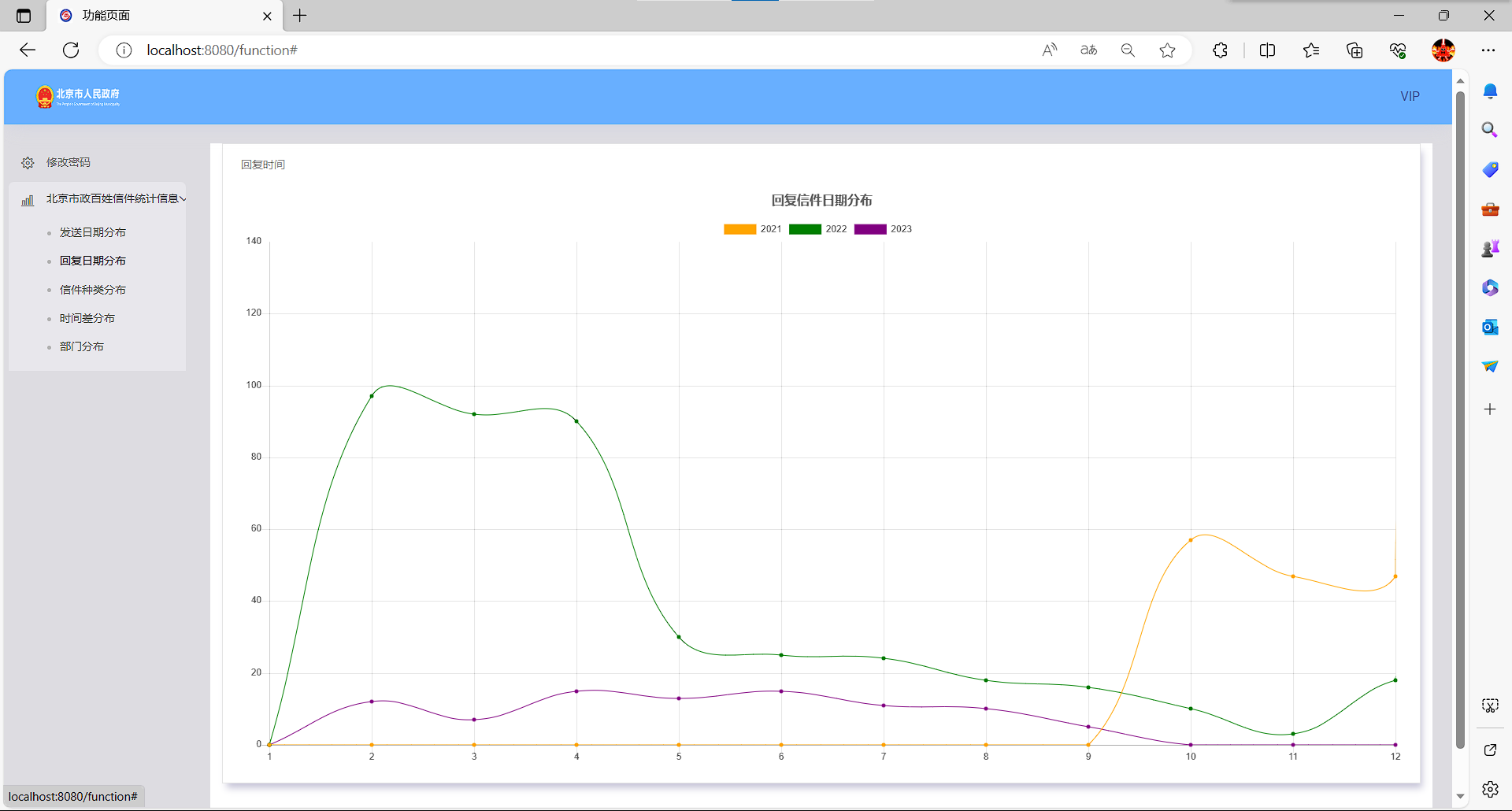

public void selectLetterByEndTime(){

letterHiveDao.updateLetter("drop table letter_end_num");

String sql= "create table if not exists letter_end_num row format delimited fields terminated by '\t' stored as textfile as " +

"select year(replyDate) as year,month(replyDate) as month,count(*) as letter_num from letters group by year(replyDate),month(replyDate) order by year(replyDate),month(replyDate)";

letterHiveDao.updateLetter(sql);

System.out.println("查询成功");

}

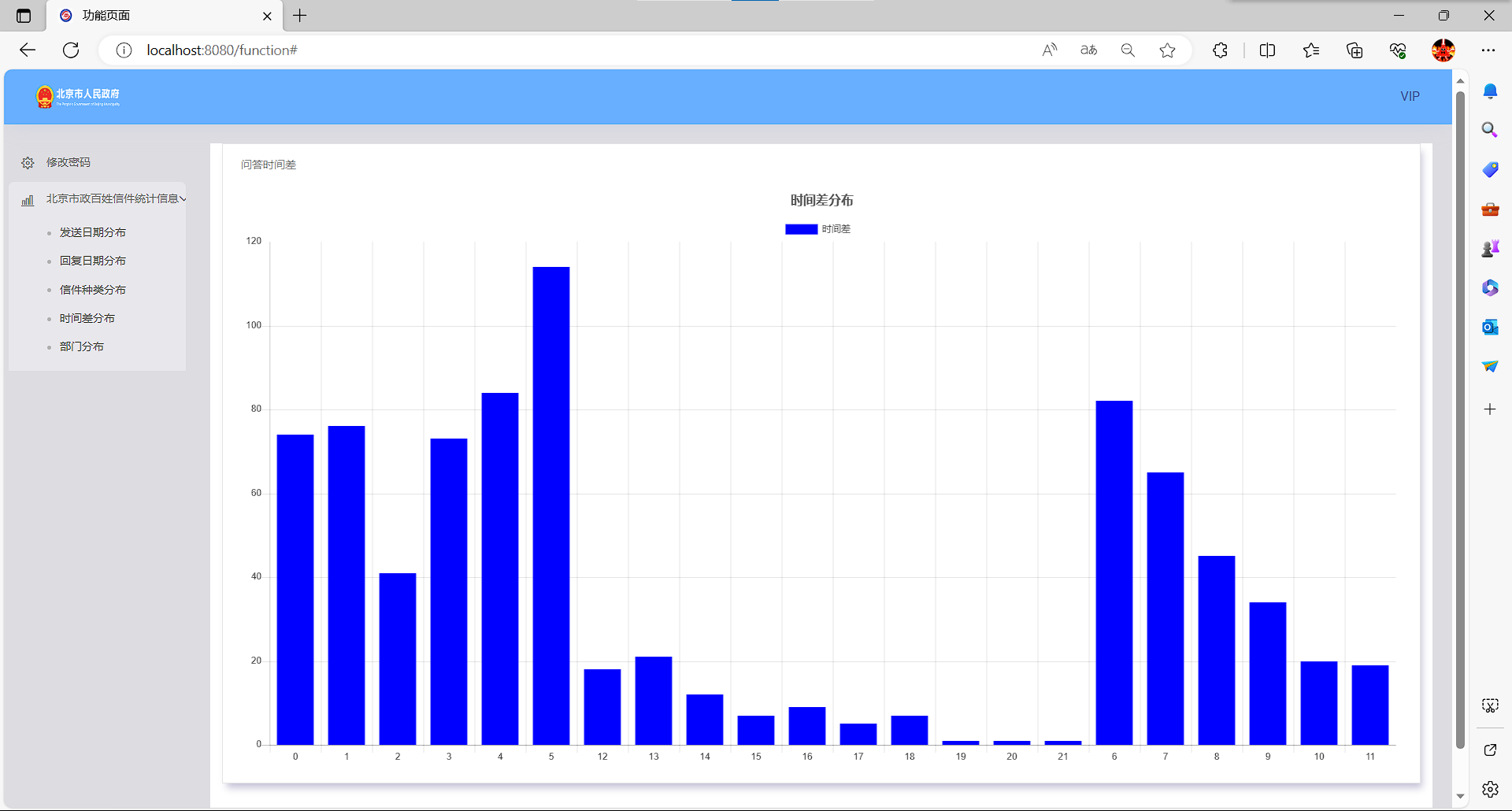

public void selectLetterByToad(){

letterHiveDao.updateLetter("drop table letter_toad");

String sql= "create table if not exists letter_toad row format delimited fields terminated by '\t' stored as textfile as " +

"select datediff(replyDate,letterDate) as toad,count(*) as toad_num from letters group by datediff(replyDate,letterDate) order by datediff(replyDate,letterDate)";

letterHiveDao.updateLetter(sql);

System.out.println("查询成功");

}

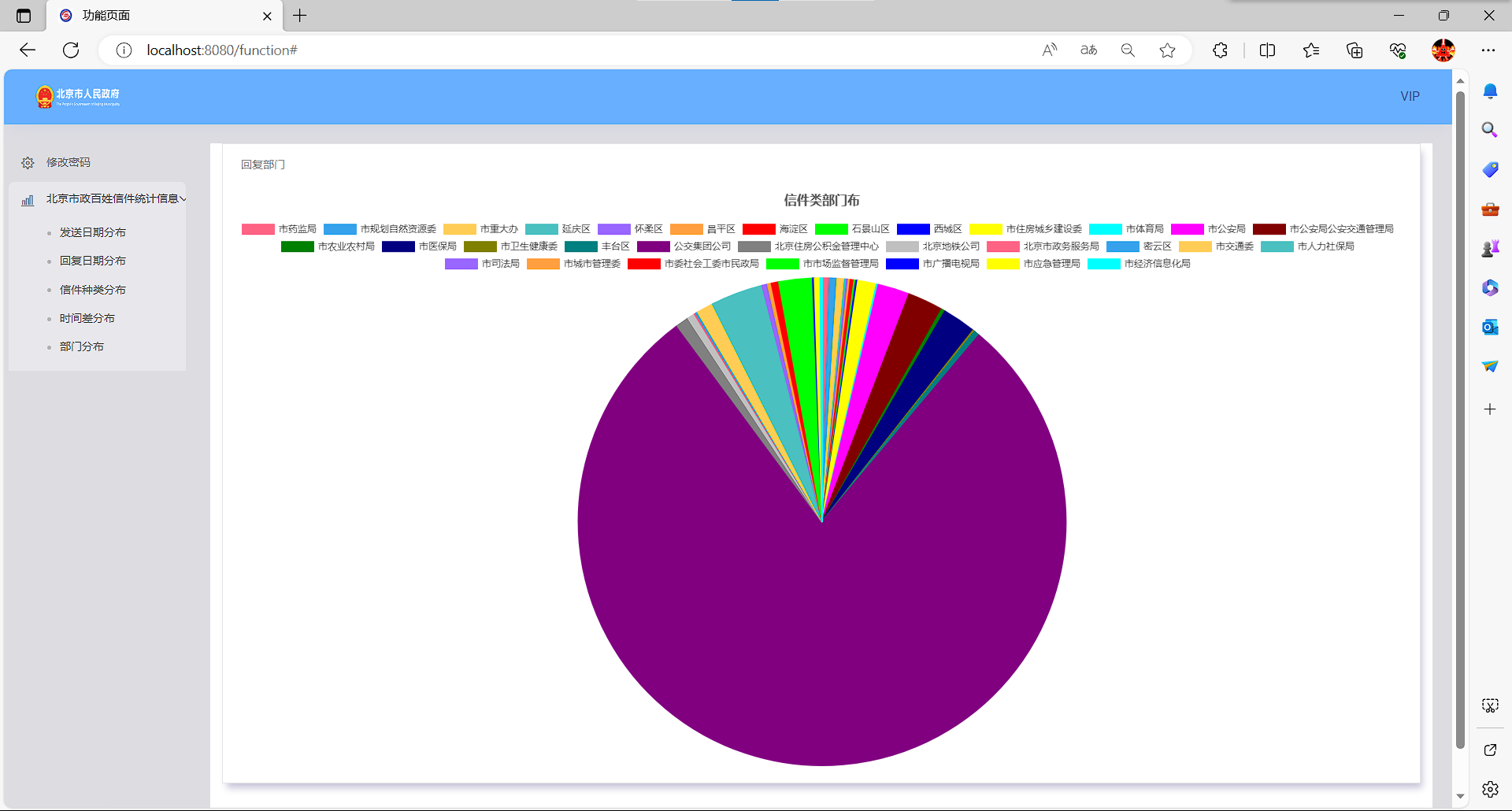

public void selectLetterByOrganization(){

letterHiveDao.updateLetter("drop table letter_organization");

String sql= "create table if not exists letter_organization row format delimited fields terminated by '\t' stored as textfile as " +

"select replyOrganization as organization,count(*) as letter_num from letters group by replyOrganization order by replyOrganization";

letterHiveDao.updateLetter(sql);

System.out.println("查询成功");

}

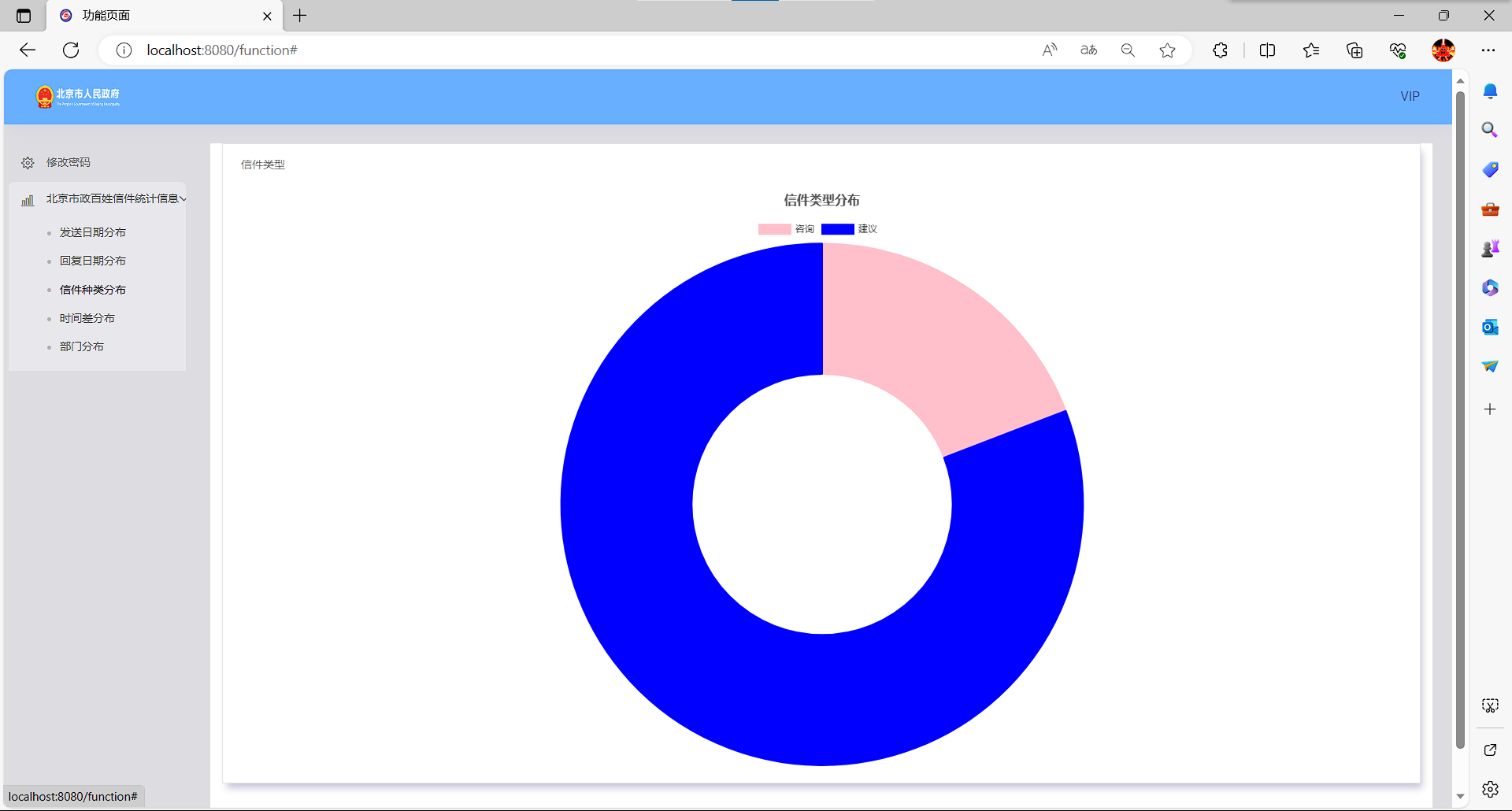

public void selectLetterByType(){

letterHiveDao.updateLetter("drop table letter_type");

String sql= "create table if not exists letter_type row format delimited fields terminated by '\t' stored as textfile as " +

"select letterType as type,count(*) as letter_num from letters group by letterType order by letterType";

letterHiveDao.updateLetter(sql);

System.out.println("查询成功");

}

}数据初始化

package com.example.letterproject.utils;

import com.example.letterproject.service.LetterDateService;

import com.example.letterproject.service.LetterHiveService;

import com.jcraft.jsch.ChannelExec;

import com.jcraft.jsch.JSch;

import com.jcraft.jsch.Session;

import lombok.SneakyThrows;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.boot.CommandLineRunner;

import org.springframework.stereotype.Component;

import java.io.InputStream;

@Component

public class LetterData implements CommandLineRunner {

@Autowired

private LetterDateService letterDateService;

@SneakyThrows

private boolean sshHost(String commond){

JSch jsch = new JSch();

Session session = jsch.getSession("bill","billsaifu", 22);

session.setPassword("732338737q");

session.setConfig("StrictHostKeyChecking", "no");

session.connect();

ChannelExec channelExec = (ChannelExec) session.openChannel("exec");

channelExec.setCommand(commond);

InputStream in = channelExec.getInputStream();

channelExec.connect();

boolean flag=false;

byte[] tmp = new byte[1024];

while (true) {

while (in.available() > 0) {

int i = in.read(tmp, 0, 1024);

if (i < 0) break;

System.out.print(new String(tmp, 0, i));

}

if (channelExec.isClosed()) {

if (in.available() > 0) continue;

flag=channelExec.getExitStatus()==0;

System.out.println("Exit status: " + channelExec.getExitStatus());

break;

}

}

channelExec.disconnect();

session.disconnect();

return flag;

}

@SneakyThrows

public void initData() {

boolean flag=false;

System.out.println("==================开始爬取信件数据==================");

LetterUtils letterUtils=new LetterUtils();

letterUtils.loadLetters();

System.out.println("==================爬取完成==================");

System.out.println("本地上传数据到HDFS");

LetterHDFS letterHDFS=new LetterHDFS();

letterHDFS.init();

letterHDFS.DelFile("/user/bill/letter_res");

letterHDFS.CpFile("LetterProject/src/main/resources/static/data/letter_htmls","/user/bill/");

System.out.println("==================网页数据上传完成==================");

System.out.println("==================开始mapreduce清洗数据");

flag=sshHost("cd test;hadoop jar LP.jar org.example.Main /user/bill/letter_htmls/www.beijing.gov.cn/ /user/bill/letter_res");

if(flag) System.out.println("==================mapreduce成功==================");

else {

System.out.println("==================mapreduce失败==================");

return;

}

LetterHiveService letterHiveService=new LetterHiveService();

letterHiveService.createLetterTable();

System.out.println("==================hive表格创建成功==================");

if(letterHDFS.isFile("/user/bill/letter_res/part-r-00000"))

letterHiveService.loadLetter("/user/bill/letter_res/part-r-00000");

else {

System.out.println("==================part-r-00000不存在==================");

return;

}

letterHDFS.closeFS();

letterHiveService.selectLetterByStartTime();

letterHiveService.selectLetterByEndTime();

letterHiveService.selectLetterByType();

letterHiveService.selectLetterByToad();

letterHiveService.selectLetterByOrganization();

System.out.println("==================hive表格数据上传成功==================");

letterDateService.init();

flag=sshHost("sqoop export " +

" --connect jdbc:mysql://billsaifu:3306/school?characterEncoding=utf8 " +

" --username root " +

" --password 123456 " +

" --table letter_start_num " +

" --export-dir /user/hive/warehouse/school.db/letter_start_num " +

" --input-fields-terminated-by \"\\t\" ");

if (flag){

System.out.println("==================letter_start_num导出成功==================");

}

else {

System.out.println("==================letter_start_num导出失败==================");

return;

}

flag=sshHost("sqoop export " +

" --connect jdbc:mysql://billsaifu:3306/school?characterEncoding=utf8 " +

" --username root " +

" --password 123456 " +

" --table letter_end_num " +

" --export-dir /user/hive/warehouse/school.db/letter_end_num " +

" --input-fields-terminated-by \"\\t\" ");

if (flag){

System.out.println("==================letter_end_num导出成功==================");

}

else {

System.out.println("==================letter_end_num导出失败==================");

return;

}

flag=sshHost("sqoop export " +

" --connect jdbc:mysql://billsaifu:3306/school?characterEncoding=utf8 " +

" --username root " +

" --password 123456 " +

" --table letter_type " +

" --export-dir /user/hive/warehouse/school.db/letter_type " +

" --input-fields-terminated-by \"\\t\" ");

if (flag){

System.out.println("==================letter_type导出成功==================");

}

else {

System.out.println("==================letter_type导出失败==================");

return;

}

flag=sshHost("sqoop export " +

" --connect jdbc:mysql://billsaifu:3306/school?characterEncoding=utf8 " +

" --username root " +

" --password 123456 " +

" --table letter_toad " +

" --export-dir /user/hive/warehouse/school.db/letter_toad " +

" --input-fields-terminated-by \"\\t\" ");

if (flag){

System.out.println("==================letter_toad导出成功==================");

}

else {

System.out.println("==================letter_toad导出失败==================");

return;

}

flag=sshHost("sqoop export " +

" --connect jdbc:mysql://billsaifu:3306/school?characterEncoding=utf8 " +

" --username root " +

" --password 123456 " +

" --table letter_organization " +

" --export-dir /user/hive/warehouse/school.db/letter_organization " +

" --input-fields-terminated-by \"\\t\" ");

if (flag){

System.out.println("==================letter_organization导出成功==================");

}

else {

System.out.println("==================letter_organization导出失败==================");

return;

}

}

@Override

public void run(String... args) throws Exception {

System.out.println("==================初始化获取百姓信件数据==================");

initData();

System.out.println("==================信件数据初始化成功==================");

}

}上面是部分源码