Building an ELK Platform (Part 1)

1. Purpose

To guide the setup of a standard ELK platform on CentOS 6.8.

2. Definition

Elasticsearch, Logstash and Kibana working together with Redis.

3. Scope

This document is for operations engineers in the operations and maintenance team who set up a standard ELK platform on CentOS 6.8.

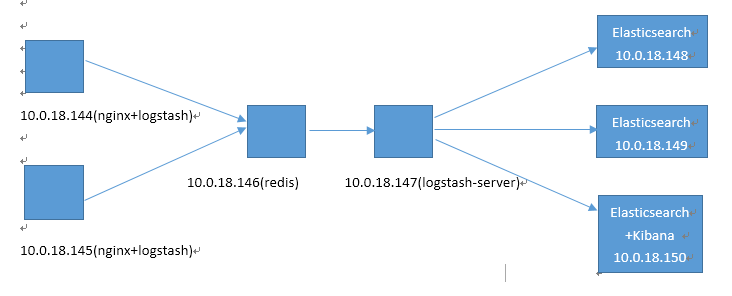

4. Environment (architecture diagram shown above; the IPs in the diagram differ from the ones used in this document)

| | Elasticsearch+Logstash_server | Logstash_agent | Elasticsearch+Logstash_agent | Logstash_agent | Elasticsearch+Logstash_agent+Redis+Kibana |
|---|---|---|---|---|---|
| OS | CentOS 6.8 x64 | CentOS 6.8 x64 | CentOS 6.8 x64 | CentOS 6.8 x64 | CentOS 6.8 x64 |
| CPU/RAM | Dual 8-core CPU/32G | Dual 8-core CPU/32G | Dual 8-core CPU/16G | Dual 8-core CPU/16G | Dual 8-core CPU/64G |
| Public IP | | | | | 1.1.1.1 |
| Private IP | 192.168.0.15 | 192.168.0.16 | 192.168.0.17 | 192.168.0.18 | 192.168.0.19 |

| Software | Version |
|---|---|
| Elasticsearch | elasticsearch-2.4.0 |
| Logstash | logstash-2.3.4.tar |
| Kibana | kibana-4.6.1-linux-x86_64 |
| Redis | redis-3.0.7 |
| JDK | jdk-8u144-linux-x64 |

5. Prepare the installation packages

https://www.elastic.co/downloads/past-releases/elasticsearch-2-4-0

https://www.elastic.co/downloads/past-releases/logstash-2-3-4

https://www.elastic.co/downloads/past-releases/kibana-4-6-1

http://download.redis.io/releases/redis-3.0.7.tar.gz

6. Installation

6.1 Install the Java environment

Install jdk-8u144-linux-x64 on each of the five servers:

#mkdir -p /usr/java

#tar -xzvf jdk-8u144-linux-x64.tar.gz -C /usr/java

#vim /etc/profile

Append the following:

export JAVA_HOME=/usr/java/jdk1.8.0_144

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:$PATH

# source /etc/profile

# java -version

java version "1.8.0_144"

Java(TM) SE Runtime Environment (build 1.8.0_144-b01)

Java HotSpot(TM) 64-Bit Server VM (build 25.144-b01, mixed mode)
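Because this step is repeated on all five servers, it can help to compare the reported JDK version in a script instead of reading it by eye. The following is a minimal sketch that assumes bash and the `java -version` output format shown above. The helper name java_version_of is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `java -version` output on stdin and print the quoted
# version string from the first line that contains one (e.g. 1.8.0_144).
java_version_of() {
  sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1
}

# Usage on each server (java -version writes to stderr, hence 2>&1):
#   java -version 2>&1 | java_version_of
```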

6.2 Install Logstash

6.2.1 Extract the Logstash package

Logstash_server and logstash_agent are installed the same way.

Extract Logstash on each of the five servers:

# tar -xzvf logstash-2.3.4.tar.gz -C /data/soft/

6.2.2 Create the config directory

Create the conf directory on each of the five servers:

# mkdir -p /data/soft/logstash-2.3.4/conf/

6.3 Install Redis

6.3.1 Install Redis on 192.168.0.19

#yum install gcc gcc-c++ -y # skip this if already installed

#wget http://download.redis.io/releases/redis-3.0.7.tar.gz

#tar xf redis-3.0.7.tar.gz

#cd redis-3.0.7

#make

#mkdir -p /usr/local/redis/{conf,bin}

#cp ./*.conf /usr/local/redis/conf/

#cp runtest* /usr/local/redis/

#cd utils/

#cp mkrelease.sh /usr/local/redis/bin/

#cd ../src

#cp redis-benchmark redis-check-aof redis-check-dump redis-cli redis-sentinel redis-server redis-trib.rb /usr/local/redis/bin/

Create the Redis data and log directories:

#mkdir -pv /data/redis/db

#mkdir -pv /data/log/redis

6.3.2 Edit the Redis configuration file

#cd /usr/local/redis/conf

#vi redis.conf

Change dir ./ to:

dir /data/redis/db/

Save and exit.
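The same change can be made without an editor, which is useful when scripting the install. The sketch below assumes GNU sed (`sed -i`) and a redis.conf with a single line starting with "dir ". set_redis_dir is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point the Redis data directory ("dir" directive) at $2.
# Assumes GNU sed (-i edits the file in place) and a "dir ..." line at line start.
set_redis_dir() {
  local conf="$1" dir="$2"
  sed -i "s|^dir .*|dir ${dir}|" "$conf"
}

# Usage on 192.168.0.19:
#   set_redis_dir /usr/local/redis/conf/redis.conf /data/redis/db/
#   grep '^dir ' /usr/local/redis/conf/redis.conf
```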

6.3.3 Start Redis

#nohup /usr/local/redis/bin/redis-server /usr/local/redis/conf/redis.conf &

6.3.4 Check the Redis process

#ps -ef | grep redis

root 4425 1149 0 16:21 pts/0 00:00:00 /usr/local/redis/bin/redis-server *:6379

root 4435 1149 0 16:22 pts/0 00:00:00 grep redis

#netstat -tunlp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1402/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1103/master

tcp 0 0 0.0.0.0:6379 0.0.0.0:* LISTEN 4425/redis-server *

tcp 0 0 :::22 :::* LISTEN 1402/sshd

tcp 0 0 ::1:25 :::* LISTEN 1103/master

tcp 0 0 :::6379 :::* LISTEN 4425/redis-server *
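A small filter can check a port in a script instead of reading the whole netstat listing. This sketch assumes the `netstat -tunlp` column layout shown above: local address in column 4 and state in column 6. is_listening is a hypothetical name. The same check can be reused later for Elasticsearch (9200/9300) and Kibana (5601).

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `netstat -tunlp` output on stdin and succeed if some
# TCP socket is in LISTEN state on local port $1 (IPv4 or IPv6).
is_listening() {
  awk -v port="$1" '
    $1 ~ /^tcp/ && $6 == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
    END { exit !found }'
}

# Usage:
#   netstat -tunlp | is_listening 6379 && echo "redis is listening"
```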

6.3 Configure logstash_agent

6.3.1 On 192.168.0.16, collect the log /data/soft/tomcat-send/logs/catalina.out

# cd /data/soft/logstash-2.3.4/conf/

#vim logstash.conf

input {

file {

path => "/data/soft/tomcat-send/logs/catalina.out"

type => "36tomcat-send"

start_position => "beginning"

}

}

output {

if [type] == "36tomcat-send" {

redis {

host => "192.168.0.19"

data_type => "list"

key => '36elk_tomcat-send'

}

}

}

This configuration ships the logs written to that path into Redis. type and key are user-defined labels that make each log stream easy to identify.

6.3.2 Start logstash_agent

#/data/soft/logstash-2.3.4/bin/logstash -f /data/soft/logstash-2.3.4/conf/logstash.conf --configtest # check the syntax

#/data/soft/logstash-2.3.4/bin/logstash -f /data/soft/logstash-2.3.4/conf/logstash.conf

#ps -ef |grep logstash # verify that it started

6.4 Install Elasticsearch

Install Elasticsearch on each of the three servers 192.168.0.15, 192.168.0.17 and 192.168.0.19. The steps below use 192.168.0.15 as the example.

6.4.1 Add an elasticsearch user, because Elasticsearch must be started as an ordinary (non-root) user.

#adduser elasticsearch

#passwd elasticsearch

# tar -xzvf elasticsearch-2.4.0.tar.gz -C /home/elasticsearch/

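The archive is extracted as root, so the elasticsearch user cannot edit its configuration files. Give the user ownership of its home directory (changing ownership is enough; a recursive chmod 777 is not needed and would make every file world-writable):

#chown -R elasticsearch.elasticsearch /home/elasticsearch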

#su - elasticsearch

6.4.2 Edit the Elasticsearch configuration file

# cd elasticsearch-2.4.0

#mkdir {data,logs}

# cd config

#vim elasticsearch.yml

cluster.name: serverlog # cluster name, can be customized

node.name: node-1 # node name, can also be customized

path.data: /home/elasticsearch/elasticsearch-2.4.0/data # data directory

path.logs: /home/elasticsearch/elasticsearch-2.4.0/logs # log directory

network.host: 192.168.0.15 # this node's IP

http.port: 9200 # HTTP port

discovery.zen.ping.unicast.hosts: ["192.168.0.17","192.168.0.19"] # IPs of the other cluster nodes

discovery.zen.minimum_master_nodes: 2 # quorum of master-eligible nodes: 3 / 2 + 1 = 2
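discovery.zen.minimum_master_nodes is meant to be a quorum of the master-eligible nodes: (N / 2) + 1, using integer division. With a quorum, a partitioned minority cannot elect its own master (split brain), and the cluster can still elect a master when one node is down. Below is a one-line sketch of the arithmetic (quorum is a hypothetical helper). For the three nodes here it gives 2.

```shell
#!/usr/bin/env bash
# Hypothetical helper: quorum of N master-eligible nodes, floor(N / 2) + 1,
# i.e. the value recommended for discovery.zen.minimum_master_nodes.
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Usage:
#   quorum 3    # three master-eligible nodes in this cluster
```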

6.4.3 Start the service

#cd /home/elasticsearch/elasticsearch-2.4.0

#./bin/elasticsearch -d

Check the process:

#ps -ef | grep elasticsearch

Check the ports:

#netstat -tunlp

(Not all processes could be identified, non-owned process info

will not be shown, you would have to be root to see it all.)

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN -

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN -

tcp 0 0 ::ffff:10.0.18.148:9300 :::* LISTEN 1592/java

tcp 0 0 :::22 :::* LISTEN -

tcp 0 0 ::1:25 :::* LISTEN -

tcp 0 0 ::ffff:10.0.18.148:9200 :::* LISTEN

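Two ports are opened. 9200 is the HTTP port for the REST API used by clients (curl, Logstash, Kibana). 9300 is the transport port for communication between cluster nodes, including master election. Configure the other two Elasticsearch nodes the same way, adjusting node.name, network.host and the unicast host list for each node.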

6.4.4 Once all three Elasticsearch nodes are set up, check the cluster health

# curl -XGET 'http://192.168.0.19:9200/_cluster/health?pretty'

{

"cluster_name" : "serverlog",

"status" : "green",

"timed_out" : false,

"number_of_nodes" : 3,

"number_of_data_nodes" : 3,

"active_primary_shards" : 105,

"active_shards" : 210,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}
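In scripts, usually only the status field matters. The sketch below assumes the JSON layout shown above. cluster_status is a hypothetical helper, and it uses sed rather than a JSON parser, so it is only meant for this simple response. Elasticsearch can also block until a status is reached, through the wait_for_status and timeout parameters of _cluster/health.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the "status" value (green/yellow/red) from
# _cluster/health JSON read on stdin; works for pretty and compact output.
cluster_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Usage:
#   curl -s 'http://192.168.0.19:9200/_cluster/health?pretty' | cluster_status
#   curl -s 'http://192.168.0.19:9200/_cluster/health?wait_for_status=green&timeout=30s' | cluster_status
```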

6.4.5 Check the nodes

# curl -XGET 'http://192.168.0.15:9200/_cat/nodes?v'

host ip heap.percent ram.percent load node.role master name

192.168.0.15 192.168.0.15 27 28 0.10 d * node-1

192.168.0.19 192.168.0.19 20 100 6.03 d m node-3

192.168.0.17 192.168.0.17 30 87 0.17 d m node-2

Note: * marks the current master node; m marks a master-eligible node.
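A script can get the current master's name by locating the master and name columns from the header line. The sketch below assumes the _cat/nodes?v output format shown above; current_master is a hypothetical helper. Elasticsearch 2.x also has a dedicated _cat/master endpoint that reports the elected master directly.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `_cat/nodes?v` output on stdin and print the name of
# the node whose "master" column is "*". Column positions come from the header.
current_master() {
  awk 'NR == 1 { for (i = 1; i <= NF; i++) { if ($i == "master") m = i; if ($i == "name") n = i }; next }
       m && $m == "*" { print $n }'
}

# Usage:
#   curl -s 'http://192.168.0.15:9200/_cat/nodes?v' | current_master
```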

6.4.6 View index and shard information

#curl -XGET 'http://10.0.18.148:9200/_cat/indices?v'

health status index pri rep docs.count docs.deleted store.size pri.store.size

6.4.7 Install the following plugins on all three Elasticsearch nodes:

#su - elasticsearch

#cd elasticsearch-2.4.0

#./bin/plugin install license # license plugin

-> Installing license...

Trying https://download.elastic.co/elasticsearch/release/org/elasticsearch/plugin/license/2.3.4/license-2.3.4.zip ...

Downloading .......DONE

Verifying https://download.elastic.co/elasticsearch/release/org/elasticsearch/plugin/license/2.3.4/license-2.3.4.zip checksums if available ...

Downloading .DONE

Installed license into /home/elasticsearch/elasticsearch-2.3.4/plugins/license

# ./bin/plugin install marvel-agent # marvel-agent plugin

-> Installing marvel-agent...

Trying https://download.elastic.co/elasticsearch/release/org/elasticsearch/plugin/marvel-agent/2.3.4/marvel-agent-2.3.4.zip ...

Downloading ..........DONE

Verifying https://download.elastic.co/elasticsearch/release/org/elasticsearch/plugin/marvel-agent/2.3.4/marvel-agent-2.3.4.zip checksums if available ...

Downloading .DONE

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

@ WARNING: plugin requires additional permissions @

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

* java.lang.RuntimePermission setFactory

* javax.net.ssl.SSLPermission setHostnameVerifier

See http://docs.oracle.com/javase/8/docs/technotes/guides/security/permissions.html

for descriptions of what these permissions allow and the associated risks.

Continue with installation? [y/N]y # enter y to agree to install this plugin

Installed marvel-agent into /home/elasticsearch/elasticsearch-2.3.4/plugins/marvel-agent

# ./bin/plugin install mobz/elasticsearch-head # install the head plugin

-> Installing mobz/elasticsearch-head...

Trying https://github.com/mobz/elasticsearch-head/archive/master.zip ...

Downloading ...........................................................................................................................................................................DONE

Verifying https://github.com/mobz/elasticsearch-head/archive/master.zip checksums if available ...

NOTE: Unable to verify checksum for downloaded plugin (unable to find .sha1 or .md5 file to verify)

Installed head into /home/elasticsearch/elasticsearch-2.3.4/plugins/head

Install the bigdesk plugin:

#cd plugins/

#mkdir bigdesk

#cd bigdesk

#git clone https://github.com/lukas-vlcek/bigdesk _site

Initialized empty Git repository in /home/elasticsearch/elasticsearch-2.3.4/plugins/bigdesk/_site/.git/

remote: Counting objects: 5016, done.

remote: Total 5016 (delta 0), reused 0 (delta 0), pack-reused 5016

Receiving objects: 100% (5016/5016), 17.80 MiB | 1.39 MiB/s, done.

Resolving deltas: 100% (1860/1860), done.

Edit _site/js/store/BigdeskStore.js. At roughly line 142, change:

return (major == 1 && minor >= 0 && maintenance >= 0 && (build != 'Beta1' || build != 'Beta2'));

to:

return (major >= 1 && minor >= 0 && maintenance >= 0 && (build != 'Beta1' || build != 'Beta2'));
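The same edit can be applied non-interactively, for example when repeating it on all three nodes. The sketch below assumes GNU sed and that the line matches the text shown above exactly. patch_bigdesk is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: relax bigdesk's version check from "major == 1" to "major >= 1"
# in BigdeskStore.js so that it accepts Elasticsearch 2.x. Assumes GNU sed (-i).
patch_bigdesk() {
  # In the replacement, \& is a literal "&" (a bare & would insert the whole match).
  sed -i 's/return (major == 1 && minor/return (major >= 1 \&\& minor/' "$1"
}

# Usage, from plugins/bigdesk:
#   patch_bigdesk _site/js/store/BigdeskStore.js
#   grep -n 'major >= 1' _site/js/store/BigdeskStore.js
```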

Add the plugin's properties file:

#cat >plugin-descriptor.properties<<EOF

description=bigdesk - Live charts and statistics for Elasticsearch cluster.

version=2.5.1

site=true

name=bigdesk

EOF

Install the kopf plugin (run this from the Elasticsearch home directory, not from plugins/bigdesk):

#./bin/plugin install lmenezes/elasticsearch-kopf

-> Installing lmenezes/elasticsearch-kopf...

Trying https://github.com/lmenezes/elasticsearch-kopf/archive/master.zip

Downloading .....................................................................................................................................DONE

Verifying https://github.com/lmenezes/elasticsearch-kopf/archive/master.zip checksums if available ...

NOTE: Unable to verify checksum for downloaded plugin (unable to find .sha1 or .md5 file to verify)

Installed kopf into /home/elasticsearch/elasticsearch-2.3.4/plugins/kopf

6.4.8 List the installed plugins

#cd /home/elasticsearch/elasticsearch-2.4.0

# ./bin/plugin list

Installed plugins in /home/elasticsearch/elasticsearch-2.3.4/plugins:

- head

- license

- bigdesk

- marvel-agent

- kopf

6.5 Configure logstash_server

6.5.1 On 192.168.0.15:

#cd /data/soft/logstash-2.3.4/conf

# mkdir -p {16..19}.config

#cd 16.config

#vim logstash_server.conf

input {

redis {

type => "36tomcat-send"

host => "192.168.0.19"

port => "6379"

data_type => "list"

key => '36elk_tomcat-send'

batch_count => 1

}

}

output {

if [type] == "36tomcat-send" {

elasticsearch {

hosts => ["192.168.0.15:9200"] # one of the ES servers

index => "192.168.0.16-tomcat-send-log-%{+YYYY.MM.dd}" # index name defined here; it is used later in Kibana

}

}

}

The key and type here must match the ones in the logstash_agent configuration in 6.3.1.
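A mismatched key makes the server read from a different Redis list, so the agent's events pile up in Redis unread. It can be worth comparing the two files before starting Logstash. The sketch below only checks key => '...' lines; conf_keys and keys_match are hypothetical helpers, and the type values could be compared the same way.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the Redis keys (key => '...' or key => "...") declared
# in a Logstash config file, one per line, sorted and de-duplicated.
conf_keys() {
  sed -n "s/^[[:space:]]*key[[:space:]]*=>[[:space:]]*['\"]\([^'\"]*\)['\"].*/\1/p" "$1" | sort -u
}

# Succeed only if the agent config ($1) and server config ($2) use the same keys.
keys_match() {
  [ "$(conf_keys "$1")" = "$(conf_keys "$2")" ]
}

# Usage (after copying the agent config from 192.168.0.16 to the server):
#   keys_match agent-logstash.conf /data/soft/logstash-2.3.4/conf/16.config/logstash_server.conf \
#     && echo "keys match"
```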

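To improve performance, the following tuning parameters were used. They come from the logstash.yml of a Logstash 5.4.1 installation. logstash.yml only exists from Logstash 5.x onward, so with Logstash 2.3.4 the equivalent settings are passed as command-line flags instead (-w for pipeline workers, -b for batch size, -u for batch delay):

#/newnet.bak/elk/logstash-5.4.1/config/logstash.yml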

pipeline.batch.size: 10000

pipeline.workers: 8

pipeline.output.workers: 8

pipeline.batch.delay: 10

6.5.2 Start logstash_server

#/data/soft/logstash-2.3.4/bin/logstash -f /data/soft/logstash-2.3.4/conf/16.config/logstash_server.conf --configtest

#/data/soft/logstash-2.3.4/bin/logstash -f /data/soft/logstash-2.3.4/conf/16.config/logstash_server.conf

# ps -ef |grep logstash

6.6 Install and configure Kibana

6.6.1 Install Kibana on 192.168.0.19

# tar -xzvf kibana-4.6.1-linux-x86_64.tar.gz -C /data/soft/

6.6.2 Install plugins

#cd /data/soft/kibana-4.6.1-linux-x86_64/bin

#./kibana plugin --install elasticsearch/marvel/latest

Installing marvel

Attempting to transfer from https://download.elastic.co/elasticsearch/marvel/marvel-latest.tar.gz

Transferring 2421607 bytes....................

Transfer complete

Extracting plugin archive

Extraction complete

Optimizing and caching browser bundles...

Plugin installation complete

6.6.3 Edit the Kibana configuration file

#cd /data/soft/kibana-4.6.1-linux-x86_64/config

#vim kibana.yml

server.port: 5601

server.host: "0.0.0.0"

elasticsearch.url: "http://192.168.0.19:9200"

6.6.4 Start Kibana

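# nohup /data/soft/kibana-4.6.1-linux-x86_64/bin/kibana &

Then type exit to leave the shell; because of nohup, Kibana keeps running after you log out.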

# netstat -anput |grep 5601

7. Post-installation verification

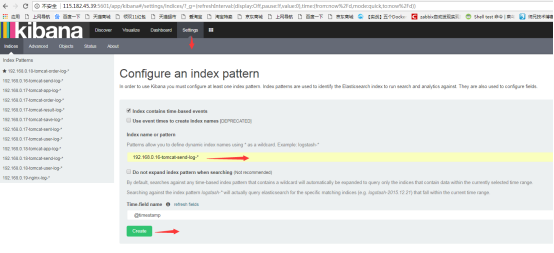

7.1 Open Kibana (port 5601) in a browser and create an index pattern, as shown below:

The index name in the red box is the index name configured in the logstash_server configuration file.

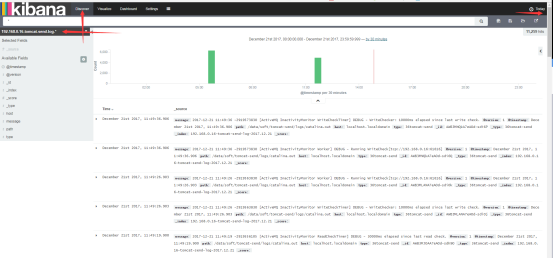

Click the green "Create" button to create it. Then open the "Discover" page in Kibana: the collected log data is already visible there.

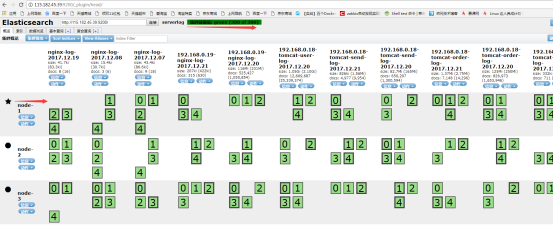

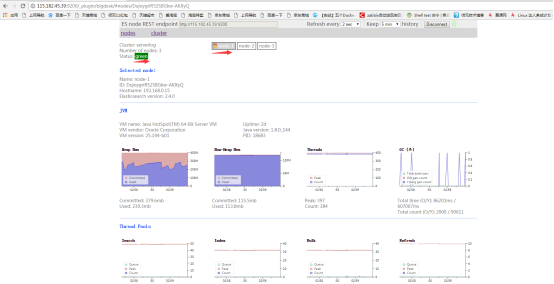

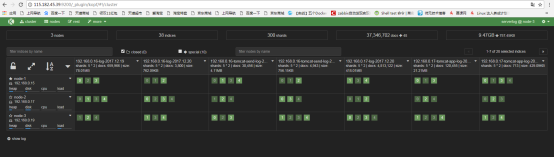

7.2 Open head to check that the cluster state is consistent, as shown below:

http://115.182.45.39:9200/_plugin/head

7.3 Open bigdesk to view cluster information, as shown below:

The figure also marks node-1 as the master node (with a star), and the data it shows refreshes continuously.

7.4 Open kopf to view cluster information, as shown below:

kopf also shows the node shard information mentioned above.

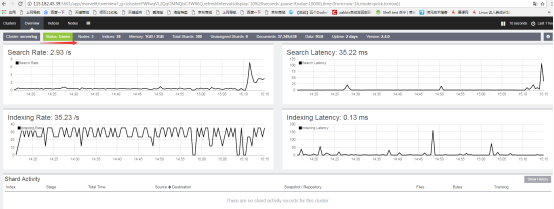

7.5 About Marvel

Marvel monitors your Elasticsearch cluster and provides actionable insights to help you get the most out of it. It is free to use in both development and production.

http://115.182.45.39:5601/app/marvel#

7.6 About node shard information

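During this setup, the first check of the shard information showed nothing, because no index had been created yet. Once indices were created, their information appeared, but the cluster information was still missing. The cause was probably the same as in the second issue: Elasticsearch was misbehaving. After a restart, the following was shown: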

# curl -XGET '192.168.0.19:9200/_cat/indices?v'

health status index pri rep docs.count docs.deleted store.size pri.store.size

green open 192.168.0.18-tomcat-send-log-2017.12.20 5 1 650297 0 165.4mb 82.6mb

green open 192.168.0.16-log-2017.12.20 5 1 3800 0 1.4mb 762kb

green open 192.168.0.17-tomcat-order-log-2017.12.20 5 1 905074 0 274.9mb 137.4mb

green open 192.168.0.17-tomcat-order-log-2017.12.21 5 1 7169 0 2.5mb 1.2mb

green open 192.168.0.19-nginx-log-2017.12.20 5 1 525427 0 231.4mb 115.6mb

green open 192.168.0.19-nginx-log-2017.12.21 5 1 315 0 421.6kb 207.2kb
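Because the index names end in %{+YYYY.MM.dd}, Logstash creates one index per day, dated from the event's @timestamp in UTC. The sketch below lists the indices for a given day from the _cat/indices?v output, for example to review or clean up old daily indices. indices_for_date is a hypothetical helper, and it assumes the index name is the third column, as shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `_cat/indices?v` output on stdin (header line first)
# and print the index names that end with the given date suffix (YYYY.MM.dd).
indices_for_date() {
  awk -v d="$1" 'NR > 1 && substr($3, length($3) - length(d) + 1) == d { print $3 }'
}

# Usage (today's suffix in UTC, matching %{+YYYY.MM.dd}):
#   curl -s '192.168.0.19:9200/_cat/indices?v' | indices_for_date "$(date -u +%Y.%m.%d)"
```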

7.7 Creating multiple indices to store different types of logs

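We may need to collect and analyze more than the tomcat-send log, for example httpd, nginx or MySQL logs. Putting them all into one index is messy and makes troubleshooting harder, while one index per log type is more intuitive. To do this on the logstash_server, add one redis input and one conditional elasticsearch output per log type to its conf file(s), then start it: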

input {

redis {

type => "36tomcat-send"

host => "192.168.0.19"

port => "6379"

data_type => "list"

key => '36elk_tomcat-send'

batch_count => 1

}

redis {

type => "36activemq"

host => "192.168.0.19"

port => "6379"

data_type => "list"

key => '36elk_activemq'

batch_count => 1

}

redis {

type => "36tomcat-instant"

host => "192.168.0.19"

port => "6379"

data_type => "list"

key => '36elk_tomcat-instant'

batch_count => 1

}

redis {

type => "36zookeeper"

host => "192.168.0.19"

port => "6379"

data_type => "list"

key => '36elk_zookeeper'

batch_count => 1

}

}

output {

if [type] == "36tomcat-send" {

elasticsearch {

hosts => ["192.168.0.15:9200"]

index => "192.168.0.16-tomcat-send-log-%{+YYYY.MM.dd}"

}

}

if [type] == "36activemq" {

elasticsearch {

hosts => ["192.168.0.15:9200"]

index => "192.168.0.16-activemq-log-%{+YYYY.MM.dd}"

}

}

if [type] == "36tomcat-instant" {

elasticsearch {

hosts => ["192.168.0.15:9200"]

index => "192.168.0.16-tomcat-instant-log-%{+YYYY.MM.dd}"

}

}

if [type] == "36zookeeper" {

elasticsearch {

hosts => ["192.168.0.15:9200"]

index => "192.168.0.16-zookeeper-log-%{+YYYY.MM.dd}"

}

}

}
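With many log types, the server config above is mostly repetition. The sketch below generates it using the naming convention of this section: type 36<name>, Redis key 36elk_<name>, index <agent-ip>-<name>-log-%{+YYYY.MM.dd}. gen_server_conf and its arguments are hypothetical, and the generated file should still be checked with --configtest.

```shell
#!/usr/bin/env bash
# Hypothetical generator for a logstash_server config in the style of section 7.7.
# Args: REDIS_HOST ES_HOST AGENT_IP NAME... ; each NAME yields one redis input and
# one conditional elasticsearch output, using the "36"/"36elk_" naming shown above.
gen_server_conf() {
  local redis_host="$1" es_host="$2" agent_ip="$3"
  shift 3
  local name
  echo "input {"
  for name in "$@"; do
    cat <<EOF
  redis {
    type => "36${name}"
    host => "${redis_host}"
    port => "6379"
    data_type => "list"
    key => '36elk_${name}'
    batch_count => 1
  }
EOF
  done
  echo "}"
  echo "output {"
  for name in "$@"; do
    cat <<EOF
  if [type] == "36${name}" {
    elasticsearch {
      hosts => ["${es_host}:9200"]
      index => "${agent_ip}-${name}-log-%{+YYYY.MM.dd}"
    }
  }
EOF
  done
  echo "}"
}

# Usage on 192.168.0.15:
#   gen_server_conf 192.168.0.19 192.168.0.15 192.168.0.16 \
#     tomcat-send activemq tomcat-instant zookeeper \
#     > /data/soft/logstash-2.3.4/conf/16.config/logstash_server.conf
```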

Then, on each corresponding log server (the client), configure its own conf file as follows:

input {

file {

path => "/data/soft/tomcat-send/logs/catalina.out"

type => "36tomcat-send"

start_position => "beginning"

}

file {

path => "/data/soft/tomcat-instant/logs/catalina.out"

type => "36tomcat-instant"

start_position => "beginning"

}

file {

path => "/data/soft/activemq-5.9.0/data/activemq.log"

type => "36activemq"

start_position => "beginning"

}

file {

path => "/data/soft/zookeeper-3.4.6/logs/zookeeper.out"

type => "36zookeeper"

start_position => "beginning"

}

}

output {

if [type] == "36tomcat-send" {

redis {

host => "192.168.0.19"

data_type => "list"

key => '36elk_tomcat-send'

}

}

if [type] == "36tomcat-instant" {

redis {

host => "192.168.0.19"

data_type => "list"

key => '36elk_tomcat-instant'

}

}

if [type] == "36activemq" {

redis {

host => "192.168.0.19"

data_type => "list"

key => '36elk_activemq'

}

}

if [type] == "36zookeeper" {

redis {

host => "192.168.0.19"

data_type => "list"

key => '36elk_zookeeper'

}

}

}

At this point, the ELK platform is set up.

8. References

This document is mainly based on two technical blog posts:

http://blog.51cto.com/467754239/1700828

http://blog.51cto.com/linuxg/1843114