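Video Study Notes: "Self-Attention Mechanisms and Low-Rank Reconstruction in Semantic Segmentation"

"Self-Attention Mechanisms and Low-Rank Reconstruction in Semantic Segmentation", by Li Xia (李夏)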

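Semantic segmentation: predict a label for every pixel of an image simultaneously.

Fully convolutional networks: in theory the receptive field grows with depth, but in practice the effective receptive field remains small.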

Non-local Network (corresponds to the self-attention mechanism)

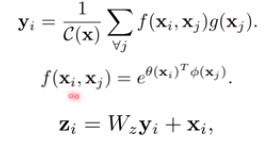

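Non-local neural networks: to infer what is present at a given position, the network models the relation between that position and every other position in the image. It is computed as: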

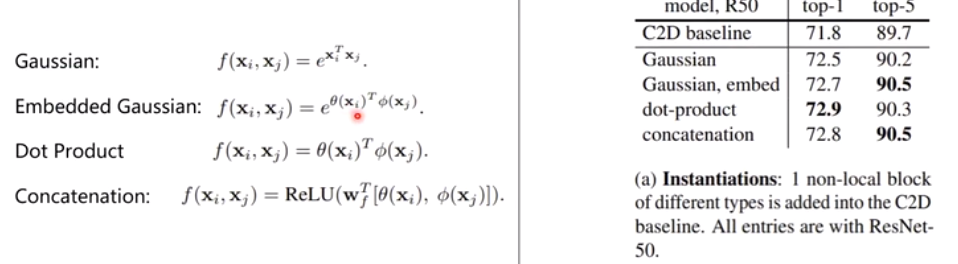

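Here f(x_i, x_j) models the relation between x_i and x_j, C(x) normalizes f, and g(x_j) is a transformation of the reference pixel x_j. Other choices for the similarity function: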

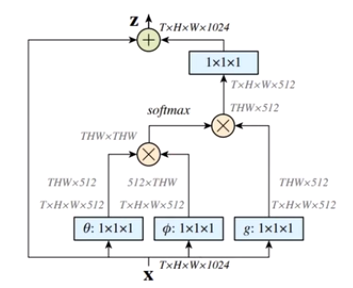
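For readers who cannot see the figures, the operation described above is commonly written as below, following the Non-local Neural Networks paper. The symbols \theta, \phi (learned embeddings) and w_f (a learned weight vector) do not appear in these notes and are taken from that paper, so treat this as a reference sketch rather than a transcription of the slides.

```latex
% Generic non-local operation
y_i = \frac{1}{\mathcal{C}(x)} \sum_{\forall j} f(x_i, x_j)\, g(x_j)

% Common choices for the similarity f
\begin{aligned}
\text{Gaussian:}          \quad & f(x_i, x_j) = e^{x_i^{\top} x_j},
                                & \mathcal{C}(x) &= \textstyle\sum_{\forall j} f(x_i, x_j) \\
\text{Embedded Gaussian:} \quad & f(x_i, x_j) = e^{\theta(x_i)^{\top} \phi(x_j)},
                                & \mathcal{C}(x) &= \textstyle\sum_{\forall j} f(x_i, x_j) \\
\text{Dot product:}       \quad & f(x_i, x_j) = \theta(x_i)^{\top} \phi(x_j),
                                & \mathcal{C}(x) &= N \\
\text{Concatenation:}     \quad & f(x_i, x_j) = \mathrm{ReLU}\!\left(w_f^{\top} [\theta(x_i), \phi(x_j)]\right),
                                & \mathcal{C}(x) &= N
\end{aligned}
```

With the embedded Gaussian choice, dividing by C(x) is a softmax over j, which is why the non-local block corresponds to self-attention.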

Concrete implementation:

The complexity is N*N*C, where N is the number of pixels and C is the number of channels.
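To make the N*N*C cost concrete, here is a minimal NumPy sketch of an embedded-Gaussian non-local operation on a flattened feature map. It is an illustration under assumptions, not the implementation from the talk: the function name `non_local`, the weight matrices `w_theta`, `w_phi`, `w_g` (standing in for 1x1 convolutions) and the toy sizes are all hypothetical, and the residual connection of the real block is omitted.

```python
import numpy as np


def softmax(a, axis):
    """Numerically stable softmax along the given axis."""
    a = a - a.max(axis=axis, keepdims=True)
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)


def non_local(x, w_theta, w_phi, w_g):
    """x: (N, C) flattened feature map; each weight is a (C, C) linear map.

    Returns the output y of shape (N, C) and the (N, N) attention matrix.
    """
    theta = x @ w_theta                      # (N, C) query embedding
    phi = x @ w_phi                          # (N, C) key embedding
    g = x @ w_g                              # (N, C) transformed reference pixels
    attn = softmax(theta @ phi.T, axis=1)    # (N, N): f / C(x), costs N*N*C
    y = attn @ g                             # (N, C): weighted sum, costs N*N*C
    return y, attn


rng = np.random.default_rng(0)
N, C = 64, 8                                 # e.g. an 8x8 feature map, 8 channels
x = rng.standard_normal((N, C))
w_theta, w_phi, w_g = (rng.standard_normal((C, C)) * 0.1 for _ in range(3))
y, attn = non_local(x, w_theta, w_phi, w_g)
```

The (N, N) matrix `attn` is the expensive part: for a 128x128 feature map it already has 16384 x 16384 entries.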

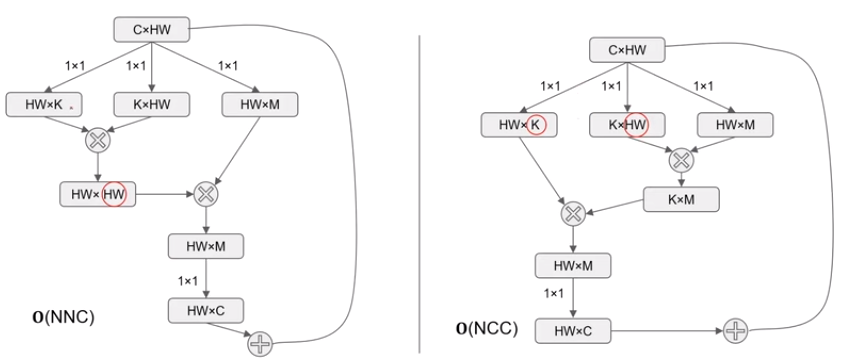

A^2-Nets

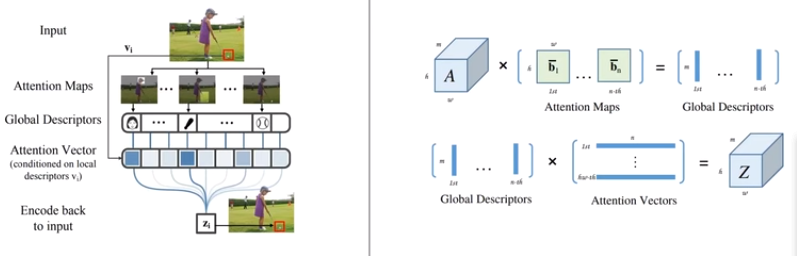

A^2-Nets: Double Attention Networks

Comparison with the non-local network (the non-local network is on the right, A^2-Nets on the left):

The computational complexity is reduced to N*C*C.
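The reduction to N*C*C can be read as a change in multiplication order: first gather a small set of global descriptors, then distribute them back to every position, so the N x N matrix is never formed. The NumPy sketch below illustrates this idea in the spirit of A^2-Nets. The names `w_a`, `w_b`, `w_v`, the variable names and the toy sizes are assumptions for illustration, and the number of descriptors is set equal to C to match the N*C*C figure above.

```python
import numpy as np


def softmax(a, axis):
    """Numerically stable softmax along the given axis."""
    a = a - a.max(axis=axis, keepdims=True)
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)


rng = np.random.default_rng(0)
N, C = 64, 8                               # pixels, channels (toy sizes)
x = rng.standard_normal((N, C))
w_a, w_b, w_v = (rng.standard_normal((C, C)) * 0.1 for _ in range(3))

a = x @ w_a                                # (N, C) features to be gathered
b = softmax(x @ w_b, axis=0)               # (N, C) gathering maps, each sums to 1 over pixels
v = softmax(x @ w_v, axis=1)               # (N, C) distribution weights, each pixel sums to 1

# Gather then distribute: two products of cost N*C*C, no N x N matrix.
descriptors = b.T @ a                      # (C, C) global descriptors
z_cheap = v @ descriptors                  # (N, C)

# Same result with the implicit N x N attention formed explicitly (cost N*N*C).
z_full = (v @ b.T) @ a                     # (N, C)
```

Both orders give the same output by associativity of matrix multiplication; only the cost differs.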

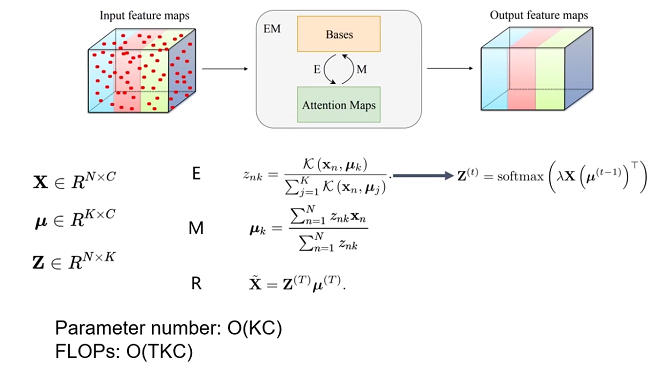

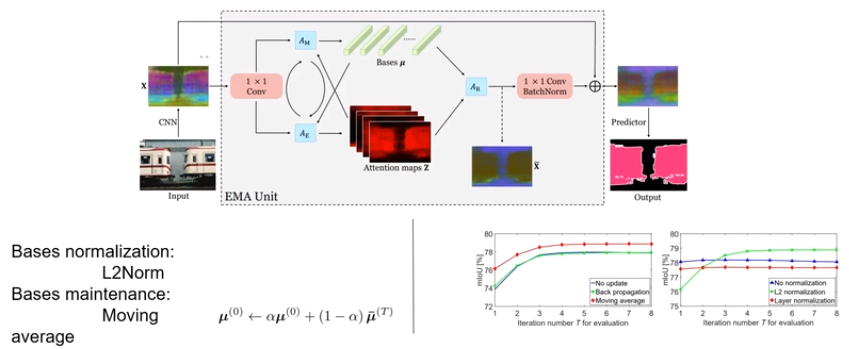

EM Attention Networks

Expectation Maximization Attention Networks for Semantic Segmentation

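N is the number of pixels, C is the number of channels of the input feature map, and Z is the mapping matrix (the assignment of pixels to bases). Structural implementation: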
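As a rough illustration of the computation (not the network structure from the talk's figure), the sketch below runs a few EM iterations in NumPy and then reconstructs the features from a small set of bases. The bases `mu`, their count `K`, the iteration count and the function name `em_attention` are assumptions for illustration. This simplified version also skips details of the paper such as normalizing the bases and the surrounding convolutions.

```python
import numpy as np


def softmax(a, axis):
    """Numerically stable softmax along the given axis."""
    a = a - a.max(axis=axis, keepdims=True)
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)


def em_attention(x, mu, n_iters=3):
    """x: (N, C) features; mu: (K, C) initial bases; n_iters must be >= 1.

    Returns the reconstruction (N, C), the mapping Z (N, K) and the bases (K, C).
    """
    for _ in range(n_iters):
        # E-step: responsibility of each basis for each pixel.
        z = softmax(x @ mu.T, axis=1)                       # (N, K)
        # M-step: each basis becomes a weighted mean of the pixels.
        weights = z / (z.sum(axis=0, keepdims=True) + 1e-6)  # columns sum to ~1
        mu = weights.T @ x                                  # (K, C)
    x_rec = z @ mu                                          # rank <= K reconstruction
    return x_rec, z, mu


rng = np.random.default_rng(0)
N, C, K = 64, 8, 4
x = rng.standard_normal((N, C))
mu0 = rng.standard_normal((K, C))
x_rec, z, mu = em_attention(x, mu0)
```

Each iteration costs on the order of N*K*C, and the output `x_rec` has rank at most K, which is the low-rank reconstruction referred to in the title.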

Tricks for semantic segmentation

Tricks that must work:

- Do not use PyTorch's official ResNet.

- Avoid weight decay on BN and Conv's bias.

- Use OHEM for test.

- Interpolating with align_corners=True.

- Set crop size as 8x+1.

- Inference with sliding window on Cityscapes.

- Inference with the whole image on PASCAL VOC.
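The `align_corners=True` and "crop size 8x+1" items are related, and a small pure-Python sketch can show why. It assumes an output stride of 8 produced by three 3x3, stride-2, padding-1 convolutions (an assumption, since the notes do not specify the backbone), and it ignores the border clamping that real interpolation routines apply. The helper names `conv_out`, `feature_size` and `source_coords` are hypothetical.

```python
def conv_out(size, kernel=3, stride=2, pad=1):
    """Output length of a 1-D convolution with the usual floor formula."""
    return (size + 2 * pad - kernel) // stride + 1


def feature_size(crop, n_stride2=3):
    """Feature-map length after n_stride2 stride-2 convolutions (stride 8 by default)."""
    for _ in range(n_stride2):
        crop = conv_out(crop)
    return crop


def source_coords(in_size, out_size, align_corners):
    """Source coordinate sampled by each output pixel when resizing in 1-D."""
    if align_corners:
        scale = (in_size - 1) / (out_size - 1)
        return [i * scale for i in range(out_size)]
    scale = in_size / out_size
    return [(i + 0.5) * scale - 0.5 for i in range(out_size)]


crop = 8 * 8 + 1                      # 65, of the form 8x+1
feat = feature_size(crop)             # 9 = x + 1
coords = source_coords(feat, crop, align_corners=True)
# Every 8th output pixel lands exactly on a feature-map pixel.
on_grid = [coords[8 * k] for k in range(feat)]

feat_64 = feature_size(64)            # 8: upsampling 8 -> 64 has scale 7/63, off the stride-8 grid
```

With a crop of 8x+1 the upsampling scale is exactly 1/8, so feature-map pixels and image pixels stay aligned. With a crop of 8x they do not.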

Tricks that may work:

- Use a 10 times larger lr at the segmentation head.

- Train with a warmup strategy.
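The last two items can be combined in a single learning-rate schedule. The sketch below is one possible reading, not the speaker's exact recipe: linear warmup followed by "poly" decay (the poly decay, the step counts and the base learning rate are assumptions), with the head using 10 times the backbone rate. The function names `lr_at` and `group_lrs` are hypothetical.

```python
def lr_at(step, base_lr, warmup_steps, total_steps, power=0.9):
    """Linear warmup to base_lr, then polynomial ('poly') decay to zero."""
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * (1 - progress) ** power


def group_lrs(step, base_lr=0.01, head_mult=10.0,
              warmup_steps=100, total_steps=1000):
    """Learning rates for the two parameter groups at a given step."""
    lr = lr_at(step, base_lr, warmup_steps, total_steps)
    return {"backbone": lr, "head": lr * head_mult}


# Inspect the schedule at a few steps.
schedule = {s: group_lrs(s) for s in (0, 50, 100, 500, 1000)}
```

In a framework such as PyTorch, the two values would typically be written into separate optimizer parameter groups at every step.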