Implementing the Perceptron Model in C++


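I am currently taking a machine learning course and have just learned about the perceptron model, so I decided to implement it myself.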

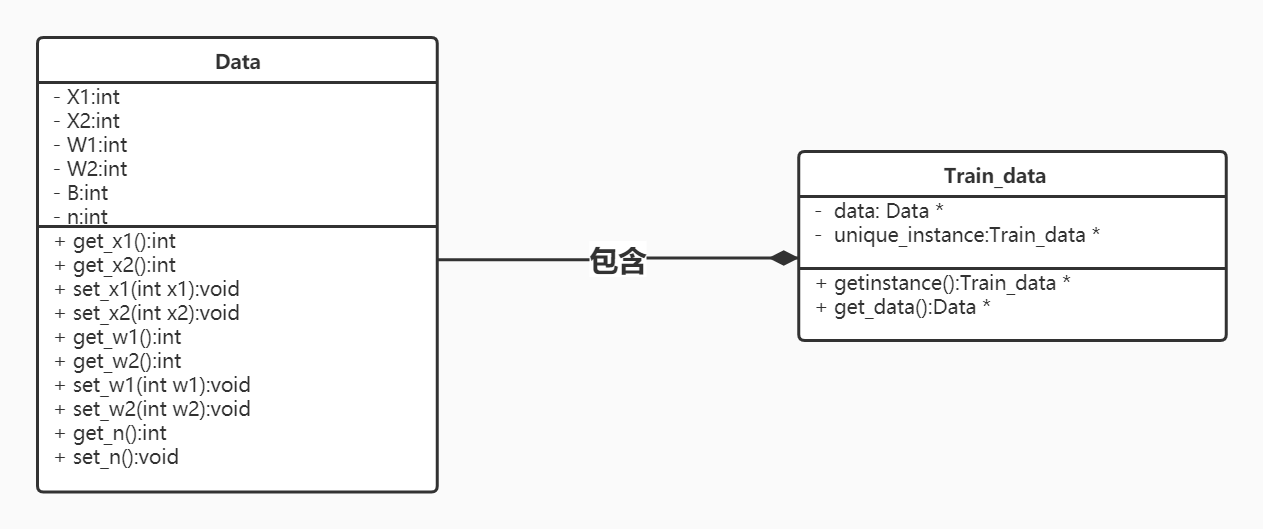

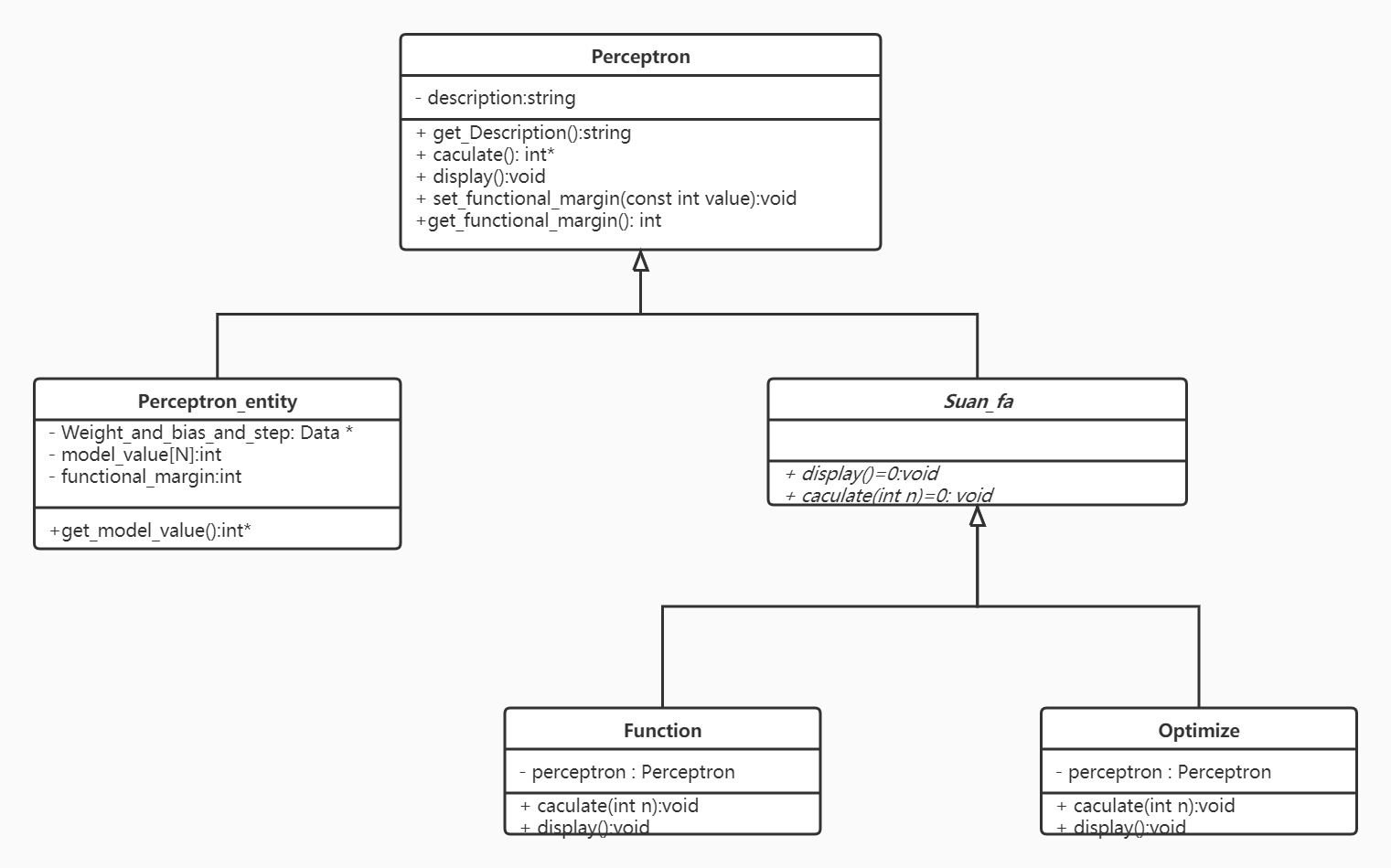

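Design idea: I wanted a general structure for the model that later machine learning models could reuse, so I tried applying the design patterns I had recently been reading about. Class diagram 1 applies the Singleton pattern to the Train_data class, and class diagram 2 applies the Decorator pattern.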
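The Decorator arrangement can be shown with a minimal, self-contained sketch. The names Model, LinearModel, Step and decorated_description are hypothetical and are not the author's classes. The sketch only shows the shape of the pattern. A decorator derives from the same interface as the object it wraps, holds a pointer to that object, and forwards calls while adding its own behavior. Function and Optimize have the same shape: each derives (through Suan_fa) from Perceptron and holds a Perceptron*.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Component interface: plays the role of Perceptron in the article.
class Model {
public:
    virtual ~Model() = default;
    virtual std::string describe() const = 0;
};

// Concrete component: plays the role of Perceptron_entity.
class LinearModel : public Model {
public:
    std::string describe() const override { return "linear model"; }
};

// Decorator: is-a Model and has-a Model; it forwards the call to the
// wrapped object and appends its own step.
class Step : public Model {
public:
    Step(std::shared_ptr<const Model> inner, std::string name)
        : inner_(std::move(inner)), name_(std::move(name)) {
        assert(inner_ != nullptr);  // a decorator must wrap something
    }
    std::string describe() const override {
        return inner_->describe() + " -> " + name_;
    }
private:
    std::shared_ptr<const Model> inner_;
    std::string name_;
};

// Stack two decorators on one component (hypothetical helper).
std::string decorated_description() {
    std::shared_ptr<const Model> m = std::make_shared<LinearModel>();
    m = std::make_shared<Step>(m, "functional margin");  // like Function
    m = std::make_shared<Step>(m, "optimize");           // like Optimize
    return m->describe();
}
```

Calling decorated_description() returns "linear model -> functional margin -> optimize". Each layer adds behavior without the concrete component knowing about it.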

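Structure: The Train_data class encapsulates Data* data, a pointer to the stored training data. The Perceptron_entity class encapsulates three members:

- Data* Weight_and_bias_and_step points to the stored weights W, bias B, and step size n.
- int model_value[N] stores the perceptron's model values (W·X + b).
- int functional_margin[N] stores the functional margins (Yi·(W·X + b)).

Perceptron objects are created by instantiating Perceptron_entity. The Function class computes the functional margin. The Optimize class updates the weights W and bias B; the step size n stays fixed.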
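The two arrays described above hold simple per-sample quantities. The sketch below shows how they relate. It assumes two features and integer labels ±1, as in the article's data. The struct Sample and the free functions model_value/functional_margin are hypothetical helpers, not part of the author's classes.

```cpp
#include <array>
#include <cassert>

// One training sample: features (x1, x2) and label y in {+1, -1}.
struct Sample {
    int x1;
    int x2;
    int y;
};

// Model value W1*x1 + W2*x2 + B (what model_value[i] stores).
int model_value(int W1, int W2, int B, const Sample& s) {
    return W1 * s.x1 + W2 * s.x2 + B;
}

// Functional margin y * (W1*x1 + W2*x2 + B) (what functional_margin[i] stores).
// A value <= 0 means the sample is misclassified or lies on the boundary.
int functional_margin(int W1, int W2, int B, const Sample& s) {
    assert(s.y == 1 || s.y == -1);
    return s.y * model_value(W1, W2, B, s);
}

// The same three samples that Train_data hard-codes.
const std::array<Sample, 3> kSamples{{{3, 3, 1}, {4, 3, 1}, {1, 1, -1}}};
```

With W1 = W2 = 1 and B = -3, the three margins are 3, 4 and 1, all positive, so that line separates the data. With all-zero weights every margin is 0, which the article's Optimize treats as a misclassification.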

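Results analysis:

1. In practice, a design pattern has to suit the situation. The Train_data class does not actually need the Singleton pattern, because I still need to modify the Data objects. I wrote public set functions for that, which runs against the intent of the pattern. As I understand it, a singleton here should initialize one shared resource that other functions can only read.
2. The class design is also not clear enough. The data, weights, bias, and learning rate are all packed into the Data class, which makes the later function calls hard to follow.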
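If the training set really should be one shared read-only resource, a common C++ idiom is a function-local static (a "Meyers singleton") that hands out only const access. This is a hypothetical sketch: TrainingSet, Sample, instance() and at() are not the author's names. It assumes the data never changes after construction. Since C++11, initialization of a function-local static is thread-safe.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Sample {
    int x1;
    int x2;
    int y;  // +1 or -1
};

class TrainingSet {
public:
    // The single instance, created on first use; callers only get const access.
    static const TrainingSet& instance() {
        static const TrainingSet set;  // initialized once, thread-safe since C++11
        return set;
    }
    std::size_t size() const { return samples_.size(); }
    const Sample& at(std::size_t i) const {
        assert(i < samples_.size());
        return samples_[i];
    }

    // No copies: there is exactly one training set.
    TrainingSet(const TrainingSet&) = delete;
    TrainingSet& operator=(const TrainingSet&) = delete;

private:
    TrainingSet() : samples_{{3, 3, 1}, {4, 3, 1}, {1, 1, -1}} {}
    std::vector<Sample> samples_;
};
```

There are no setters, so any attempt to modify a sample through instance() fails to compile. That is the mismatch the author ran into: their Train_data needs set functions.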

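Ideas for improvement:

1. Refine the classes by splitting Data into two classes, Data and Weight_bias. Data encapsulates the samples, and Weight_bias encapsulates W1, W2, B, and n.
2. Improve code reuse. Let users modify Weight_and_bias_and_step inside Perceptron_entity, so that several Perceptron_entity objects can be defined over the same data. One option is to provide friend functions, deliberately breaking encapsulation to achieve this.
3. Going further, let users modify and add to the raw data held by a Train_data object. Different data sets could then be combined with different Perceptron_entity objects.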
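Below is a sketch of improvement ideas 1 and 2, assuming the split described there. Data holds only a sample, and Weight_bias holds only W1, W2, B and n. A perceptron owns its own Weight_bias and only refers to a shared set of samples. Instead of friend functions, this version exposes the parameters through a public accessor. The class Perceptron_model and its members margin, step and params are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Idea 1: Data holds only one sample...
struct Data {
    int x1;
    int x2;
    int sample_signal;  // +1 or -1
};

// ...and Weight_bias holds only the model parameters.
struct Weight_bias {
    int W1 = 0;
    int W2 = 0;
    int B = 0;
    int n = 1;  // step size
};

// Hypothetical perceptron: shares the samples by reference and owns its
// parameters, so several models can be trained on the same data.
// The samples vector must outlive every model that refers to it.
class Perceptron_model {
public:
    Perceptron_model(const std::vector<Data>& samples, Weight_bias init)
        : samples_(samples), wb_(init) {}

    // Functional margin of sample i under the current parameters.
    int margin(std::size_t i) const {
        assert(i < samples_.size());
        const Data& d = samples_[i];
        return d.sample_signal * (wb_.W1 * d.x1 + wb_.W2 * d.x2 + wb_.B);
    }

    // One perceptron step on sample i; returns true if the parameters changed.
    bool step(std::size_t i) {
        if (margin(i) > 0) return false;
        const Data& d = samples_[i];
        wb_.W1 += wb_.n * d.sample_signal * d.x1;
        wb_.W2 += wb_.n * d.sample_signal * d.x2;
        wb_.B += wb_.n * d.sample_signal;
        return true;
    }

    // Idea 2: parameters the user may inspect and adjust.
    Weight_bias& params() { return wb_; }
    const Weight_bias& params() const { return wb_; }

private:
    const std::vector<Data>& samples_;
    Weight_bias wb_;
};
```

For example, two models built over the same samples with step sizes 1 and 2 both update on sample 0 from zero weights. They end up with W1 = 3, B = 1 and W1 = 6, B = 2 respectively, without copying the data.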

1. Class Diagrams

2. C++ Code

Data.h

```cpp
#pragma once

// One record: either a training sample (X1, X2, label, symbol) or the model
// parameters (W1, W2, B, step size n); both are stored in this class.
class Data
{
private:
    int X1 = 0;
    int X2 = 0;
    int sample_signal = 0; // positive sample (+1) or negative sample (-1)
    bool symbol = false;   // whether the sample is currently classified correctly
    int W1 = 0;
    int W2 = 0;
    int B = 0;
    int n = 0;             // step size
public:
    Data();
    ~Data();
    void set_x1(const int x1);
    void set_x2(const int x2);
    void set_sample_signal(const int sample_signal);
    void set_W1(const int W1);
    void set_W2(const int W2);
    void set_B(const int B);
    void set_n(const int n);
    void set_symbol(bool symbol);
    int get_x1();
    int get_x2();
    int get_sample_signal();
    int get_W1();
    int get_W2();
    int get_B();
    int get_n();
    bool get_symbol();
};

inline Data::Data() {}
inline Data::~Data() {}

inline void Data::set_x1(const int x1) { this->X1 = x1; }
inline void Data::set_x2(const int x2) { this->X2 = x2; }
inline void Data::set_sample_signal(const int sample_signal) { this->sample_signal = sample_signal; }
inline void Data::set_W1(const int W1) { this->W1 = W1; }
inline void Data::set_W2(const int W2) { this->W2 = W2; }
inline void Data::set_B(const int B) { this->B = B; }
inline void Data::set_n(const int n) { this->n = n; }
inline void Data::set_symbol(bool symbol) { this->symbol = symbol; }

inline int Data::get_x1() { return X1; }
inline int Data::get_x2() { return X2; }
inline int Data::get_sample_signal() { return sample_signal; }
inline int Data::get_W1() { return W1; }
inline int Data::get_W2() { return W2; }
inline int Data::get_B() { return B; }
inline int Data::get_n() { return n; }
inline bool Data::get_symbol() { return symbol; }
```

Train_data.h

```cpp
#pragma once
#include "Data.h"

#define N 3 // number of training samples

// Singleton that owns the training set
class Train_data
{
private:
    Data *data;
    static Train_data *unique_instance;
    Train_data();
public:
    int judge();
    ~Train_data();
    static Train_data *getinstance();
    Data *get_data();
};

Train_data *Train_data::unique_instance = nullptr;

inline Train_data::Train_data()
{
    this->data = new Data[N];
    // positive samples (3,3) and (4,3), negative sample (1,1)
    data[0].set_x1(3);
    data[0].set_x2(3);
    data[0].set_sample_signal(1);
    data[1].set_x1(4);
    data[1].set_x2(3);
    data[1].set_sample_signal(1);
    data[2].set_x1(1);
    data[2].set_x2(1);
    data[2].set_sample_signal(-1);
}

inline Train_data::~Train_data()
{
    // free the array
    delete[] data;
}

inline Train_data *Train_data::getinstance()
{
    if (unique_instance == nullptr)
    {
        unique_instance = new Train_data();
    }
    return unique_instance;
}

inline Data *Train_data::get_data()
{
    return this->data;
}

// number of samples whose most recent check classified them correctly
inline int Train_data::judge()
{
    int number = 0;
    for (int i = 0; i < N; i++)
        if (data[i].get_symbol() == true)
            number++;
    return number;
}
```

Perceptron.h

```cpp
#pragma once
#include <iostream>
#include <string>
#include "Data.h"
// #include "F://python/include/Python.h" // only needed by the commented-out Python plotting code
using namespace std;

class Perceptron
{
private:
    string description;
public:
    Perceptron();
    virtual ~Perceptron(); // virtual: derived objects are deleted through Perceptron*
    //ostream& operator <<(ostream& ostr, const Perceptron& x);
    string get_Description() { return description; }
    virtual int *caculate() { return nullptr; }
    virtual void caculate(int n) {}
    virtual void display() {}
    virtual void set_functional_margin(const int value, const int n) {}
    virtual int *get_functional_margin() { return nullptr; }
    virtual Data *get_Weight_and_bias_and_step() { return nullptr; }
};

inline Perceptron::Perceptron()
{
    this->description = "This is a perceptron class";
}

inline Perceptron::~Perceptron() {}
```

Perceptron_entity.h

```cpp
#pragma once
#include "Perceptron.h"
#include "Train_data.h"
#include "Data.h"
#include <cstdlib>

class Perceptron_entity : public Perceptron
{
private:
    Data *Weight_and_bias_and_step;
    int model_value[N] = {};       // W1*x1 + W2*x2 + B for each sample
    int functional_margin[N] = {}; // y * (W1*x1 + W2*x2 + B) for each sample
public:
    Perceptron_entity();
    ~Perceptron_entity();
    string get_Description();
    int *caculate();
    void display();
    void set_functional_margin(const int value, const int n);
    int *get_functional_margin();
    int *get_model_value();
    Data *get_Weight_and_bias_and_step();
};

inline Perceptron_entity::Perceptron_entity()
{
    cout << "init" << endl;
    // initial parameters: W = (0, 0), B = 0, step size n = 1
    Weight_and_bias_and_step = new Data();
    Weight_and_bias_and_step->set_W1(0);
    Weight_and_bias_and_step->set_W2(0);
    Weight_and_bias_and_step->set_B(0);
    Weight_and_bias_and_step->set_n(1);
    cout << Weight_and_bias_and_step->get_n() << endl;
}

inline Perceptron_entity::~Perceptron_entity()
{
    delete Weight_and_bias_and_step;
}

inline string Perceptron_entity::get_Description()
{
    return string();
}

// compute the model value W1*x1 + W2*x2 + B for every sample
inline int *Perceptron_entity::caculate()
{
    cout << " model_value " << endl;
    Train_data *sample = Train_data::getinstance();
    for (int i = 0; i < N; i++)
        model_value[i] = (sample->get_data())[i].get_x1() * Weight_and_bias_and_step->get_W1()
                       + (sample->get_data())[i].get_x2() * Weight_and_bias_and_step->get_W2()
                       + Weight_and_bias_and_step->get_B();
    //if (temp >= 0)
    //    model_value = 1;
    //else
    //    model_value = -1;
    return model_value;
}

inline void Perceptron_entity::set_functional_margin(const int value, const int n)
{
    this->functional_margin[n] = value;
}

inline int *Perceptron_entity::get_functional_margin()
{
    return functional_margin;
}

inline int *Perceptron_entity::get_model_value()
{
    return model_value;
}

inline Data *Perceptron_entity::get_Weight_and_bias_and_step()
{
    return Weight_and_bias_and_step;
}

inline void Perceptron_entity::display()
{
    //int j = 0;
    // Train_data* sample = Train_data::getinstance();
    cout << " print Weight_and_bias_and_step " << endl;
    cout << "W1: " << Weight_and_bias_and_step->get_W1() << endl;
    cout << "W2: " << Weight_and_bias_and_step->get_W2() << endl;
    cout << "B: " << Weight_and_bias_and_step->get_B() << endl;
    cout << "n: " << Weight_and_bias_and_step->get_n() << endl;

    ////*** calling Python (for plotting) ***//
    //// initialize the Python interpreter
    //Py_Initialize();
    //// check whether initialization succeeded
    //if (!Py_IsInitialized())
    //{
    //    cout << "szzvz" << endl;
    //    Py_Finalize();
    //}
    //PyRun_SimpleString("import sys");
    //// add the module search path
    ////PyRun_SimpleString(chdir_cmd.c_str());
    //PyRun_SimpleString("sys.path.append('./')");
    ////PyRun_SimpleString("sys.argv = ['python.py']");
    //PyObject* pModule = NULL;
    //// import the module
    //pModule = PyImport_ImportModule("draw");
    //if (!pModule)
    //{
    //    cout << "Python get module failed." << endl;
    //}
    //cout << "Python get module succeed." << endl;
    //PyObject* pFunc = NULL;
    //pFunc = PyObject_GetAttrString(pModule, "_draw");
    //PyObject* ret = PyEval_CallObject(pFunc, NULL);
    //// get the function (_draw_) from the module
    //PyObject* pv = PyObject_GetAttrString(pModule, "_draw_");
    //if (!pv || !PyCallable_Check(pv))
    //{
    //    cout << "Can't find function (_draw_)" << endl;
    //}
    //// cout << "Get function (_draw_) succeed." << endl;
    //// initialize the arguments to pass in; args is set up to pass two arguments
    //PyObject* args = PyTuple_New(2*N+3);
    //PyObject* ArgList = PyTuple_New(1);
    //// convert the long values into objects Python can accept
    //for (int i = 0; i < N; i++, j++)
    //{
    //    PyObject* arg1 = PyLong_FromLong((sample->get_data())[i].get_x1());
    //    PyObject* arg2 = PyLong_FromLong((sample->get_data())[i].get_x2());
    //    PyTuple_SetItem(args, j, arg1);
    //    PyTuple_SetItem(args, ++j, arg2);
    //}
    //PyObject* arg3 = PyLong_FromLong(Weight_and_bias_and_step->get_W1());
    //PyTuple_SetItem(args, j++, arg3);
    //PyObject* arg4 = PyLong_FromLong(Weight_and_bias_and_step->get_W2());
    //PyTuple_SetItem(args, j++, arg4);
    //PyObject* arg5 = PyLong_FromLong(Weight_and_bias_and_step->get_B());
    //PyTuple_SetItem(args, j++, arg5);
    //PyTuple_SetItem(ArgList, 0, args);
    //// call the function with the arguments and get the return value
    //PyObject* pRet = PyObject_CallObject(pv, ArgList);
    //if (pRet)
    //{
    //    // convert the return value to long
    //    long result = PyLong_AsLong(pRet);
    //    cout << "result:" << result << endl;
    //}
    //Py_CLEAR(pModule);
    //Py_CLEAR(pFunc);
    //Py_CLEAR(ret);
    //Py_CLEAR(pv);
    //Py_CLEAR(args);
    //Py_CLEAR(ArgList);
    //Py_CLEAR(pRet);
    //Py_Finalize();
    //system("pause");
}
```

Suan_fa.h (Suan_fa is pinyin for 算法, "algorithm"; it is the common base class of Function and Optimize)

```cpp
#pragma once
#include "Perceptron.h"
#include "Data.h"

class Suan_fa : public Perceptron
{
private:
public:
    Suan_fa();
    ~Suan_fa();
};

inline Suan_fa::Suan_fa() {}

inline Suan_fa::~Suan_fa() {}
```

Function.h

```cpp
#pragma once
#include "Suan_fa.h"
#include "Data.h"
#include "Perceptron_entity.h"

// Decorator: computes the functional margin of sample n for the wrapped perceptron
class Function : public Suan_fa
{
private:
    Perceptron *perceptron;
public:
    Function(Perceptron *entity);
    ~Function();
    void caculate(int n);
    void display();
};

inline Function::Function(Perceptron *entity)
{
    this->perceptron = entity;
}

inline Function::~Function() {}

inline void Function::caculate(int n)
{
    cout << " print origin samples " << endl;
    // compute functional_margin
    Train_data *sample = Train_data::getinstance();
    cout << " print first sample " << endl;
    cout << "x1: " << (sample->get_data())[0].get_x1() << endl;
    cout << "y1: " << (sample->get_data())[0].get_x2() << endl;
    cout << " print second sample " << endl;
    cout << "x2: " << (sample->get_data())[1].get_x1() << endl;
    cout << "y2: " << (sample->get_data())[1].get_x2() << endl;
    cout << " print third sample " << endl;
    cout << "x3: " << (sample->get_data())[2].get_x1() << endl;
    cout << "y3: " << (sample->get_data())[2].get_x2() << endl;
    int *array = perceptron->caculate(); // model values for all samples
    cout << array[0] << endl;
    cout << array[1] << endl;
    cout << array[2] << endl;
    // functional margin of sample n: y_n * (W1*x1 + W2*x2 + B)
    perceptron->set_functional_margin(array[n] * (sample->get_data())[n].get_sample_signal(), n);
}

inline void Function::display()
{
    perceptron->display();
    //cout << "this is function" << endl;
}
```

Optimize.h

```cpp
#pragma once
#include "Perceptron.h"
#include "Suan_fa.h"
#include "Train_data.h"

// Decorator: applies the perceptron update for sample n if it is misclassified
class Optimize : public Suan_fa
{
private:
    Perceptron *perceptron;
public:
    Optimize(Perceptron *entity);
    ~Optimize();
    void caculate(int n);
    void display();
};

inline Optimize::Optimize(Perceptron *entity)
{
    this->perceptron = entity;
}

inline Optimize::~Optimize() {}

inline void Optimize::caculate(int n)
{
    cout << " print functional_margin " << endl;
    Train_data *sample = Train_data::getinstance();
    cout << perceptron->get_functional_margin()[0] << endl;
    cout << perceptron->get_functional_margin()[1] << endl;
    cout << perceptron->get_functional_margin()[2] << endl;
    Data *wb = perceptron->get_Weight_and_bias_and_step(); // W1, W2, B and step size n
    Data &x = (sample->get_data())[n];                      // the sample being checked
    if (perceptron->get_functional_margin()[n] <= 0)
    {
        cout << " this time functional_margin <= 0 " << endl << endl;
        x.set_symbol(false);
        // W <- W + n*y*x,  B <- B + n*y
        wb->set_W1(wb->get_W1() + wb->get_n() * x.get_sample_signal() * x.get_x1());
        wb->set_W2(wb->get_W2() + wb->get_n() * x.get_sample_signal() * x.get_x2());
        wb->set_B(wb->get_B() + wb->get_n() * x.get_sample_signal());
    }
    else
        x.set_symbol(true);
}

inline void Optimize::display()
{
    perceptron->display();
    //cout << "this is optimize" << endl;
}
```
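For reference, the update Optimize::caculate applies is the standard perceptron step. It fires when sample i has a non-positive functional margin. Here w = (W1, W2), x_i = (X1, X2) of sample i, y_i is sample_signal, and η is the step size n. Let M be the set of misclassified samples. The perceptron loss and its gradients are:

$$
L(w, b) = -\sum_{i \in M} y_i\,(w \cdot x_i + b), \qquad
\nabla_w L = -\sum_{i \in M} y_i\, x_i, \qquad
\nabla_b L = -\sum_{i \in M} y_i
$$

Taking a gradient step on a single misclassified sample gives exactly the three set_W1/set_W2/set_B lines:

$$
\text{if } y_i\,(w \cdot x_i + b) \le 0:\qquad
w \leftarrow w + \eta\, y_i\, x_i, \qquad
b \leftarrow b + \eta\, y_i
$$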

main.cpp

```cpp
#include "Data.h"
#include "Function.h"
#include "Optimize.h"
#include "Perceptron.h"
#include "Perceptron_entity.h"
#include "Suan_fa.h"
#include "Train_data.h"
#include <iostream>
// #include "F://python/include/Python.h" // only needed by the commented-out Python plotting code
using namespace std;

int main()
{
    int loop_number = 1;
    int number = 0; // index of the sample checked in this iteration
    Train_data *samples = Train_data::getinstance();
    Perceptron *Entity = new Perceptron_entity();
    // stop once every sample's most recent check classified it correctly
    while (samples->judge() != N)
    {
        cout << "This is " << loop_number << " loop " << endl;
        //Entity->display();
        Function entity(Entity); // compute the functional margin of sample `number`
        entity.caculate(number);
        entity.display();
        Optimize optimize(Entity); // update W and B if that margin is <= 0
        optimize.caculate(number);
        cout << " print the Weight_and_bias_and_step after optimize " << endl;
        optimize.display();
        loop_number++;
        number = (number + 1) % N; // visit the samples in turn: 0, 1, 2, 0, ...
    }
    delete Entity;
    return 0;
}
```
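For comparison, the whole training loop in main.cpp fits in one function. The loop cycles through the samples in order and updates on any non-positive margin. It stops once every sample's most recent check has passed. This compact version is a hypothetical re-implementation, not the author's code; Sample, Result and train are illustrative names. It keeps the same data, zero initial weights, and round-robin order. With a round-robin order, "all flags true" is equivalent to "the last N checks all passed", so a streak counter replaces judge(). The max_iterations guard is an addition: on data that is not linearly separable the perceptron never converges, and the author's while loop would never end.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

struct Sample { int x1, x2, y; };  // y is +1 or -1

struct Result {
    int W1, W2, B;
    int iterations;  // number of single-sample checks performed
};

// Cyclic (round-robin) perceptron training in primal form with step size n.
// Stops once N consecutive checks pass, i.e. every sample's latest check
// gave a positive functional margin (what judge() == N tests).
template <std::size_t N>
Result train(const std::array<Sample, N>& s, int n, int max_iterations) {
    static_assert(N > 0, "need at least one sample");
    assert(n > 0);
    int W1 = 0, W2 = 0, B = 0;
    std::size_t streak = 0;  // consecutive checks without an update
    int it = 0;
    for (std::size_t i = 0; streak < N && it < max_iterations; i = (i + 1) % N, ++it) {
        const Sample& d = s[i];
        if (d.y * (W1 * d.x1 + W2 * d.x2 + B) <= 0) {  // misclassified
            W1 += n * d.y * d.x1;
            W2 += n * d.y * d.x2;
            B += n * d.y;
            streak = 0;
        } else {
            ++streak;
        }
    }
    return {W1, W2, B, it};
}
```

On the article's three samples, train(data, 1, 1000) returns W1 = 1, W2 = 1, B = -3 after 18 checks. That is the separating line x1 + x2 - 3 = 0. By my trace, the author's program visits the samples in the same order, so it should also finish with these weights after 18 loops.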
